The Singularity is Not Near and Neither is the End

Why the AI Driven Future will be more Mundane, Boring and Practical than the Doomers or Dreamers Imagine and That's Just Fine

Ask yourself this question:

Is there anything on Earth that benefits from being dumber?

Is anyone saying, I wish my supply chain was dumber and more inefficient? How about, I wish life saving medicines were harder to discover? Anyone out there wishing politicians were bigger idiots and passed worse laws? Is anyone out there wishing kids at school had crappier educations so we've got more morons running around? Is anyone thinking I really wish all my applications of the future were as bad as Microsoft Word, where after 20 years it still can't position a graphic, format a list properly or import anything without mangling the text?

Maybe Microsoft's AI push will finally give us an MS Word that doesn't completely suck at basically everything. Here's hoping.

I can think of a few things that are better if they’re simpler. A hammer. Some basic tools. Then again, a power hammer is smarter than hammering away like an old blacksmith so maybe not even a hammer!

Basically, there’s almost no industry on Earth or aspect of life that won't benefit from more intelligence.

AI is the key that will help us level up that intelligence across the board, making everyday tasks smarter and better and faster. That is, as long as we don't fall prey to the doom thinking and fear mongering and general foolishness that's saturating the AI discussion and sucking all the air out of the room.

It wasn't long ago that I only talked about AI with a few dedicated geeks like myself.

Now everyone's talking about it.

It's erupted from the labs into the public eye and even my barber and my taxi driver have an opinion. It's all over the news. Governments are scrambling to pass hastily written and poorly thought out legislation. Internet forums are filled with dire predictions of the end times or Utopia. Time is publishing dystopian covers of AI doom.

Okay, admittedly, that Time cover is some freaking awesome design work, even if it gives voice to tin foil hat theories of Superintelligent monster machines.

It's a perfect example of the weird kind of hysteria has taken hold.

Even many experts seem to have lost their minds and their predictions have gone from sane, measured and sound to wildly optimistic or insanely pessimistic. We've got formerly serious people running around like Chicken Little telling us the sky is falling. We've got people shouting that we'll have AGI in a few years and we'll all be out of a job and living on the UBI dole in the stacks, Ready Player One style, or that we'll have God like superintelligence magic itself from the ass of GPT-5 and it will solve all our problems or slaughter us like cattle.

(Source: Chicken Little movie)

Of course, if you take a step back and take a deep breath, almost none of these predictions make any sense whatsoever. We've got some truly amazing stuff but we have a ways to go.

If we're so close to superintelligence, why does AutoGPT go off the rails and get stuck so often? Why don't we have level 5 autonomous cars? Why can't robots do my dishes? Why does my summary writing chat bot include the footer when summarizing Arxiv articles no matter how many times I try to stop it?

It's not really all that surprising that people are either wildly optimistic or ridiculously pessimistic. This stuff is hard to understand. AI is complex. The math and science behind it are inscrutable and mysterious to most and that creates a vacuum of understanding. People love to fill a vacuum with speculation or straight up delusion. AI's aren’t the only ones that hallucinate.

So now we have people making predictions on the future with blind assertions, painfully broken logical leaps, gut feeling, and fear. But where do these ideas even come from?

Mostly from badly written news articles, sci-fi from the 1980s, the Future of Life institute clown show, and some bureaucrat's report from the early 2010s that says we'll all be living on UBI if we don't stop the jobs apocalypse now.

The truth is that like almost everything in life, AI will be somewhere in between.

It will do some good things, some bad and some neutral things too. It won't save us all or kill us all. It won't automate all the jobs and it won't magically do everything we want it to do instantaneously.

But it will do some amazing things and it will change the way the world works.

The more I look at it and think about it, the more I keep coming back to the same conclusions over and over again:

There is no industry on Earth that won't benefit from more intelligence

Automation doesn't happen overnight

Companies that favor AI that helps people will dominate the ones that try to replace people

The Singularity is not near and there will be no jobs apocalypse

But AI will replace people where there's tremendous labor inefficiency

And it may hit some starter jobs and mid-level jobs harder than others

These systems will be a lot harder than we realize to put into production and to make them consistent and trustworthy enough to really matter

We are about to get a massive amount of micro-intelligence apps

Let's take off our tin foil hat, dive in and take a look at how AI is really likely to shape up in the near future and beyond.

To the Revolution Now

GPT was a black swan. It seemed to come out of nowhere.

For years, chat bots were mostly the laughing stock of the AI community. Oh no, not another stupid chat bot that not only won't pass the Turing test, it won't fool a two year old. And then suddenly we have this thing we can talk to and it seems to understand more often than not. It's smart. Maybe not smart in the way we are but definitely smart.

It's not just GPT though. Technological revolutions seem to come out of nowhere.

They take people by surprise.

But in reality they don't come out of nowhere. Tech revolutions are built on the backs of a thousand tiny inventions that suddenly come together in the perfect way. For years, nothing seems to work. Little pieces of the puzzle have little to no real world use case. That's cool, people think, but what am I going to do with that? Even the creators aren't sure as they struggle to explain it.

Then all at once it seems to fit, almost like magic.

Suddenly engineers and researchers find a use for that "useless" piece and the puzzle starts coming together tremendously fast and the technology takes off, changing the world around it along with how we live in it and experience it. We're seeing that now with things like vector databases. If you asked me three years ago whether anyone needed a vector database I'd say "what for?" Now they've suddenly got new life as the long term memory and caching system of neural networks. Some folks still think they're overkill but they're still proving valuable in this totally unexpected way that nobody could have predicted.

We're been researching AI since the 1950s. It's made progress in leaps and bounds, punctuated by long periods of painful stagnation. We've had landmarks like Deep Blue beating Kasparov in chess, Watson winning on Jeopardy, self-driving cars, and AlphaFold predicting the shape of millions and millions of proteins. But nothing really caught the public's attention the way ChatGPT and GPT-4 caught on.

What was the difference?

All of those other advances were remote and distance.

You read about them but they didn't really seem to touch you.

You read about self-driving cars but you probably never road in one. AlphaFold is incredible but it doesn't mean much to the average person if you're not a scientist or working at a pharmaceutical company. AlphaGo beating Sedol was a watershed moment that meant a lot to many people, especially in Asia where Go is wildly popular, but it was still just a game and didn't seem to mean much to the real world and real people. Maybe you used translation on your phone but you never really thought of it as AI. It just worked. It was a simple and straightforward application, nothing magical to you.

GPT is different. It's multifaceted. It's adaptable. It's right there in front of you. It feels almost supernatural at times. You can ask it anything and there is a good chance it can answer or hold an intelligent conversation about it with you. It's a Swiss Army knife on steroids.

Like Snoop Dog said, "AI right now, this thing is made for me, dis nigga can talk to me, I'm like me and dis nigga can hold a real conversation, like for real, for real. It's blowing my mind because I watched movies on this as a kid...Is we in a fucking movie right now or what?"

Right now I'm using it to help me learn Spanish. I study in Babel but use GPT to talk with because it's so patient and understanding. As a writer, English is my super power. It's super frustrating to learn a new language and struggle to find the right words and to wield that language much less elegantly. I feel like an idiot and I'm good at learning new things. I usually don't mind the struggle and the part where I suck. I'm not afraid to suck. But with language it's different. I hate sucking at it.

But GPT even has an answer for that secret confession of embarrassment, something I'd never share with most people, except my faithful blog readers. :)

It even understood that I meant "can't learn" instead of "can learn". I didn't have to rewrite the question. It understood what I meant.

That's how good it is.

I go back and forth with it. It gives me sentences and lets me translate them and helps me understand what I missed or got wrong and why.

Imagine when every kid has a patient tutor like this, one that never gets tired and keeps encouraging you. No longer will kids be stuck learning from some angry teacher playing out some personal trauma as their only source of information.

We've all had great teachers who changed our lives. They made us fall in love with learning. Do you remember yours? I do. Mr. Dawson. He's the reason I fell in love with writing and books in the first place. He's still a friend to this day.

But how many times did you have a horrible, nasty teacher who made you hate a subject? I had more of these than I did good teachers. Those experiences tend to stick with us our whole life. They warp our trajectory. Maybe we might have been good at math if we just had the right teacher but now it's too late.

What if it didn't have to be that way?

What would it be like if we have good teachers across the board in every subject?

Tomorrow's kids won't have to wonder.

They'll always have a safe and smart teacher ready to help at any hour of any day, who patiently encourages them, like the Young Lady's Illustrated Primer but better.

(Source: Penny from Inspector Gadget with her old school computer book)

A lot of people are worried about the end of education. How about an education renaissance instead? Sure some kids will cheat but some kids have always cheated. Motivated students and life long learners will have powerful tools at their disposable that will supercharge their intelligence in any subject they want.

And that's just the beginning.

Since ChatGPT burst onto the scene, not a day passes without some new, exciting breakthrough. An AI-driven song featuring impeccable impersonations of Drake and The Weeknd recently rattled the music industry. OpenAI opened plugin support to all paying customers and now Zapier, DoNotPay, Expedia, and OpenTable are just a ChatGPT call away. Here's the DoNotPay bot negotiating with a New York Times call center dark pattern worker who's there to try to upsell you when you just want to cancel.

The bot uses third party tools as easily as we walk down the street. Just by tapping out text into a box, or talking to it, Her style, you could order your dinner or map out your next getaway.

The rapid pace of this progress has even spooked Google the tech powerhouse of the last generation. A supposedly leaked presentation from one of their engineers says "we have no moat, nobody does."

It's just getting started.

And despite the frothing at the mouth hysteria swirling around AI, it does raise some big questions.

What does it all mean for us? What does it mean for the economy? What does it mean for society?

What does it mean for me?

That's the real questions everyone is asking. Am I out of work? Do I need to change? Will I matter?

My answer is yes, people still matter. More than ever.

But you will have to change. We all will.

The companies that are really successful will be the ones that augment people and help them, not the ones that want to put everyone out of work.

AI's evolution isn't just an exhibition of technological prowess. It's a demonstration of the symbiotic relationship between human ingenuity and machine efficiency.

Remember, the foundation of this revolution lies in letting humans do what they do best and machines do what they do best. The AI juggernaut is rolling, and it's not about replacing us, it's about augmenting our capabilities and helping us solve more complex, non-linear tasks.

As Peter Thiel wrote in Zero to One, "The most valuable companies in the future won’t ask what problems can be solved with computers alone. Instead, they’ll ask: how can computers help humans solve hard problems?

The companies that win in the coming revolution will leverage human abstract thinking, insight and understanding, along with AI’s ability to see big patterns, distill down massive amounts of information to its essential features, and to scale up complex, non-linear tasks.

In other words, those companies and their applications will be centaurs.

Chiron and the Galloping Centaurs of Tomorrow

The idea of the centaur came to us from Gary Kasparov. After losing to Deep Blue, he sponsored a tournament where you could enter as an AI, a person, or an AI/person hybrid team. An AI hybrid team won. But it wasn’t a grandmaster with an AI.

It was three expert players and an AI.

AI has the ability to add tremendous leverage to every task, to upskill every day workers and to do tasks previously inconceivable with traditional hand written code.

Intelligence has never been scalable. Now it will be.

Yes, AI will also automate some kinds of jobs completely or nearly completely but it will also create new jobs we never imagined. Don't buy into the AI jobs apocalypse. In the long run AI will create an explosion of new jobs. It's easy to imagine all the jobs that will disappear but hard to picture a web designer job if you're an 18th century farmer because it's based on the back of a dozen technologies you couldn't predict in the 1800s, like electricity flowing over wires, computers, the internet, browsers and more.

The AI powerhouses of tomorrow will get built on the key insight that built Google:

Let people do what they do best and machines do what they do best.

The original PageRank algorithm at Google leveraged that insight to become the king of search. Sergey Brin and Larry Page saw that computers were good at counting and humans are good at giving meaning to information. Google's systems relentlessly counted links and people made those links which showed what they thought was the most meaningful and useful information.

Think it's too late to build the AI platform or agent of the future? Remember that Google was the 18th search engine. It's not who gets there first, it's who does it right.

Of course, in the age of AI, what computers are good at has changed. They're still good at counting but they're really good at a lot of other things too. LLMs are great at understanding natural language, reasoning and using external APIs and tools as easily as we use our arms and legs.

But the basic principle remains the same. Computers are good at some things and people are good at different things. Moravec's paradox says it best, the things that are easy for people (walking, abstract thinking, sense of self, common sense) are hard for computers and the things that are hard for people (memorization, long term recall, multitasking, scale) are easy for computers.

Some folks are starting to believe that people aren't good at anything anymore and we'll all soon be out of work. Betting on that thesis is a recipe for losing money and for driving yourself nuts.

Companies that try to automate everyone out of the existence will face fierce and ugly resistance from almost every direction in society.

But how do you build a company that leverages the new possibilities of scalable intelligence and let people do what they do best too?

Take something like a prompt injection defense company.

It will likely be modeled on the anti-virus companies on the past, like Eset, a 500M a year business. It will use signatures, heuristics and neural nets to detect threats, just like anti-virus companies do today and maybe mix in a few new techniques that next-gen models deliver. As it gets installed on more computers and in more pipelines it will see an ever more diverse set of attacks.

But that company would still need smart, creative security engineers who can detect, understand and classify new threats. An AI will not see a completely novel attack and understand why it happened and what to do. It only detects existing patterns well. Humans are real creative at exploits. We're devious and tricky. It's a feature of our intelligence. That means people are still the best at making meaning from information and creatively adapting to brand new situations. AI can't do that yet and there doesn't seem to be many techniques to make it happen. The ones that do exist are mostly in the research phase.

Or take something like security.

AI will bring a boon of fantastic new possibilities in this field and some problems too.

It's a double edged sword. It can slice through previously unsolvable problems like a Gordian knot or it can cut you.

Consider automated PEN testing. It's possible to take the ingredients we have today to build an expert hacking model. It could learn to stealthily try to footprinting an organization, probing for weaknesses before trying various attacks and tools, write its own code and hit organizations from a distributed network of nodes running in the cloud, hunting for a way to break into the organization.

This kind of tool would prove tremendously effective for security firms like Astra Security, one of the top PEN testing companies in the world, or Crowdstrike who helps governments deal with APTs or Advanced Persistent Threats, aka well funded, experienced cybercriminals or hostile foreign military hackers who target high value organizations over long periods of time as their job. It would let a white hat team quickly find and fix issue across an organization. We already have PEN testing software that scans for vulnerabilities but AI will supercharge it. It could do social engineering and dozens of other attacks that only humans can do right now, like generating those spear phishing emails on the fly. It could chain together attacks and look for novel vulnerabilities, running darknet tools en masse and inventing new attack code as soon as vulnerabilities come out.

This can and will revolutionize security for larger organizations. It will make them more robust and harder to crack.

In case it's not totally obvious to you, these tools will also be incredibly dangerous in the hands of malicious hackers, hostile APTs and cybercriminals. It will boost the powers of advanced cybercriminal gangs like the one that stole over 1 billion Euros over five years by infiltrating the banks with their Carbanak and Cobalt malware and causing ATMs to spit out over 10M in a single heist like magic.

Whenever I think about technology I think of it like a game of Go. Attack and defense. Action and reaction. Every move causes an equal and opposite move. So if we have automated attack machines, we'll also have automated defense machines. We're already seeing prototypes of automated code-self-healing. Expect those tools baked into every compiler and security system at scale. We'll see the ability to self-patch, self-diagnosis and quarantine off vulnerability systems in real time.

That leads to another kind of company built on AI that we'll see a lot of in the coming years, AI that helps people code.

If you're building a company to help people program, you should not be looking to build one that completely takes over programming. You won't sell it. You'll see resistance at every step. People will fight you and ignore your software.

Instead, you want to build AI software that helps programmers of all levels. It should teach junior programmers, like Clippy on steroids or the Young Lady's Illustrated Primer for Inexperienced Coders. It should helpfully suggest fixes. It should teach the deep logic of programs so that inexperienced coders learn faster. Think of it like a video game where the first board is always an in-game tutorial. People used to read manuals to learn to play a game and now every game teaches you as you go. The same will happen with coding programs.

For advanced programmers, skilled at doing the deep thinking and abstract thinking behind an advanced system, you want to help them turn that logic directly into working code. This advanced coder, who's written millions of lines of code, is already working that way, getting 10X done and not feeling exhausted after work. As the author writes:

"Since extensively using Copilot and ChatGPT, this cognitive exhaustion is pretty much gone. 6pm strikes and I feel like I spent the day chatting with a buddy, yet 5 PRs have been merged, the unit tests have been written, two tools have been improved and the code has shipped."

The less boiler plate code they have to write the better. Let them do what they do best, think and reason. They can turn off the extra tutorials and explanations and just get fixes done.

That's software you can sell right now.

People will buy it happily because they'll augment their top notch talent and help train the best programmers of tomorrow even faster.

The AI Factories

The next decade will mean the industrialization of AI.

But what does that mean?

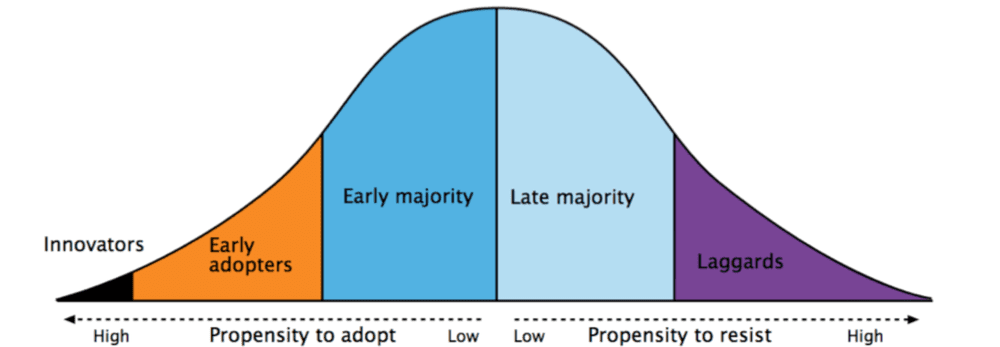

Industrialization is where small, limited purpose prototypes and research become highly refined and repeatable. They become sellable, because many, many more people can use them. The first prototype of any technology is used by a small group. Then it gets picked up by early adopters. That's the classic diffusion of innovation curve.

The same is happening now in AI. AI has come out of the labs into the real world and engineers everywhere are making it smaller, faster and more nimble and more useful.

But right now these systems are unstable, hard to use, hard to deploy and hard to maintain. They've got to get a lot better to be usable consistently. That's where we are right now. Nobody is replacing their CEO with AutoGPT for anything other than a publicity stunt on Twitter.

The original prototypes for making nitrates for soil using the Haber-Bosch process in Germany looked like a Rube Goldberg machine, but engineers refined the concepts and made it repeatable and salable and manufacturable. Today agriculture is radically transformed by the ability to rapidly produce nitrates. Instead of the population bomb, we got the green revolution.

Expect a similar leap over the next decade as better techniques, better algorithms, novel training breakthroughs and new and better chips produce more and more industrial scale intelligence that remakes our world.

But what about superintelligence? AGI only two years away? Super machines that can learn anything in an instant?

If you read the Singularity Reddit, Less Wrong, the newspapers and Twitter, you might have gotten caught up in the feeling that AGI is now just a few short hops away.

It’s not.

So what will it take?

Sam Altman thinks it takes another 100 billion dollars to get to AGI.

That's a big number. Here's a bigger one. My sense is he's underestimating it by an order of magnitude. Think a trillion dollars or even 10s of trillions of dollars.

Despite all the recent success of diffusion models and GPT-4 (and it's a truly amazing system despite what relentless critics say about it), there are major, major unanswered problems in AI that we need to solve to get to the next level.

Even, Altman, the proponent of bigger and better systems recently said “I think we’re at the end of the era where it’s going to be these, like, giant, giant models. We’ll make them better in other ways.”

What does that really mean?

It means for all the genius of GPT-4 it's not truly smart. It doesn't know when it doesn't know something. It can double down on wrong answers. It can do math but it never really learns that 2 + 2 = 4 and knows how to extrapolate the rule behind arithmetic to do more arithmetic. It has little grounding in meaning or experience.

Take the case of a foolish and painfully uninformed teacher at Texas A&M University. He was so worried about cheating that he ran every student essay through ChatGPT twice and asked it if it wrote those essays. He failed most of the class because ChatGPT said yes. But actually ChatGPT has no way to tell whether it wrote anything. It can't search a database of things it wrote. So what is it doing really? Just predicting a likely next word to respond to a human. Yes or no. It's basically totally random. It has to answer something so it makes up an answer. It's essentially a coin flip when you ask it a question like that. You can try it yourself. Ask it the same question multiple times with something you wrote yourself and it will give you different answers because it really doesn't "know" anything like this. Unfortunately, the teacher doesn't know the system works this way and thinks ChatGPT must know whether it wrote something when all it's doing is flipping a coin on yes or no.

These kinds of problems are not easily solved by current techniques and folks who think they are don't really understand the limitations of what we're doing right now.

So what do we need to have truly incredible and intelligence systems?

Organizations like DARPA are usually far ahead of the curve in seeing what we really need to advance to the next generation of a new technology. Here's a brief list of open challenges that DARPA and other forward thinkers see as the problems we need to solve to get to truly powerful AI systems.

Novel training methodologies, such as teaching AI the way we teach children via demonstration, and other techniques that don't involve huge amounts of data

Techniques that offer Inventiveness and new ideas generation

The ability to adapt to unknown and totally new circumstances outside of training data

Or how about true continual learning and common sense reasoning, not to mention the ability to run on a lot less power

Don't get me wrong. None of this means that we won't have truly awesome and powerful systems in the next decade.

There is a lot of mileage to get out of the current systems and the current techniques, even with their limitations.

We'll do that by doing what people are doing right now, layering on outside augments and connecting these systems to other platforms and systems that can mitigate those weaknesses. The current stack is looking like vector databases for long term memory and recall, outside symbolic knowledge bases like Wolfram Alpha, web browsing capability, and connections to other models like the JARVIS/Hugging GPT approach.

A combination of LLMs, augmentation techniques like longer memory wrappers for infinite context or self-notes for better reasoning, connected to memory and state databases, and the ability to reach out to external knowledge retrieval and external SaaS tooling can go a long way to giving us the feeling that we've got a generalized intelligence that can do almost anything.

In other words, we're going to build complete systems around the current models that mitigate their weaknesses and enhance their capabilities. I expect to see:

New and more powerful databases for long term storage of ideas/thoughts/reasoning

Better and faster long term memory built right into the model itself or through opportunistic caching

Middleware that wraps the models and the connections to external sources

Editable visual logic trees and planning

Automatic fine tuning

Security systems that protect the model at each stage of connection

Robust synthetic data generation

The ability of models to replicate themselves and delegate subtasks to those models and coordinate between them

The evolution of LLMs into Large Thinking Models (LTMs) that use external tools, code and models as easily as we use a shovel or a ladder

What are we likely to get from this?

Barring some astonishing breakthrough that gives us true generalized intelligence and changes the trajectory of the future, we're most likely to get a "simulation of advanced intelligence."

What do I mean by that?

I mean something that feels very very human and smart and even superhuman. It rarely makes mistakes, rarely hallucinates, and makes good sound decisions over 99% of the time. I expect it the follow the curve of progress we saw with spam filters. We went from 70% accurate with rules and heuristics to 99% over time as Bayesian techniques took center stage. Now we rarely see spam in our inboxes because it's gotten so good.

So if we've got an approximation of a generalized intelligence, what's the difference?

If I can simulate AGI isn't that good enough?

No.

As the Waymo CEO said recently, "We're 99% of the way there on self-driving cars but the last 1% is the hardest."

The cars can't adapt to totally difference situations or completely unexpected challenges. They get stuck when they go down a one way street and it's blocked off and then have to make a decision to break the rules and backup down the street or go over a sidewalk.

In other words, they can't adapt and improvise reliably. They can't color outside the lines and break the rules like we do. We know what the goal is, what the parameters are to get it done and we can ad-lib within those rules.

Or let's say we train up a model to design rockets. It's the greatest rocket designer in the world. It's distilled all the knowledge of past rocket scientists. What is it missing? The ability to dream up a totally different way to get to space, like a solar sail or some never before imagined ideas. It's a rocket maker and it won't be able to abstract away that concept of space travel and come up with a totally new, never before thought of platform to get us to the stars.

That is what humans do that AI can't still touch:

Abstraction.

A model may learn that knives are sharp after studying knives again and again. A human just needs to get cut one time to understand the concept of sharpness = pain = danger. They'll take that abstract concept of sharpness and apply it to spikes and jagged rocks and other sharp things and then avoid them so they don't get cut.

Our greatest thinkers do abstract reasoning and apply that abstraction across different domains to solve incredible problems. It's what I do when I make predictions about the future. I abstract out all the underlying patterns I've seen and studied my whole life and I apply them to the future. I'm good at it because I've spent a much of my life doing it and there is simply no machine on Earth that can do it like I can just yet.

A human being can study all the possibility spaces of a problem, draw inspiration from many disparate places and dream up a totally new concept (a rocket) that has never existed before and then engineer it and land it on the moon the first time.

Something that can adapt to totally new situations, make novel breakthroughs, understand new domains without new training are outside the realm of current systems and techniques. We won't get the ability to create new concepts and ideas from what we're doing now with AI. It will take something that's missing and that's all right.

I'm a big fan of scale and the Sutton principal in machine learning which says "The biggest lesson that can be read from 70 years of AI research is that general methods that leverage computation are ultimately the most effective, and by a large margin." General purpose techniques like backpropagation or evolutionary algorithms or reinforcement learning that scale have consistently proven again and again to outpace smaller expert systems or heuristics.

But that doesn't mean we've discovered all the necessary generalized learning techniques that we need.

The Push / Pull of Progress and Fear

Fears over tech and tech destroying jobs are nothing new. Paranoia about technology is nothing new.

But progress in the modern world is very real and it's because of technology. You often see fools on Twitter post something like "progress" in quotes to say progress is not real progress and life is just a horrible and miserable crawl through the muck in the modern world.

Nothing could be further from the truth. We're the luckiest 1% of 1% of people to ever live. People putting progress in quotes have no sense of history and don't seem to know that life in the past was often nasty, brutish and short.

Nearly half of all children used to die, rich or poor, in every country on Earth, into the late 1800s. In most places now it's single digits or a fraction of 1%.

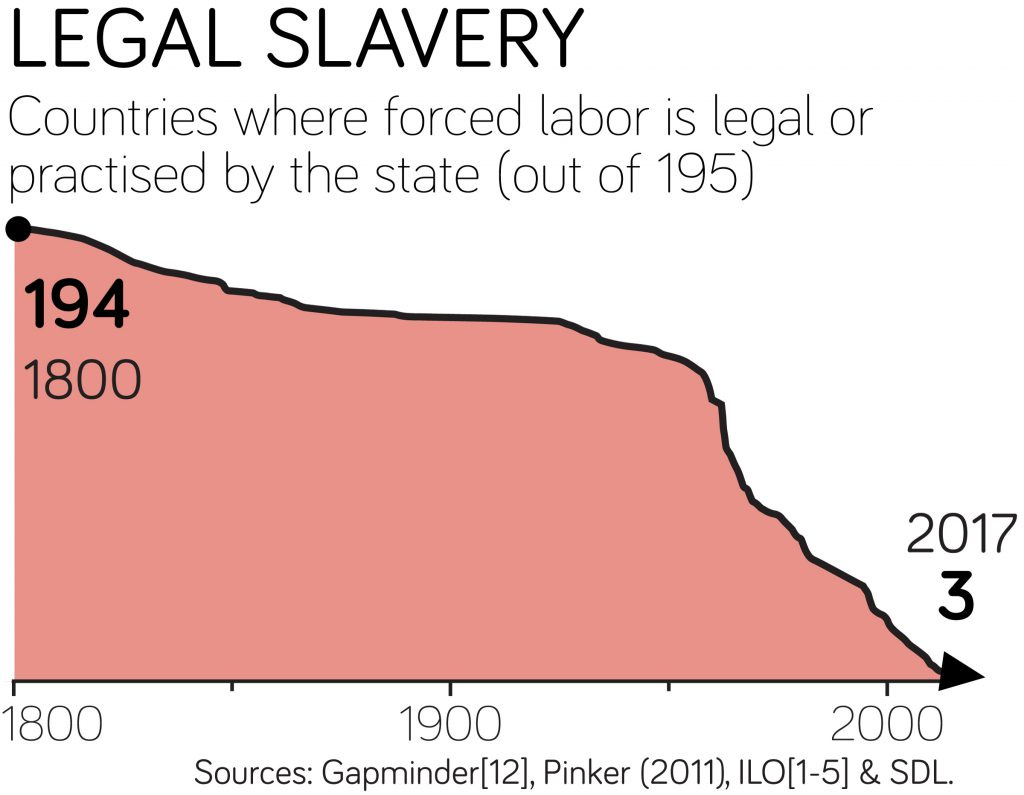

Slavery was the basic fact of life in every country and all ancient empires. If you lost the war, you became a slave. Towards the end of the Roman Empire as many as 80% of the people were former slaves. Now very few people live in the horror of slavery.

We eradicated one of the most horrific diseases in history through mass coordination and science. Straight up eradicated it. Go look at pictures of people with smallpox. This is not a disease you want.

The number of girls in school is up to 90% as of 2015 when it was as low as 65% in the 1970s. More educated people is always a good thing for society. The smarter we are, the more resilient we are to solving problems.

Our ability to understand the world has increased exponentially with the scientific revolution. Educated people once believed witches caused storms, knives would bleed near murder victims + that mice spontaneously materialized in hay.

Now we have medicine that actually works instead of snake oil and we can predict the weather pretty damn well and we can find criminals through finger prints and DNA.

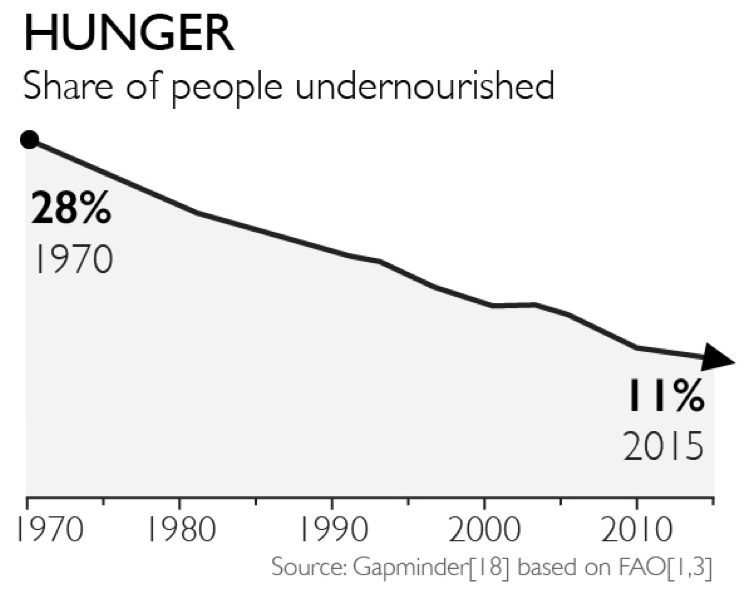

In the 1960s the book The Population Bomb predicted that we'd need to let 2 billion people starve because there just weren't enough resources to go around. Instead we got the Green Revolution and can sustain more folks than ever.

Progress is real. The only illusion is not seeing it.

Anti-progress people will often throw out lines like "well are nuclear bombs good, huh?" Of course, all progress is not good. Progress is not perfect. We sometimes take two steps forward and one step back. But calling out a single technology that is designed to one thing and one thing only, kill people, doesn't detract from the rest of the progress we've made in history through science, engineering and intelligence. And even technology gave us nuclear reactors, one of the cleanest, greenest power sources, and X-rays which were a major boon to medicine and treatments.

The fact remains, the smarter we get, the more scientific, the more educated, the better society gets over time.

We always benefit from getting smarter, from jumping beyond magical thinking like witches causing storms to understanding how barometric pressure works and influences the weather.

AI will accelerate that progress and our intelligence like never before in history. It will help us discover new materials, design better and safer drugs, drive cars for us and make driving much safer, saving millions of lives per year, to name just a few.

In the end, opposition to a new technology eventually falls away over time, as it becomes integrated into every day life.

In 19th-century Britain, Luddites burned factory machines. We got the term “automation” when wartime mechanization sparked a wave of panic over mass joblessness in the 1950s. Back in the late 1970s, Britain’s prime minister, launched an inquiry into the job slaughtering potential of the microprocessor. In a widely cited paper a decade ago, Carl Frey and Michael Osborne of Oxford University, claimed that 47% of the American jobs could be automated away “over the next decade or two”.

What's actually happened?

We've had massive new markets, more diversified jobs over the last forty years. Now labor markets are getting tighter as the Baby Boomers retire. Most rich countries are not making enough babies to replace all the retiring workers. Despite all the headlines of laid off tech workers, there are actually two vacancies for every unemployed American, the highest ever. America’s manufacturing and hospitality sectors report labor shortages of 500,000 and 800,000.

Don't bet on the automation of all jobs. Don't bet on the Singularity. Don't bet on AGI doomsday.

We need more intelligence now. We need to speed up AI, not slow it down or regulate the hell out of it before it even gets out of the cradle.

We'll solve the problems of AI in the real world, like all problems are solved. Imaginary problems are not solvable, only problems in the real world. If machines get to a place where they do things we don't want, we'll figure it out. And those solutions will be based on all the practical, real world solutions we learned from solving today's challenges with AI not with dreaming up imaginary solutions to imaginary challenges. Those solutions will form the basis and the bedrock for future solutions, not arm chair philosophizing by people with a problem for every solution.

So if you're out there working to amplify human potential, working to make workers smarter and faster or more creative, working to bring ambient intelligence to every industry on Earth, then you're on the right track. You've got something.

People still matter, more than ever.

And everything in the world will benefit from getting smarter.

Wow, thanks. Will give it a try.

Love this article!