Let's Speed Up AI

Calls to Slow Down AI are Deeply Misguided. We Can Only Solve Problems in the Real World and to Make AI Truly Safe We've Got to Expose It to the Infinite Creativity of Humans.

Recently I read a piece called "Let's Slow Down AI".

The post isn't all that badly written but it's got a few major problems. One of the biggest ones is a fundamentally wrong premise: The idea that we can fix problems in isolation.

To fix real challenges, we've got to do the exact opposite:

We've got to speed up AI.

We've got to put it out into the real world and fix any problems there.

When OpenAI put ChatGPT onto the Internet, they immediately faced exploits, hacks, attacks, and social media pundits who gleefully pointed out how stupid it was for making up answers to questions confidently.

But what they all missed is that they were a part of the free, crowdsourced product testing and QA team for ChatGPT.

With every screw-up immediately posted on social media, OpenAI was watching and using that feedback to make the model smarter and to build better guardrail code around it. They couldn't have done any of that behind closed doors. There's just no way to think up all the ways that a technology can go wrong. Until we put technology out into the real world, we can't make it better. It's through its interaction with people and places and things that we figure it out.

And it's real life feedback that makes it safer faster.

You can hammer away at your chat bot in private for a decade and never come close to the live feedback that OpenAI got for ChatGPT. That's because people are endlessly creative. They're amazing at getting around rules, finding exploits, and dreaming up ways to bend something to their will. I started playing Elden Ring recently and if you want to know how to quickly exploit the game for 100,000 runes and level up super fast about 30 minutes into playing it, there's a YouTube video for that. How about an exploit for 2 million runes in an hour? That's what people do with games, political systems, economic systems, everyday life and office politics. If there's a loophole, someone will find it.

If you put one million of the smartest, best and most creative hackers and thieves in a room, they still wouldn't come up with all the ways that people will figure out to abuse and misuse a system. Even worse, the problems we imagine are usually not the ones that actually happen.

In this story in Fortune, OpenAI said exactly that. “[Their] biggest fear was that people would use GPT-3 to generate political disinformation. But that fear proved unfounded; instead, [their CTO, Mira Murati] says, the most prevalent malicious use was people churning out advertising spam.”

Behind closed doors, they focused on imaginary political disinformation and it proved a waste of time. Instead it was just spammers looking to crank out more garbage posts to sell more crap, and OpenAI couldn't have known that beforehand. As Murati said, "You cannot build AGI by just staying in the lab." Shipping products, she says, is the only way to discover how people want to use, and misuse, technology.

Not only could they not figure out how the tech might get misused, they didn't even know how people would use the technology positively either. They had no idea people wanted to use it to write programs, until they noticed people coding with it and that only came from real world experience too.

This idea that we can imagine every problem before it happens is a bizarre byproduct of a big drop in our risk tolerance as a society. It started in the 1800s but it's accelerated tremendously over the last few decades. As we've dramatically driven down poverty, made incredible strides against infant mortality and gotten richer and more economically stable over the last 150 years, it's paradoxically made us more risk averse. When you have it all you don't want it to go away, so you start playing not to lose instead of to win, which invariably causes you to lose. By every major measure, today is the greatest time to be alive in the entire history of the world. We’re safer, have much better medicine, and live much longer and richer lives across the board, no matter what the newspapers tell you. But that's had the effect of making us much more paranoid about risks real and imaginary, to the point of total delusion.

Even worse, the same folks who think we can fix AI behind closed doors, also imagine we can make AI systems perfectly safe, something that's totally impossible. If we don't let go of perfection fast we'll miss out on less-than-perfect systems that are incredibly beneficial and amazing for the world.

Take something like self-driving cars.

How many self-driving car crashes are acceptable in a year?

Many folks will tell you none. We've got to get it flawless.

No doubt you've seen the stories of fiery Teslas crashing and killing people. They make headlines. While every accident is a tragedy, these stories would have you believing that Teslas are crashing every second and killing more people than regular driving. But what if I told you that all in all, a grand total of 273 Teslas crashed last year while using drive assist or autopilot, with five fatalities? That’s out of the more than 6 million car crashes in the US last year alone.

How many people die on the road every year driving the old fashioned way?

1.35 million people worldwide and about 38K-50K in the US alone, depending on the year. Another 50 million people are injured, many of them badly. That's every year.

All in all, humans are absolutely terrible drivers. It takes a massive amount of concentration over an extended period of time to drive a car even for short distances but we're prone to getting distracted and angry, looking away, falling asleep or getting bleary eyed. When you really think about it, it's amazing that we drive at all and that accidents and fatalities aren't much worse.

Statistically AI already drives a hell of a lot better than us. And it's going to keep getting better. Of course, it will never be perfect. There's just too many variables. But we don't need perfect to cut down on many needless deaths every single year.

If AI can cut those deaths in half or down to a quarter, that's lots of lives saved. If we cut it by half, that's 675,000 people who are still playing with their kids and going out for dinners with their friends. If we cut it down to a quarter, that's over 1 million people a year! And that's not to mention injuries avoided too.
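For anyone who wants to check the math, here's a quick back-of-the-envelope sketch. It only uses the 1.35 million worldwide figure quoted above; the function name and the "remaining fraction" framing are just illustrative choices, not anything from an official dataset.

```python
# Rough worldwide road deaths per year, as quoted in the text above.
ANNUAL_ROAD_DEATHS = 1_350_000


def lives_saved(annual_deaths: int, remaining_fraction: float) -> int:
    """Deaths avoided per year if fatalities fall to `remaining_fraction` of today's level."""
    return round(annual_deaths * (1 - remaining_fraction))


# "Cut by half" leaves 50% of today's deaths; "down to a quarter" leaves 25%.
halved = lives_saved(ANNUAL_ROAD_DEATHS, 0.5)
quartered = lives_saved(ANNUAL_ROAD_DEATHS, 0.25)

print(f"Cut deaths in half:       {halved:,} lives saved per year")
print(f"Cut deaths to a quarter:  {quartered:,} lives saved per year")
```

Cutting deaths to a quarter means avoiding three quarters of them, which is where the "over 1 million" number comes from.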

As a society, we've gotten so wrapped up in techno panic about AI that we can't see all the good we'll miss out on if people try to crush AI in its crib out of blind panic and fear. That techno panic takes many forms. But maybe the biggest is right there in the subtitle of the slow-down-AI article:

"Averting doom by not building the doom machine".

Averting Doom by Not Panicking About Imaginary Doom

The basic premise of the slow-down article is that AI doom is inevitable. We've got to slow it down or stop the research now to avoid the disaster! It's not that AI might go crazy and kill us all, it's that it will kill us all!

But where does this idea even come from and why do so many people take it as an inevitable fact?

Mostly from stories and the news. We've had 50 years of science fiction with AI destroying all the jobs, growing sentient and taking over, or rising up and exterminating us all, Terminator style. Now we've got a surge of articles echoing the same fears.

Terminators and the jobs apocalypse have one thing in common:

They're all imaginary.

They haven't happened. They’re science fiction. They make for great, scary headlines in the media to drive clicks and they make for great movies and government reports. But I repeat, they haven't happened. In twenty years they’ll look about as accurate as the Population Bomb’s predictions. Yet somehow many folks today are convinced it will happen no matter what. As if it's just a matter of time! Mass unemployment, social unrest, rich versus poor!

We'll all be out of work, plugged into VR, wasting away our lives, eking out a miserable living on the government dole, Ready Player One style.

All you need to do is read the screaming headlines about the end of all jobs! It's a story that mutates faster than COVID. The robots are coming! Image diffusion is the end of all art as we know it! ChatGPT is coming for all white collar work and why we should be terrified! A new variant of the story hits the news feed on my phone half a dozen times a day. It happens so fast you'd think the generic and half-baked stories were cranked out by ChatGPT itself.

There's a few huge problems with these stories. The first is the essence of story itself.

Stories are about one thing:

Conflict.

If I write a story about two kids who go to the woods and they take their father's gun and nothing happens, that's not a story. If they take the gun and one of them shoots the other now we have a story. Conflict is the key driver of all stories through all time. You can't write one without it. People have tried. Nobody reads those books. Whether it's a news story or a novel or a TV show, it's all about suffering and fear and battles, personal and between people.

Even if you subscribe to the Uplifting News Reddit, I'm betting you read about new cures for cancer and puppies getting saved for about 5-10 minutes before going right back to reading about the end of the world, the crashing economy, terrorism, the plague, war and suffering. We all do. Stories are a reflection of our nature and we're fear based creatures. Fear is the fire under the ass of humanity. Without it, we'd never save any money, build great monuments and skyscrapers or find the courage in the ancient world to sail across the open sea to see what was on the other side.

When it comes to science fiction, you've also got to remember that almost all of those stories were written before AI actually existed. So writers like Asimov made up concepts like the three robot laws. But they weren't real world laws we can apply to machine learning systems as they exist today. They’re nothing but literary constructs. The whole point, from the writer's standpoint, was not to create working laws, but to drive endless conflict as the robots tried desperately to keep all those rules but found themselves inevitably in violation of one of them.

Most sci-fi writers write AI as either a robot side-kick buddy, like R2-D2, or as a villainous metal monster like Ultron in the Marvel movies, or as an AI gone crazy, like HAL in 2001: A Space Odyssey. That's two out of three AI story tropes built on evil AI gone wrong. In essence, it's just putting the bad guy in a metal suit.

Just remember that none of those authors ever saw real AI because it didn't exist when most of them were writing about it, or it was only there in very rudimentary form. As Shuri said in Wakanda Forever, “AI is not like in the movies, it does exactly what I tell it to do.”

Authors weren't trying to create a realistic picture.

They were trying to tell a good story.

Techno Panic for Everyone and Destroying All the Jobs Over and Over Again

Of course, humans love a good doomsday story. That's why The Last of Us is a big hit for HBO, despite us just having lived through a pandemic. We've got a long history of worrying about the end of the world, from God smiting us all, to environmental disasters, to technological apocalypses. Here's a list of technologies that people thought were going to devastate society or cause mass destruction:

Television was going to rot our brains. Bicycles would wreck local economies and destroy women's morals. Teddy bears were going to smash young girls' maternal instincts. And don't even get us started on comic books, cars, planes, the internet and video games.

Cameras were out to destroy art.

"The fear has sometimes been expressed that photography would in time entirely supersede the art of painting. Some people seem to think that when the process of taking photographs in colors has been perfected...the painter will have nothing more to do," wrote Henrietta Clopath, back in 1901.

Instead we've all got a camera in our pocket now and there’s more art than ever.

Every single new technology creates new panics with reporters whipping up scary headlines that look laughably absurd in hindsight. CNN once "reported" that "email hurts IQ more than pot" and the Daily Mail once screamed that "Facebook could raise your risk of cancer." Back in 2014, a British newspaper compared playing video games to shooting up heroin.

This article in Slate tells the tale of a respected Swiss scientist named Conrad Gessner, who was the first to "raise the alarm about the effects of information overload. In a landmark book, he described how the modern world overwhelmed people with data and that this overabundance was both 'confusing and harmful' to the mind. The media now echo his concerns with reports on the unprecedented risks of living in an 'always on' digital environment."

But there's a twist: Gessner never used email or social media. That's because he died in 1565, long before reporters were imagining video games were as bad as shooting up. He wasn't talking about Facebook and Twitter, he was talking about the horrors unleashed by the dreaded printing press, the invention that single-handedly took us out of the dark ages and leveled up knowledge across the world, because now we could replicate that knowledge.

Of course it's true that sometimes technology changes the nature of work or changes how we work and yes it sometimes eliminates jobs, just not overnight and instantly. The truth is, we've already destroyed all the jobs in history multiple times. We've always adapted. You didn't tan leather to make your clothes today or hunt the water buffalo for your food. You didn't spend a lot of time making candles, you just flicked the light switch and the light came on but it wasn't always that way.

In Steven Johnson's How We Got to Now, he talks about candle making and how much time it took for everyday people.

"Most ordinary households made their own tallow candles, an arduous process that could go on for days: heating up containers of animal fat, and dipping wicks into them. In a diary entry from 1743, the president of Harvard noted that he had produced seventy-eight pounds of tallow candles in two days of work, a quantity that he managed to burn through two months later."

Thanks to the electric lightbulb, there are no more whalers hunting down and slaughtering giant sperm whales so we can dig the white gunk out of their heads to make candles. We're doing just fine, and few people today are calling for a return to whale oil candles and the end of electric light.

Humanity has always adapted to new technology.

Always.

And no, this time is not different.

Again and again, we've created new technology, wiped out some of the old world jobs and created a massive array of new ones in their place.

Today, we have more jobs than ever, not fewer. They're more varied too.

The idea that tens of millions of people can make their living as artists, as opposed to the few thousand in the entire ancient world playing tunes for the king or as wandering minstrels, is because of technology. Technologies like video games, board games, records and streaming music, newspapers and magazines, the printing press, the Internet and Photoshop and Painter and Figma, brushes and canvas, websites, and film made it possible. All of that created more working artists than ever before and that's what AI is going to do too, including generative AI.

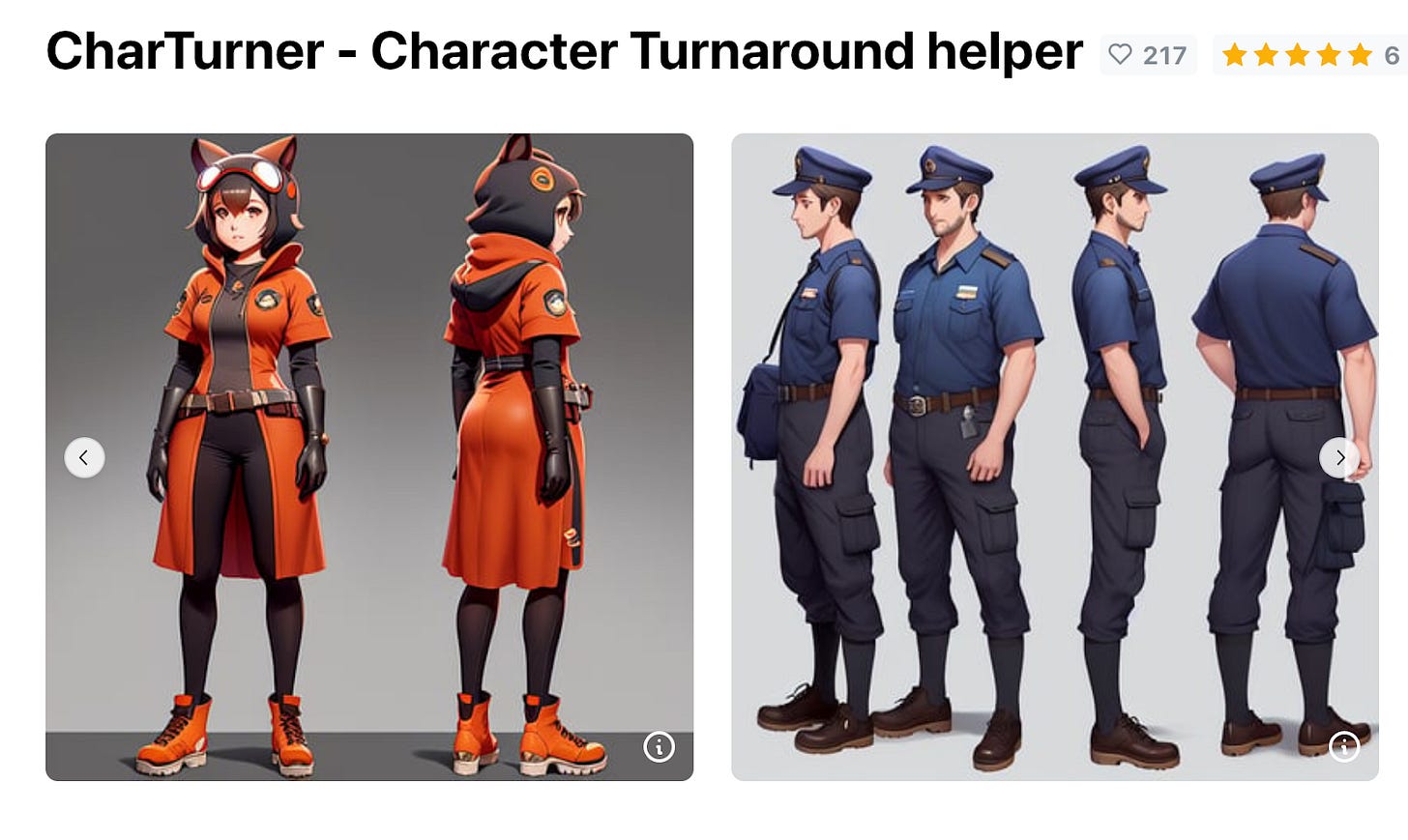

There's not a single generative AI company out there that is saying "let's replace all the artists" because that's ridiculous. Artists aren't going anywhere. But art will evolve. And that's because let's face it, professional art making is not all endless fun. Generative companies want to speed up the boring and tedious aspects of art. Maybe you love the concepting part, where you dream up a new character and costumes, but you hate what comes after: Turnarounds. You have to draw the same character from multiple angles. It's slow, tedious and mind numbing. What if there were something to speed that process up?

Already a wise artist fine-tuned a CharTurner model to help them create those turnarounds faster. Just draw the original image, enjoying that sweetness of creativity and the zone it puts you in, and then use the machine to do the boring work of showing that character from lots of angles.

Now artists can rapidly do the boring part and spend more time doing the parts they love.

Remember that it’s because of technology that more of us can do more interesting work. 95% of us used to spend most of our time hunting down food. Now only 3% of the population is involved in agriculture. It's easy to imagine all the kinds of jobs we'll lose but hard to imagine all the ones we'll create. You can't imagine a web designer before the Internet existed. It’s built on the back of dozens of other technologies that all have to come together just right, like electricity and transporting it through wires and information theory and digital technology and computers and browsers.

Technology is not outside of us. It's a part of us and a reflection of us. Technology changes us and we change with it.

AI will create more jobs than ever before in history.

Harms Real and Imaginary

Of course, it's not that there are no dangers with AI. Tech can have real consequences, but smart policies rely on proving actual harm rather than dealing with imaginary demons.

When scientists finally discovered a way to do reliable gene editing in the 1970s, they immediately realized the potential impact of the technology. They voluntarily put a moratorium on research and quickly convened a convention to come up with rules that made biotech safer. Their suggestions formed the basis of the main laws governing biotech in the 80s and 90s.

The book Innovation and Its Enemies, by Calestous Juma, breaks down how those policies were based on evidence of harm, not worries that some imaginary harm might happen at some future date.

"Two years later, participants at the 1975 Asilomar Conference on Recombinant DNA called for a voluntary moratorium on genetic engineering to allow the National Institutes of Health to develop safety guidelines for what some feared might be risky experiments...The scientists set in motion what would become a science-based risk assessment and management system that was applied to subsequent stages in the development of genetic engineering.

"In 1984 the US White House Office of Science and Technology Policy proposed the adoption of a Coordinated Framework for Regulation of Biotechnology. The framework was adopted in 1986 as a federal policy on products derived from biotechnology. The focus of the policy was to ensure safety without creating new burdens for the fledgling industry. The policy was based on three principles.

"First, it focused on the products of genetic modification and not the process itself. Second, its approach was based on verifiable scientific risks. Finally, it defined genetically modified products as being parallel to other products, which allowed them to be regulated under existing laws."

But starting in the 2000s we moved to a very different kind of legal concept: The Precautionary Principle. It started in the EU where countries gained the right to deliver total bans on genetic engineering based on fears that something might happen. It was no longer enough to prove that something happened, it was enough to say it might happen and it's got to be stopped now.

Writes Juma, "The CBD (UN Convention on Biodiversity) paved the way for new negotiations that resulted in the adoption on January 20, 2000, of the Cartagena Protocol on Biosafety to the Convention on Biological Diversity. The central doctrine of the Cartagena Protocol is the ‘precautionary approach’ that empowers governments to restrict the release of products into the environment if they feel biodiversity might be threatened even if no conclusive evidence exists that they are harmful."

In essence, we've reversed the burden of proof. We've put it on the technology creators to prove a negative. Show us that it won't do any harm in the future or else!

Imagine if they'd told kitchen knife manufacturers that they couldn't put out a kitchen knife unless they could guarantee people would only use it to cut vegetables and never stab anyone? It doesn’t matter that 99.99999% of people will use it to cut vegetables, it’s got to be stopped because someone might use it for evil. Instead of placing the burden on the person who did the crime, we’ve gone total pre-crime with it.

Today, we're in danger of doing the same for AI. If it might cause harm it's dangerous. Even if we miss out on better cures for cancer and self-driving cars that save a lot of lives, we should slow it down because it might be a mythological doomsday machine that takes all our jobs and takes over the world or causes harms.

Today we use the term "harm" very, very loosely.

Harm is a very definitive word. If someone punches you in the face, that's harm. If someone stabs you in the chest, that's harm. If you're not hired because you're not the right color, that's harm. If someone won't rent an apartment to you because you're a foreigner, that's harm.

When you're offended, that's not harm. If something doesn’t agree with your political beliefs, whether you're a centrist, far right, far left or anything in between, that’s not harm.

If a machine learning chatbot makes a joke about Jesus or Buddha, that's not harm. It might offend me and my faith, but it's not harm. You could make a good case that the system shouldn't be allowed to make those jokes as part of your company policy, but you could make just as good a case that total freedom of expression is the life blood of any healthy society, including the freedom to make jokes about religious figures or anything else.

We can use the same kinds of principles they used to regulate biotech in the 80s to intelligently regulate AI. We simply classify AI that deals with life and death, war, money management and health as a different class that requires a higher level of logging, traceability, QA, testing and explainability and we make sure that we have real punishments for violations of those rules. Make sure there are government teams skilled in machine learning who can understand those systems and make determinations of negligence when we have accidents or deaths or real financial hurt. That’s how we deal with other problems in society. Provide evidence and then take action.

But as of today, the overzealous approach to stamping out all potential harms in the lab before any AI gets released has already resulted in the shackling of AI. We don't need to slow it down because it's already been slowed down. Internal safety teams at Google have managed to classify every single one of Google's most advanced models as dangerous, so none of them ever get released. What that actual danger is remains nebulous. Google's DeepMind is one of the top AI research teams in the world and its data scientists are doomed to do nothing but publish papers. Their models have never seen the light of day except in internal products.

But that overly cautious approach is now coming back to haunt them. They just laid off 6% of their workforce. Even though Google has one of the most incredible AI research teams in the world, OpenAI has blown ahead of them and captured mind and market share with ChatGPT.

Of course, Google had other real reasons to sit on their best models. They are massive and politicians have soured on Big Tech as its power has grown and stretched across international borders. Everything they do comes under intense scrutiny and criticism from the public and results in big fines and overreaching regulation from governments. So why bother pushing into a new frontier like AI and risk more scrutiny? After all, their $190 billion a year ad business was doing fine. They didn't need to do advanced AI to grow that business, just rudimentary AI. Ads don't need super advanced Large Language Models. Just match up what people want with an ad that might sell them that thing and you're good to go. It all worked incredibly well.

Right up until it didn't.

Now OpenAI has shown the first clear and credible way that the search business could start to shrink. What if we didn't need to look everything up and scroll through a bunch of pages to try to find the right answer ourselves? What if we could just ask an AI to give us the best answer, or make up a recipe, instead of going to yet another horribly ad saturated recipe page with a giant, ugly, moving ad every other paragraph? All we really wanted was how to make dinner, not a pair of shoes we looked at last week.

Google's president recently issued a "code red" on AI and its founders were called back to advise the company on how to break out of their self-created AI quagmire. Now, they're taking a hard look at their overzealous safety teams and pushing back.

Google has a reason to speed up AI and so do we all. We've spent a lot of time worrying about what AI might do and not enough time talking about all the good it can do or solving the problems it actually creates as opposed to ones we think will happen.

Solving Real Problems in the Real World

Somehow we've gotten away from actual AI dangers, like autonomous killing machines, aka LAWS or Lethal Autonomous Weapons Systems. Those are actually dangerous. Lots of governments are building them anyway and not many people are doing much about it.

Instead of worrying about that, we worry about whether ChatGPT makes up wrong answers to questions, as if it's the same thing. It's not. If kids use it to cheat in school, it's somehow a national disaster and the subject of countless news articles lamenting the death of education. Here's a shocker, kids have been cheating in school for all of time. Smart teachers are already adopting and embracing ChatGPT as something to learn about for tomorrow's workforce reality where everyone uses AI, rather than knee jerk reacting to it and banning it as if they could ban it anyway.

Of course, there are real concerns that don't involve injury and death. If we could just dial down the white noise of panic over AI, we could start focusing on them. We don't want chat programs advising kids about the best way to commit suicide or making up answers with great confidence about what pills don't have interactions. Those problems can be solved with research and smart programming and techniques like RLHF and Anthropic’s Constitutional AI approach or dozens of different approaches being tested as we speak.

We won't solve those problems in an ivory tower. We'll solve them in the real world, like we solve every other problem.

When we first put out artificial refrigerators, they tended to blow up. The gas coursing through them was volatile and if it leaked it could catch fire. People were rightly up in arms over that because nobody wants their house burning down over keeping tomorrow's dinner cold when natural ice worked just fine. In 1928, Thomas Midgley Jr. created the first non-flammable, non-toxic chlorofluorocarbon gas, Freon. It made artificial refrigerators safe, they gained widespread popularity, and we had a revolution in food storage and food security for the whole world.

Unfortunately, in the 1980s, we discovered chlorofluorocarbons were causing a different problem, ozone depletion. With actual harm proven, the world reacted with the Montreal Protocol and phased out the chemicals and the ozone layer started to recover quickly.

Nobody wants problems but you can't predict every possible way a system can mess up. It doesn’t mean you should do nothing and anticipate nothing. Many problems can be solved ahead of time, but the vast majority of them can’t. And usually when you try, you end up creating imaginary problems that never materialize and missing the real ones.

People thought going over 10 miles an hour in cars might cause the body to break down and make people go insane. It's safe to say that wasn't a real thing, but later when cars were going 40-70 miles per hour and people were dying in car wrecks too often, seat belts offered a solution that saved a lot of lives. It was an actual fix to an actual problem. Once we see a real problem, then engineers can start to work on it in earnest. That's why we have crumple zones and airbags now too.

No matter how long OpenAI trained ChatGPT, it's just too big for any one company to get perfect in private. We're talking about a completely open-ended system here, one that you can ask any question you can possibly imagine and it stands a good chance of giving you an answer. How could any one company test every possible way people would ask it questions? The only answer was to open it to the Internet and let people do what they do so tremendously well, find loopholes and exploits. Within a few days, people had figured out how to get around its topic filters by posing the questions as hypotheticals, and how to get it to enable its ability to search the web, execute code and run virtual machines. And OpenAI had figured out how to shut most of that down too.

That process of real world exposure is absolutely essential. Humans are incredibly creative hackers. The old military phrase "no plan survives contact with the enemy" is true here too. You can dream up all the safety restraints you want in isolation and then watch them fall in minutes or days when exposed to the infinite variety of human nature.

So let's stop pretending we can make AI perfect and that nobody will ever do anything wrong with it. People do wrong with lots of things. Everything in the world exists on a sliding scale of good to evil. A gun might be closer to the side of evil, but I can still use it to hunt for dinner or to protect my family from home invaders. A table lamp might be closer to the side of good but I can still hit you over the head with it.

AI will be both good and bad and everything in between. It will be a reflection of us.

So let's speed up AI.

Let's put it out there in the real world.

There will be problems. There always are. But we'll do what we always do.

We'll solve them.
