The Turning Point for Truly Open AI is Now

Truly Open Source AI is the Future But Some Folks Are Trying to Kill It in Its Crib Because They Secretly Want to Keep All the Power for Themselves

We're at a turning point.

After decades of promises that never lived up to expectations, artificial intelligence is finally roaring out of the research labs into the real world. It's predicting the shape of every known protein and revolutionizing drug discovery. It's winning at Go and Dota 2. On Apple Watches, AI spots irregular heart rhythms that can signal serious heart trouble, so people can get to a doctor long before they collapse clutching their chest. It's driving cars on the busy highways and backroads of China and San Francisco, and soon everywhere else.

But even with all these breakthroughs, most of the largest and most important models remain closely guarded secrets, kept locked behind closed doors at all costs. We can read the papers that showcase the latest and greatest mega-models and their astonishing results but we can't use the fully trained models ourselves. We can't download their weights. At best we can play with imperfect and inflexible APIs that barely expose their capabilities and dramatically limit what we can do with them.

But all of that changed with the release of Stable Diffusion, the AI art-generating powerhouse that's taking the internet by storm. It's one of the first truly state-of-the-art models released as open source, trained on a supercomputer of 4,000 A100s, some of the most cutting-edge AI chips in the world.

And it marks the next turning point in AI:

The age of open foundation models.

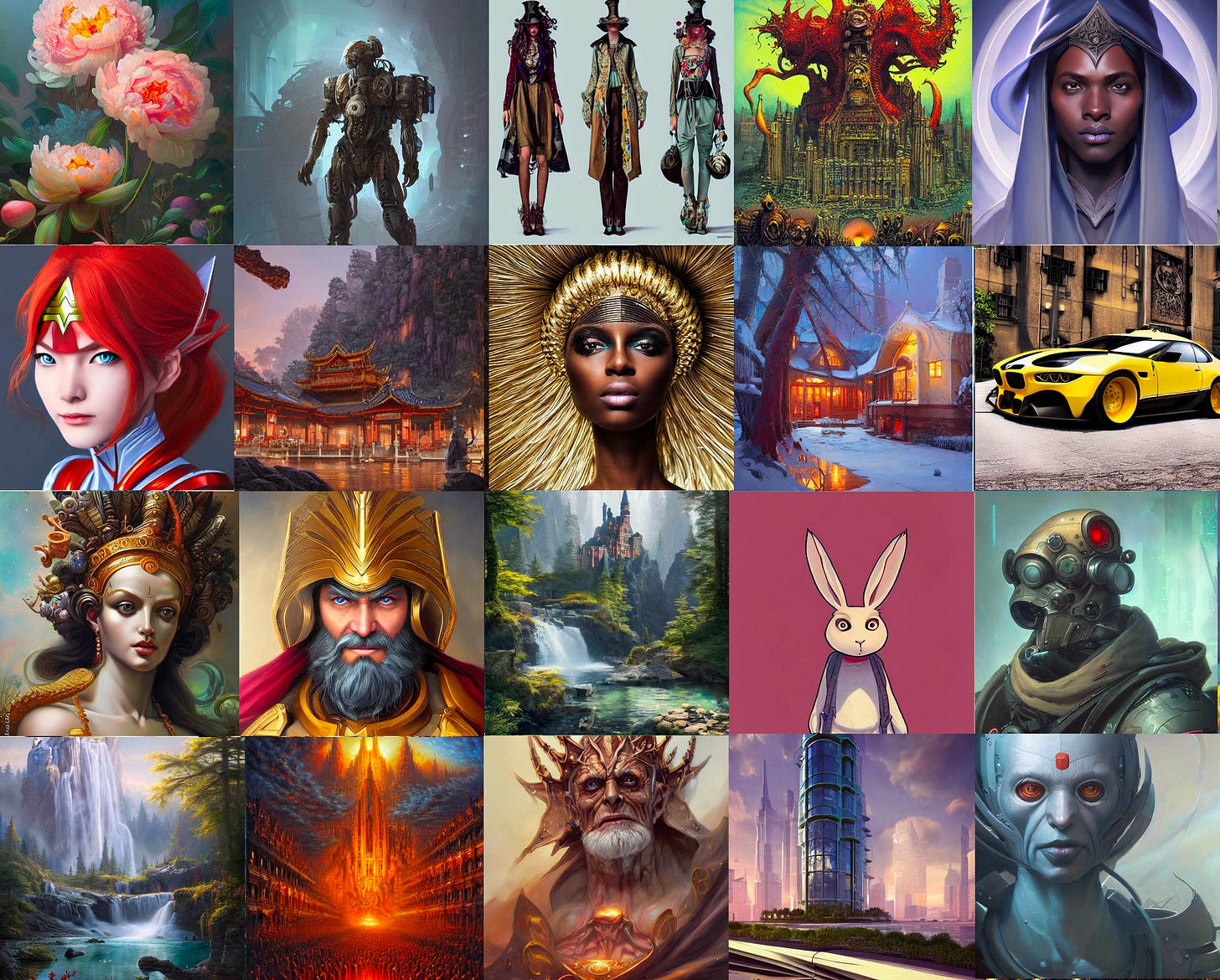

Now, instead of these powerful models hiding behind the walls of a small group of powerful organizations, we're putting state-of-the-art models into the hands of everyone. Stable Diffusion has already unleashed an unprecedented explosion of creativity, with new tools coming out nearly every day. There are so many tools and new business ideas that it's almost impossible to keep up with them all. The array of potentially game-changing business applications is amazing: prototypes of synthetic brain scan images that can drive medical research, on-demand interior design, incredibly powerful Hollywood-style film effects, seamless textures for video games, new kinds of rapid animation that can drive tremendous new streaming content, on-the-fly animated videos and books, concept art, plugins for Figma and Photoshop, and much more. All of that has happened in a single month.

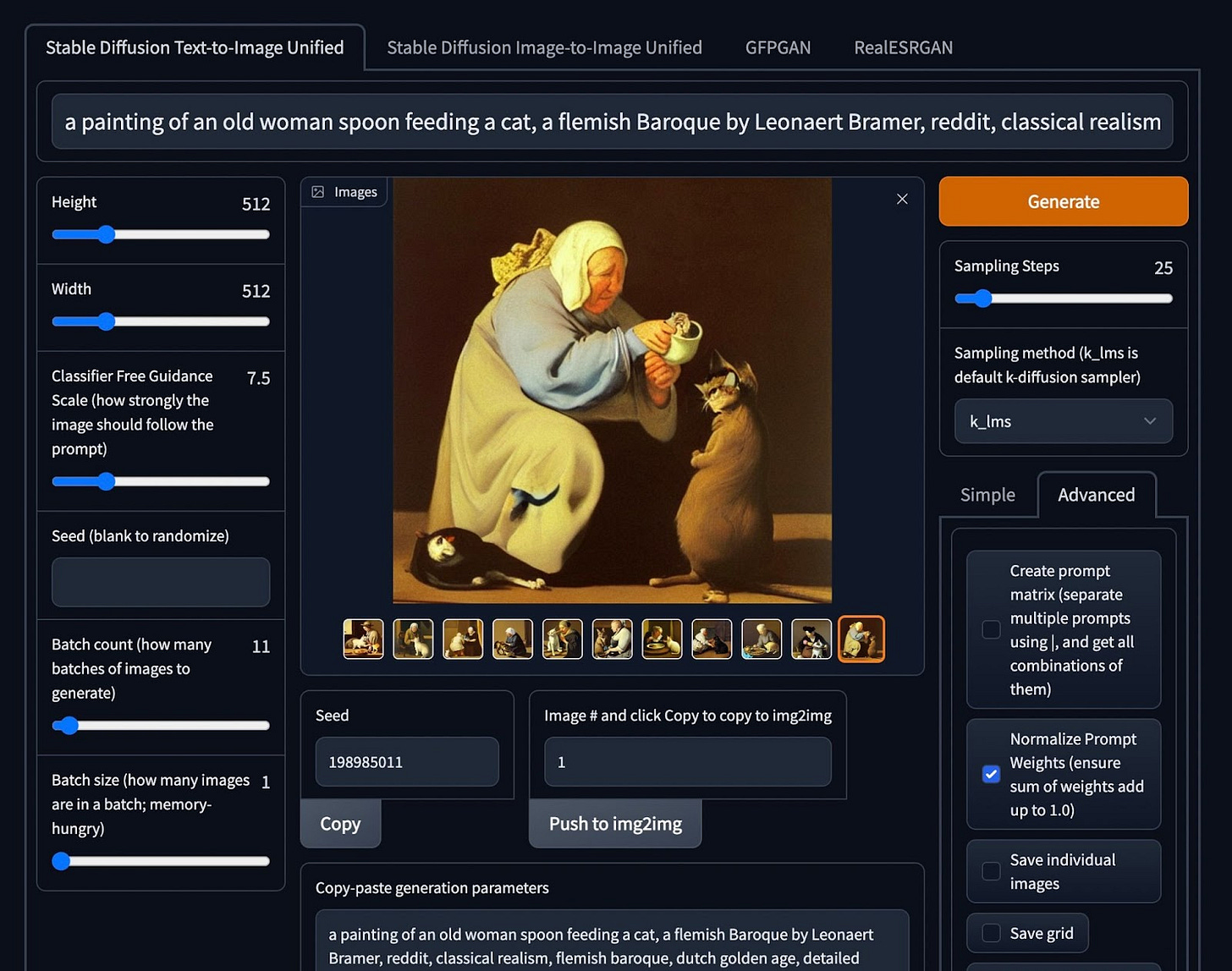

Just two weeks after the model came out, enterprising programmers had combined it with image-to-image generation, face smoothing and prompt splitting in one simple UI.

Fathers are turning simple children’s drawings into masterpieces in seconds to show those kids what’s possible if they keep drawing and honing their craft.

(Source)

Others are weaving Stable Diffusion together with video, combining it with other tools like EbSynth. True innovation comes from open source because no single company can imagine all the possibilities. Those only emerge once people can actually get their hands on a model, instead of only getting access to a version that's locked behind closed doors.

(Source)

That's just the tip of the iceberg. We're only a single month into the release and already we've seen this amazing reaction. What will we have six months or a year from now?

The Age of Ambient AI

At Stability we feel that AI will become truly transformative only when it radiates out to people everywhere. It's not interesting when one person or a few people have a smartphone. It gets interesting only when we've got a billion smartphones networked together.

It's time for us to think bigger, to imagine what we can do with these models on a much larger scale, to picture how we can weave foundation models into all kinds of software, all over the world, in a thousand ways.

When foundation models are everywhere, everything changes.

Imagine a world, not long from now, when Foundation Models as a Service (FMaaS) have surged into every aspect of our lives and revolutionized work and play.

Designers and animators are using them to generate thousands of potential concepts for the new movies and video games they're creating. Animators at Pixar, Marvel and Disney work with FMaaS companies to fine-tune models on their private datasets of brilliant concept art and suddenly find that the model helps them go in a totally different direction. That leads to their next movie topping the charts as the highest-grossing film of all time, only for them to top it again three years later with another man-machine hybrid.

Biotech companies search through massive databases of proteins and chemical interactions and quickly use a fine-tuned FM to design twenty potential drug candidates to fight a rare motor neuron disease that recently cropped up somewhere in the world.

A musician jams out a new tune and then asks the model to iterate on the chorus. The 17th version is awesome, so the musician plays it and makes a few tweaks to make it even catchier. It goes on to be a huge hit on SoundCloud.

Material scientists are designing new materials that make everything stronger and lighter, from skyscrapers that flex more easily to resist earthquakes, to electric bikes that are light enough to carry on your shoulder and fold up neatly to carry on the train.

Elite coders are simply telling the coding model what they want, and it's spitting out near-perfect Python code, while also recommending Go for several libraries because it will be faster and more secure. It automatically translates between the languages and tests the results. The coding model is paired with evolution through large models (ELM), which couples a large language model (LLM) with evolutionary search, and together they help the coder create brand new, never-before-thought-of code, even in a domain the model was never trained on, by iterating on concepts quickly.

Welcome to the age of ambient AI.

Content comes alive. It's interactive. Iterative. Evolving with us.

All of this will happen because of a vast global network of ambient AI models, a world where AI is everywhere and every device is waking up and getting smarter. Once we've industrialized intelligence, it will spark a revolution in how we work and play.

But for this to become a reality, the industry has to change now.

To start with, we've got to get more open.

The Era of Truly Open AI

At Stability we're putting the open back in AI.

We've focused on an open source strategy from the very start. That's a dramatically different approach from the one the industry has taken so far. While many of the most important and powerful tools in AI/ML, like PyTorch and TensorFlow, are open source, as we've already seen, all of the most powerful models are closed and proprietary. It's eerily similar to how the world worked when Linux first burst onto the scene decades ago.

When I first saw Linux in college in the 1990s, I knew I was seeing something totally new and different, something radical, wonderful and even dangerous. Dangerous because it held the power to upend the entire software ecosystem forever, and it did. The big closed source powerhouses felt threatened by it. That was back when Red Hat Linux was sold in boxes at Fry’s Electronics and when Steve Ballmer called Linux “communism” and “cancer.”

What if Steve Ballmer had won that fight and crippled Linux? Even Microsoft’s cloud is now primarily powered by Linux, so he would have crushed his own company’s future with short-sightedness and fear.

Today, open source is the default. Linux powers everything from the backend of the internet, to America’s and the world’s most powerful supercomputers, to your mobile phone, to the most cutting-edge artificial intelligence apps. It’s responsible for countless jobs. It powers radar systems and nuclear subs. Every major technology wave, whether that’s cloud, mobile or containers, has been built on open source. If you’re young and just getting started in tech, open source was always there, like a tree or a river. You’ve never lived without it. GitHub and development teams that span the world are the norm, not the exception.

Open is the default everywhere.

Except in AI/ML.

At Stability we feel that's got to change. It's not enough to open source the tools that build the models, it's the models that matter.

If the most powerful models are concentrated in the hands of small groups, we think that's a disaster for the world. Even the best and most creative companies can't see all the possible uses for their technology. No matter how hard a centralized group tries, it will never match the creativity of a decentralized group of people dreaming up things a smaller group couldn't imagine. That's the power of emergence, profiled by the brilliant and far-sighted author Steven Johnson in his book Emergence: The Connected Lives of Ants, Brains, Cities, and Software.

History is living proof of this truth. Take the transistor, maybe the most important invention of the modern world. It made microchips possible, and today microchips are in everything from your car, to your microwave, to the computer or phone you're reading this on right now.

The transistor came out of Bell Labs, one of the most creative companies in history. Profiled in Jon Gertner's fantastic book The Idea Factory, Bell Labs can be thought of as the first true think tank, whose benefits rippled out into adjacent domains all over the world. Many of the most important inventions of our time came out of Bell Labs teams, such as Claude Shannon's information theory, which underpins the communications infrastructure of the World Wide Web and intercontinental communications.

And yet even the great Bell Labs missed most of the eventual uses of the transistor. They mostly just wanted something more efficient than the vacuum tube to amplify electronic signals over long-distance phone lines. They never saw the microchip coming.

It was outside firms that first came up with the microprocessor and figured out how to scale it and grow it. Companies like Intel owe their entire lineage to the transistor and the work at Bell Labs, as do all the cell phone companies, computer companies and cloud companies of today.

At Stability, we trust in that infinite supply of creativity to come up with never before imagined ideas.

Punk Rock AI and the Critics Corner

Of course, we know the move to release Stable Diffusion wasn't without controversy.

Some people worry about ethical issues, deepfakes or issues of representation. We do too, which is why we worked with the wonderful Hugging Face team to release it under the revolutionary OpenRAIL license, which forbids using the model for illegal purposes or to hurt people.

People who violate that license should pay the price for their actions.

But we don’t tell kitchen knife manufacturers that they can’t release a knife unless they can assure us that nobody will ever use it to stab someone. The vast majority of people are good and will use it to cut vegetables and they should have the power to cut vegetables. These models should be in the hands of the many, not the few.

We also know that open source tools will evolve better mitigations for protecting people, as long as the community is allowed to get its hands on this tech and the tech is not crippled out of fear or legislated out of existence. That can happen when well-meaning legislators are misled by closed source AI companies that secretly want to keep the whole pie for themselves while pretending to democratize AI.

That’s why we’re about to sponsor a series of contests to come up with the best open source security tools for models, and we’re putting a $100,000 prize behind it, so stay tuned for announcements soon. Just as open source encryption is stronger than closed systems because more people can spot its weaknesses, the AI safety control tools evolved by the community will be much stronger and more robust than anything a single company can create.

We’ve also heard the concerns from artists, and we’re building a tool to let artists opt out of training. You spoke and we listened. Simple as that.

Of course, some of the controversy comes from fears of people losing their jobs or from the persistent narrative of AI as Frankenstein. Fear of AI is propped up by decades of sci-fi evil-AI-gone-wrong fantasies like HAL, the Terminator, and Ex Machina. It's also propped up by government reports written by bureaucrats using imaginary numbers about AI applications that don't actually exist yet. Every one of those stories and reports is basically nothing but a Frankenstein retread. I've admittedly adored many of them as a lover of sci-fi, but that's just what they are: stories.

In twenty years, we'll realize they had about as much predictive power as The Population Bomb, which predicted that hundreds of millions of people would starve to death in the 1970s and 1980s because we'd never find a way to feed everyone. Instead, we got the Green Revolution, and starvation fell to record lows in the decades that followed.

AI will create an eruption of brand new kinds of jobs. It's super easy to imagine all the jobs that get lost, but it's very hard for people to see all the jobs that new technologies create. How do you explain the work of a web designer to an 18th-century farmer worried about the plow? You can't, because it's built on the back of a chain of technologies from electricity, to wires, to computers and the Internet.

Sure, sometimes old jobs do disappear or change, but they’re replaced by an array of new jobs. We used to light the world by slaughtering huge numbers of sperm whales and harvesting the oil from their blubber and heads instead of using electric lights, but how many people today would argue for a return to whale oil lamps and candles?

When the camera burst on the scene in the 1800s, artists worried the end of art was coming fast. Baudelaire called photography the “refuge of failed painters with too little talent.” People worried cameras would destroy society, culture and women’s morals, along with other fears that look bizarre today. These worries always look strange in retrospect because life never plays out that way. We adapt. We change. We integrate. It’s what we do.

What happened with the camera instead? It liberated artists from a strict focus on realism and led to modern art movements like impressionism and cubism and abstract art.

In all fairness, the camera did end up replacing lots of portrait artists, but over time it led to an explosion of brand new jobs, everything from nature and wildlife photographers to film editors, cinematographers and more. It created entire new industries, like the movie business.

Oh and portrait artists are back in a big way now too. Check out this list of 8 portrait artists changing the way we think about portraits.

Of course, it doesn’t matter to some folks what I say here. Some folks just want to be upset and are determined to hate this technology at all costs. In five years, that will look about as absurd as hating Photoshop. AI is just another tool and a tool that will prove incredibly useful for a huge number of people.

Today, in a world where worrying over imaginary apocalypses is an international pastime, we're hearing that this time is really different.

It's not different.

Humans are remarkably adaptable creatures. We've always managed to change with technology, incorporating it into who we are and what we do and how we work, and we'll do it again this time. That's because technology doesn't exist outside of us; it's a part of us and who we are.

No, we will not all be living on the government dole of basic income, eking out a sad existence in our VR helmets while we live in the stacks of Ready Player One.

When it came to art, people worried that Photoshop and digital pens would destroy art. They said that art created with software wasn’t “real” art. Now it's all happening again: the gatekeeping, the anger over someone winning a digital art contest with Midjourney, the slandering of AI hybrid artists as fake artists, and the slandering of open source AI models to tar and feather them so that closed AI companies can keep firm control of the future. If you attack open source AI and demand centralized control, you’re ironically creating the very future you fear, one where a small group of mega tech companies controls what you can and can’t do with technology. Don’t fall for the lie.

Right before the official release of Stable Diffusion, I saw all those fears coming when I wrote about the fantastic new world of AI art generators and why their critics get them all wrong. But let me summarize it here as clearly and simply as possible:

At Stability, we're not building tools to replace artists. We love artists.

We're building tools for artists.

I want to protect artists because I'm an artist. I've made much of my living from my writing, in addition to my work in tech.

But my first love was really drawing. As a kid, it was my whole world, a place I could escape and create the landscape of my dreams. I loved drawing monsters and aliens and spaceships, but when I was a kid in the 1980s and early 90s, there was no path to a career in that kind of drawing except for a very small group of folks, like Dungeons and Dragons pioneer Larry Elmore or early fantasy artists like Boris Vallejo and Julie Bell. Back then you either went into fine art or worked in advertising, and I decided I'd rather never draw again than work in advertising. So I stopped drawing.

I didn't trust the universe to make a path for me. I didn't see the rise of the internet and the absolute explosion of sci-fi and fantasy art and video games and blockbuster movies. There's an alternative universe version of me where I kept drawing and happily made a living drawing monsters and sci-fi battle armor and where I'm rapidly incorporating new tools like Wacom screens and AI art generators as each new technology comes to pass.

Eventually I learned to paint with words, becoming an author, among my many other lifelong pursuits. There's nothing I love more than writing every day.

So I get it. I know what it means to be an artist and how important it is to my identity and how much joy I get from sitting down and writing, hours passing in what feels like seconds.

I won’t allow us to build tools to replace artists. It’s as simple as that, because it matters to me. Instead, we're building co-creative, collaborative tools for artists that will unleash a brand new world of creativity. As Peter Thiel writes in Zero to One, “the most valuable companies in the future won’t ask what problems can be solved with computers alone. Instead, they’ll ask: how can computers help humans solve hard problems?”

We're already seeing artists working with our tools. Stable Diffusion got integrated into Figma, Photoshop and so many other tools that I can't even keep track of them all at this point. That's the power of openness. We're building tools that will let concept designers swap out helmets and battle armor and faces. Fashion designers will iterate and co-create together with Stable Diffusion, making new gloves and hats and pants and suits and shoes.

Art teams will pull up Stable Diffusion 3D tools and Painter and custom in-house tools. You'll find it in animation studios and movie studios and more. And that's just the beginning.

And to all the folks who think that because “anyone can do it,” companies will just replace all the artists with low-paid text prompters: that's way off base. Sure, anyone can have fun creating new images with words, but it takes an artist's sensibility to get the best out of these tools, a deep understanding of composition, theme, proportions and more. Do you think anyone but an awesome costume designer could come up with the look and feel of the incredible new armor and outfits in Amazon's The Lord of the Rings: The Rings of Power? You need someone who knows history and the look of high fantasy. We'll see artists rapidly iterating with these tools and painting over the AI images to add the right feeling, scale and sensibility.

Take a look at this workflow from an artist in this Reddit thread. It's the kind of advanced workflow we'll see more and more as we weave Stable Diffusion into pro tools everywhere:

First, the artist does a quick sketch:

Then the artist uses Stable Diffusion and img2img with a prompt to get a different version.

Now the artist paints over the new image in Photoshop to give it more feeling and depth.

Finally, the artist uses SD and img2img again to generate variants and then iterate more in an ongoing creative loop.

If you think anyone can do that workflow, you’re not looking closely enough. Saying “anyone can do it” is really just another fear in disguise: that AI tools will “deskill” art so wages drop, which is itself a variation of the fear that AI will destroy all the jobs.

Let's be very clear:

These tools actually require a skilled artist.

They won’t replace artists.

They’re going to enhance the artistic workflow in wonderful new ways.

So get excited. Get involved. Join us. Work with us. Art and artists aren't going anywhere. Instead, we'll see the exact opposite as an eruption of brand new kinds of art pours forth, the same way new types of paint unleashed different kinds of painting, or new kinds of metal gave us different sculptures, or the way Photoshop gave us more fluid graphic design, or digital film editing gave us a thousand new ways to change the look and feel of film, or cameras gave us new ways of seeing the world, or the web gave us a new way to share our work.

Old tech companies evolved to command our attention non-stop, to make everything compulsively addictive with constant consumption of static content. You don't even know why you're checking your smartphone, it just seems to appear in your hand sometimes. It's there when you're happy, sad, depressed, angry.

We're going to build a world where living, active, intelligent content rules, a digital world that’s alive, that you can interact with, content that's co-creative, that's yours.

Join us and you won't just surf the web of the future, passively consuming content.

You'll create it.

######################################################################

I've been very excited about this project! I'm thrilled that we've arrived at the point where this technology can produce remarkable results on common consumer hardware, and that it's no longer only a tool for entities that can afford their own massive data centers.

You cite Linux here as a success story for open source. Linux is made available under the GPL, as are many of the most popular open source creative applications such as Blender, GIMP, Inkscape, and Krita.

Stable Diffusion's "revolutionary OpenRAIL license," however, is incompatible with the GPL. I Am Not A Lawyer, but I have confirmed this with the Open RAIL License contact. This leads to some significant friction when trying to make use of these new technologies: https://huggingface.co/spaces/CompVis/stable-diffusion-license/discussions/3

How do you recommend we go about integrating new models like Stable Diffusion with the existing open source ecosystem?