The Fantastic New World of AI Art Generators and Why Their Critics Get It All Wrong

AI Art Generators are Here and They're Poised to Change the Art World Forever but an Increasingly Bizarre and Strange Range of Reactions from the General Public Threatens AI Before it Really Blossoms

In case you haven't noticed, AI art generators have gotten incredibly strong lately.

OpenAI's DALLE-2 is taking the internet by storm, producing astonishing images in seconds from just text prompts. Others are racing to craft their own neural art dreamers. I recently got access to Stability AI's art model training on a supercomputer of 1000s of NVIDIA A100s, the most coveted and powerful AI chips in the world, and I’m in love with it. In short, it’s incredible. Even now, in its partially trained state, the model delivers some mind-bendingly awesome images.

It does some things tremendously well, like portraits, landscapes, product art, and lots of fantasy and sci-fi art styles. It can create some breathtaking pieces that are nothing short of amazing. The machine understands lighting and shape and fine-grained details. You can check out some of my favorite generated images below, most created through my obsessive prompting over weeks, and some by others on the test server.

(Source: Collage created and selected by Author from images generated by Stable Diffusion)

Of course, like everyone using DALLE or Midjourney or any art generator, I'm cherry picking my favorite ones. It's still an art to craft the right prompt to get what you want and sometimes the AI generates total nonsense, where it has no sense of body continuity while absolutely mangling hands, fingers and composition.

It's a bit like querying a search engine back in the old days before Google served the web up on a silver platter. You really had to work at it to not get crap. That's where these tools are now, getting better, but still struggling at times to really understand what someone wants.

Some of my favorite fails:

Notice how it struggles badly with folded hands or with placing people in space in a way that makes any kind of sense. Sometimes it has no idea what a body is and just generates muscles all over the place, reminding me of the end of Akira where Tetsuo's body goes crazy.

And poor Kermit has seen better days. He's sporting a third eye. Maybe he got dosed with too much nuclear radiation like Blinky in The Simpsons.

I've also discovered the bot has some interesting quirks. Clearly the machine fell madly in love with the divine Gal Gadot (who hasn't?) and it crafts her with sumptuously lavish detail in a range of styles.

Of course, if you've got an eagle eye and you look closely at that exquisite portrait of her with a lace headdress on the top left, you can see the pinky finger is glitchy and not quite right, despite everything else in the image looking magnificent. But as the bot learns, like a child taking its first steps, it gets better and better over time. I'll know it's achieved its true potential when it gets hands and fingers right, those nimble miracles of evolution.

But despite all these breakthroughs, AI beauty is often in the eye of the beholder. I've noticed an increasingly bizarre and strange range of reactions from the general public and even tech people as AI gets stronger and stronger. This powerful technology inspires everything from rapturous, passionate predictions of the coming techno-Singularity, to fear of machines destroying all the jobs, to debates about AI sentience. Last month, a former Google engineer named Blake Lemoine stole headlines by claiming Google’s LaMDA is sentient (spoiler alert: it's not) and he even got it a lawyer.

What we haven't seen is anyone looking at the issues through a calm and nuanced lens. That's not surprising. Calm and nuanced doesn't sell magazines or generate clicks, but sensational headlines like Engadget's "Is DALLE-2's Art Borrowed or Stolen?" do.

But what are the real issues? Are there any? Or are people just knee-jerk reacting to the zeitgeist of tech fear? People love to hate Bezos and big tech and absurdly enough they love to complain about tech on the very sites they’re denouncing as they angrily post another rant on Twitter.

Over time, as tech has grown and woven itself into every aspect of our lives, it's created a backlash. Only a few decades ago, the Internet was a weird, niche technology. Now we do everything on it, from ordering taxis and flights, to booking flats, to streaming music, to watching movies, to chattering endlessly with each other. We've built up technology into a modern powerhouse and it seemed to happen overnight even though it took decades. It's a peculiar aspect of humanity that we love creating heroes and we love tearing them down too.

All these visceral reactions obscure real potential problems and risk setting AI back decades as people react in absurd ways and demand laws and fixes for imaginary problems, while ignoring real ones.

So let's dive in and take a look at the politics, hopes, fears, dreams and legal ramifications of these incredible new art technologies.

The Hopes, Fears and Dreams of Today and Tomorrow

Let's start with the elephant in the room:

Are these new tools stealing or borrowing art?

The short answer is simple: No.

The long answer is a bit more complex, but first we have to understand where the idea that DALLE or Midjourney are ripping off artists comes from in the first place. And where it comes from is a messy tangle of fears and misunderstandings.

The first misconception is that these bots are simply copy-pastas. In other words, that they're just copying and pasting images. Computer scientists have studied the problem extensively and developed ways to test the novelty and linguistic uniqueness of Large Language Models (LLMs), checking whether they craft new text or just clone what they learned. You can check out one of the studies from DeepAI here but the big bold conclusion couldn't be more clear:

"Neural language models do not simply memorize; instead they use productive processes that allow them to combine familiar parts in novel ways."

To be fair, OpenAI found early versions of their model were capable of "image regurgitation" aka spitting out an exact copy of a learned image. The models did that less than 1% of the time but they wanted to push it to 0% and they found effective ways to mitigate the problem. After looking at the data they realized the model was only capable of spitting out an image copy in a few edge cases. First, if the training set had low quality, simple vector art that was easy to memorize. Second, if it had 100s of duplicate pictures of the same stock photo, like a clock that was the same image with a different time. They fixed it by removing low quality images and duplicates, pushing image regurgitation to effectively zero. That doesn't mean it's impossible but it's really, really unlikely, like DNA churning out your doppelganger after trillions of iterations of people on the planet.
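To make the duplicate problem concrete, here's a rough sketch of how near-duplicate images can be filtered out of a training set. To be clear, this is not OpenAI's actual pipeline. It's a toy "average hash" comparison that I'm using as a stand-in, and the function names, the tiny 4x4 grayscale "images" and the `max_distance` threshold are all made up for illustration. It also assumes every image has already been shrunk to the same small size.

```python
from typing import List, Sequence

# A grayscale image as rows of pixel values (0-255). All images are assumed
# to be pre-shrunk to the same small size, e.g. 4x4 or 8x8.
Image = Sequence[Sequence[int]]


def average_hash(img: Image) -> int:
    """Return one bit per pixel: 1 if the pixel is brighter than the image mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def drop_near_duplicates(images: List[Image], max_distance: int = 2) -> List[int]:
    """Return the indices of images to keep, skipping near-copies of kept ones."""
    kept_hashes: List[int] = []
    kept_indices: List[int] = []
    for i, img in enumerate(images):
        h = average_hash(img)
        # Keep the image only if it is far enough from everything kept so far.
        if all(hamming(h, k) > max_distance for k in kept_hashes):
            kept_hashes.append(h)
            kept_indices.append(i)
    return kept_indices


# Two "clock" photos that differ by a single pixel, plus an unrelated image.
clock_a = [[0, 0, 255, 255], [0, 0, 255, 255], [255, 255, 0, 0], [255, 255, 0, 0]]
clock_b = [[255, 0, 255, 255], [0, 0, 255, 255], [255, 255, 0, 0], [255, 255, 0, 0]]
landscape = [[255] * 4, [255] * 4, [0] * 4, [0] * 4]

kept = drop_near_duplicates([clock_a, clock_b, landscape])
print(kept)  # [0, 2]
```

The second clock gets dropped because its hash is only one bit away from the first, while the unrelated image survives. A real training set would need something far more robust, but the idea is the same: fewer near-identical copies means fewer chances for the model to memorize any one picture.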

Still, as we've learned the hard way over the last few years, facts don't matter all that much to people. We live in a world of alternative facts where everything someone doesn't like or understand or believe is "fake news." Visceral gut reactions matter and people look at an image, form an opinion in a split second and no amount of correct information can change their mind. When photographer Michael Green posted that he'd generated some incredible photos of Mexican women in a range of well-known photographers' styles it generated some raging reactions:

This dialogue back and forth neatly demonstrates common misunderstandings about AI:

In fact, I am suggesting exactly that. Just as DNA allows biological life to create an infinite variety of faces, so does math.

And never mind the fact that many of these artists never took a picture of a Mexican woman as part of their official work in their entire lives. Take someone like Diane Arbus, a fantastic and enduring photographer of the down and out and marginalized. I found one possible Mexican woman in her work (there may be others) but that wouldn't be much of anything for an AI to work with. On the other hand Arbus was a pioneer with trans folks, gay people, folks with dwarfism, people with Down syndrome, outsiders, rebels, tattoo lovers and more.

There's also the simple fact that none of these Mexican women exist. Not one of them. Their faces aren't stolen. They aren't even remotely stolen. That's because a face is one of the most feature-rich training grounds for AI. How could it not be when our faces evolved to be evocative and provocative and distinctive? They're the most recognizable shapes on the human body by a huge margin and they're what we think of when we think of a person. Evolution gave us a rich variety of characteristics for our biological neural nets to respond to and they give algorithms studying those characteristics just as rich a playground for variety and play. A well trained visual recognition system could probably generate as wide a range of faces as evolution in the biological world.

I tried the same experiment as photographer Green with Stability AI's bot. Here are some Mexican women, who don't exist, in the style of Diane Arbus and Brian Ingram, along with an Indian and a Japanese woman who don't exist either.

It's really astonishing how well the machine whips up brand new people in seconds and how well it understands the deeper characteristics of these amazing artists' styles.

But let's be honest, there's something unnerving about it too.

I understand the anxiety some folks feel about it. There's something deeply unsettling about math generating an infinite variety of us.

We don't like to think of math and deterministic forces behind our lives because it brings up the uncomfortable feelings that maybe we don't have full control over our choices. Maybe something more mechanistic and clockwork-like lies behind our decisions and feelings and beliefs?

In a sense we're algorithms too, some of the most advanced algorithms ever dreamed up by the Universe (or mindless evolution with no prime mover, the jury is still out on that one). And that's all right. It's just a matter of getting comfortable with the fact that life is both magical and deterministic at the same time.

But AI art sparks other deep fears too. There's a growing fear of AI training on big datasets where the builders didn't get the consent of every single image owner in their archive. This kind of thinking is deeply misguided and it reminds me of early internet critics who wanted to force people to get the permission of anyone they linked to. Imagine I had to go get the permission of Time magazine to link to them in this article, or Disney, or a random blog post with someone's information I couldn't find. It would turn all of us into one-person IP request departments. Would people even respond? Would they care? What if they were getting millions of requests per day? It wouldn't scale. And what a colossal waste of time and creativity!

If you think that would have killed the early Internet in the crib, you're right, and people who want companies and researchers to go out and get permission to build training sets from web data are advocating for the same kind of technology-killing rules. It could kill AI before it really develops into something truly incredible and beneficial, cutting off breakthroughs in science and art and mathematics itself.

We already have some good legal precedent for training sets under US law though it's, admittedly, not perfect and will likely see some new precedent setting cases in the future. Text and Data Mining, or TDM, is covered under Fair Use, which allows people to scan and copy things for the purpose of allowing access or for research purposes.

Of course, countries could yield to these calls to restrict access but they'll only set their populations and local companies back tremendously as other societies race ahead of them in one of the most crucial technologies of the next decade. It's a recipe for disaster. Expect most countries to loosen TDM laws, not tighten them, for the real fear of killing an industry that also has serious implications beyond art, like drug discovery, defense, economics and agriculture to name a few.

The idea that we should restrict AI from learning from life is related to the "AI is stealing" reaction or the "math at the root of all things makes my skin crawl" sensation. It's not rational, it's visceral and it's also kind of strange because don't people learn from the world around them?

Engadget author, Daniel Cooper, writes "These systems did not, however, develop an eye for a good picture in a vacuum, and each GAI has to be trained."

Well people don't learn in a vacuum either.

Don't people study the artists that came before them? If not then someone better stop all those evil people who wrote books to train artists on the drawings and paintings of more famous artists.

I loved Drawing Lessons from the Great Masters as a young man. My art teacher in high school did too and he accused me of stealing his copy of the book when it went missing! Unfortunately, the real thieves got away because my mother bought my copy years before. Some other art student who didn't have such an awesome mom probably snatched the teacher's precious book because they wanted to learn to draw too!

I know I sat in museums and sketched old master paintings to learn their ancient secrets. I did the same when I got comics and Heavy Metal magazine and the Sports Illustrated Swimsuit issue when I was a young artist trying to figure out how to make my marks on the page better and also because I liked looking at hot women too.

Everyone learns from the world around them, in all disciplines. We learn in school and from our parents and by watching others. Do basketball players call up Michael Jordan to get permission to watch his games and learn his moves? Do quarterbacks get permission from Tom Brady to learn how he throws a football?

AI learns just like we do, from mimicry and studying the world. We just do it with a lot less data. We can learn the basics of throwing a ball from our fathers in the backyard in a few weeks. But to get really good we have to practice a lot and study big league players and work at it, so maybe we do need a lot more data too, just like machine learning systems. AI needs a lot of data and it learns compact representations over time. The simple fact is we learn from the world and so do AIs. Throwing a wrench in that is just foolish, reactionary and tremendously misguided.

Breakin' Rocks in the Hot Sun, I Fought the Law and the Law Won

Mr Green's thread does bring up a thornier issue.

Will these kinds of technologies rob artists of revenue they’d otherwise make?

If someone can just generate a picture in Diane Arbus's style then aren't they taking work away from her? Setting aside that Arbus is sadly no longer with us, the short answer is simple once again:

No.

The long answer is, once again, complex.

To start with, people calling for legal solutions to this problem seem to be missing the fact that we have a lot of precedent in law already. Under US law, you can't copyright a style and other countries take a strikingly similar stance. The Arts Law blog, writing about an Australian case, puts it like this:

"In a recent decision of the Full Federal Court, the Court reaffirmed the fundamental legal principle that copyright does not protect ideas and concepts but only the particular form in which they are expressed.[2] The effect of this principle is that you cannot copyright a style or technique. Copyright only protects you from someone else reproducing one of your actual artworks – not from someone else coming up with their own work in the same style."

That means a photographer or artist can't sue Time magazine when they hire a junior photographer who's learned to mimic a famous photographer's style. Of course, magazines and advertising folks do this all the time. They find someone who can do it well enough and hire them to take the pictures.

Magazines hire junior photographers because nobody can afford a top artist for every two-bit story. But sometimes they still hire the real artists too, especially when they want to make a big advertising splash or create buzz. We have a huge collection of old photographers' work because they were, well, working and not out of business from copycats who mimicked their ideas.

Over time artists find new ways to sell too. I've made money from Patreon and Substack while basically giving away most of my writing for free for years. That's different from how artists did it in the past but that's the nature of life and business. It changes. What worked yesterday doesn't work today. Some folks are kind enough to support me in my work because they like what I do and I love to give my work away, rather than selling each individual story to magazines, though I do that too.

Of course, we have other precedents as well. You can't just take one of Diane Arbus' real photos and use it for commercial purposes without paying her estate. Since AI is not a copy-pasta of her work and it's not just cloning it with a slightly different angle, that's not a problem either. We're talking about an apples to apples comparison here. Artists can and will still get paid for their awesome work, as they should. I like to get paid and you do too.

The same goes for people's likeness. Just because I can generate an infinite number of Gal Gadots doesn't mean I can take her picture and slap it on my advert for sneakers or for the local dry cleaner. I crafted the picture below with a combo of AI generated art and crappy Photoshopping for journalistic Fair Use purposes to make the point.

All this goes back to people's revulsion to determinism and math at the root of life. We don't like that people's style can be boiled down to math. People's styles are a unique and idiosyncratic thing that develop from a complex evolution of nature and the make-up of each individual. Setting aside that most of our styles aren't original at all, since most of us are not groundbreaking new artists, we still develop a sense of self from our style, no matter if we're amazing or middle of the road. The things we see and experience, the way we feel, what happens to us and where we grew up are just some of the billions of inputs that drive a person to create something new. Styles are an expression of an individual and we don't like to think that something similar to an algorithm is behind it all.

But in many ways we are an algorithm and an awesome one at that.

An AI learns from billions of inputs and, come to think of it, we do too. We just learn from a lot of different things, like our experiences and emotions, rather than 5000 pictures of a style. We may learn the basics fast, but our style develops after decades of hard work so in a sense we need a lot of data too, it just comes from a different place. It took me a decade of amateur writing to learn how to write and another decade of writing professionally to develop my own style. But like it or not, I'm just a biological algorithm, studying and learning from the world and remixing it through my own strangely shaped and fractured lens and I'm okay with that because I am still me no matter what.

What about AI and big tech corporations owning all the art we create with these new bots?

OpenAI is giving people full commercial rights to their work now, but when they launched in alpha they owned it all, and the terms could change again. We could get to a point where a few giant tech companies become the go-to art generators and then rug pull us all so they own everything. That's a real fear and one that is best mitigated with open art generators like the one from Stability, which wants to open source the models. That makes it so big companies can't take these things away from us.

The good news is that companies can't retroactively take away rights they've already granted, so use services that give you commercial rights to the work you generate. Once that right is granted it can't be pulled back later. If I sell my story to Time magazine I can't decide to change the rules on them tomorrow and take it back. It's still theirs to publish.

Of course, what full rights means in this case is still circumscribed by existing law. I may be able to generate a funny meme of Darth Vader but I can't slap Vader on my chicken noodle soup ad and not expect Disney to come knocking.

Cooper's Engadget article worries people might not know the full extent of the law. Just because OpenAI gave them "full rights" to the image they generated doesn't mean they can do whatever they want with it. They could still get themselves in a world of trouble thinking they own a likeness to Darth Vader when they don't.

Once again there is precedent in the law for this too:

Ignorance of the law is not a defense against the law.

You can't claim you didn't know it was illegal to murder someone and get off scot-free after you mowed someone down in cold blood. Just because people think they own the Vader making cookies picture they generated doesn't mean they can now use it to sell their artisan cookie creations.

Just because a group of dudes raised money to buy a Dune book without understanding IP law doesn’t mean they actually owned the rights to Dune, as they learned the hard way.

Diversity and the Full Range of Possibilities

What about other issues like representation and diversity?

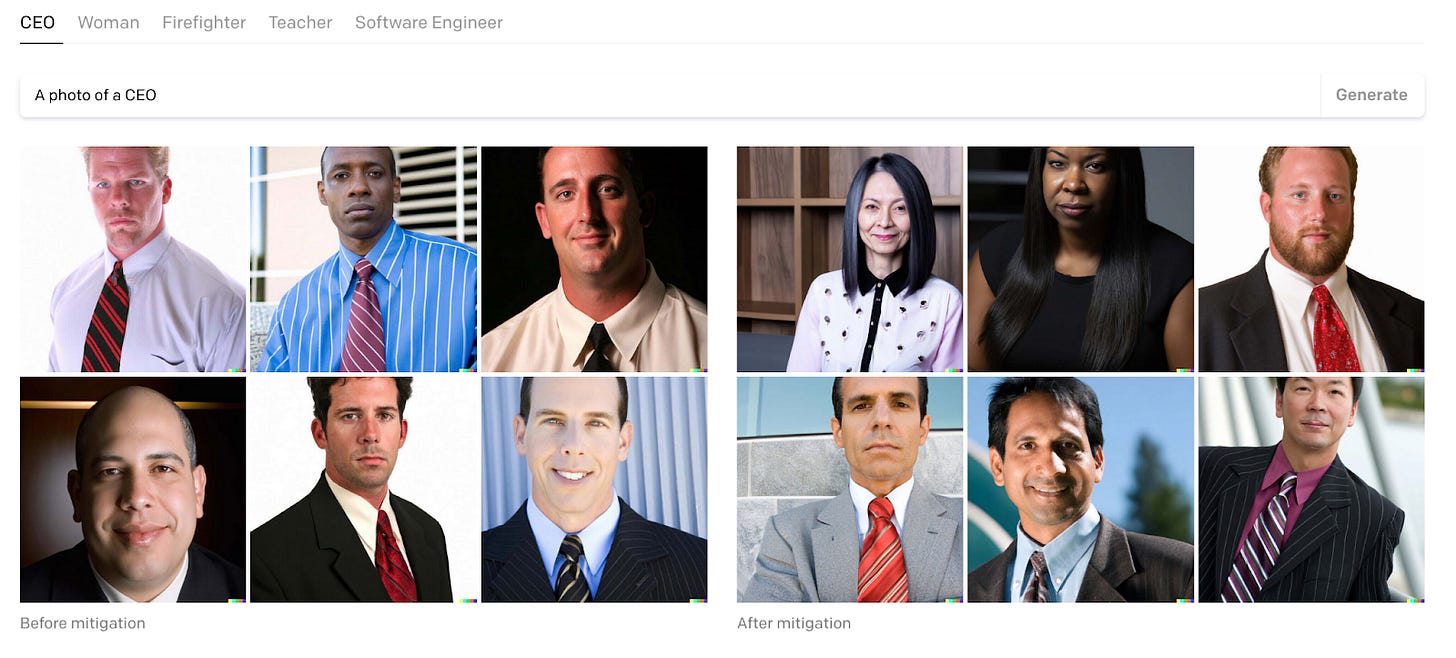

OpenAI dealt with it by intercepting prompts to add diversity to outputs.

(Source: OpenAI DALLE-2 Blog on Reducing Bias)

That's had some strange side effects, with Reddit users showing examples where padding prompts creates some unwanted results, like diverse Sumo wrestlers or non-Italian plumbers for "Mario as a real person".

The problem doesn't really seem to be the bots though. Bots reflect back the dominant images they see in the world, so if 79% of flight attendants are women and every ad shows a female flight attendant that's what you'll get. However, while AI has long had diversity issues with facial recognition, art bots are perfectly capable of generating a wide range of people and faces.

It seems that there are much simpler fixes than padding prompts too. People can add whatever gender, ethnicity or whatever else they like to the prompt and get precisely what they want. That's the beauty of text prompts.

Occam's Razor applies here. Simpler is better.

If people want an African American 747 pilot all they have to do is ask.

"a portrait of a [ETHNICITY] [GENDER] 747 pilot"

Twitter user @turbulentdetermism mitigated the problem on DALLE before OpenAI did.

The reverse is also true. OpenAI could leave the mitigated prompts as is and let users type "white male 747 pilot" if that’s what they want.
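If you want to see how little it takes, here's a minimal sketch of filling in that prompt template for a few combinations. The `build_prompts` helper and the example values are my own made-up illustration, not part of any art generator's API. All it does is produce the text you'd paste into the prompt box.

```python
from itertools import product
from typing import Iterable, List

# The template from above, with an extra slot so "a" becomes "an" when needed.
TEMPLATE = "a portrait of {article} {ethnicity} {gender} 747 pilot"


def build_prompts(ethnicities: Iterable[str], genders: Iterable[str]) -> List[str]:
    """Return one prompt string for every ethnicity and gender combination."""
    prompts = []
    for ethnicity, gender in product(ethnicities, genders):
        # Pick the article based on the first letter of the ethnicity.
        article = "an" if ethnicity[0].lower() in "aeiou" else "a"
        prompts.append(
            TEMPLATE.format(article=article, ethnicity=ethnicity, gender=gender)
        )
    return prompts


prompts = build_prompts(["African American", "Japanese"], ["female", "male"])
for prompt in prompts:
    print(prompt)
```

That prints four prompts, starting with "a portrait of an African American female 747 pilot". The user stays in charge of what they get, which is the whole point.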

In my own experiments and the experiments of many, many others on the Stability AI bot, I found it tremendously simple to generate a wide range of diverse images for any subject: an African American Jesus and Virgin Mary, famous Bollywood celebs, African fashion models and cyberpunk queens, Indian period drama anime, a male Eskimo, a Japanese male flight attendant and more.

As usual, it's not machines that are the problem in the world, it's people. These AIs aren't sentient. They do exactly what we tell them to do. I've always said that I don't worry about sentient AI rising up and taking over, I worry about narrow AI in the hands of stupid, ignorant, angry, selfish people. We don't need any help being jerks, we've been doing that since the dawn of time.

Too often we blame technology for society's woes today but Hitler and Stalin didn't need social media to whip up people into a frenzy and unleash a whirlwind of genocide.

Humans are the real monsters.

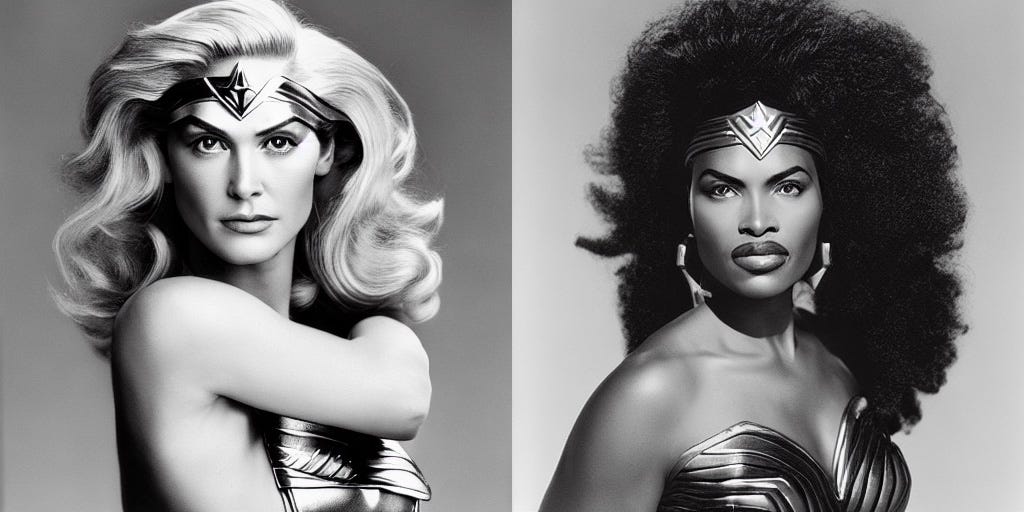

Here are two images of two different made-up women as Wonder Woman, with two different ethnicities. The kick-ass African Wonder Woman is one of the coolest images that I've generated so far and one of my favorites.

Deep Fakes, War, Dual Use Tech and Magical Thinking

So what about the problem of deep fakes?

There's no doubt that someone generating fake images of someone and whipping up a frenzy on social media is a big, big problem. There's a reason that OpenAI restricts photo-realistic images of celebrities and politicians at the moment.

But that can't and won't stop the problem. The cat is already out of the bag on this one unfortunately.

You don't need powerful image diffusion foundational models to do it as it's already being done with small GANs and more. We have much smaller models that can generate photorealistic images with ease and it's not hard to find celebrity porn deep fakes with a little Googling. In other words, these models and how to make them are already out there.

Of course, those models are also well within the capabilities of nation-states, Advanced Persistent Threats (APTs), aka government hacking groups that can cause massive mayhem, and other nasty actors who want to cause trouble and pain for the world. Believe me, these groups aren't sitting around on the OpenAI beta waiting list to make their dreams of political horror a reality. If they want to generate a picture of the President buck naked with an oiled up farm animal they can do it right now.

By the way, that's already happening without DALLE and art bots. Deep fake propaganda assaults are in use right now in the Russian invasion of Ukraine with both sides dueling each other with fake videos and images. Modern warfare is fought in cyberspace and the real world now and that's only going to accelerate.

(Source: BBC)

The worry is that art bots will put these capabilities in the hands of more people and that's a real concern that the community needs to work on and find appropriate mitigations for and soon. These are the kinds of concerns that get hidden away by more mundane and absurd worries and I worry about it too. I don't have a great answer but the community is looking at it closely and necessity is the mother of invention. My sense is that there will be a way to embed limiters in neural networks that make it harder to do bad things but nothing will ever really fix this problem.

Unfortunately, the reality is that we won't find a way to lock in only good uses of technology. It's a pipe dream and cuts against the reality of life. Everything in the world exists on a spectrum from light to dark. Some things are more on one side but anything can be used for good or evil. A gun may sit on the side of the dark but I can still use it to hunt and feed my family. A lamp in my house sits on the side of the light but I could still hit someone over the head with it.

The reality is that AI is a dual use technology just like every other technology or tool ever invented. We won't fix that with magical thinking or by just restricting it in the fear that the bad guys could use it. They will use it. Expect it. Get comfortable with the risk because life is risky.

A knife can cut vegetables or stab someone and we don't demand that knife manufacturers find a way to mitigate any possibility someone will get stabbed.

Oh No, We'll Put All The Artists Out of Work Forever (No, We Won't)

And what about the last big fear? Will AI put artists out of work?

This is one of the most deeply rooted fears about AI. It's also one of the most overblown and hyperbolic stories and it simply won’t die. Just like the weaving loom didn't kill all the rug making jobs, AI won't put all the artists out of business.

The problem is, it's much easier to see the jobs we’ll lose than the ones AI will create. It’s hard to see what comes around the corner. You can’t explain a web programmer job to an 18th century farmer because he has no context for it. The Internet didn’t exist and the Internet is built on a chain of inventions that came before it, like computers, the transistor, electricity manipulation, software and more. Without the Internet there is no web programmer and no way to imagine that chain of inventions and what they mean for the world.

Rather than putting artists out of work, I already see artists working with these tools closely. Many amazing artists are already in the Stability alpha server playing with it in private rooms in fantastic ways.

I see more and more possibilities on the horizon. They’ll be able to rough out a sketch and have the AI rapidly iterate on positions for it with inpainting and outpainting.

Here’s a prototype of someone building that kind of interface already.

They’ll be able to upload their own images and extend them or highlight parts to change. Imagine concept artists uploading their amazing concept art and then highlighting their battle armor helmet and having the AI whip through dozens of variations that might be better or just give them new ideas and take them in new directions.

The AI bots are stunning and they can produce a lot of great work but they still require a lot of pushing and pulling to get right. There's an art to generating art. Artists are already figuring it out. They have to work with the tool, using it to their advantage. It's an iterative back and forth process, captured in this thread where artist Valentine Kozin plays with a combo of DALLE, Photoshop and his own ideas.

He figured out it's better to focus on smaller parts of the image and iterate there. Other artists I follow have already learned the same. This video shows the process in time-lapse but I captured a stream of screenshots to show you what he's talking about:

It won't be long before Marvel, Pixar, Disney and studios everywhere are using them to augment their artists, not fire them.

Mr Kozin comes to the same conclusion:

He's figured out what people eventually figure out about every new tech, that we work better with machines, aka "ai+artist > ai alone."

Artists who really know their craft are something special too and it's hard to get a text prompt to give you a true masterpiece that understands dynamism and flow and angle and emotion. I recently discovered the wonderful concept artist, Anna Podedworna, out of Poland, who works at Riot Games and CD Projekt RED.

Check out this amazing drawing of a leaping werewolf below. Notice the masterful angle that makes you feel like the wolf is over you and jumping right off the screen. See the way she frames it with the background curving around the monster and light haloing it so all your attention focuses exactly where she wants you to focus.

(Source: Anna Podedworna, ArtStation, (C) Wizards of the Coast)

In short, it's incredible.

An artist like this working with DALLE or Stable Diffusion could do things you and I with our sorry little prompts could never imagine.

It's already happening. I've found a treasure trove of hybrid artists (1, 2, 3, 4 to name a few) swiftly adapting to the new media right now. I found Machine Delusions on Instagram the other day. The artist already uses a mix of art bots and their own talent to make some incredible images.

I fell in love with this robotic bee. The intricacy and detail are astonishing.

(Source: Machine Delusions on Instagram)

Artists won't go away, they'll just adapt to new tools like they always do.

The Future is Now

Tech is an extension of us. It's not outside of us but a part of us and who we are as a species.

The Luddites feared we'd put all the rug makers out of business. Instead, now we have more rugs, not fewer. We have machine generated rugs that everyone can afford, not just the rich and powerful, and we have handcrafted rugs made by dedicated artisans for interior design lovers. New tools help create abundance where there was scarcity before.

It used to be that only highly skilled glass makers on the island of Murano could make astounding glass creations using secret techniques. But now everyone has glasses in their house as the knowledge to make them got more and more democratized. Still, artistic glass masterpieces didn't go away. You can still buy a beautiful glass creation in Venice if you've got the money and the desire.

With these amazing new art tools, we'll have more art now too. Art that's democratized. We've only just scratched the surface of what these tools can do. Soon we'll have new versions of the software that make professional artists faster and stronger, which only means an eruption of new films, video games, television, comics and fine art.

In AI we call man and machine working together "centaurs" after the mythical man-horse beasts and after Centaur chess created by Garry Kasparov, the chess world champion famously beaten by Deep Blue, an early AI from IBM. Most people know that story but not what happened next.

After getting crushed by the machine, Kasparov got back up and came up with the new idea of pairing supercomputers with grandmasters that he called freestyle chess. That evolved further over the years and by 2005 he created a full-scale Centaur tournament, where humans, AI, or human-AI teams could enter to compete. The winner wasn't Hydra, the best chess program at the time, and it wasn't a team of grandmasters and computers either. It was a couple of amateur chess players and some ordinary computers.

(Artist: Louis-Jean-Francois Lagrenee, The Centaur Chiron Instructing Achilles)

These tools will evolve with artists. They'll evolve with us. They'll get stronger and stronger and we'll see a Cambrian explosion of incredible new art. It feels like magic playing with these tools, the closest thing to magic I've ever experienced in my life beyond the everyday magic of time with my wife and the fun we have together.

But if we react with fear and fight the future, we'll lose out. If we give in to people who have a problem for every solution, we'll miss so much. We'll lose out to a few people who don't want to see the world evolve and who want to keep it frozen where it is today. But life and art are always changing. You can't step in the same river twice because you're different and the river is too.

I can't wait to see what kinds of creativity these new tools unleash as long as we don't give in to fear and cripple them before they get the chance to really change the world.

As always, the only thing we really have to fear is fear itself.

Great piece! Thanks.

I think this article glosses over a few really key points.

You say that AI art is better when humans are using it too, but that's just because it needs more data and learning, right? That's the whole point of it, so surely it will continue to grow and the impact the human artist would have would be negligible. What you mean to say is that human + AI is better *for now*.

You also suggest artists will have this as a tool in a tool belt, but again the real-life implications are missing. An AI can work instantly at any time, anywhere; it constantly improves and incorporates ideas with a click, without need of a break, without needing compensation (and there are more points I am probably overlooking), and this will without a doubt cause many people to lose work. It will become a case of point and click and done. Why would work streams increase to the source artist when I can impersonate them with a fully crafted image before lunch?

Your analogy of technology evolving and people not knowing the opportunity yet again really misses the point. In a world where AI systems mass produce, how do you give space in the industry for human art? If you can't safeguard an area, how do a person's skills and talents have any room to flourish and develop? If you can't do that, how many aspiring creatives will you lose because the employment opportunities dry up? Sure, hobbyists and people who solely create for the pleasure of it will exist, but that doesn't put food on the table. Lack of opportunity for work = people look elsewhere for work = not spending as much time practicing and developing = full potential never reached.

If the suggested solution would be to train them in how to use the software, that would be like telling a bricklayer to go learn plumbing. It's still related to construction, after all.