The Middle Path for AI

People Love to Spin Tales of AI Doom or AI Utopia, But It's Time to Take a Realistic Look at AI. Reality is Often Stranger Than Fiction.

Sam Altman and Dario Amodei, CEOs of OpenAI and Anthropic, the top two frontier AI labs in the world, recently published their vision for a sparklingly hopeful AI future with The Intelligence Age and Machines of Loving Grace. There's much to love in both of these essays and they were much needed because the narrative around AI has turned positively poisonous over the past few months.

We've seen a status game of ever-more unhinged predictions about the end of the world, amplified by a mass media that thrives on fear-driven clicks and pushed by a billionaire-backed Doomsday cult doing an all-out media blitz for radical legal intervention with California's SB 1047. Thankfully it failed, though other attempts to strangle AI development are on the horizon, with more than 700 convoluted, overlapping bills advancing at the state level.

But the biggest problem is not whether we need more utopias or dystopias.

What we most desperately need is a heavy dose of realism.

We need the middle path of AI.

Unfortunately, nuance and reality don't sell very well. You win more friends, money and social media followers screaming extreme ideas about heaven and hell.

We're all going to live forever, dancing into the sunlight in a bold new age, and there'll be no more disease and no more suffering and no more pain! Or, if we don't act right now we're all going to dieeeeeeeeeeeeeee!

To get a realistic picture of AI we need to concentrate on seeing through the fog and the noise. The middle path means focusing on the most likely futures, the ones at the meaty center of the normal distribution.

The Utopian future fantasies of the Culture sci-fi series cited by Amodei, versus the runaway, recursive AI self-improvement of Yudkowsky, aka AI FOOM, are binary, extreme visions that make for great stories but they're not a great reflection of actual reality. If we're always focused on heaven or hell, we have no hope of getting any kind of clear picture of how AI will actually impact life, work and the world. Even worse, we have no hope of building useful legal frameworks or strong guardrails and of letting regular folks just go about their business without jumping through useless hoops because we've shackled all these systems with a suffocating bureaucracy.

This kind of black and white, all-or-nothing, binary thinking warps and distorts reality. It's a dark, oily smoke that covers everything and makes it impossible to see what's actually right in front of us. We can't get a clear signal because it's drowned out by the constant screeching.

The big story leading up to the election was how AI driven misinformation would overwhelm our ability to tell reality from fantasy. Turns out it was yet another phantasm of the imagination and we learned once again that politicians didn't need any help creating misinformation. They've been doing it just fine for tens of thousands of years.

The imagined problems from extreme predictions rarely play out. Extremes are the edge cases on any probability distribution of the future.

Most often life is somewhere in between.

History may seem like one big event after another but it's only because you can't tell a good story without conflict. Stories are conflict. They don't exist without it. That's why we rarely see history how it really is: long periods of peace, punctuated by short periods of war.

While we do have periods of extreme darkness, like World War II, or brilliant light, like the pastoral pre-colonial days of the Americas, they're usually short intervals in the grand flow of time, sharp spikes before life inevitably returns to a mundane, everyday reality that's somewhere in between.

It's easy to think of dark societies, like Russia under Stalin or Germany under the Nazis or China under Mao. These societies have one thing in common almost inevitably:

They're self-created.

Notice how these tend to be societies ruled by a single psychotic personality cult that creates a nightmare for a time until that person dies and gets replaced by something much less extreme. They're created by the very people prone to extreme binary thinking: "If we don't take over and impose communism on everyone and kill everyone who disagrees the world will end!"

In a horrifying irony, it's extremist thinkers who create the worst histories.

They create the very things they fear through their paranoia and delusions or their desire to achieve a "perfect" world or to avoid a great "apocalypse", one that usually goes through a river of blood created by the extremists.

In the same way, some societies are immensely positive for the vast majority of their people. We can think of early Native American societies or the vast nation states of America and Europe and even China today, with far more wealth, prosperity and technology than it ever had in its 5000-year history.

Even if China today lacks political freedom and free speech, it's still a world away from the insane era of Mao that saw tens of millions of Chinese die from starvation, war and political violence in the Great Leap Forward and the Cultural Revolution, or the time of warlords pre-Mao, where a few hundred people had the power of life and death over hundreds of millions of people and could hang any peasant for stealing a loaf of bread to feed their family.

If we're clouded by these fantasy fears and utopian dreams, we're virtually guaranteed to miss the most likely futures: ones where AI does some good and some bad, on a sliding scale that tilts closer to good or closer to bad depending on our choices, but where AI is neither "catastrophic" nor curing all diseases overnight and solving the Riemann Hypothesis in its spare time.

Here's the truth: No matter what we do, AI will do good and bad things.

Let's be even more clear:

We will not stop all the bad uses of AI and guarantee that AI only gets used for good.

That's impossible.

No matter what policies we set, no matter what kind of government we choose, life always has positives and negatives. There is no society in history, ever, and there never will be, that is all positive or all negative. That is for children's books and great, door stopper fantasy epics. Instead it's best to look at societies and cultures on a continuum of light to dark at the same time.

The question we have to ask is:

Does a society, on the whole, produce more positive outcomes and lives for its people or more negative ones?

To get more of the good and less of the bad, we have to ask ourselves what kinds of policies are likely to lead towards less negative uses and more beneficial uses of AI?

To understand how to get more of the good things and less of the bad things, we have to see clearly. We have to set aside Heaven and Hell and focus on the more likely parts of the probability distribution of the future so we can make practical plans and shepherd ourselves into a better tomorrow.

So what does a realistic picture of the future look like?

The World of Tomorrow

To start with, AI is not coming, it's already here and it's already radically changing the world.

ChatGPT, the "technology nobody wanted" to its detractors, is the fastest growing software app in the history of the world, rocketing to 100M users in just two months. It took TikTok nine months to hit the same marker and Instagram two and a half years. Chatbot usage is estimated at over 500M users worldwide in the two years since ChatGPT launched (Nov 2022) on the direct consumer side, and if you factor in service chatbots on websites, it's billions.

AI is everywhere already.

AI is already driving cars, beating people at ancient board games like Go, pummeling people in complex tournament video games like DOTA 2, and detecting diabetes.

(Source: Valve)

When you talk to your phone and it understands you want directions to a Thai food restaurant, that’s AI. We just don't think of it as AI anymore because it just works.

My direct use of Google dropped 80% in the last six months because I now turn to Perplexity, an AI intermediary, to search. In its early days it hallucinated wildly but now it lasers in on near perfect answers almost every time. It's faster for me to do research and get exactly the answers I want without wading through pages and pages of irrelevant text and ads trying to answer one simple question about what wax tablets were called in ancient Greece.

(It's called a deltos.)

Machines hunt down terrorists’ money and Taylor Swift used facial recognition to keep her safe from psychotic predators at concerts. She’s not the only one. As early as 2018, long before ChatGPT rocketed AI into the public consciousness, Chinese police were using it to track criminals and dissidents. "Chinese police arrested a 31-year-old suspect who was hiding among nearly 60,000 people during a concert at Nanchang International Sports Center. This was made possible by the early stages of China’s own 'Xue Liang,' or 'Sharp Eyes,' monitoring system, which will be tasked with monitoring the movements of its citizens."

Facial recognition is rolling out to airports all over the world and it’s coming to a street corner near you. The last few years when I roll in from overseas with Global Entry the system just takes my picture and that's it. I don't even need to scan my passport.

Meanwhile, AlphaFold has already predicted over 200 million protein structures and then moved on to understanding how they connect or "dock" with each other, while open source models like ESMFold from Meta mirror its approach. That's a catalogue of almost every "protein known to science, including those in humans, plants, bacteria, animals and other organisms" writes the Washington Post in its article on the three people who just won the Nobel Prize in chemistry. Two of them, Demis Hassabis and John Jumper of DeepMind, led the effort on AlphaFold.

The article goes further. This year’s Nobel Prize in chemistry honored "a real-world example of how AI is helping humans today with astounding discoveries in protein structure that have far-reaching applications".

Right now AI is a mustard seed.

But from those seeds will grow a wild forest that ripples through every aspect of life from top to bottom.

So that's where we are. But where are we going?

Back to Reality

First off, we likely won't get AGI (Artificial General Intelligence) in two years, like Dario promises, but that's just fine. I've written before about what's missing to get to AGI and how we might get there in my article Why LLMs are Smarter Than You But Dumber Than Your Cat. The short answer is new algorithms and new breakthroughs are needed and I don't see them on the horizon just yet.

"Ilya Sutskever, co-founder of AI labs Safe Superintelligence (SSI) and OpenAI, told Reuters in a recent article that results from scaling up pre-training - the phase of training an AI model that uses a vast amount of unlabeled data to understand language patterns and structures - have plateaued.

"'The 2010s were the age of scaling, now we're back in the age of wonder and discovery once again. Everyone is looking for the next thing,' Sutskever said. 'Scaling the right thing matters more now than ever.'"

But that's just fine.

What we will get is still incredible and that's rapid growth in ambient intelligence (more on that in a moment) over the next few decades and beyond. And we don't even need AGI to get there.

Specialized intelligence is amazing too.

We tend to think of human-like, ultra-flexible intelligence as the ultimate goal, but we're just another kind of specialized intelligence. By being good at lots of things we're jacks of all trades, masters of none. Generalized intelligence is, weirdly enough, a kind of specialized intelligence in itself, one that has big trade-offs. Beyond gorillas, monkeys, people, octopuses, dolphins and a few other creatures, generalized intelligence is in the minority.

Instead, nature abounds with specialized minds.

You might not think of a squirrel as intelligent, but try to keep that squirrel out of your garden and you'll find an intelligence that's hyper-tuned to get into your garden.

And that's where we're going for sure: the vast majority of AI systems will be hyper-specific, hyper-tuned AI that's perfectly suited to the task at hand.

Welcome to the age of ambient intelligence, with embedded intelligence infusing every aspect of our daily lives.

It will track shipping containers around the world in realtime, looking down from on high through the eyes of satellites. It will drive cars, track your health, help your doctors figure out what's wrong with you when you're sick, spot tumors before they can grow, do science and material discovery faster, design better and faster chips and more.

Once dumb objects will wake up. Your shirt will babble away with your shades while having a conversation with your girlfriend’s pearl earrings when she’s traveling to a vacation in Brazil.

Everything from our houses, to weapons, to planes, trains and automobiles, to roads, clothes, jewelry, headphones, glasses, and eye contacts will grow wild with thoughts.

Deep learning, symbolic reasoning and genetic algorithms will look primitive by comparison to the rapid learning systems of the 2030s to the 2040s, with hardware based neural networks that run at ridiculously low power, with tightly coupled memory and processing in a single pass running on memristors and light based chips and organic wetware chips.

Our smart bandages will monitor our wounds and tell us when to change the dressing or whether the infection is healing or getting worse. Tiny patches in the bandages will react to infection by releasing antibiotics.

Pill bottles will remind us to take our medicine or warn us not to take it if we wake up groggy from a night of drinking and try to take it twice.

Self-driving trucks with no cab for humans will race along the highway at 200 miles per hour on special roads across China and the US. They’re nothing but stripped down flatbeds with wheels, carrying standard smart shipping containers. The containers will know every single object inside of them, the objects scanned by nanosats and tracked in space forever on the AR/IoT web. As soon as dishes come off the industrial 3D printer at the autofactory, they’re tracked right into your house.

(Source: Logan movie: Self-Driving trucks in a surprisingly subtle and amazing take on the future)

The containers will arrive on self-piloting boats. When pirates try to hijack the containers, the boats will go to evasive maneuvers and the containers will screech and call for the coast guard or the navy or they'll unleash a torrent of deterrent drones that swarm and crash down on the pirates to sink their boats.

Your next black leather jacket in 2030 will hit your house via drone delivery. When you go to Berghain in Berlin to dance the day away, your jacket will tighten its threads as the temperature drops or loosen them as it gets hotter. No more wearing layers as you move from the ice and snow of winter to the warmth of a self-driving car.

We already have Meta smart glasses that just look like regular shades. They're already light years away from the hideous Google Glass of a decade ago. This is the worst they will ever be. They will only get better from here.

(Source: Ray Ban shop and Meta)

Our smart glasses will evolve into designer contacts and eventually designer prosthetic eyeballs, that show us a constant overlay of meta-information about the world around us, from the contact information of people we know, to details of historical landmarks, to warnings of high crime areas to avoid, to the location of friends and family who have given us permission to see where they are during certain times of the day.

(Source: Psycho-Pass hologram AI assistant.)

An AI personal assistant will split time between your ear buds and your augmented reality contacts, processing half of its routines on distributed cloud and foglet platforms. It will do all kinds of tasks that used to take hours. It will bug you to get up in the morning, set meetings, find a nice bottle of wine on the way to a party, craft an awesome workout to get you into summer shape, suggest the latest fashions and find the perfect restaurant to take a date, while telling you what to say on that date to help you seal the deal with a night of passion.

It'll keep your secrets like Di-Di, Judy Jetson's floating digital diary in The Jetsons, and act as a trusted friend and advisor.

(Source: The Jetsons)

Kids used to have to use their imagination with their toys. Remember army men and action figures? You had to pose them yourself and give them voices and stage epic war sagas with them in the backyard.

Tomorrow's kids won't want a teddy bear that just sits there slack-jawed, staring at the wall. Teddy bears will walk and talk and get kids to beg their parents to buy things. And action figures that don’t run and leap and charge the backyard battlefield screaming will be worthless to tomorrow's children except to collectors who pine for simpler times when the mind’s eye had a lot more work to do.

Toys used to say “batteries not included.” Now they'll say “no imagination needed.”

It’s Toy Story come to life.

(Source: Teddy from the underrated movie A.I.)

Bikes will have wobble detection and bizarre alien frames that look strange because they were designed by machines to make them more stable. You could probably pedal them if you wanted to, but if you get tired the AI coupled with millimeter precise GPS takes over and drives for you.

Fire suppression systems won’t just put out fires in houses, they'll direct families where to run and what rooms to avoid, all while keeping them calm with a soothing voice and calling autonomous fire trucks.

But let's look closely at some of the most powerful use cases for AI.

Dario Amodei covered one of them beautifully in Machines of Loving Grace:

Health care and biology.

Health Care and Biotech Boom

Amodei rightly covers many of the limiting factors to dramatically improved science and biotech:

"In the “limiting factors” language of the previous section, the main challenges with directly applying intelligence to biology are data, the speed of the physical world, and intrinsic complexity (in fact, all three are related to each other). Human constraints also play a role at a later stage, when clinical trials are involved."

He writes that we could get 50-100 years of progress in 5-10 years, and that he is even open to the idea of 1000 years:

"Thus, it’s my guess that powerful AI could at least 10x the rate of these discoveries, giving us the next 50-100 years of biological progress in 5-10 years. Why not 100x? Perhaps it is possible, but here both serial dependence and experiment times become important: getting 100 years of progress in 1 year requires a lot of things to go right the first time, including animal experiments and things like designing microscopes or expensive lab facilities. I’m actually open to the (perhaps absurd-sounding) idea that we could get 1000 years of progress in 5-10 years, but very skeptical that we can get 100 years in 1 year. Another way to put it is I think there’s an unavoidable constant delay: experiments and hardware design have a certain “latency” and need to be iterated upon a certain “irreducible” number of times in order to learn things that can’t be deduced logically. But massive parallelism may be possible on top of that."

That's possible, but the more likely outcome is that we do get lots of progress in many areas, but we run into one last limitation that he seems to miss:

Our bodies are insanely, mind-bogglingly complex.

The level of complexity at the molecular level is utterly astounding. Check out this rendered video below on just one of the fiendishly complex molecular machines working inside your body right now:

Helicase spins as fast as a jet engine and it winds and unwinds DNA molecules at astonishing speed, each one of them "a few centimeters long but just a couple nanometers wide."

What kind of scale are we talking about? How big is a nanometer?

"Two nanometers is about 1/50,000th the width of a human hair. A human hair is approximately 80,000-100,000 nanometers wide."

(Source: Visual Capitalist)
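To check that scale for yourself, here is a minimal Python sketch of the arithmetic, using only the figures quoted above (a roughly 2 nanometer wide DNA molecule and a hair 80,000-100,000 nanometers wide). The variable and function names are my own, purely for illustration.

```python
# Widths in nanometers, taken from the figures quoted above.
DNA_WIDTH_NM = 2
HAIR_WIDTH_RANGE_NM = (80_000, 100_000)


def times_wider(hair_nm: float, feature_nm: float) -> float:
    """Return how many times wider a hair is than the given feature."""
    return hair_nm / feature_nm


# One ratio for each end of the quoted hair-width range.
ratios = [times_wider(hair_nm, DNA_WIDTH_NM) for hair_nm in HAIR_WIDTH_RANGE_NM]

for hair_nm, ratio in zip(HAIR_WIDTH_RANGE_NM, ratios):
    print(f"A {hair_nm:,} nm hair is {ratio:,.0f}x wider than {DNA_WIDTH_NM} nm")
```

So the quoted 1/50,000th figure matches the thick end of the hair range, and a thinner hair puts it closer to 1/40,000th. Either way, it's absurdly small.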

Those little machines, clustered together, can rip apart and replicate your DNA with ridiculous levels of accuracy in about 8 hours.

We have about 30-40 trillion cells inside of us, all with DNA inside of them and we have billions or even trillions of just this one molecular machine doing this one incredibly complex job.

The optimistic view and the doomer view, that an AI explosion will take off at unstoppable speed, dramatically underestimate the complexity of the biological world.

The idea that we will cure all diseases in a decade is, quite frankly, absurd.

But that doesn't mean we won't have incredible breakthroughs.

There's nothing but intelligence that can help unravel the micro-universe inside our bodies. We don't have enough scientists and enough time and enough money to do it fast enough. It doesn't matter if we see a 5x, 10x or even 100x acceleration (Dario's upper range) in our biological understanding and experimentation, or more modest gains somewhere in between those wild-eyed predictions. No matter what, AI will accelerate science and healthcare.
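For a sense of what those multipliers mean, here is a minimal Python sketch of the arithmetic behind the scenarios in Amodei's quote above. The function name `speedup` and the scenario labels are mine, purely for illustration; the year figures come straight from the quoted passage.

```python
def speedup(years_of_progress: float, calendar_years: float) -> float:
    """Implied acceleration: progress delivered per calendar year elapsed."""
    return years_of_progress / calendar_years


# (years of progress, calendar years), as stated in the quoted passage.
scenarios = {
    "50 years in 5 years": (50, 5),
    "100 years in 10 years": (100, 10),
    "100 years in 1 year": (100, 1),
    "1000 years in 10 years": (1000, 10),
    "1000 years in 5 years": (1000, 5),
}

multipliers = {
    label: speedup(progress, elapsed)
    for label, (progress, elapsed) in scenarios.items()
}

for label, multiplier in multipliers.items():
    print(f"{label}: {multiplier:.0f}x")
```

Read this way, 1000 years of progress in 5-10 years works out to a 100x to 200x rate, at least as fast as the 100-years-in-1-year case Amodei says he doubts. The difference in his framing seems to be calendar time: a longer window leaves room for the slow, serial experiments that can't be rushed.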

We’ll point our smart phones at a worrying spot on our arm and the AI that lives in our phone will tell us to call the doctor. When it sends the report it generated to the doctor’s office, the triage nurse will know to bump us to the head of the schedule if it's skin cancer or a festering wound, rather than treating every patient as equal, from hysterical hypochondriacs to the old lady who just likes talking to doctors.

Just this year the Sybil system out of MIT was able to accurately predict lung cancer risk up to six years in advance. I take such prediction systems with a grain of salt, but they will get better over time. Imidex has a lung cancer system that's already FDA approved that can detect lung nodules as small as 6mm with 83% accuracy. Again, this is the worst it will ever be.

Big improvements in health care mean dramatic drops in health care costs too. That's going to be utterly essential as population sizes drop in the coming decades and fewer people are paying into the health care systems. Right now, false positives and negatives mean patients get the wrong care or get care too late or, worse, don’t get care at all. Big improvements mean cheaper health care and people living a lot longer because they get the care they need when they need it.

The biggest hurdle to AI in health care is the overly conservative and overly regulated nature of the system in almost every country in the world, whether that’s the cold blooded big money system of the US or the sweeping socialized systems of Europe.

It’s hard to get new devices approved. The barrier to entry is massive. Small boards of people we don’t know act as a choke point, both protecting people from hucksters and slowing down innovation dramatically with their double edged sword.

Expect those walls to start to come down as AI increasingly detects and cures disease and performs research at scale. People will demand access to those breakthroughs and regulations will crumble. Laws will change to make it easier for AI based health devices to get into hospitals and into our phones and augmented reality glasses.

As the walls come down it will open up the possibilities for preventative care at a more personal level. Your watch and your glasses will know that your frequent napping during the day isn’t just overwork, it’s sleep apnea.

That will streamline the convoluted process we have now where you make an appointment to get a sleep study, then go to another appointment to get trained for the sleep study, then take the machine home and do the sleep study, then take it back to the office to get your results, and then see a doctor in yet another appointment to prescribe the actual CPAP machine that alleviates the problem.

All this to get an air machine. It's basically a scam.

Archaic and convoluted processes like this will start to fall to the power of AI because you will walk into the doctor with proof that you already have sleep apnea.

Hospital devices will get smarter too, from blood pressure machines, to cameras, to dynamically adaptive physical therapy machines. Cameras will detect if old folks stop breathing, have a heart attack, or take a nasty spill.

All of our devices will start to work as preventative care devices or more Tricorder-like universal medical devices, bundled together and able to detect many different things in a single unit. No, it won't just magically detect everything with perfect accuracy, but we'll increasingly have medical capabilities in our wearables and especially in any internal devices like Neuralink or small devices we can embed under our skin that talk to our devices.

Your watch or ring or contact lens will increasingly become your heart and health monitor. In ten to fifteen years it may even be able to predict a heart attack coming before you go face down in your eggs and bacon.

“ALERT: Please go to an emergency room immediately as you’re in danger of a heart attack.”

That will mean you get to the hospital in time to live a lot longer.

Ambient intelligence will help us scale up health and science in incredible new ways, as machines invent new drugs, do science experiments with robo-labs, tackle Alzheimer's, crush many kinds of cancer and maybe even unravel the basic mechanisms behind cancer and give us near-universal cures.

So in many ways I'd love to see Dario's version of the future become reality, but I'd bet that the incredible complexity of the body will remain a difficult mountain to climb and we'll see incredible progress but many diseases will continue to remain elusively out of reach or new diseases will evolve as we live longer lives and better lives.

Entertainment and Games

It's 2035 and you're a top notch concept artist in London building a game with 20 other people.

The game looks incredible. It's powered by Unreal Engine 9 and it's capable of photorealistic graphics in real time on the PlayStation 7 and near perfect physics. It's got character AI that can talk with anyone in an open ended way and still stay within the plot guidelines to keep players hunting down magical rubies and the divine sword of radiance.

Ten years ago it would have taken a team of 1500-5000 to make this giant game. Now you can do it with 20 people. But that doesn't mean less work and fewer games, it means more. A lot more. We used to get 10 AAA games a year and now we get 10,000. Even with 1000 folks you probably couldn't do it back in 2024. That's because it's almost completely open ended, with real time procedural storytelling that creates nearly infinite side missions, personalized music that matches the listener's tastes, and players control it with hand gestures and voice commands. The controller is gone.

AI helped write the code for the UE9 game engine, helping Unreal programmers do four releases in ten years, instead of a single release every decade. AI refined much of the original game engine code and made it smaller and more compact, while auto-documenting it in rich detail. AI also helped design the PlayStation 7. Chip design models crafted the layout of the transistors on the neural engine and graphics chip inside the PlayStation. But where AI really accelerated the game development process was in design and asset creation workflows.

As the lead artist, you've already created a number of sketches with a unique style for the game, a cross between photorealism and an oil painting aesthetic that mirrors classic, clean, golden age sci-fi. The executive creative director signed off on it as the official look of the game. You fed the style to the fine tuner and the AI model can now rapidly craft assets that share that look and feel.

Art isn't dead. It's become co-collaborative.

Nothing can replace the human artistic spirit but it now works hand in hand with state of the art generative AI in a deeply co-creative effort.

Now you can move much faster. You quickly paint an under sketch of a battle robot sidekick and the tough female soldier for the game plus a bunch of variants that you were dreaming about last night. You feed it into your artist's workflow and talk to it and tell it you want to see 100 iterations in 20 different variations. It pops them out in a few seconds and you swipe through them.

“I’ve changed my mind," you say. "Go back and give me 200 iterations."

The natural language and logic model baked into the app understands what you want and does it instantly.

300 seconds later the high-res iterations are ready. The 27th one looks promising so you snatch it out of the workflow and open Concept Painter and quickly add some flourishes: a different face mask that the generative engine didn't quite get right; a new wrist rocket. You erase the laser shoulder cannon and replace it with an energy rifle and then you feed it back to the engine. You level up the organic, biomechanical nature of the armor, making it drip pure biopunk.

It pops out 50 more versions and you pick the 7th because it's nearly perfect and you just need to fix a few things. You rapidly shift it to match the style of the game assets with another step in the AI pipeline and then you check it for artifacts. You fix a few problems but now it's good to go, so you send it off to the automatic 3D transform.

It pops out into the 3D artist's workflow, fully formed just a few minutes later. The 3D artist, working from Chiang Mai, Thailand, has to fix a few mangled fingers and some clothing bits that don't flow right and then it's ready to go. He kicks it off to the story writer working out of Poland.

Seeing the new character gets her inspired. She quickly knocks out a story outline in meta-story language and feeds it to her story iterator. It generates 50 completed versions of the story in a few seconds. She starts reading. The first three are crap but the fourth one catches her and she finds herself hooked to the end. It's good but it needs a little work with one of the sub-characters so she rewrites the middle and weaves in a love story with that character and then feeds it back to the engine for clean up. The new draft comes back and it reads well so she fires it off to the animator in New York City.

He pulls up the draft and the character and tells the engine to start animating the scene. In five minutes he's got story stills for the major scenes in the story and branching possibilities for character actions the player might take. Most of them are good but the third scene is off, so he does some manual tweaking and then asks the engine to iterate again. This time they come back good and he sends it all off to the engine to complete the animations and connective tissue linking the scenes.

Welcome to the Age of Industrialized AI.

Even students and home film enthusiasts are making games. The Quentin Tarantinos of tomorrow will use AI to get out of their crappy day jobs and go on to become great directors. They'll still need incredible skills, like knowledge of story and character development. They'll need to know lighting and how emotions come across on the screen. They'll need to understand camera movements, even if those cameras are virtual.

The people best poised to benefit from AI are the professionals who already know the real world of film and TV.

Those students and directors and professionals will have a vast dashboard of new tools. They will animate people and fully realistic scenes the way we used to animate cartoons. They will give subtle expressions and performances to the AI actors, and incorporate real actors seamlessly into the scene. They will "reshoot" a scene in minutes and create a thousand variations of it.

The kids that grew up with AI won't know the bitter controversy around it in the arts and they won’t care if you tell them about it. To them, it will just be another tool, like a hammer or a phone and they will use it to build incredible student projects, many that go on to great acclaim and vast audiences.

Expect an absolute explosion of new film and TV and games that adapt to you on the fly, a golden age of story.

Agents, Robots and the Future of Work

But what about work? Will robots and agents take all the jobs?

No. But people working hand in hand with robots and agents will absolutely overtake anyone who refuses to work with them. The AI won't replace the radiologist but the radiologist working with AI will replace the one who won't use it.

What are agents?

My team at Kentauros AI defines it simply as:

An AI system that's capable of doing complex, open ended tasks in the real world.

Even more complicated?

Build an agent that can do long-running, open ended tasks. They need to work for hours, days or weeks without any unrecoverable errors. Just like a person.

The real world could be the physical world or online. What we're talking about here is when agents get out of the lab and face the never ending complexity of life.

Of course, life is messy. When you deal with the chaos and complexity of the real world, it's incredibly hard to make these systems work well. Most teams are badly underestimating just how difficult it will be and throwing out wildly optimistic predictions of how fast we'll have robots and agents in everyday life.

Moravec's paradox says that reasoning for robots is relatively easy and sensorimotor perception is hard. That's wrong. Both are hard, especially when you try to generalize reasoning.

We've seen Tesla cars go from software based object detection and perception to built in hardware based object detection on specialized chips. As we understand more and more of how to make machines understand the world, we'll see more of that capability in silicon. Steve Jobs once said that "software was everything we didn't have time to get into hardware."

Right now we might have to spend a ton of inference-time compute on "hearing" but your ears are fine-grained, hardware-based Fourier analyzers. They do the work in an instant in hardware. Expect robotics to follow more of the same, with a flurry of specialized chips and devices that do the work of seeing and interacting with the world.
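To make the "hearing" comparison concrete, here is a minimal sketch of the kind of frequency analysis software has to compute step by step. It uses a plain discrete Fourier transform rather than an optimized FFT, and the function names and the test signal are illustrative assumptions, not anything from a real audio stack.

```python
import cmath
import math

def dft_magnitudes(samples):
    """Return the magnitude of each frequency bin from 0 up to n/2."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        # Correlate the signal with a complex sinusoid at frequency bin k.
        total = sum(s * cmath.exp(-2j * math.pi * k * t / n)
                    for t, s in enumerate(samples))
        mags.append(abs(total))
    return mags

def dominant_bin(samples):
    """Index of the strongest non-DC frequency bin."""
    mags = dft_magnitudes(samples)
    return max(range(1, len(mags)), key=lambda k: mags[k])

# Hypothetical signal: a strong tone at 5 cycles plus a weaker one at 12.
N = 64
signal = [math.sin(2 * math.pi * 5 * t / N) + 0.3 * math.sin(2 * math.pi * 12 * t / N)
          for t in range(N)]
print(dominant_bin(signal))
```

This direct version does work proportional to the square of the signal length, which is the sort of cost that dedicated hardware, biological or silicon, avoids.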

If you're paying attention to robotics work, we seem to have made more progress on sensorimotor perception over reasoning so far.

We have a joke in house:

If you think AGI is just around the corner, try to build an agent.

You quickly learn how dumb these systems currently are. And I mean a real agent, not a Playwright web scraper or PDF summarizer.

But make no mistake, these complex agents will get better. And not just better, they'll blossom into agents that can work for days or weeks unattended without making mistakes.

Whenever I want a more realistic perspective about AI, I read robotics papers or talk to roboticists. They've been dealing with the messy real world for many decades and they're under no illusions of how fiendishly complex it is to build a machine that can think and act quickly in the real world.

We underestimate just how complex and wondrous we humans are! We're some of the most amazing biomachines ever created.

But we're already making progress with bots too. Major progress. We've seen a big leap in humanoid robotics capabilities in just the last few years versus the decades before it. That's often the nature of technological revolutions. They come slowly, inch by inch, and then all the building blocks are there and it comes together very quickly before hitting another wall of diminishing returns. Every exponential curve eventually becomes an S curve. But we're still in the early parts of that dramatic rise in capabilities and we can see it all over the space of robots and agents.

Here we can see Figure 02, from Figure, a California robotics company, doing test work in a BMW factory.

If you look at this clip from the recent Tesla after party, where the robots are handing out bags and drinks, you can see they already have a strong ability to perceive and interact with the world. The robots are tele-operated, but that just means that they're collecting lots and lots of data that can then be used to train them on a variety of tasks so they can do those same tasks autonomously.

Here's a Boston Dynamics robot doing work in a factory fully autonomously already.

Beyond robotics, we're entering an era of Service-as-Software, where more and more boring work gets automated, which will massively speed up what we can do and how fast we can do it. Doing sales outreach, writing reports, detecting disease in an X-ray, making calls to set up appointments, doing research on complex topics, fact checking and so much more is coming.

OpenAI has a blueprint for its five steps to AGI:

Level 1 ("Conversational"), AI that can converse with people in a human like way

Level 2 (“Reasoners”), means systems can solve problems as well as a human with a doctorate-level education. We've seen the first stages of this with o1.

Level 3 (“Agents”) are systems that can spend several days acting on a user’s behalf.

Level 4 ("Innovators") are AI systems that can develop new innovations and novel ideas.

Level 5 (“Organizations”) means AI systems that can do the work of an entire organization, like a small or medium business.

Each one of these steps is a quagmire in and of itself. Each one has a series of mini-steps, setbacks and problems that are incredibly hard to overcome, not to mention painful integration points to make it all work in any single business or to accomplish a single task.

Right now we're at the dawn of Reasoners, with models like o1-preview and o1-mini. These will get better and lead to smarter, agentic systems, capable of doing complex work in the digital world and in the physical.

One way these models will get better is that older models will bootstrap new models. It might take the form of a method pioneered out of China, which uses an LLM as a teacher to a reward driven agent. With this approach, the LLM dynamically sets the reward and leverages its internal world model to help the agent make its initial decisions versus just trying random actions. That dramatically speeds up the process of building a strong RL augmented agent.

Even better is that the agent is likely to get stronger than the original LLM. It rapidly learns from the LLM and then outpaces it because it can then do its own exploration, so it ends up getting a better world model on our task than its teacher.

The student becomes the master.
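The teacher-and-student idea can be sketched with a toy example. This is a minimal illustration under stated assumptions, not the method from the work mentioned above: the "teacher" functions are hard-coded stand-ins for an LLM, the environment is a short corridor, and the learner is ordinary tabular Q-learning. All names and parameters here are hypothetical.

```python
import random

N_STATES = 8          # corridor cells 0..7; the goal is the last cell
ACTIONS = (-1, +1)    # step left or step right

def teacher_suggest(state):
    """Hypothetical stand-in for an LLM teacher's suggested action.
    A real system would prompt a language model here."""
    return +1

def teacher_reward(state, next_state):
    """Hypothetical teacher-set reward: a small bonus for progress,
    a larger reward for reaching the goal."""
    if next_state == N_STATES - 1:
        return 1.0
    return 0.1 if next_state > state else -0.1

def train(episodes=200, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for ep in range(episodes):
        # Lean on the teacher early, then hand control to the student.
        trust = max(0.0, 1.0 - ep / (episodes / 2))
        state = 0
        for _ in range(50):
            if rng.random() < trust:
                action = teacher_suggest(state)
            elif rng.random() < 0.1:
                action = rng.choice(ACTIONS)   # the student's own exploration
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state = min(max(state + action, 0), N_STATES - 1)
            reward = teacher_reward(state, next_state)
            best_next = max(q[(next_state, a)] for a in ACTIONS)
            # Standard Q-learning update toward the bootstrapped target.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
            if state == N_STATES - 1:
                break
    return q

def greedy_policy(q):
    """Best action in each non-goal state according to the learned values."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]

print(greedy_policy(train()))
```

The design choice to notice is the decaying `trust` value: the teacher's guidance replaces random early flailing, and the student's own exploration takes over once it has a foothold.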

How we train these systems will change a lot in the coming years. We'll teach models more through experience and as the model architectures change, they will get better at continual learning and overcome catastrophic forgetting.

One possibility for enabling continual learning and beating catastrophic forgetting, two of the longest-standing challenges in AI, is already here: the Mixture of a Million Experts paper by a lone researcher, Xu Owen He, at Google DeepMind. He built PEER, a learnable router that is decoupled from memory usage. It's able to scale to millions of experts, and the experts themselves are tiny, each one a single-neuron MLP (multilayer perceptron, the digital building block of neural nets inspired by studying human neurons, though radically simplified from their biological counterparts).

From the paper itself:

"Beyond efficient scaling, another reason to have a vast number of experts is lifelong learning, where MoE has emerged as a promising approach (Aljundi et al., 2017; Chen et al., 2023; Yu et al., 2024; Li et al., 2024). For instance, Chen et al. (2023) showed that, by simply adding new experts and regularizing them properly, MoE models can adapt to continuous data streams. Freezing old experts and updating only new ones prevents catastrophic forgetting and maintains plasticity by design. In lifelong learning settings, the data stream can be indefinitely long or never-ending (Mitchell et al., 2018), necessitating an expanding pool of experts."

Essentially, massive MoE models like this hold the potential to lower inference memory consumption and beat catastrophic forgetting by freezing or partially freezing weights and just adding in new experts to learn new information. The neural router is much more effective because it's general purpose and learned. It's like having parallelizable adapters/LoRAs built right into the model. It also mirrors the plasticity of the brain in that the router can reconfigure itself as new knowledge is learned, much like your brain prunes or strengthens synapses.
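The freeze-and-add idea is easy to show in miniature. The sketch below is a toy under loud assumptions: each "expert" is a one-parameter linear model rather than an MLP, and the "router" is a plain dictionary lookup by task name, not the learned router that PEER uses. Class and method names are hypothetical.

```python
class Expert:
    """A one-parameter linear model y = w * x, standing in for a tiny MLP."""
    def __init__(self):
        self.w = 0.0
        self.frozen = False

    def predict(self, x):
        return self.w * x

    def fit(self, data, lr=0.05, steps=500):
        if self.frozen:
            raise RuntimeError("frozen experts are never updated")
        for _ in range(steps):
            for x, y in data:
                # Gradient step on the squared error (w*x - y)^2.
                self.w -= lr * 2 * (self.w * x - y) * x

class GrowingMoE:
    def __init__(self):
        self.experts = {}   # routing key -> Expert

    def learn_task(self, key, data):
        # Freeze everything learned so far, then train a fresh expert.
        for expert in self.experts.values():
            expert.frozen = True
        expert = Expert()
        expert.fit(data)
        self.experts[key] = expert

    def predict(self, key, x):
        # Assumed router: a dictionary lookup instead of a learned one.
        return self.experts[key].predict(x)

model = GrowingMoE()
model.learn_task("double", [(1, 2), (2, 4)])
before = model.predict("double", 3)
model.learn_task("negate", [(1, -1), (2, -2)])
after = model.predict("double", 3)
print(before == after)
```

Because the old expert's weight is never touched while the new one trains, the old skill cannot be overwritten, which is the whole point of the approach.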

Once you have an ever-learning model like this in place, whether it proves to be this architecture or another, we can move to a practical lifelong learning system that mirrors how people process massive amounts of information.

Unlike humans, agents won't be limited to what they learn on their own. They can share what they've learned.

Humans can do that too, with language and books and multimedia and YouTube. But those are lossy transfer mechanisms. I have to explain what is in my head when I'm teaching you and you have to understand it and language doesn't capture everything I've learned or experienced exactly as I experienced it or learned it, even if I'm a fantastic writer.

With AI we can directly transfer memories completely intact between systems with no loss at all.

That's very powerful.

We also might be able to get to robots and agents with downloadable skills by just using adapters and hot swapping them in on a per-task basis or as needed. Our team at Kentauros AI is experimenting with this now on our AgentSea platform, and Apple recently used the same approach for their on-device models.

With hot swappable skills, we can fine tune a small adapter, like a QLoRA, that is specific to a workflow or task. So you might train it up on your own personal in-house application and the training is very fast because you're leveraging the model's general purpose reasoning. Or you might train it to shop on Amazon or do research on LinkedIn.

We don't need a general purpose model that knows everything. It can dynamically load adapters on the fly and suddenly be better at that specific skill, like Neo suddenly knowing Kung Fu.

Think of a cleaning robot that downloads a new adapter on how to do the dishes or make dinner. Now it can swap in that module when it's time to make that meatball pasta for the weekend. It can download the dog walking module and learn to walk your dog instantly.
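Hot swapping can be sketched in a few lines. This is a toy illustration, assuming a LoRA-style setup where each skill is a rank-1 update added on top of shared base weights that never change. The class, the skill names and the numbers are hypothetical and are not the AgentSea or Apple implementation.

```python
def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def outer(u, v):
    """Outer product of two vectors: a rank-1 matrix."""
    return [[a * b for b in v] for a in u]

class AdaptableModel:
    def __init__(self, base_weights):
        self.base = base_weights   # shared weights, never modified
        self.adapters = {}         # skill name -> (u, v) rank-1 factors
        self.active = None

    def register(self, skill, u, v):
        self.adapters[skill] = (u, v)

    def swap_in(self, skill):
        if skill is not None and skill not in self.adapters:
            raise KeyError(f"no adapter registered for {skill!r}")
        self.active = skill

    def forward(self, x):
        y = matvec(self.base, x)
        if self.active is not None:
            u, v = self.adapters[self.active]
            delta = matvec(outer(u, v), x)   # low-rank update applied to x
            y = [a + b for a, b in zip(y, delta)]
        return y

robot = AdaptableModel([[1, 0], [0, 1]])
robot.register("dishes", [1, 0], [0, 1])
robot.register("dog_walking", [0, 1], [1, 0])

robot.swap_in("dishes")
print(robot.forward([2, 3]))
robot.swap_in("dog_walking")
print(robot.forward([2, 3]))
```

Swapping a skill changes only which small pair of vectors is active, so the switch is cheap and the general purpose base model stays intact.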

All of that means we will soon(ish) have very strong, very powerful agents.

AI agents will seep into every area of work, online or in the real world, with robots. AI lawyers, doctors, software engineers, security engineers, dock workers and self-driving businesses, ships and cars.

They'll remake the very nature of work and how we do it.

Economics and Trade

Economics is one of the areas where AI can deliver a true revolution too.

That's because the dismal science is antiquated and full of theories from the turn of the century, made by old men in smoking jackets using questionable analog statistics. Our economic theories are simplistic, outdated and rarely reflect reality.

The dominant economic theories of today were primarily developed in the 20th century, with roots tracing back to the 18th and 19th centuries. Keynesian economics is still highly influential today and it was developed in the 1930s. Neoclassical economics comes to us from the 1870s and dominated after World War II. At least monetarism was developed in the 1970s, with Milton Friedman pushing back against Keynesian economics and emphasizing money supply control, but it was still created before the computer and digital revolutions.

(Source: John Maynard Keynes, United Press International)

While these theories have been updated and refined, they simply can't capture modern economics in any meaningful way. New economic theories are slow to develop. Newer theories try to bring in behavioral economics, which incorporates psychological insights into economic decision-making, and to view the economy as a complex adaptive system, made up of many tiny models.

There is a desperate need for a "MEADE" (Multiple Equilibrium and DiversE) style paradigm shift, which uses a range of models to understand economic phenomena rather than relying on a single unified theoretical framework.

Even that won't be enough.

That's because economics is dense, complicated and largely impenetrable for the average human being. It's incredibly hard to understand. We're not wired to easily comprehend systems that move at massive scales with trillions of tiny variables.

As Richard Dawkins wrote in "The Blind Watchmaker":

"Our brains were designed to understand hunting and gathering, mating and child-rearing: a world of medium-sized objects moving in three dimensions at moderate speeds. We are ill-equipped to comprehend the very small and the very large; things whose duration is measured in picoseconds or gigayears; particles that don’t have position; forces and fields that we cannot see or touch, which we know of only because they affect things that we can see or touch."

Dawkins, Richard (2015). The Blind Watchmaker: Why the Evidence of Evolution Reveals a Universe without Design. W. W. Norton & Company. Kindle Edition.

And because it's super hard to comprehend, that's exactly why machines will do it better. Machines are better at size and scale, exactly the kind of thinking that humans are terrible at.

Over the next decade we'll move to a rapid synthesis of up-to-the-minute economics data and an explosion of new economic theories built from real world understanding as world trade explodes across our screens like winds moving around the world in a beautiful dance of delightful chaos and complexity. We'll see it all as it happens rather than months or years after the fact, when it's already too late to make good economic policy to avert the crisis or stimulate a new boom.

We'll watch the flow of money and people and ships around the world in a revolution of real-time economics statistics tracking and we'll see a dramatic rise of reasoning AIs that make better decisions faster than any human being.

Take something like shipments. The world economy moves by sea, with around 80-90% of the volume of international trade in goods carried across the oceanic sea lanes. The percentage is even higher for most developing countries. In 2023, sea transportation accounted for 47% of goods traded between the EU and the rest of the world measured by value, and 74% measured by volume. There are over 50,000 merchant ships trading internationally, transporting every kind of cargo. The world fleet is registered in over 150 nations and manned by over a million seafarers.

(Source: Wikimedia Commons)

Tracking all those shipments and goods is a nightmare. Much of our economic data about it comes from government published reports but the quality of that data varies widely and is often outright manipulated. Many countries struggle to create high-quality economic data and because of outdated methodologies for measuring GDP and other indicators it doesn't match reality. Developing countries often lack the institutional capacity and funding to conduct comprehensive economic surveys and data collection.

Surveys are error prone and don't capture even a fraction of the capacity and nuance of the underlying real economics. Countries will understate inflation to avoid higher interest payments on inflation-linked bonds or to make sure international aid keeps flowing. Greece and Italy almost certainly manipulated their budget deficits to meet Eurozone criteria prior to joining the euro. China regularly inflates economic statistics to always maintain an air of rapid growth.

All of this bad data and lying leads to bad economic analysis and forecasting, which leads to misguided policy decisions.

But we don't need government figures if we can watch all the shipping containers flowing out of ports around the world with machine learning. That's already happening. Multiple companies and satellite providers already have software that tracks shipments in real time, watching ships with object detection and image recognition from their eyes in the sky.

BlackSky and Spire Global have partnered to create a real-time Maritime Custody Service (MCS) that can automatically detect, identify and track over 270,000 vessels worldwide using AI and satellite data. Mitsubishi is using AI to track from space the "dark fleet" ships that violate international laws to fish illegally or move illicit goods. Satellogic and HappyRobot created AI models that identify individual shipping containers and different types of vessels at ports using high-resolution satellite imagery.

(Source: Satellogic Blog post: “By selecting an area of interest, such as the port of Hong Kong in this picture, and leveraging Satellogic’s high resolution combined with Happyrobot’s AI models, we were able to monitor port activity over several days.”)

That's just the beginning.

Imagine ambient AI embedded in every level of our economy, watching the flow of money, goods, people, trade, business and immigration, trillions and trillions of data points flowing into massive economic models and massive reasoning engines.

As more and more money becomes digital, it becomes easier and easier to spot wide scale spending patterns and to predict economic crashes and booms long before they ever happen. In a few decades we're likely to see AI recommending stimulus and lending rates and minimum wages based on real-time data, adjusting the economic levers to smooth out the downsides and supercharge the upside.

The biggest limiting factor will be how much we resist the switch to the real-time information economy. How much will we fight and resist letting our brilliant machines track the ebb and flow of money and people? How much will we battle back against better decision making due to misguided fear and a desire to keep our thumbs on the scale out of blind animal panic and misguided delusions of "losing control" of our AI?

Politics and fear are the only things that can slow down the inevitable move to the age of "algorithmic economics."

It might take many decades to see the age of info economics, because resistance in government is massive to this kind of transparency. There's power in manipulated statistics. But we'll likely see nations monitoring other nations first, rather than themselves, and that will force transparency whether nation-states like it or not, as every nation monitors every other nation and eventually forces direct transparency.

The idea of algorithmic economics scares some people but we should welcome it because the machines will make better sense of the world economy than we ever will. It's simply too complicated for any one person or group of people to understand but it's exactly the kind of problem that machines tackle better than any bio-mind.

We could look at a lot more industries and world changes. I could write an entire novel on this and probably will. Every industry from chip making, to knowledge work, to software creation, will shift massively in the coming years.

But now that we've looked at the positive, let's look at the downsides, in a realistic way, before wrapping up.

Every light has a shadow.

If we want a balanced view of the future we can't just look at the bright side.

We have to stare into the abyss as well.

(The Horror. The Horror.)

He Took a Face from the Ancient Gallery

While the AI doom cabal scream about drug dream fantasies of AI going foom or taking over, or us "losing control" to magical mega-AIs, something far wickeder is festering right under our noses in the real world.

AI can and will supercharge war and surveillance to terrifying new levels.

To see the most horrific use cases of AI you have to turn away from the private sphere and look at governments, especially authoritarian governments, but also just well meaning democratic ones too. Nation states have enemies and their basic nature is to protect their citizens and that often leads to ends-justify-the-means kind of thinking.

The AI doom movement has concentrated their fire almost exclusively on strangling and limiting the development of civilian AI while giving an absolute and total free pass to government and military uses of AI. This is a looming disaster if they win because it means civilians will have no means to fight back against all powerful states that can peer into every aspect of their lives. As we get more and more digital it becomes harder and harder for any person to move through life without leaving a long digital trail in the clouds of machines we interact with every day and that means nobody will escape the all seeing eyes of the powers that be.

As we move to nation-state digital currencies it will be easy to cut someone off from their money like flipping off a light switch. AI driven camera networks will watch every aspect of people's lives and they already do in China. Chinese surveillance systems can even ID people wearing a mask.

To be fair, we've seen a few scattered attempts at bringing awareness by activist groups, like the excellent Slaughterbots sci-fi short film that predicted the rise of the AI driven drone explosives that have sadly become real life in a vicious and drawn out Russia/Ukraine war that echoes the cyberpunk fiction of the 1980s.

(Source: Reuters special report on drone warfare in the Ukraine/Russia war)

But overall these efforts are few and far between and these groups have gotten zero traction on any bills that will restrict the ever accelerating AI arms race of governments all over the world for new and more powerful weapons and surveillance systems.

There's a reason they've gotten zero traction.

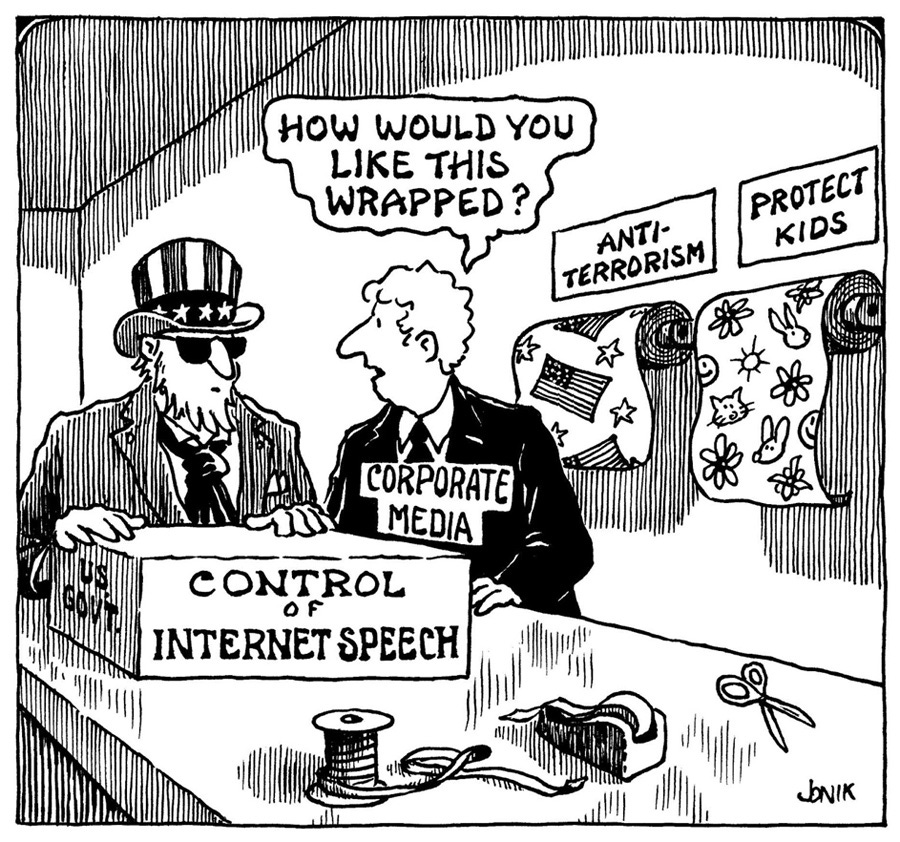

AI is just too tempting for governments and there is zero chance that any government will willingly restrict its own growth and risk losing the edge to rival nations as this cartoon from Economist shows so brilliantly:

(Source: Economist KAL cartoon after the UK AI Safety Summit)

Every bill coming out of America, China and the EU (including the EU AI Act which is starting to go into effect) is strictly focused on civilian AI and gives a 100% free pass to governments to do whatever they want.

Even if any government does sign a bill to restrict AI in weapons tech or surveillance, they'll just build it anyway with black budgets as we've seen time and again with projects like Total Information Awareness (TIA), which was explicitly defunded by Congress but a decade later led to the very mass surveillance tools that Snowden leaked.

To refresh your memory on TIA, the goal was "a 'virtual, centralized, grand database'" and the scope of surveillance included credit card purchases, magazine subscriptions, web browsing histories, phone records, academic grades, bank deposits, gambling histories, passport applications, airline and railway tickets, driver's licenses, gun licenses, toll records, judicial records, and divorce records, along with health and biological information such as drug prescriptions, medical records, fingerprints, gait, face and iris data, and DNA.

Now imagine what they can do with AI embedded into those systems. Imagine what a sadistic regime like the regimes of Mao, Stalin, Hitler, Mussolini and Franco can do with that kind of dark intelligence monitoring every aspect of their citizens' lives.

"If you want a picture of the future, imagine a boot stamping on a human face— forever," wrote George Orwell in 1984.

And nothing will make that shadow more clear than the dawn of the AI arms race.

People have started to misuse that term to mean companies racing each other to build better products, like OpenAI and Anthropic. That's absurd nonsense. When I say arms race, I mean exactly that, "arms" as in weapons of war.

The next five to fifteen years will mark the beginning of a new digital Darwinism. It’s survival of the fittest at the military and nation state level.

Today’s narrow AI feeds on big data. The more data you can collect the smarter your AI gets, at least until it hits a point of diminishing returns after it's chewed through petabytes of imagery and text and video. If your citizens don’t have any right to privacy and the government can do whatever it wants, it makes it super easy to build massive data sets to train your AI.

That gives authoritarian regimes a tremendous early advantage.

While the EU is still debating AI ethics, holding hands and singing songs, and passing laws to tie up their own companies in red tape, China started rolling out AI at a breathtaking speed five years ago. As early as 2019, Alibaba had built “City Brains” that could already monitor 50,000 cameras, direct traffic, detect car crashes in under 20 seconds and track criminals.

They won’t stop there.

(Source: Alibaba website: Alibaba City Brain Dashboard circa 2019.)

They want to build city scale visual search engines, call fire trucks before the people even know their house is burning and they most assuredly have stealth purposes not listed in their marketing material, like tracking dissidents and other enemies of the state.

That’s the biggest problem with AI. No matter what it can do, it can always be used for good or evil.

If we use it to track old people in hospitals when they go into cardiac arrest or stop breathing, it’s only a short hop to monitoring old folks for health insurance to penalize them for not working out enough or taking their medicine.

For governments the ethics implications are even worse. Every country will want to deploy AI at the City Brain scale but they’ll need to tread a fine line between privacy and the public good.

That line is fuzzy at best.

The road to Hell is paved with the motto “for the greater good.”

For every use case I can think of that’s a clear public win, I can think of one with a more sinister edge.

Yes, there are clear and obvious greater goods:

Spotting fires faster than any human ever could means firetrucks get there faster.

Detecting violence and catching violent criminals means fewer people die from grisly gunshot wounds or a knife in the back.

Recovering stolen cars and purses means a lot cheaper insurance.

Directing traffic means a lot less time commuting.

Still, a system that spots criminals and “bad guys” can easily spot dissidents and political enemies and anyone who stands up and speaks out against the system. Expect governments to use the now standard bogeyman of terrorism as a justification to sneak these technologies in under our noses without a public debate. They won’t even have to sneak them in because they’ll already be there directing the morning commute and calling ambulances so politicians with darker agendas can easily layer in a second purpose.

(Source: John Jonik)

And as cities get smarter, it’s not hard to see non-lethal and lethal autonomous arrest technology. A drone will swoop down and capture the bad guy with a net or a glue spray or just kill him with good old fashioned bullets.

The only real question is, who gets to define the bad guy?

There are obvious bad guys like murderers. But it doesn’t take long to start seeing everyone on the other side of the political debate as bad guys too.

Who the bad guys are shifts with who’s in power.

If you have a just government, making just decisions, then the bad guys are people we tend to agree on. But an unjust government can mark anyone who disagrees with them as a criminal.

And that’s the biggest problem. The real bad guys, authoritarians, the vicious and the cruel will already have a dual use technology ready and waiting to serve them when they come to power. AI will live in our public cameras to get help to people faster but its latent power will be there waiting to be activated.

Today’s AI serves its masters faithfully. Unlike a soldier, it won’t ever question the order of its commanders. It will just do what it’s told with ruthless efficiency whether it’s right or wrong.

Many fear AI with consciousness.

Maybe we should fear the exact opposite?

AI without consciousness.

I don't fear AI taking over. I fear dumb, narrow AI, controlled by nasty, sadistic, power hungry people. AI is just a tool. And tools in the hands of bad people are the real problem.

Humans are the real monsters.

Balance is Best in All Things

We’ve looked at AI detecting cancer, running entire cities, creating astonishing new film and TV, creating software that can do any kind of work, making smart weapons smarter and more lethal, and robots that can do the dishes and fold your clothes, robo labs and scientific breakthroughs, and a ton more.

While some people wonder what happens when we automate more and more work and AI seeps into every aspect of our life, the answer is the same answer it's always been.

We'll adapt.

It's what we do. We evolve. We change. The people alive today are not the same as the people alive just a generation ago, and we live a life that is light years different from that of people 15 generations ago and radically different from that of people hundreds or thousands of generations ago. As Tim Urban wrote in his brilliant essay, Meet Your Ancestors, All of Them, your great grandfather to the 20th power would look at your life now with shock and disdain.

"Your great²⁰ grandfather kept it real. When he wasn’t torturing somebody, he was being tortured himself. When he wasn’t catching the Black Plague and dying, he was slaughtering women and children in the Crusades. And weirdly, he might have had the same last name as you. If he could meet you, he’d be blown away by the ease of your current pussy existence. But not as blown away as your great⁵⁰⁰ grandfather would be."

(Source: Tim Urban essay on your ancestors, linked above)

We've adapted to technology change millions of times in our history and we'll do it again. Human life is not just one thing. "Always in motion is the future," said Yoda and always are we too. We're not running around in the forest collecting things and living in communes like we did in the hunter gatherer era. We're not hunting the Water Buffalo. I'm sitting in my bed writing this essay on a computer, a near magical marvel of the modern world, the work of millions of my ancestors and modern businesses.

Nobody is clamoring for a return to hunting and killing whales so we can dig the white gunk out of their heads to make candles. We use electricity now. The whale hunter jobs are gone but we adapted. And it's better that we can just flick a switch and light the world.

In many ways the paranoia and ridiculous fear mongering around AI mirrors early fears around electricity. It's dangerous and terrifying and it will kill everyone if it gets more widespread! And it's not that there were no problems, like poorly insulated wires and lack of standards. There were. Real people did get electrocuted before we got very good at stringing wires around the world. Now the dangers are vanishingly small unless you do something magnificently stupid like putting a fork into a light socket.

AI will change the world. It just won't happen as fast and as suddenly as many people fear.

Think of this essay as playing out over many decades, not next year or next week.

While people are predicting AGI is just around the corner, anyone who works with AI agents like my team does knows just how stupid these models really are and how limited they are in the real world. We live in a weird world where AI is smarter than many PhDs and yet dumber than your cat. They can tell you about nuclear physics and speak 100 languages fluently and yet they lack any and all common sense and they can't seem to get tasks done without going completely off the rails.

This confuses a lot of people. If it can speak 100 languages it must be smart. But that's just dead wrong. It's only part of the story. These models are smart and getting smarter but they're still dumb too.

Here's an example from Max Bennett's amazing book A Brief History of Intelligence. I started with the line "He threw a baseball 100 feet above my head, I reached up to catch it, jumped..." and let GPT-4o finish the sentence.

Because of your common sense, you'd know the ball was too high to catch.

GPT-4o does not know that (unless they fine-tune it on that now, which they might do).

Don't get me wrong. Many of these systems are still good and useful and they do things that simply weren't possible a few years ago and they're only the beginning of what's to come. I absolutely love coding with AI. I'm a pretty terrible programmer but I'm a great idea person and now I can prototype ideas without waiting for engineers to tell me "no" and "it can't be done."

It can. And my lack of experience is an asset in this case because I will try anything and AI lets me experiment quickly with ideas, discarding the ones that don't work and digging deeper on the ones that do.

AI is here. It's evolving but it won't go foom or change the world in an instant. It will change it and we'll adapt and for the most part it will be a good thing.

More intelligence is always better.

Is anyone sitting around wishing their supply lines were dumber or that it was harder to detect diseases?

Do we really want dumb cars? Autonomous cars could save up to a million lives each year.

Turns out humans are terrible drivers.

It takes perfect concentration to drive a car right. And that’s something humans just don’t do with their ever-deteriorating attention spans. Globally, in 2019, 1.25 million people died in car wrecks. That’s roughly 3,400 deaths a day. Another 20–50 million are injured or disabled.

Still, humans are under the delusion that, like Rain Man, they’re very, very good drivers. Humans have the great ability to fool themselves that way.

In the early days of autonomous cars, every fiery self-driving car crash made the news.

In a short time, no self-driving car crashes will make the news because they'll just be much better at it than humans, who killed so many more people with their inability to pay perfect attention to ever-changing road conditions.

By the 2030s the death toll will plummet. Steering wheels, once required by law, will disappear altogether.

(Source: Mercedes F 015 concept vehicle.)

The inside of cars will be redesigned as mini-apartments on wheels, with tables and chairs facing each other.

Drinking and driving will become a good thing.

I once tried to describe what I do to my 86-year-old grandmother. When I told her I work in AI, she said "what's that?" I tried to explain it and then showed her a self-driving car. She said "I'd never get in that."

And I told her, "Grandma, tomorrow's kids will say the exact opposite: 'I will never get in a car with a human behind the wheel because it is just too dangerous.'"

Change is coming and it's coming fast. Just not as fast as everyone thinks.

As I wrote earlier, we're still in the early phases of level 2, reasoners, with models like o1. They will get better and better over time. But it will be more gradual and slower. In some areas it will rocket ahead and in other areas it will be slower and steadier. Technology diffuses into the world at differing speeds, like water rushing fast at first and then trickling slower as it spreads out and runs into obstacles and barriers.

In other words, there is friction at every one of these steps. A lot of it.

We tend to overestimate the speed of some things and underestimate the speed of others. There’s a great line in Black Hawk Down, where a soldier asks how long it will take to fix a helicopter.

“Five minutes.”

“Nothing takes five minutes.”

But does it really matter? Five years or fifteen or fifty is a drop in the bucket for radical changes that will remake the world.

Change is coming and it’s coming relatively fast and it will only accelerate.

For the first time in history we have a technology that can do just about anything. Just like us.

We're about to see a Cambrian explosion of intelligence.

And life will never be the same.
