The Age of Industrialized AI

Where is AI Going Over the Next Two Decades? The Sci-Fi Story that AI Will Destroy All the Jobs Will Slowly Fade as AI Becomes More Deeply Collaborative and Co-Creative.

It’s 2033 and you're a top notch concept artist in London building a game with 100 other people.

The game looks incredible. It's powered by Unreal Engine 9 and it's capable of photo-realistic graphics in real time on the PlayStation 7 and near perfect physics. It's got a character AI that can talk with anyone in an open ended way and still stay within the plot guidelines to keep players hunting down magical rubies and the divine sword of radiance.

Ten years ago it would have taken a team of 1,500 to 5,000 to make this giant game. Now you can do it with 100 people. But that doesn't mean less work and fewer games. It means more. A lot more. We used to get 10 AAA games a year and now we get 1,000.

Even with 5,000 folks you probably couldn't do it back in 2023. That's because it's almost completely open ended, with real time procedural storytelling that creates nearly infinite side missions, personalized music that matches the listener's tastes, and players control it with hand gestures and voice commands. The controller is gone.

AI helped write the code for the UE9 game engine, helping Unreal programmers do four releases in ten years, instead of a single release every decade. AI refined much of the original game engine code and made it smaller and more compact, while auto-documenting it in rich detail. AI also helped design the PlayStation 7. Chip design models crafted the best layout of the transistors on the neural engine and graphics chip inside the PlayStation. But where AI really accelerated the game development process was in design and asset creation workflows.

As the lead artist, you've already created a number of sketches with a unique style for the game, a cross between photo-realism and a classic look from the golden age of anime sci-fi. The executive creative director and the environment designer signed off on it as the official look of the game. You fed the style to the fine tuner and the AI model can now rapidly craft assets that share that look and feel.

Art isn't dead. It's become collaborative. Nothing can replace the human artistic spirit but it now works hand in hand with state of the art generative AI in a deeply co-creative effort.

Now you can move much faster. You quickly paint an under sketch of a battle robot for the game plus a bunch of variants that you were dreaming about last night. You feed it into your artist's workflow and talk to it and tell it you want to see 100 iterations in 20 different variations. It pops them out in a few seconds and you swipe through them.

(Source: Author, Stable Diffusion 2.1 768)

"Never mind," you say. "Go back and give me 200 iterations."

The natural language and logic model baked into the app understands what you want and does it instantly.

Two seconds later the high rez iterations are ready. The 27th one looks promising so you snatch it out of the workflow and open Concept Painter and quickly add some flourishes: a different face mask that the generative engine didn't quite get right; a new wrist rocket. You erase the laser shoulder cannon and replace it with an energy rifle and then you feed it back to the engine.

(Source: Midjourney v4 and Stable Diffusion v2.1 inpainting mashup)

It pops out 50 more versions and you pick the 7th because it's nearly perfect and you just need to fix a few things. You rapidly shift it to match the style of the game assets with another step in the AI pipeline and then you check it for artifacts. You fix a few problems but now it's good to go, so you send it off to the automatic 3D transform.

It pops out into the 3D artist's workflow, fully formed just a few minutes later. The 3D artist, working from Chiang Mai, Thailand, has to fix a few mangled fingers and some clothing bits that don't flow right and then it's ready to go. He kicks it off to the story writer working out of Poland.

(Source: Author, Midjourney v4)

Seeing the new character gets her inspired. She quickly knocks out a story outline in meta-story language and feeds it to her story iterator. It generates 50 completed versions of the story in a few seconds. She starts reading. The first three are crap but the fourth one catches her and she finds herself hooked to the end. It's good but it needs a little work with one of the sub-characters so she rewrites the middle and weaves in a love story with that character and then feeds it back to the engine for clean up. The new draft comes back and it reads well so she fires it off to the animator in New York City.

He pulls up the draft and the character and tells the engine to start animating the scene. In five minutes he's got story stills for the major scenes in the story and branching possibilities for character actions the player might take. Most of them are good but the third scene is off, so he does some manual tweaking and then asks the engine to iterate again. This time they come back good and he sends it all off to the engine to complete the animations and connective tissue linking the scenes.

Welcome to the Age of Industrialized AI.

To see how we got here, we just have to roll the clock back to 2023 to see how it all started.

The Great Ravine

The great acceleration of AI started with a collapse.

It was 2023 and we saw a big collapse and consolidation of MLOps companies.

It happened because in the last decade Big Tech yanked AI out of the labs and universities and into the real world. By 2010 they were already putting AI into production but to do it they had to build all their infrastructure from scratch. It's one thing to build a model at a university and write a paper but it's another thing altogether to scale it, optimize it and serve it out to millions or billions of users.

The engineers writing that AI infrastructure for Big Tech saw an opportunity. They figured everyone would want to build models. So they left and started their own companies to bring pipelines, distributed training engines, and experiment tracking to the masses, infused with a surge of capital during the go-go "everything" boom days. Those newly minted MLOps masters all made the same bet. They figured it wouldn't be long before every company in the world had a small army of ML engineers, cranking out models.

There was just one problem. It didn't happen.

Most of those companies struggled to find customers while barely keeping their heads above water.

What happened?

They forgot one thing.

Real machine learning is incredibly hard.

Building some XGBoost models or doing some churn prediction is within reach of most teams but it's another thing altogether to build out large language models like Chinchilla or GPT-4 or generative diffusion models like Midjourney v4 or Stable Diffusion. It takes massive amounts of data that can't be labeled with brute force, millions of dollars worth of GPU compute, and a lot of talented and expensive people.

In short, it's just too complicated and too expensive for the average company to build out a supercomputer, hire an ML team, a data team, an MLOps team and build a top of the line model.

Most companies will never train advanced AI from scratch.

The vast majority of companies won't even interact with AI at a low level. That's like writing in Assembler, essential for a small subset of tasks, but way too complicated for most projects. Companies just don't have the time, money and people power to ingest billions of files, label them, do experiments, train a model, deploy it, optimize it and scale it.

And that means 2023 marks a major turning point for MLOps companies. The smart and well designed platforms will survive and adapt to the new reality but many of them just won't make it. The macroeconomic picture is scary at the moment, with sky high inflation, a property bust in China, an energy crisis, an invasion of Ukraine putting serious pressure on food prices and more.

We'll see a lot of mergers, acquisitions, fire-sales and flame-outs this year. That's all right because it's accelerating the rise of the canonical stack of AI. People will start to converge on a small subset of the best tools.

Call it the Great Ravine. Many companies will not cross the chasm. But the ones that do will form the bedrock of future AI applications and a new stack that most AI driven businesses use by default.

Think of it as the LAMP stack for AI.

Once we had the LAMP stack, we got Wordpress and once we got Wordpress, we got high level drag and drop editors like Divi that make it easy for anyone to design a cool looking website.

In other words, we moved up the stack to a higher level of abstraction. I'm a so-so website designer but I've got a good art direction sense and that lets me drag and drop websites even though I can't write a line of CSS. Each layer of abstraction is all about accessibility.

The history of technology is abstracting up the stack. Assembler leads to C, which leads to C++, which leads to Python and Go and on and on. Abstract away more of the low level functions and you've got more people who can play in the sandbox. It's a lot easier to learn to write Python than machine code.

The same thing is happening now in AI and it's happening a lot faster than anyone expected. We're already moving up the stack to fine tuning models, chaining them together and stacking them into wonderful workflows and building apps around them.

A lot of those companies and open source tools won't last. But the bloodletting will just make the winners stronger. The lower level tools will mature and morph. They'll stop trying to serve every use case on the planet from basic Bayesian analysis to self-driving cars and they'll focus on two kinds of companies, tuning the stack to fit their needs:

AI driven businesses

Foundation model companies

AI at the Core and the Rise of the Foundation

AI driven businesses are companies where the business model simply couldn't exist without AI.

Think about a company like Boeing, where they might have AI/ML in five different areas like the materials science division and for simulating aerodynamics and doing order tracking. But their business is still making airplanes, which means they're not AI driven. You can make airplanes without AI because they've been doing it for over a hundred years. AI will make building planes faster and safer but that's still a whole lot different from an AI driven business model.

Take something like photo-realistic virtual people generation. If your company specializes in spinning out 1000s of virtual people so an online clothing company can showcase their entire catalog of 100,000 items in every possible combination of hat, shoes, dresses and pants, that's an AI driven business model. You couldn't generate those virtual fashion models with traditional code and the company couldn't hire enough real people to shoot every clothing combination.

(Source: Author, in Stable Diffusion 2.1 768)

We'll see thousands of kinds of AI driven businesses in the coming years doing things totally impossible with regular code. Expect to see musical hit generators, on the fly video editors, rapid concept designers that can go from image to 3D asset in the blink of an eye, custom drug designers for diseases that were too small to warrant R&D in the past, advertising and stock photo creators, and so much more. We're only beginning to understand what we can do with powerful models. As coders and hobbyists everywhere get their hands on them for the very first time they'll come up with applications that no single company can imagine on their own.

Now instead of these powerful models hiding behind the walls of a small group of powerful organizations, state of the art models are getting into everyone's hands faster. An open model like Stable Diffusion already unleashed an unprecedented explosion of new creativity, with new tools coming out nearly every day, and every major open foundation model will do just that: spark new waves of ideas and possibilities. There are so many tools and new business ideas that it's almost impossible to keep up with them all. The array of potentially game-changing applications for business is amazing: prototype synthetic brain scan images that can drive medical research, on demand interior design, incredibly powerful Hollywood style film effects, seamless textures for video games, new kinds of rapid animation that can drive tremendous new streaming content, on-the-fly animated videos and books, concept art, plugins for Figma and Photoshop, and much more.

And that takes us to the second kind of company that MLOps companies will focus on: foundation model companies.

Those are the companies dedicated to building out the most cutting edge models in the world, foundation models. They're big, powerful and incredibly expensive to build and train. Companies like OpenAI, Stability AI, Jasper, and Midjourney are just the beginning. We'll see the rapid proliferation of foundation model companies looking to build the most dynamic and incredible models in the world.

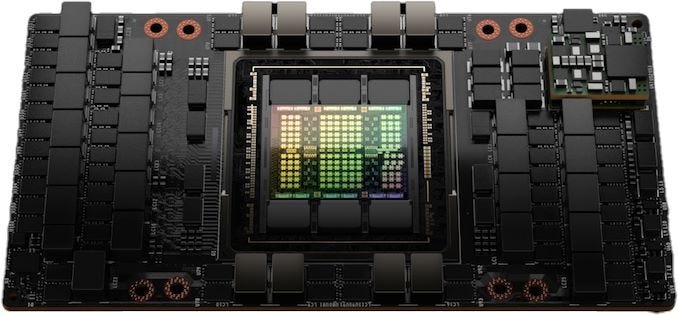

These massive models are simply out of reach for most companies. They're not going to hire advanced machine learning researchers and label a petabyte of data. They're not building a supercomputer cluster by rolling out Nvidia A100s or H100s, where each card costs $20,000 retail. And that's just to get started. They have to keep investing in the hardware to keep building those models, which means supercomputers that get swapped out every year or two.

(Source: Nvidia H100 TensorCore Chip)

But have no fear that foundation model companies will be the only ones building stunningly powerful models in the next few years. They won't, and that's because as faster AI cards hit the market over time, the models that were cutting edge last year or a few years ago will come down in price. That's where smaller companies will swoop in. AI driven businesses will crank out older versions of those models and imbue them with brand new capabilities and apps nobody thought of yet. As the years race forward, an ever increasing set of incredible capabilities will start at the top and then cascade down.

Even then most people still won't train models from scratch if they're not AI driven businesses. Most of us will simply run models via API or take an existing model and run a fine tuned version locally.

And it won't just be training that's out of the reach for most companies. When it comes to serving mega-models, inference gets real expensive real quick.

The more people who join your platform, the more GPU or custom AI ASIC power you need to serve that model at scale. That means you're building out a small supercomputer just to serve your models too. Even worse, you're not just building one, but probably multiple distributed versions so you can serve requests all over the world at light speed.
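To make that scaling argument concrete, here is a rough back-of-the-envelope sketch. Everything in it is an assumption for illustration: the function name `gpus_needed`, the traffic numbers, the peak padding factor, and the simplification that one request ties up one accelerator for its full duration with no batching. It is not a capacity-planning method from any real provider.

```python
import math

def gpus_needed(daily_users, requests_per_user, seconds_per_request,
                regions=1, peak_factor=3.0):
    """Rough accelerator count for serving a model (hypothetical inputs only)."""
    seconds_per_day = 24 * 60 * 60
    # Average number of accelerators kept busy if traffic were perfectly even.
    avg_busy = daily_users * requests_per_user * seconds_per_request / seconds_per_day
    # Traffic is never even, so pad for the daily peak (assumed multiplier),
    # then split that load across regions and round up inside each one.
    per_region = math.ceil(avg_busy * peak_factor / regions)
    # Every region needs its own hardware to keep latency low.
    return per_region * regions

# Hypothetical service: 1M daily users, 10 requests each, 2 GPU-seconds per request.
small = gpus_needed(1_000_000, 10, 2.0, regions=3)
# Same service after growing to 10M daily users.
large = gpus_needed(10_000_000, 10, 2.0, regions=3)
print(small, large)
```

The point of the sketch is only the shape of the curve: the hardware bill grows roughly in step with the user count, and every extra region adds its own rounding overhead.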

To understand just how expensive inference can get, all you need to do is take a look at why Google built their custom neural-chip, the TPU or tensor processing unit. When they rolled out voice recognition on Android back in 2011, they calculated that if everyone used Google voice search for just three minutes a day, it would force Google to double their datacenters. Rather than do that they created their own custom chips to handle the load.

Since then Nvidia's chips have come to dominate training and inference and more companies are rushing to build out the next generation of neural chips, everyone from Cerebras, to Graphcore, to Amazon and Google, along with a surge of stealth startups too. The race to build the perfect AI architecture is on and that will make it easier for more companies to do massive amounts of inference but it still won't be cheap for some time and it's incredibly hard for the average company to handle.

That's why we're likely to see dedicated inference scaling companies, and out of the battle for mindshare we can expect to see an Akamai of AI inference win out in the end. That company will pour money into datacenters around the world to deliver the smallest possible latency to anyone, anywhere. Eventually, they'll build their own chips, aided and augmented by AI itself, and custom manufacture them with TSMC or Samsung or through Intel's new foundry strategy that emerged out of the chip wars between China and the US.

Eventually AI Akamai will have a moat so huge that most of the world will run their models on that platform and on a few smaller competitors from the cloud behemoths. Almost nobody will run inference themselves. Powerful edge processors on our phones, glasses and smart devices will offload some of the work but most of it will come from giant chip hungry inference mega-companies.

Chip Wars

What are the chip wars?

China and the United States are vying for world AI chip domination.

Microchips power everything from our cars, to our phones, to our computers, to the most advanced military systems, to key medical equipment, to tractors and vacuums. In short, every major modern convenience and tool. China spent more on semiconductors than it did on oil in 2020, buying $350 billion worth of chips that year.

At stake is the future of militaries and technological might. Smart weapons dominate the modern battlefield and AI powered weapons will own the battlefields of tomorrow. Behind all those smarts are chips. Control the chip supply chain and you control the modern world. Right now the US controls it, along with its allies in Taiwan, Korea, the Netherlands, Germany and Japan. China wants to disrupt that and control it themselves.

Chinese leadership sees it as a historical chance to leapfrog the US and take pole position on the world stage.

They're right.

Whoever wins at AI sets the stage for the next century.

That's because AI will quickly become one of the most important industries on Earth, following just behind the twin powerhouses of Energy and Agriculture. There's not a single business that won't benefit from getting smarter so it's an area every country wants to lead because it marks a major turning point in world history.

The fight is just getting started. It's a war of espionage at the highest levels, as both sides try to hack their way into what the other side has brewing in secret military labs. But it doesn't stop there. It touches everything from finance, to politics, to business, to law, to materials science, to biotech and pharma, to war and energy.

In a speech in 2017, Chinese president Xi Jinping said that China must be “gaining breakthroughs in core technology as quickly as possible.”

He was talking about semiconductors most of all. China is critically dependent on American designs and an American led global coalition. He wants to break the old order and remake it so it is more closely aligned with China.

“We must promote strong alliances and attack strategic passes in a coordinated manner. We must assault the fortifications of core technology research and development…. We must not only call forth the assault, we must also sound the call for assembly, which means that we must concentrate the most powerful forces to act together, compose shock brigades and special forces to storm the passes.”

- Miller, Chris. Chip War: The Fight for the World's Most Critical Technology (p. 248). Scribner. Kindle Edition.

When it comes to AI chips, Nvidia is the undisputed AI chip champion, a US based company that the Chinese rely on for their most advanced supercomputers. The US also has most of the world's best chip designers, around 57%. Apple, Google, Amazon, Tesla and Nvidia all design their own chips but they make exactly zero of them. Those chips all get printed by the Taiwanese company TSMC, the most powerful semiconductor manufacturer in the world. TSMC is fed by a global network of irreplaceable companies in Germany, the US, the Netherlands, Japan and Korea. For instance, one Dutch company, called ASML, makes 100% of the world’s Extreme Ultraviolet Lithography (EUV) machines. They're the only company in the world to make them and each unit costs $100M. The next generation ones will cost $300M in 2025.

This year, the US kicked off a huge spending spree with the Chips and Science Act to try to bring more fabs back to the States. For years, the US reigned supreme with integrated design and fabrication companies, like Intel, during the heady days of the WinTel dynasty of Microsoft and Intel. But over the last few decades the world moved to the fabless model, where companies design chips in-house but outsource the fabrication more and more, leading to the rise of TSMC.

It's estimated that TSMC produces 90% of the world's most advanced chips and about 40% of the less advanced chips that power everything from your toaster to your car to the router in your house. With China saber rattling and poised to take Taiwan back by any means necessary, that's forced the US to try to reignite home grown fabs.

The US just took more drastic steps too. It stopped Nvidia from selling its most cutting edge chips, the A100s and H100s, to China.

The Economist called Taiwan the most dangerous place in the world because an invasion from the mainland would smash the world economy in an instant. Cutting the US off from chips would likely mean World War III because it would bring everything from the car industry, to the computer industry, to the military industrial complex to a grinding halt and cripple the modern economy. Taiwan and TSMC sit at the heart of the global tug-of-war to own AI and chip making for decades.

The Chinese aren't sitting still. They've got a flurry of companies looking to beat Nvidia and make the most powerful chips at home. "In the first five months of 2021, about 164 Chinese semiconductor companies received investments with total financing of more than 40 billion yuan," writes Zhang Erchi, for Nikkei Asia. The blockade is likely to turbocharge Chinese investment in home grown chips and it looks like it's already happening now. The Chinese just announced a package of $143 billion of investment in chips as a response to the blockade and in an attempt to match the $280 billion behind the Chips and Science Act from the US.

In the end there's no "winning" this war but breakthroughs in faster and smaller chips will lead to mega advantages for any nation that develops the technology first. Their military will get smarter and more vertically integrated and so will their weapons systems and the international market will buy from whoever makes the best chips. It's a battle that will rage for decades, if not centuries.

Classic, electronic transistor based chips are only the beginning. As we move beyond electricity to photonic chips, and beyond silicon to graphene and biological materials, the war will only heat up. Each new breakthrough in chip architecture offers another country or company a chance to race to the front of the pack, shift the center of the chip world order, and become the center of an intelligence economy.

The AI Factory Boom

A chip war for global AI supremacy between China and the US, new kinds of chips proliferating, the consolidation of MLOps companies into a LAMP stack for AI, a coming burst of dedicated training and inference powerhouses, and the rise of AI driven businesses and foundation models as a service (FMaaS) all mean one thing:

The dawn of the age of industrialized AI.

That's where we start to see the rapid acceleration of AI chips, software, ideas and uses as companies around the world pour money, people and time into the pursuit of ever smarter software and machines.

The spark is already lit. AI busted out of the walls of Big Tech R&D labs. Now regular people and traditional coders and business people are getting their hands on super powerful models and taking them in bold new directions. Synthetic medical images. Image to 3D. Mega music creators. Powerful virtual assistants. Self-driving tractors and trucks. AI driven businesses will sprout like mushrooms after a long rain.

Those businesses will turbocharge development of AI, rapidly advancing and refining the ideas of the research labs. As more and more product people, technical people, and traditional coders work with super charged models they'll take us in directions none of the researchers could have possibly imagined. They'll make the models smaller and faster, and find ways to weave them together with other traditionally coded apps, all while figuring out better ways to put guardrails on them so they deliver more and more consistent results.

We're on the verge of an incredible set of brand new workflows and ways of interacting with software and the world. Take something like ChatGPT. It only took a few days before people started using it to write prompts for Midjourney v4 and Stable Diffusion, chaining together AI apps into a new kind of workflow. We're going to have an infinite regress of AI working with AI.


Think of it as Zapier on steroids. Plug a bunch of traditional apps together with AI apps and you've got incredible new workflows. People will chain together models and traditional hand-coded apps into amazing and powerful, never-before-seen applications. Stack AI on top of AI and it's turtles all the way down.

(Source: Author, Midjourney v4)

The AI concept iterator will work hand in hand with the artists. They'll iterate together in a co-creative collaboration. They'll draw and paint and the AI will give them incredible variations that take them in countless new directions. When it's ready they'll transform it to 3D instantly and automatically apply textures. It's already happening with early versions of plugins that will become standard parts of the workflow over the next few years.

I had ChatGPT create a story for me about a biopunk soldier and his mech facing off against an invasion of cephalopod aliens and then turn it into key scenes. It gave me this:

The biopunk soldier stands atop a crumbling city skyline, his combat mech towering behind him as he readies his weapons for the approaching alien horde

The combat mech unleashes a barrage of missiles and gunfire, decimating a group of invading cephalopod aliens as they try to breach the city's defenses

The biopunk soldier faces off against a massive cephalopod alien, its tentacles writhing as it attempts to overpower the soldier and his combat mech

Amidst the chaos of the alien invasion, the biopunk soldier and his combat mech fight their way through the streets, fighting to protect the civilians caught in the crossfire

In a desperate last stand, the biopunk soldier and his combat mech take on the alien leader, a towering cephalopod with powerful psychic abilities

I edited the language of the prompts (as some of it was too complicated for Midjourney to understand) and added things like this to each one: "extremely detailed, high quality, 8k, hyper realistic, studio lighting --v 4 --ar 3:2 --q 2"
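That last step is plain string work, so it is easy to automate. Here is a minimal sketch, assuming you paste the results into Midjourney by hand. The helper name `build_prompt` and the two constants are hypothetical; the style text and flags are the ones quoted above, and nothing here calls any Midjourney or ChatGPT API.

```python
# Style text and flags quoted in the article; the names are made up for this sketch.
STYLE_SUFFIX = "extremely detailed, high quality, 8k, hyper realistic, studio lighting"
MIDJOURNEY_FLAGS = "--v 4 --ar 3:2 --q 2"

def build_prompt(scene, style=STYLE_SUFFIX, flags=MIDJOURNEY_FLAGS):
    """Turn one scene description into a single-line image prompt."""
    # Collapse stray whitespace and drop a trailing period so the suffix reads cleanly.
    scene = " ".join(scene.split()).rstrip(".")
    return f"{scene}, {style} {flags}"

# Two of the scenes ChatGPT produced, shortened by hand.
scenes = [
    "The biopunk soldier stands atop a crumbling city skyline, his combat mech towering behind him",
    "The biopunk soldier faces off against a massive cephalopod alien, its tentacles writhing",
]

prompts = [build_prompt(scene) for scene in scenes]
for prompt in prompts:
    print(prompt)
```

Chain a language model in front of this and an image model behind it and you have the smallest possible version of the AI-to-AI workflow.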

Midjourney gave me back images like this:

It won't be long before multiple diffusion models are generating so many fully realized pictures that they become perfect synthetic data machines. The AIs will feed work back to themselves, learning from data they produce, so they learn faster and in a more controlled way. That synthetic data will come out with perfect labels already generated and ready to go, which will super-accelerate the data-centric approach to AI.

All this will spark a massive race around four key machine learning techniques until they get totally maxed out:

Scale

Search

Reinforcement learning

Continual learning

The combination of all these will deliver tremendously powerful new capabilities over the coming decade.

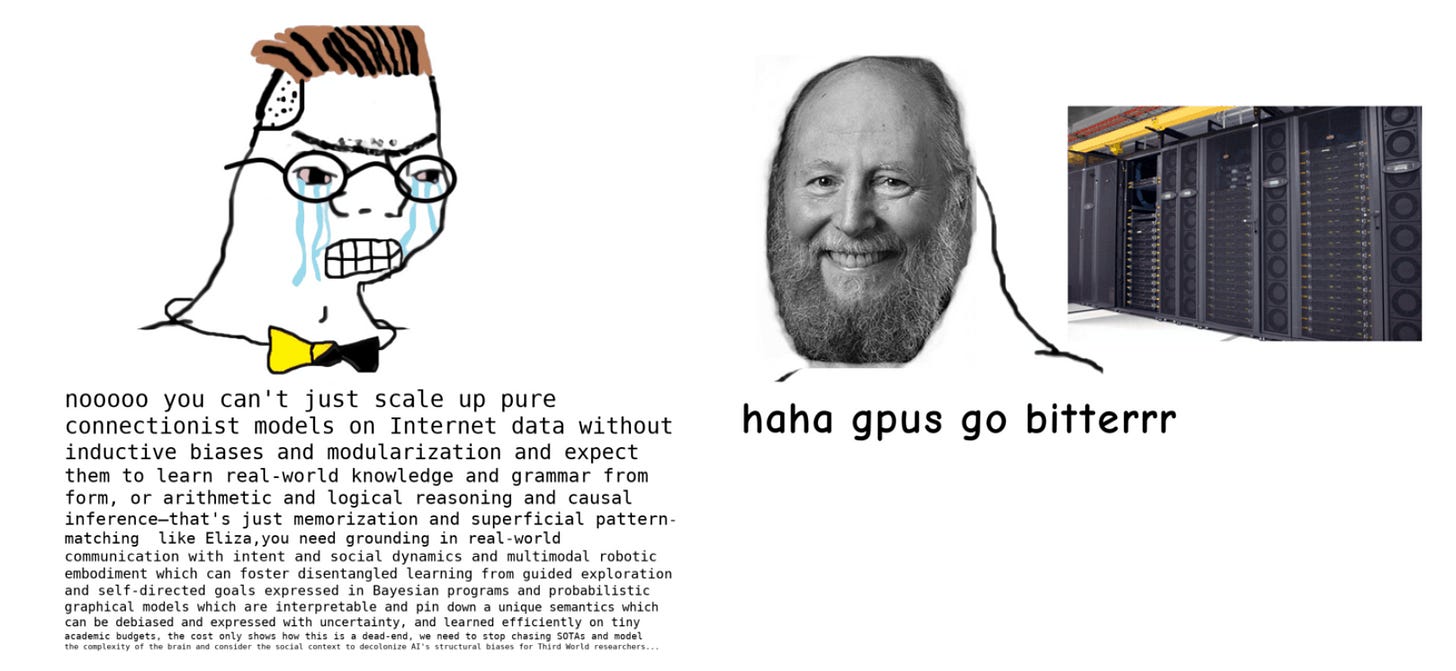

The first two techniques come to us from the "bitter lesson" essay by AI researcher Richard Sutton.

The bitter lesson is simple: The only two things that have ever really worked in AI are scale and search.

Scale is throwing more compute and data at machines and letting them learn on their own. Search is looking up information or hunting through learned information/a set of options to inform the process or augment it.

It's a lesson that AI researchers tend to bitterly resent and resist even as they discover it's true again and again. Why do they resist it despite it working over and over?

Because it feels too much like blind evolution.

Ironically, every AI researcher believes in a kind of intelligent design and the unique nature of the human mind whether they know it or not. Accepting that intelligence might just come from jamming together a lot of neurons and letting the system brute force figure it out on its own feels downright barbaric.

That's because the other major approach to AI is the one every researcher secretly loves. It's where you add lots and lots of human hand coded ideas and domain knowledge to a system, making an expert system. It usually works well in the beginning but quickly becomes a bottleneck and limiter on the system. Almost every time, as the researchers remove that human framework the model gets better, smarter and faster.

We hate to learn this because we're people and we like to imagine that we're unique and special in the Universe and endowed with a unique spirit.

As Sutton writes:

"This is a big lesson. As a field, we still have not thoroughly learned it, as we are continuing to make the same kind of mistakes. To see this, and to effectively resist it, we have to understand the appeal of these mistakes. We have to learn the bitter lesson that building in how we think we think does not work in the long run. The bitter lesson is based on the historical observations that 1) AI researchers have often tried to build knowledge into their agents, 2) this always helps in the short term, and is personally satisfying to the researcher, but 3) in the long run it plateaus and even inhibits further progress, and 4) breakthrough progress eventually arrives by an opposing approach based on scaling computation by search and learning. The eventual success is tinged with bitterness, and often incompletely digested, because it is success over a favored, human-centric approach."

"One thing that should be learned from the bitter lesson is the great power of general purpose methods, of methods that continue to scale with increased computation even as the available computation becomes very great. The two methods that seem to scale arbitrarily in this way are search and learning."

But it's a lesson the most powerful AI companies of tomorrow will embrace.

Think of the version of AlphaGo that beat Lee Sedol. It was basically nothing but scale and search. It learned from millions of human games and from billions of games it played against itself, which gave it a big database of ideas it could look through to help guide its decisions. It also had a ton of compute that Google could throw at it. That was all it needed. The later version, AlphaGo Zero, which smashed the original AlphaGo that smashed Lee Sedol, didn't even learn from human games. It learned from playing itself over 70 days, in the process figuring out ways to win that no human had ever considered in over 2,500 years of play. No human matches needed. Now people study AlphaGo Zero matches for ideas in a self-reinforcing collaboration between people and machines, a process that will become never-ending as we get smarter and the machines get smarter.

But there's still hope for us yet. The artists and AI engineers may be right. There is something special to the human spirit that we can give to the process.

Reinforcement learning.

Reinforcement learning will become more and more essential for keeping our AIs on the rails. It's a bit like how we train pets and children. Think of it as reward and punishment for AI.
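To see the reward-and-punishment idea in miniature, here is a toy sketch. It is a simple epsilon-greedy bandit, which is far simpler than the human-feedback training used on real language models, and it is not how any production system is trained. The action names, the reward values and the `train` function are all made up for illustration.

```python
import random

def train(rewards, steps=200, epsilon=0.2, seed=0):
    """Learn which action earns the most reward by trial and error."""
    rng = random.Random(seed)
    actions = list(rewards)
    values = {a: 0.0 for a in actions}   # running estimate of each action's reward
    counts = {a: 0 for a in actions}
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(actions)              # explore: try something at random
        else:
            action = max(actions, key=values.get)     # exploit: repeat what has paid off
        reward = rewards[action]                      # +1 is a treat, -1 is a scolding
        counts[action] += 1
        # Incremental average: nudge the estimate toward the reward just received.
        values[action] += (reward - values[action]) / counts[action]
    return values

# Hypothetical feedback: punish bad behavior, reward the behavior we want.
rewards = {"made_up_answer": -1.0, "off_topic_reply": -1.0, "helpful_answer": 1.0}
values = train(rewards)
best = max(values, key=values.get)
print(best, values)
```

Nobody tells the learner which action is right. It only sees the score after each attempt, and that is enough for the punished behaviors to fall out of favor.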

Reinforcement learning is incredibly complex but when you look at something like ChatGPT, it's really just a variant of InstructGPT, where humans got in there and talked with the model and trained it to better align with what we want. Reinforcement learning is hard and complex but we're just getting started with it and it holds one of the best possibilities of solving the "alignment problem" in AI.

Too many people conflate the alignment problem with the idea that "AI doesn't have our values." That's not it at all. In fact, AI may have too many of our values, especially the ones we don't like to admit we have, the ones that led to us fighting, killing, and dominating others for our entire history.

Alignment is simpler. It's getting the model to do what we want more often.

In other words, as Wikipedia puts it, alignment is about steering AI systems towards their designers' intended goals and interests. Those interests will vary widely in the industrial age. It could mean getting a model to only spit out images of trucks, making sure fashion designs fit the style of the designer, or making sure that chat bots don't make up really plausible sounding answers that are just plain wrong. It's about keeping AI within guardrails. It's not about universal values, which are impossible to code, it's about the values of the creators behind the system and what they want.

RL will scale up in the age of industrialized AI, as billions of people interact with these systems and train them just by using them and occasionally giving well-structured feedback through a pop-up that they won't even realize is being used to train the machine better. To do it, we'll get smarter at dealing with how adversarial attacks work in a large, chaotic system. That will help us screen out the bad actors trying to deliberately subvert the training to their own ends, which happens in any distributed system. The second OpenAI put ChatGPT on the web, people immediately found ways to exploit its logic, flipping on its ability to search the web and give answers that the OpenAI team tried to filter out. People are immensely creative at finding loopholes in systems fast but RL will use that creativity to train the models better and better.

So we've got scale, search and RL, but the last piece, the one that will make the biggest difference in AI once researchers crack the problem wide open, is continual learning.

I talked about continual learning in depth in The Coming Age of Generalized AI. Continual learning looks to solve the problem of "catastrophic forgetting" by making it so that when you teach the model something totally new, it still remembers everything it learned in the past.

What's catastrophic forgetting?

If I train a model to recognize dogs and then later I go back and teach it only about cats, it can forget most of what it knew about dogs.

It's due to the way neural networks learn, constantly updating and shifting around their weights as they're trained. But what if you could teach a model about dogs at one point and then cats later and it still remembered everything it knew about dogs?
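You can watch forgetting happen in a few lines of code. What follows is a toy sketch, not a real vision model: a tiny logistic regression with three shared weights, and two made-up tasks ("task A" and "task B" standing in for dogs and cats) built from synthetic 2-D points. The cluster centers, learning rate and epoch count are assumptions chosen so the two tasks pull the shared weights in conflicting directions.

```python
import math
import random


def sigmoid(z):
    """Numerically safe logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def make_task(rng, center_pos, center_neg, n=200, noise=0.3):
    """Synthetic task: 2-D points clustered around one center per label."""
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        cx, cy = center_pos if label == 1 else center_neg
        data.append(((cx + rng.gauss(0, noise), cy + rng.gauss(0, noise)), label))
    return data


def train(weights, data, epochs=50, lr=0.1):
    """Plain SGD on the logistic loss; every task updates the same weights."""
    w0, w1, b = weights
    for _ in range(epochs):
        for (x0, x1), y in data:
            g = sigmoid(w0 * x0 + w1 * x1 + b) - y  # gradient w.r.t. the logit
            w0 -= lr * g * x0
            w1 -= lr * g * x1
            b -= lr * g
    return (w0, w1, b)


def accuracy(weights, data):
    w0, w1, b = weights
    hits = sum((w0 * x0 + w1 * x1 + b > 0) == (y == 1) for (x0, x1), y in data)
    return hits / len(data)


def run_demo(seed=0):
    rng = random.Random(seed)
    task_a = make_task(rng, center_pos=(2.0, 0.0), center_neg=(-2.0, 0.0))
    task_b = make_task(rng, center_pos=(-3.0, 1.0), center_neg=(3.0, -1.0))
    weights = train((0.0, 0.0, 0.0), task_a)  # learn task A first
    acc_a_before = accuracy(weights, task_a)
    weights = train(weights, task_b)          # then train on task B only
    return acc_a_before, accuracy(weights, task_a), accuracy(weights, task_b)


if __name__ == "__main__":
    before, after, acc_b = run_demo()
    print(f"task A accuracy after learning A: {before:.2f}")
    print(f"task A accuracy after learning B: {after:.2f}")
    print(f"task B accuracy after learning B: {acc_b:.2f}")
```

The model aces task A, then aces task B, and in between it loses task A, because nothing in plain gradient descent protects the weights the first task relied on. Continual learning research is about adding that protection.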

With a massive foundation model, like Stable Diffusion, you can fine tune it, meaning you can train it on a much smaller subset of people, places or styles that it doesn't know about. It has so many parameters that it doesn't matter if some of those get overwritten. Fine tuning adds new capabilities to the existing model, but it only goes so far. If the original model was trained on billions or hundreds of billions of images, you can fine tune it with 10 thousand images or even a million images, but the more images you add, the more it destroys the original model and you start running into problems. You can't create a totally different model with fine tuning, only a tweaked model that can do some seriously cool things, just not something totally new.

Continual learning has huge implications for every kind of AI. Imagine a robot that learned to do the dishes and then learned to fold clothes and take the dog for a walk. The model would quickly become a master of a single domain like taking care of a house. The models in the robot might never learn to compose poetry or design skyscrapers but it's still an incredibly powerful machine that can do multiple tasks easily, while remembering everything it's already learned.

Expect foundation model companies to invest heavily in continual learning and take it from theory to reality in the coming years. Continual learning will give foundation model companies a tremendous moat. If they can train a model and then continue adding new data and information to it over time, that model only gets smarter and smarter. Eventually few models will be able to compete with it because it's learned from such a vast and diverse archive of information over a long period of time.

If one company has a model trained on 10 trillion images over five years, then even getting started as a competitor would require 10 trillion images just to keep up. It doesn't even have to be about the amount of information. The quality of the data matters just as much. There are huge, private caches of data in the world. If someone trained a music generation model on the combined catalogs of Sony, Warner Music, Universal, BMG and Interscope, nobody else would stand a chance of building a better music generator because the model would have such a rich and deep knowledge of the subject that other models just couldn't match it without a similar treasure trove of music.

Most AI research companies started with a focus on building safe and powerful AGI but no company will get to AGI by raising a few billion dollars and starting with AGI. There are just too many steps in between where we are now and fully intelligent systems. We need new techniques, new algorithms, better data, much faster chips and software systems to support the models and more.

We'll get to AGI when companies shift their focus to industrializing the capabilities of the models we can build now, which are not even close to AGI but which are still amazing and have incredible powers. When the industry is worth hundreds of billions or trillions of dollars and we've maxed out the best business models for funding mega-supercomputers, that's when we get to something closer to generalized AI and then maybe AGI.

Imperfect Perfection

We're rapidly approaching a day where AI surges into every aspect of our lives. It will run logistics, transportation, drive trucks and cars, create art and stories, answer questions and act as a personal assistant and therapist and friend. It will become our interface to the world, speed up how we make games and movies and how we find information and how information finds us. It will discover new drugs and new materials breakthroughs. It will design chips and surge into robots that clean up our house and take our dog for a walk.

Of course, it's got miles to go before it gets there. Don't let anyone tell you differently. The AI critics are right that it's not there yet. AI has much to learn. Robots aren't very smart and can't learn new tricks. Much of AI art hasn't crossed the uncanny valley. For art models to weave their way into prototyping tools they've got to get much more consistent at putting out something awesome much more often. People saying that ChatGPT puts out a lot of wrong answers with total confidence are absolutely right.

But don't let the critics and the naysayers and the doomsayers fool you either. Folks like Gary Marcus get positively giddy every time they point out a flaw in AI. Marcus takes it as a given that current techniques are largely worthless. That's just short-sighted and stupid. The techniques we have today will grow and get better and get augmented by new techniques.

The flaws don't take away from the fact that AI can already do amazing things. AlphaGo smashed Lee Sedol at one of the oldest games in the world. The most touching moment in the AlphaGo movie is when AlphaGo plays a 1 in 10,000 move and the commentators are initially stunned and confused, only to realize it was "a beautiful move." Two games later, Sedol comes back with his own beautiful move that turns out to be a 1 in 10,000 shot too. You can't call one move beautiful and not the other unless you just refuse to see machines as anything but inhuman monsters out to destroy us all.

People who think AI is not creative obviously never studied one of AlphaGo Zero's games, like many masters do today. The successor to the model that beat Lee Sedol invented moves that nobody ever thought of in 2,500 years. Think about that for a second. Nobody in 2,500 years of play came up with those moves. That's creativity and a kind of genius, whether others can see it or not.

The critics think our current generation of AI won't get anywhere because it's too flawed and they're totally wrong. We've got a lot more to squeeze out of current ideas. We're just getting started. Listen to the critics and the doomsayers too much and you might think AI will never overcome its issues. But at the beginning of any technological revolution the technology is messy and doesn't do everything we want the way we want. Doesn't matter if it's the steam engine or the car or the vacuum or the cell phone. The first vacuum cleaners were huge and needed horses to pull them. Not exactly a perfect device for cleaning your flat. Spoiler alert, they got better and now we have cordless vacuums that fit in our hands, all using the same basic principles as the giant horse-drawn version from a century before.

(Source: Science Museum Group Collection)

The first computer filled a room. The first cell phones were huge.

That's the way of all things. They start out with flaws. What the critics always miss is that engineers are working on it, finding ways to make it better, finding ways around the flaws, making it smaller, faster and lighter.

Machine learning is getting better at driving trucks and designing chips and creating art. AlphaFold produced a database of more than 200 million protein structure predictions, while decades of painstaking lab experiments had only worked out on the order of 200,000. AI already recognizes you in pictures on your phone and translates things for you easily while you're traveling and you don't even think about it anymore because it just works. It's not perfect yet but perfection is a pointless goal.

In the messy real world, AI will never be perfect. It's impossible. But it doesn't need to be. Take self-driving cars.

Imagine self-driving cars killing 250,000 people on the road every year.

Would you accept that?

The perfectionists wouldn't. They'd be screaming that AI is killing us all and it's too dangerous for the real world and must be stopped.

But what if I told you that humans kill 1.3 million people worldwide every year in driving accidents?

250,000 would mean a dramatic drop in the loss of life.

In that case self-driving cars wouldn't be perfect but they'd be a hell of a lot better than what we have now and they'd save a lot of lives. Humans are terrible drivers. We have to pay close attention every second we're in the car and we're just not good at it. We get distracted easily. We look away, get tired, look at our phones, get emotional and lose focus. Machines will do it better and we should let them, even if they're not flawless.
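If you want the arithmetic behind that thought experiment spelled out, here's a quick sketch. Both inputs come straight from the text above: the roughly 1.3 million road deaths a year with humans driving, and the hypothetical 250,000 with machines driving. The second number is a made-up scenario, not a forecast.

```python
HUMAN_DRIVER_DEATHS = 1_300_000  # annual worldwide figure quoted in the text
HYPOTHETICAL_AI_DEATHS = 250_000  # thought-experiment figure, not a prediction


def lives_saved(current, hypothetical):
    """Absolute drop in annual deaths."""
    return current - hypothetical


def percent_reduction(current, hypothetical):
    """Relative drop in annual deaths, as a percentage."""
    return 100.0 * (current - hypothetical) / current


if __name__ == "__main__":
    saved = lives_saved(HUMAN_DRIVER_DEATHS, HYPOTHETICAL_AI_DEATHS)
    drop = percent_reduction(HUMAN_DRIVER_DEATHS, HYPOTHETICAL_AI_DEATHS)
    print(f"{saved:,} fewer deaths a year, a {drop:.1f}% reduction")
```

That works out to 1,050,000 fewer deaths a year, a drop of about 81 percent, from a system that is still nowhere near perfect.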

To really let AI come into its own, we have to let go of perfect. Perfect works for computers doing repetitive calculations that have to be right every time, but being right in a complex world is a matter of degree and probability. It's a matter of picking the best choice out of a near infinite number of variables.

AI is just starting to get good. We're at the foot of the mountain, looking up at an exponential curve. It doesn't look like much from where we're standing but it will look very different very soon. Tim Urban covers it perfectly in this essay on AI and where it's all going.

But soon the curve will turn sharply upward, as AI feeds back on AI and accelerates progress faster and faster.

AI is coming out of the labs of Big Tech and into the real world. It can't be stopped and it won't be stopped no matter how people try.

And it's industrialization that will push these systems to get safer, smarter and more aligned with what we want them to do. It's self-driving car and truck companies and chat bot builders and medical system AI makers that will make it safer, better, smarter and faster. That's because companies will demand they get more reliable and have better guardrails so they're more consistent and manageable and predictable. Not perfect, but more consistent, much more often.

I told my 86-year-old grandmother I work in AI and she said, "What's that?"

I tried to explain for a minute and then I just showed her a self-driving car navigating the busy and chaotic back streets of China, something many human drivers couldn't do well.

She said, "Oh I'd never get in that."

And I said, "In 20 years, people will say the exact opposite: 'I'll never get in a car with a person driving. It's just too dangerous.'"