It's Time to Fight for Open Source Again

Open source is the core of 90% of the world's software, an unequivocal force for good, and yet it's under attack. Big companies and activists want to make open source AI illegal. They must be stopped.

Open source AI is under siege.

A loosely knit group of AI opponents want to crush it completely.

They want to make sure you never get to download a powerful model. If they win, you'll be forced to access models trapped behind a steel cage of corporate firewalls, the models safely castrated so you can't do anything they don't want you to do or think or say.

Instead of the open web, where anyone can publish anything without intermediaries, you'll have a filtered web where big models parrot official talking points only and keep you from "misinformation," a vaguely defined term that always means "whatever the folks in power don't like at the moment."

You see, you're too stupid to be trusted with powerful models. You might hurt yourself or others. After all, AI is just like a nuclear weapon, they tell us. It's too dangerous to let into the hands of mere peasants like you.

It's only safe when it's locked up and neutered and watered down. Only the trusted people can safeguard it for us, their eyes shining with divine light, their great wisdom preserving us and keeping AI from ever becoming so powerful that it rises up and destroys us in an all out man-versus-machine war.

These folks want to outlaw open source AI or make it impossible to do open research and share the model weights and data with you.

But we can't let them win under any circumstances.

We can't let them influence government policy. We can't let them crush open research or stop people from studying and training models in the open. We can't let them license labs and saddle companies with painful bureaucratic processes that take forever. We can't let them choke off the free and open sharing of innovation and the free exchange of ideas.

To understand why, you just need to understand a little about where open source came from, where it is today and why it's the most important software in the world by a massive margin.

The Ancient Battle Born Again

But first we need to roll back the clock a little to the 1990s. It's not the first time open source has been under attack. When I was a kid open source was a battleground.

Microsoft and SCO wanted to destroy Linux in the 1990s and early 2000s. Ballmer and Gates called Linux "communism" and "cancer". They told us it would destroy capitalism and intellectual property and put big companies out of business.

(Source: A Midjourney riff on the old Bill Gates as Borg theme)

I first started working with Linux in 1998 and when I went to look for jobs the recruiters would tell me all the jobs were in Solaris, a proprietary operating system that was dominant in servers and back offices at the time. I said "Solaris won't exist in ten years" and they would look at me like I had two heads. It still exists but it got dumped in a fire sale to Oracle (ten years later🙂 ) and almost nobody uses it anymore. It now has a 0.91% market share.

When I started at Red Hat, the open source pioneer, there were only 1400 people. We used to pitch Linux to old graybeard sys admins who would tell us "get out of here kid, nothing will ever displace UNIX." They told us only proprietary systems can be safe and secure and that Linux was a dangerous free-for-all hobbyist operating system that couldn't be trusted.

Nobody thinks that anymore and all those sys admins are gone, wrong and retired.

Does it sound familiar? It should. Because it's the same pitch proprietary AI companies and AI doomers are hawking now.

Only the trusted people can safeguard us from ourselves! Big corporations are the only ones who can be trusted because we're too stupid and AI itself is a menace to society unless carefully controlled by their divine hands!

Just like those old graybeard sys admins they'll be wrong. Open source AI will make the world safer and it will be one of the dominant forces for good in the world.

Frankly, it's bizarre that it's under attack. I thought the battle for open source was over forever. How could it not be? Open source won. It won massively.

Open source is the basis for 90% of the world's software today! Read that again slowly. 90% of the world's software.

According to an Octoverse study, "In 2022 alone, developers started 52 million new open source projects on GitHub—and developers across GitHub made more than 413 million contributions to open source projects."

Linux runs every major cloud, almost every supercomputer on the planet, all the AI model training labs (including the proprietary model makers who are telling you open source is bad), your smart phone, the router in your house that you connect to the internet with every day and so much more. That's what open software does. It proliferates in a virtuous cycle of safety and stability and usefulness.

Open source is a powerful idea that's shaped the modern world but it's largely invisible because it just works and most people don't have to think about it. It's just there, running everything, with quiet calm and stability.

Open source levels the playing field in life. It gives everyone the same building blocks. You get to use the same software as mega-corporations with 10s of billions of dollars in revenue. You get to use the same software as super powerful governments around the world. So do charities, small businesses just getting started, universities, grade schools, hobbyists, tinkerers and more.

Go ahead and grab Linux for whatever project you dream up. Grab one of the 10s of millions of other open source projects for everything from running a website, to training an AI model, to running a blog, or to power a Ham radio. You don't have to ask anyone's permission or pass a loyalty test or prove that you align to the people in power's view of the world. It's free and ready to use right now.

With that kind of reach and usefulness I never saw it as even remotely possible that someone would see open source as a bad thing or something that must be stopped ever again. Even Microsoft loves Linux now and it powers the majority of the Azure cloud business. They thought it was cancer and would destroy their business and instead it revolutionized the world and it's now the software that underpins Microsoft's business and everyone else's too. If they'd been successful they would have smashed their own future revenue through short-sightedness and lack of vision.

But I was wrong. Here we are again. The battle is not over. It's starting anew.

The people who want to destroy open source AI come from a loosely knit collection of gamers, breakers and legal moat makers. The founder of Inflection AI, owner of 22,000 H100 GPUs to train advanced AIs, wants to make high end open source AI work illegal. He and a few other top AI voices want to use regulatory capture, like licensing model makers, to make sure that you can never compete with their companies. The Ineffective Altruism movement (powered by such luminaries as Sam Bankman-Fried and his massive crypto fraud) has linked up with AI Doomsday cultists and they want to stop open source AI by forcing companies to keep AI locked up behind closed doors instead of releasing the weights and the datasets and the papers that define how it works.

They must be stopped.

(Sam Bankman-Fried and his massive crypto fraud)

That's because open software makes it easier for security experts and every day programmers to look at it closely and find its flaws. The top cryptographic algorithms in the world are open and widely known. Why? Surely, the algorithms that protect a country's top secrets are closed right? But they're not because more mathematicians can study open algorithms and understand their flaws. Algorithms developed in secret don't keep their secrets for long and hackers inevitably find and exploit their weaknesses. Now the government of the US is looking to create a new standard to resist the rise of quantum computers. They’re doing that in the open too.

The same is true of open source software. With so many people looking at it, it's more secure, more stable and more powerful. Right now it's open source AI that is leading the way on interpretability approaches, something that governments and safety advocates say that they want. It's leading the way to make models smaller, to expand them and teach them new knowledge or even to forget old knowledge.

The European Parliament's own study of open source AI said the following:

"The convergence of OSS and artificial intelligence (AI) is driving rapid advancements in a number of different sectors. This open source AI (OSS AI) approach comes with high innovation potential, in both the public and private sector, thanks to the capacity and uptake of individuals and organisations to freely reuse the software under open source licences...OSS AI can support companies to leverage the best innovations in models and platforms that have already been created, and hence focus on innovating their domain-specific expertise. In the public sector, OSS has the benefits of enhancing transparency, by opening the “black box”, and ultimately, citizen trust in public administration and decision-making."

And yet the EU AI Act is still not properly supporting open source AI. They didn't listen to their own experts because too often governments fall prey to fear mongering, doomsday scenarios and a hatred of Big Tech companies. It's short-sighted, dangerous and against the very spirit of the EU, which came to power to foster working together and openness after a horrific era of authoritarianism and totalitarianism in the Second World War and even into the 1970s and 80s in Germany and Spain.

This is a disaster for society, for innovation and for transparency. Openness is the foundation of modern democratic societies. Openness has led to some of the most powerful breakthroughs in history.

When it comes to software, when something is known to a wide group of people it means anyone can try their hand at fixing problems with those systems. I don't need a PhD to create a breakthrough in AI. I don't need to get hired by a few companies. When there are fewer gatekeepers, I can work on the problem if I have the will, the smarts and the inclination.

That's why a kid with no machine learning background was able to make a self driving car in his garage and a system that lets you turn 100s of models of cars into self-drivers. He taught himself how it worked. He didn't need to work at OpenAI to make it reality. He just had the smarts and the will. When society lowers the barriers for everyone it makes life better for everyone because everyone has a shot at changing the world.

You never know where innovation is going to come from but you do know that an open and level playing field is the best way to maximize the possibility that innovation will happen.

Well Intentioned Extremists and the Not So Well Meaning Ones Too

To be clear, most of these folks who want to kill open source AI are not bad people but they are straight up wrong and their ideas aren't just bad, they're dangerous. They’re well intentioned extremists.

In writing tropes, they’re “villains who have an overall goal which the heroes can appreciate in principle, but whose methods of pursuing said goal (such as mass murder) are problematic; despite any sympathy they may have with their cause, the heroes have no choice but to stop them.”

(Thanos’s “They call me a madman” speech)

They're a bit like badly trained AIs with the wrong training data. Their models of reality are wrong. They look up at the sky and see five clouds and think the whole sky is dark and stormy. They're missing the blue sky behind it and the amazing potential of AI to transform our world with a wave of intelligence everywhere.

For them, if AI is ever used for anything bad that means AI shouldn't be used or it should be highly restricted and regulated. That's just foolishness. We don't ban Photoshop because you can put a celebrity head on a naked body. We don't ban kitchen knives for the 99% of people who want to cut vegetables because someone stabs someone.

Instead of advocating for sound, sane legislation that treads the middle way, they're looking for a total ban, or horribly burdensome restrictions. If you make self-driving cars, which power machines that can kill someone, you should have a higher degree of interpretability and risk assessment. But to dump everything into the "high risk" bucket makes no sense whatsoever and that's exactly what happens when extremists get their hands on things or when politics come into play. That's how "social media" algorithms got classified as "high risk" in the new EU AI Act. Classifying a recommendation algorithm with a self driving car is how good intentions in legislation get all tangled up with politics. Less is more when it comes to crafting tight, clear legislation that works.

AI holds the amazing potential to transform medicine, make cars much safer to drive, and revolutionize materials science and science in general. It will make legal knowledge and coding skills more accessible. It will translate texts into hundreds of languages that are totally left out of the global conversation today.

And we're going to miss out on all of it, if these folks who want to kill open source AI have their way.

They want to send us hurtling backwards to an era of closed source software controlled by a small handful of companies who dominate every aspect of the software stack and how we interact with the world. Remember the Microsoft and Intel era where Windows servers were a hideously unstable mess and 95% of the desktop computers ran Windows? I do because I was a sys admin then and my job was to fix those error prone monstrosities of human potential sapping software. It's because of proprietary software's instability that I was able to make a great living but I don't miss it at all.

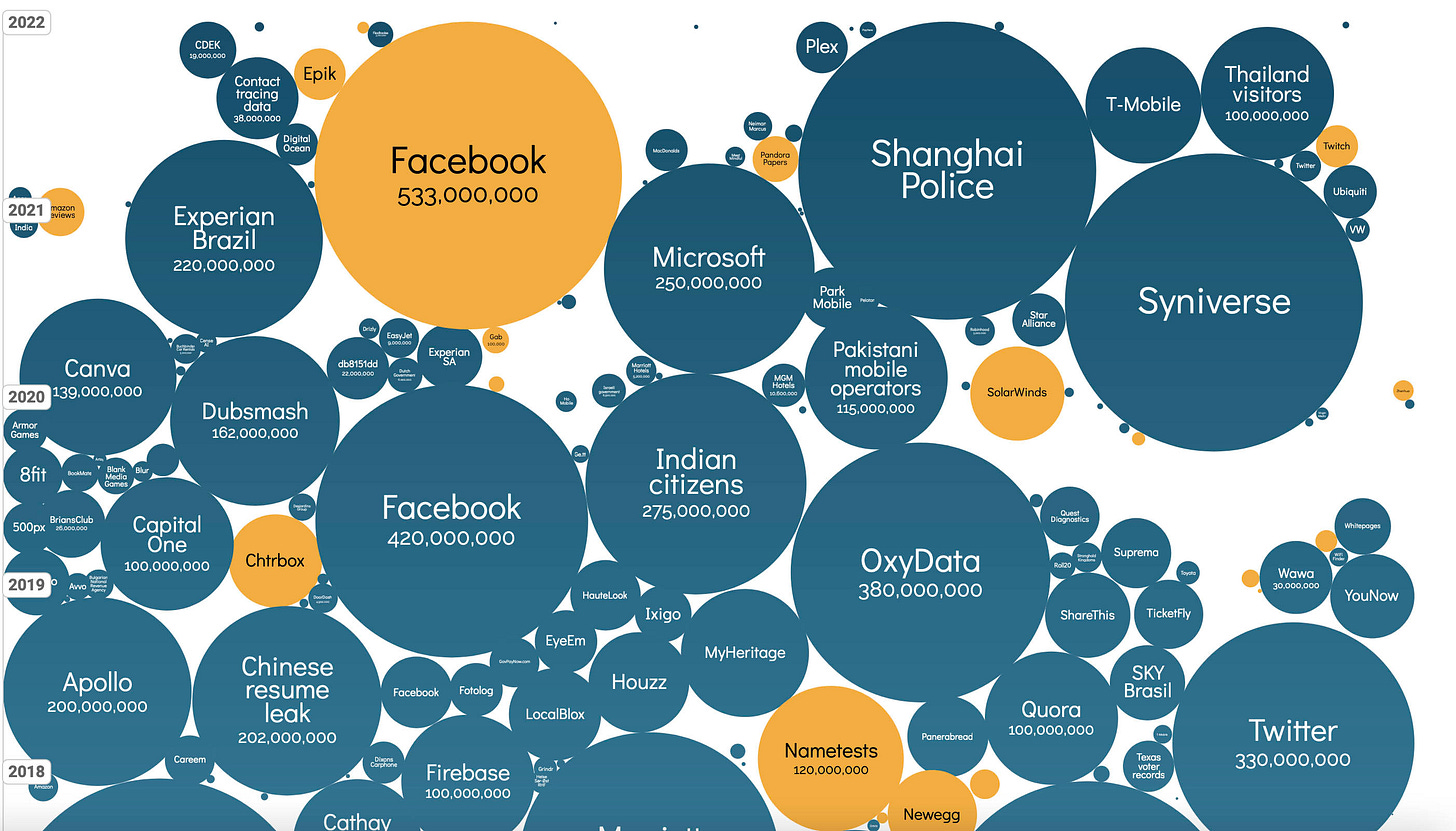

We love to imagine there's some magical company or group of people who can be trusted with protecting us all but they don't exist and once they're put into power they're hard to remove. Take Equifax. They are one of three companies charged with protecting our private credit card and personal data in the United States. They managed to leak the personal information of half of the United States.

They're still "trusted" and processing your private information today.

Nobody yanked them out of the system. Nobody replaced them. They're lodged in there for good. That's not trust, that is a travesty of trust that is invisible to people.

What most folks miss is that trust is a moving concept. It is not fixed.

An organization that's trusted one day can be unworthy of that trust tomorrow.

That's because an organization is not a block of stone and steel, it's made up of people and people change, retire, quit, die, get fired and move on. If I create the environmental protection agency, it might be trusted when I start it but if someone comes along and staffs it with people who don't care about environmental protection then the trust is shattered. The institution becomes a broken middle man, violating our trust.

These middle men can't protect us. Putting AI behind an API and a corporate firewall does not mean for a second that it cannot be exploited, stolen, hacked or otherwise exfiltrated. It doesn't mean that company can be trusted forever because the people behind it will change.

Here's a visualization of historical data breaches. Try to find a single major government agency or corporation that is NOT on that list. Putting AI in a walled garden so that mega-corps can "protect" us from ourselves is absurd. It has never worked and it will never work. It's called security through obscurity and every first year IT security pro knows that it's basically worthless as a security measure without tons of other layers of protection.

(Source: Information is Beautiful Data Breaches)

Now while there are some reasonable and well-meaning folks in the anti-open source AI movement they've also hitched their wagon to some people who are not reasonable at all.

Some are extremists whose opinions should be lumped in with the Unabomber manifesto.

The president at the Machine Intelligence Research Institute says there's a 95% chance AI will kill us all and all AI research must be stopped now before it's too late.

Where does this 95% chance number come from? Nowhere. It's completely made up.

What is the actual probability that AI will kill us all? Trick question! Any answer someone gives is just totally made up bullshit based on absolutely nothing more than someone's feelings.

There is no predictive formula, no statistical model, no actual rubric for getting to that number. It's just people putting their finger to the wind and saying "I think it feels like 20%." They're making up a number based on absolutely nothing more than a feeling and a personal conviction that it's a real threat.

He wants you to know that there's a "debate within the alignment community re[garding] whether the chance of AI killing literally everyone is more like 20% or 95%". That's just an appeal to the crowd. In reality this community is just a small echo chamber faction of the alignment community, which is itself a tiny fraction of AI researchers, which is itself a tiny fraction of people on Earth. And their numbers are no more real than a number you make up about the probability of aliens invading tomorrow or a coronal mass ejection from the sun killing us all.

The default belief of the AI-is-dangerous movement is that in the future it will become so dangerous that it will rise up and murder us or cause a catastrophe. Not just metaphorically. They believe in total extermination of human beings, literally. They use that word a lot, literally, because they really mean total extinction of the human race. In other words they watched Terminator too many times and now believe it's reality. Their basic idea is that intelligence and being nice don't always line up. Unless we can guarantee that AI will always do exactly what we want, we shouldn't take any chances. Better safe than sorry.

Unfortunately, everything good and worth having in life is a risk. You cannot make things "absolutely 100% safe." There is nothing in life that is guaranteed. You can sit inside all day like an agoraphobic who never leaves the house and you still aren't safe.

Life is a risk. It's a risk every time you get into a car. You have a 1 in 93 chance of dying in a car accident in your lifetime. You still get in a car. It's worth the risk because walking everywhere is great when everything is a few miles away but not so great if you want to go from New Jersey to New York.

AI is a risk worth taking. It will revolutionize health care, fun, entertainment, productivity and more. It will help us create new life saving drugs, and make driving safer, and stop more fraud and revolutionize learning while you chat with your AI to learn a new language or a new subject.

Take driving, for a great example. Why do we want AI to drive? Because humans are terrible drivers. We kill 1.35 million people on the road every year and injure 50 million more. If AI cuts that by half or down to a quarter that is 1 million people walking around and playing with their children and living their lives.
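As a rough back-of-the-envelope check on those numbers, here is a minimal sketch. It assumes the 1.35 million annual deaths quoted above; the function name and the two scenarios are purely illustrative, not a forecast of what AI drivers would actually achieve.

```python
# Annual road deaths worldwide, as quoted in the text above.
ANNUAL_ROAD_DEATHS = 1_350_000


def lives_saved(annual_deaths: int, remaining_fraction: float) -> int:
    """Deaths avoided per year if only `remaining_fraction` of today's deaths still occur."""
    return round(annual_deaths * (1 - remaining_fraction))


# Hypothetical scenarios: deaths cut by half, or cut down to a quarter.
for label, remaining in [("cut by half", 0.5), ("cut down to a quarter", 0.25)]:
    saved = lives_saved(ANNUAL_ROAD_DEATHS, remaining)
    print(f"{label}: {saved:,} fewer deaths per year")
```

Cutting deaths by half works out to 675,000 lives a year, and cutting them down to a quarter works out to a little over 1 million, which is where the round figure comes from.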

Again, everything good involves risk. Love is a risk. If you open yourself up to another person they may reject you or hurt you. Love is still worth that risk. Getting in a car is still worth the risk. Starting a company which has a huge probability of failure is still worth the risk. AI is still worth that risk because more intelligence is good.

Free societies are built on risk. They are built on some people trying and failing and some people trying and succeeding. Some people try to become incredible painters or musicians but most fail. But some break through and contribute paintings that make your heart melt or songs that you can't stop singing. Others set out to build a business and then find that they don't have the right combination of skills, perseverance, insight, luck, capital or any other of a million factors. Others succeed against all odds and somehow go from a small two person startup to a multi-billion dollar behemoth that employs tens of thousands of people.

That's life. It's a risk at every step and the beauty is in the risk. Nothing worth having comes without risk.

Free societies emphasize people's ability to make their own choices and to take on risk for themselves. Sometimes people make bad choices and they get punished. It's still worth having an open and free society.

Who wants to live in an authoritarian society? Few people on the planet would knowingly choose an authoritarian society to live in and for good reason. Free choice is essential for a good life. You want freedom of movement and freedom to make your own decisions, to fail or succeed on your own merits. You want your decisions to matter.

We should have the freedom to share research openly, to interact with AI the way we want and to not have that Promethean fire locked up and controlled by a few people who want to keep all the benefits for themselves and dole them out sparingly to the rest of us poor peasants.

More intelligence, more widely spread, is the best way to make this world safer and more abundant for as many people as possible.

When you think about it, what doesn't benefit from more intelligence?

More kids in school means we have smarter kids. More intelligence in our software means it reacts better to what we want and need faster.

Is anyone sitting around thinking I wish my supply chain was dumber? I wish cancer was harder to defeat. I wish drugs were harder to discover and new materials were harder to create. I wish it were harder to learn a language.

Nobody.

More intelligence is better nearly across the board. We want an intelligence revolution and intelligence in abundance.

If we let it proliferate and we don't kill it in the crib based on regulatory capture disguised as safety, intelligence will weave its way into every single aspect of our lives, making our economy stronger and faster, our lives longer and our systems more resilient and adaptable.

I don't want to miss out on that and you don't either. But that's exactly what will happen if we let paranoid people with circular reasoning make the policies that shape our future.

I fully support everyone's right to believe whatever they want to believe. But I don't support their right to make stupid and paranoid public policies for the rest of us. All beliefs are not created equal. Not every opinion deserves to be taken seriously. You are free to believe that you can jump out of a 100 story window and nothing will happen to you. But go give it a try and you will find that gravity has an undefeated record in your final seconds here on Earth.

You are free to be Chicken Little and think the sky is falling because of AI but it's not and I don't want Chicken Littles deciding policy for the rest of us who live in actual reality.

If you believe in an AI apocalypse that's fine but you don't get to make the rules for the rest of us, just as Ted Kaczynski doesn't get to shut down society and send us all back to our agrarian roots just because he thinks it's a grand idea.

Refuting the Unrefutable and Embracing the Great Risk of Life

Folks in the AI-will-kill-us-all crowd will tell you that you have to engage them on the merit of their argument and use their language. No, actually we don't. If someone is screaming that the sky is made of ice cream and carrots are poison I don't have to spend a single nanosecond engaging that argument on its merits because there are none.

That's the thinking of cults.

In the Financial Times, in a review of the book "Cultish: The Language of Fanaticism", Nilanjana Roy writes "linguist and journalist Amanda Montell makes the persuasive case that it is language, far more than esoteric brainwashing techniques, that helps cults to build a sense of unshakeable community....they use 'insider' language to create a powerful sense of belonging."

Using their language just means you've fallen into their trap. Forget them.

The arguments for the AI apocalypse fall squarely into the Münchhausen trilemma of assuming a point that cannot be refuted (because it hasn't or won't happen), circular reasoning (aka looping back to those unprovable, fake foundational beliefs) and a regressive chain of bullshit.

(Source: Wikimedia Commons Baron Münchhausen pulls himself out by his own hair)

The biggest problem in most of their arguments is the idea that we can fix problems in isolation. We'll just come up with a magical solution and guarantee with 100% certainty that AGI and AI is 100% safe and then we'll publish that solution, everyone in the world will agree to it happily and nod their heads in 100% agreement and we'll move forward, safely assured that we've eliminated any and all risk of the future!

If that sounds absurd that's because it is absurd.

It's not how life works.

To fix problems we have to put AI into the real world. That's how every technology works, throughout all time. And the more openly we do that the more people we have working on the problem. It's open source researchers who are probing the limits and possibilities of models. We need more people looking at these digital minds, not less.

When OpenAI put ChatGPT onto the Internet, they immediately faced exploits, hacks, attacks, and social media pundits who gleefully pointed out how stupid it was for making up answers to questions confidently.

But what they all missed is that they were a part of the free, crowdsourced product testing and QA team for ChatGPT.

With every screw up immediately posted on social media, OpenAI was watching and using that feedback to make the model smarter and to build better guardrail code around it. They couldn't have done any of that behind closed doors. There's just no way to think up all the ways that a technology can go wrong. Until we put technology out into the real world, we can't make it better. It's through its interaction with people and places and things that we figure it out.

It's also true that OpenAI's proprietary models were built on the back of open source. They couldn't have made ChatGPT and GPT-4 without it.

The training software they used to train the model is open source. The algorithms they used to create that model, aka the Transformer, were published openly by Google researchers. They use distributed infrastructure software like Kubernetes to run their systems. Ray, the open source inference and training engine from the team behind Anyscale, serves GPT-4 to you every day so it can answer questions with supernatural ease. The diffusion decoder in DALL-E 3 was trained on the latent space learned by the VAE trained by Robin Rombach et al, the team behind the open source Stable Diffusion model.

They could not run without open source.

And they can't make their software safer without exposing it to the real world.

It's real life feedback and openness that makes it safer faster.

You can hammer away at your chat bot in private for a decade and never come close to the live feedback that OpenAI got for ChatGPT. That's because people are endlessly creative. They're amazing at getting around rules, finding exploits, and dreaming up ways to bend something to their will. I played Elden Ring when it came out and if you want to know how to quickly exploit the game for 100,000 runes and level up super fast about 30 minutes into playing it, there's a YouTube for that. How about an exploit for 2 million runes in an hour? That's what people do with games, political systems, economic systems, everyday life and office politics. If there's a loophole, someone will find it.

Anyone thinking we will make anything 100% safe is just a magical thinker. They knock on wood and think it actually changes the outcome of life. I'm sorry to tell you that it doesn't even though we all do it. I wish it did, but alas, it does not. Again, that's not how life works. It can never work that way and we can't build solutions on castles made of sand. We can't build real, working solutions on faulty premises.

If you put one million of the smartest, best and most creative hackers and thieves in a room, you still wouldn't come up with all the ways that people will figure out to abuse and misuse a system. Even worse, the problems we imagine are not the ones that actually happen usually.

In this story in Fortune, OpenAI said exactly that. “[Their] biggest fear was that people would use GPT-3 to generate political disinformation. But that fear proved unfounded; instead, [their CTO, Mira Murati] says, the most prevalent malicious use was people churning out advertising spam."

Behind closed doors, they focused on imaginary political disinformation and it proved a waste of time. Instead it was just spammers looking to crank out more garbage posts to sell more crap and they couldn't know that beforehand. As Murati said "You cannot build AGI by just staying in the lab. Shipping products, she says, is the only way to discover how people want to use—and misuse—technology."

Not only could they not figure out how the tech might get misused, they didn't even know how people would use the technology positively either. They had no idea people wanted to use it to write programs, until they noticed people coding with it and that only came from real world experience too.

This idea that we can imagine every problem before it happens is a bizarre byproduct of a big drop in our risk tolerance as a society. It started in the 1800s but it's accelerated tremendously over the last few decades. That's because as we've dramatically driven down poverty, made incredible strides in infant mortality and gotten richer and more economically stable over the last 150 years, it's paradoxically made us more risk averse. When you have it all you don't want it to go away, so you start playing not to lose instead of to win, which invariably causes you to lose.

By every major measure, today is the greatest time to be alive in the entire history of the world. We’re safer, have much better medicine, live much longer and richer across the board, no matter what the newspapers tell you. But that's had the effect of making us much more paranoid about risks real and imaginary, to the point of total delusion.

You cannot make AI perfectly safe. You can't make sure nothing will ever go wrong in life. If we don't let go of perfection fast we'll miss out on less-than-perfect systems that are incredibly beneficial and amazing for the world.

As a society, we've gotten so wrapped up in techno panic about AI that we can't see all the good we'll miss out on if people try to crush AI in its crib out of blind, animal-like fear.

If you're out there right now, working in AI or government policy or you invest in AI or do open research, it's time to speak up.

These anti-AI and anti-open source AI folks are not afraid to get out there with simple, lizard-brain fear messages that resonate with regular folks who don't understand that they're a tiny minority of people who just happen to be very, very, very loud and very persistent.

They're fanatics in the Churchill sense of the word: "A fanatic is one who can't change his mind and won't change the subject."

Don't let them win.

You're probably reading this on something that's built on open source, whether that's your phone or your laptop. It doesn't matter if your phone is an Android phone or an iPhone, there is some open source in there. The kernels of both macOS and iOS are open source and Android has a Linux kernel in it. Yes they are wrapped in proprietary code but that's the beauty of open source. If you're a big, rich tech company, you can build flashy proprietary things on top of it.

Open is everything. Open software is the most important software now and it will be the most important software in the future if we're smart and make sound, sane policies, instead of paranoid, insane policies.

It's time to fight for open source again.

It's time to fight for open societies. It's time to fight for open software and the free exchange of ideas.

Trust in open. Never trust in closed. Throughout all history, nothing good has ever come from extremist policies. When we let extremists come to power we get disaster. AI fear is just a con job, designed to fool you. Don't give in to it. If you're out there, take the leap of trust and fight for open.

The future depends on it.

And if you're reading this, you are the revolution.

It's all up to you.

https://openai.com/blog/openai-announces-leadership-transition

Very impassioned plea here! I’m curious to understand how you view a couple elements though (that never seem to get discussed much)

1) An ML model is an opaque set of weights derived from training code and training data. But yet very few of the open source models release the training code/data. An ML model feels like a free binary-only compiler: You can use it for whatever you want, but you can’t make it yourself from scratch. I’m curious which aspects of open source you care about?

2) There are a lot of models being released with open weights not actually under open source. Llama et al come to mind. If you’re making an appeal to Linux and Red Hat, I’m sure you must be more familiar with the variants of open source licensing than I am. I know there are some open source advocates very upset at the dilution and cooptation of “open source”. So I am curious if you are arguing for open weights, or actual true open source above?