The Gamers, the Breakers and the Legal Moat Makers of AI

Why AI Doomers Must be Stopped, Why Open Source is More Essential than Ever, and Why Some AI Makers Hope to Create a Licensing Moat That Locks Out Competition and Grants Them the Divine Right to Rule Forever

AI will destroy the world! It's coming for everyone! It's coming for all our jobs!

The great AI jobs apocalypse will sweep in like a terrifying tidal wave destroying everything and everyone in its wake! None will be spared! We'll all be living on bread lines in the stacks, eking out a desperate existence with VR as our only escape!

Or somehow AI will grow self-aware and escape, doing massive damage and deciding that petty humans must be destroyed now!

And, of course, AI will do tremendous harm in all our lives and we've got to do something right now before it's too late!

There's just one problem.

It's all absurd.

Even worse, you're being taken for a ride. It's a total snow job.

The people selling you this story benefit from the story. The newspapers get what they want: fear and conflict. Nothing sells ads like fear. People don't want happy stories. They want to be afraid and the news delivers. The more fear, the more clicks. Simple equation as old as time.

It's not that there are no positive stories. Check out Uplifting Stories on Reddit. It's full of puppy dogs saved, children beating cancer and salt of the Earth people doing noble things selflessly.

And if you're like everyone else, you read it for five minutes max and then go right back to reading scary stories about war, political infighting, genocide, rape, murder and inequality at every level.

It's just part of our nature.

That's why Yudkowsky's idiotic story about shutting down all AI research and bombing datacenters ran in Time, instead of getting lumped in with the Unabomber Manifesto where it belongs. Doomsayers get money for their foundations, and they get speaking engagements and front page headlines because fear and extremism sell while nuance and sound, rational thinking do not. Crazy ideas masquerading as sound rational thinking sell too, which is why Yudkowsky and his movement have co-opted the language of critical thinking while doing little to no actual critical thinking.

But even worse than wild-eyed extremists getting front page news are the doomsayers who are creating AI themselves. On one hand they stack up 10,000 GPUs and on the other they scream that AI will destroy us all. If they're lucky, they'll get their deepest wish. They'll hoodwink politicians into writing up legislation that gives them a regulatory monopoly for decades and destroys any and all competition.

They'll also destroy open source AI. It doesn't matter that open source runs every single application that matters in the world today. It doesn't matter that Linux runs every single cloud and datacenter and every home router, not to mention nuclear submarines and supercomputers and your smartphone. Somehow open source is now dangerous. You now have people actually protesting outside Meta's office for open sourcing LLaMA and Llama 2 in a bizarre attempt to kill open source AI. All these folks want to outlaw open source just like Microsoft and SCO wanted to destroy Linux in the 1990s and early 2000s. Ballmer and Gates called Linux "communism" and "cancer".

Imagine if Microsoft had succeeded. They would have destroyed their own future business. Linux now runs the majority of workloads on the Azure cloud. It was the ultimate in shortsighted thinking, and trying to stop open source AI is the same kind of misguided and shortsighted thinking.

We should fight these horrible ideas with every breath we have in our bodies. Open source is the best chance to prevent any actual misuse of AI. We need to have AI everywhere, in lots of people's hands, instead of in the hands of the few. Good models check bad models. That was the founding principle of OpenAI, before they switched to a closed source approach. It's still the right approach.

Harms, Harms Everywhere

Today, a loud subset of people worry endlessly about the downside of technology and completely ignore the good parts. But does it make any sense?

Linux runs the entire cloud and supercomputers but it's also used to launch botnet DDoS attacks and to run malware. Does that mean we should outlaw Linux because a few bad people do some bad things with it? Today, the answer of many doomers is yes, absolutely.

This is totally and completely absurd. It can't be taken seriously.

It's like saying we have to outlaw Photoshop because someone can put a celebrity head on a naked body with it. Never mind that 99.99% of people do honest work with it. It's also like saying we have to outlaw kitchen knives because someone might stab someone with one. It doesn't seem to matter that 99.99% of people cut vegetables with them.

None of this makes any sense and yet that's how fear works. It's irrational. It's a mental and emotional virus that spreads like a wildfire. It comes on like a storm and makes normally rational people temporarily insane.

Yes, AI will do some bad things but it will do so much good too. We have laws that punish people for doing bad things. That's how society works. It won't suddenly become legal to murder people, make super viruses, harass people, stalk people or pursue any other use case you can think of for evil AI. Those things are already illegal, bad people already do them, and we already have the rules in place to punish them. We don't need sweeping new laws. We need to enforce existing laws or create light-touch, simple laws that cover the grey areas that emerge from using AI for evil.

But today too many people have lost touch with reality and how good, strong, sound, clear laws are made and enforced. Today the Precautionary Principle dominates many people's thinking. If I can't understand it, ban it. If I can imagine a bunch of things going wrong we should stop it just to be safe. That's the thinking behind the vague, unscientific and progress destroying Precautionary Principle.

The problem with this approach of banning first and asking questions later is that we miss out on all the good aspects of a technology. If the early doomsayers had managed to crush Linux we'd never have had the cloud, or open source web servers like Apache, or WordPress, which runs over 40% of the web. We wouldn't have Android phones or cheap and ubiquitous home routers. Supercomputers would still be stuck in first gear.

It's easy to see all the bad things that will happen but it's hard to see the good things. Most people are terrible at it. That's because it's hard. You can't see the cloud before the cloud exists. You can't explain a web developer job to an 18th century farmer because it's built on the back of dozens of inventions in between, like electricity running through wires, information theory, the internet, browsers, computers and more. But for those technologies to exist you have to let the earlier technologies exist and proliferate.

The motivations of today's corporate doomsayers are the same as the doomsayers of the past.

They want to create an AT&T style regulatory monopoly, where they have total control of essential infrastructure and everyone else is forbidden by law from competing with them. That's why they are out there calling for licensing of powerful models and outlawing advanced open source AI work, which are terrible ideas that will crush innovation and leave us stuck with crappy products built by a few big players who have zero reason to innovate because they managed to outlaw all the competition.

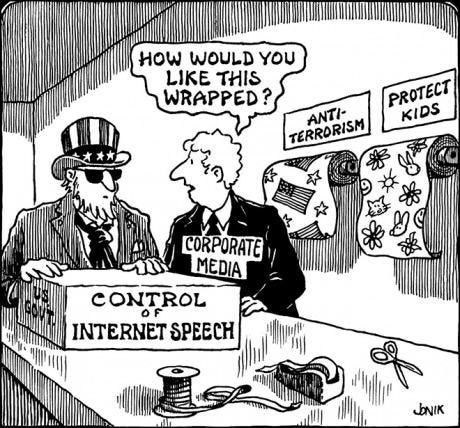

It reminds me of the old John Jonik political cartoon about early attempts to crush free speech on the Internet.

This is a classic strategy to sell regulation. Fear sells. It sells newspapers and it sells government overreach with ease. So does protecting those innocent kids who can't possibly protect themselves. Won't you please think of the children?

This cartoon is from the 1990s and yet politicians are still using the same old playbook. They're trying to pass a mass surveillance bill in the EU to destroy WhatsApp and Signal encryption and force Big Tech to scan all your messages, despite 100% of encryption and security experts standing against it as a crappy hack that will destroy privacy.

Ballmer and Gates tried to sell the fear of the destruction of their business model by lumping open source in with totalitarianism and disease. Those were great fear triggers for the time. America was still fresh from the fight against communism, and protecting the gold rush of American capitalism was standard operating procedure for politicians and corporate lobbyists. With their word choice, they were appealing to the lizard brain of politicians and the public. Today you need a slightly different form of fear to get folks fired up.

Today, we've got AI as existential risk, or the destruction of government itself, or the death of all jobs, or the old favorite "terrorists!" Hey, why fix it if it ain't broke? Mustafa Suleyman, co-founder of Inflection AI, said AI manipulated viruses could cause the next pandemic!

Meanwhile, if they're successful with this con job, we'll all miss the benefits of AI because these fools managed to crush true, bottom up innovation. This is the real disaster, if we let this absurd narrative continue.

You can think of me as a light in the dark, waking you up from mass delusion. I'm shaking you and letting you know it was all a dream and that it's time to snap the hell out of it. AI will be awesome. Sure it will do some bad things too but it's an amazing technology and we don't need to worry about sci-fi stories of AI rising up and killing us all. It's basically nonsense. There is no basis for it other than people's wild imaginations. Ask the people who worry that AI will "escape" or "go rogue" how that will happen and they have no answer that has any basis in actual engineering and the real world.

The Greatest Game

There are basically three kinds of people in any mass delusion:

Reasonable folks

True believers, aka the Breakers

Gamers

Let's get the reasonable people out of the way first. There are some serious people who are seriously worried. But honestly, they are few and far between. These are folks like Geoffrey Hinton, who just started thinking about the problems with AI. He's getting up to speed on these potential problems, like the possibility of mass job disruption or AI generated disinformation. He is well meaning, smart and well intentioned.

The problem is, he just hasn't been thinking about it all that long and his opinion (which is all that it is) tends to suffer from that short time looking at it.

It's not enough to be smart or well-intentioned.

You have to look at this kind of problem with experience, watching how technology plays out in society historically and understanding that pattern. It's a different kind of thinking and just because you are good at one kind of thinking doesn't mean you're skilled at another kind of thinking. There are as many as nine different kinds of intelligence, everything from advanced reasoning, to spatial and visual intelligence, to mathematical intelligence, to emotional intelligence. I'm highly skilled at writing and communicating my ideas after honing the skill for decades writing every single day, but that doesn't mean I can design a rocket or do advanced math or have much to offer on cutting edge economic theory.

And don't make the mistake of thinking that just because someone spent their life in AI that they know something about how AI might affect society. Studying the diffusion of technology into society is a totally different skillset (sociology and futurism) and it's entirely unrelated. That doesn't mean that someone smart can't contribute a novel opinion about a subject outside their expertise but I haven't seen a novel opinion from any of the reasonable folks yet.

Most reasonable people's thinking follows a clear arc of thought development over time. At first it seems terribly obvious that AI job disruption will hit fast and hard and that AI can do crazy things. That's how I saw it when I first started thinking about it more than 25 years ago. I wrote the first drafts of a story called In the Cracks of the Machine in 1995 about a robot jobs apocalypse, starting in the fast food industry. So it's safe to say I've been thinking about this for more than 25 years, not six months.

Eventually, that perspective (usually) balances out, if you're diligent about it and look at it more and more closely and you're not a personality prone to the cognitive disorder of catastrophizing everything. Yes some jobs will disappear, but technology tends to create an explosion of new jobs that are hard to foresee, because they're built on the back of new technologies that don't exist yet. Again, you can't explain what a web programmer is to an 18th century farmer because that job is built on the back of dozens of inventions, like alternating current, wires, computers, silicon chips, mass communications, satellites, the Internet, web browsers.

The idea that AI will suddenly become sentient starts to look more and more ridiculous the more you turn it over in your mind. It's nothing more than us anthropomorphizing the technology and thinking of it as a person with a self-contained body and personhood. Take this classic scene of the Puppetmaster from Ghost in the Shell, an advanced AI (who swears it's not an AI but a living, thinking entity born in the sea of information -- but it's AI). It wakes up and demands political asylum as a sentient life form.

As a sci-fi writer I love this scene. It's amazing.

It's also complete nonsense.

How does the Puppetmaster work? Who knows! At best it's hand-wavey, magical techno bullshit, a software system that somehow freely floated together out of the "sea of information" aka the internet, ex nihilo, aka out of nothing for no reason at all. It just came together spontaneously, runs on some unknown computer somewhere in the ether (maybe on stolen cloud credits? who the hell knows), and then downloads into a robot body and demands political asylum.

How would today's AI escape? It's not a person or magical free floating effluvium of compute. It has to run somewhere. Where does it run? Usually on a very expensive cluster of $40,000 GPUs. A typical high end AI like GPT-4 takes an 8 to 16 way GPU cluster, connected by high performance InfiniBand. It's backed by multiple databases and other software that keeps it running, like Anyscale's Ray framework for distributed training and inference, and Kubernetes (K8s). As a former sys admin, I can tell you this kind of software is a pain in the ass to set up and maintain and it's not easy to keep running. So where would GPT-4 "escape to" exactly? Another 16 way enterprise GPU cluster with supercomputer networking and multiple databases and K8s?

A reasonable person might know everything there is to know about the mathematics of neural nets and not have a damn clue about the IT infrastructure to run it.

To recap, just because someone is smart and well respected in one field does not mean their opinion means a damn thing when it comes to how tech affects society. They get no extra credit for having worked in AI. Every opinion must stand on its own merit.

So let's turn to the next group of folks: the true believers.

The true believers are the well intentioned extremists. These are folks who really believe what they're saying. In another time, they would be the people leading the Christian crusades to kill all the Muslims or vice versa, leading the counter attack to kill all the Christian infidels. These are the folks who really believe that they are the only ones who can see the truth, that a great evil is at work, personified in other people who must be stopped at any and all costs. They see the world in black and white, good and evil with no gray area. You're either with them or against them. They want to break the system and reform it with who knows what else. Usually there is no alternative in mind, just the desire to destroy and stop progress in its tracks.

If you haven't figured it out, these people are fucking dangerous.

Black and white thinking is for children. "It's my toy and she can't have it. I'll kill you. I'll kill you." This is not thinking for adults. This is for children in adult costume bodies.

While they scream about the horrors of great evil and the coming end of the world or a societal apocalypse they are the ones who tend to cause these things in history by whipping people into a frenzy and marching over borders to kill other people because God or some mystical knowledge told them to do it.

When your philosophy lines up with the strategies of the Unabomber or Bin Laden, you might want to rethink that strategy.

Frankly, nobody should care what these folks think and they shouldn't be given any air time whatsoever. But, of course, they'll continue to get it, because nothing drives clicks and sells subscriptions and tickets like fear and conflict.

So let's get on to the real problem, the gamers, because eventually everyone sees that folks foaming at the mouth (the true believers/breakers) are not people we should be taking seriously. Luckily, after they sell enough magazines and papers and drive enough clicks, the media moves on to some other thing to make us afraid.

The gamers are the ones talking out of both sides of their mouths. They don't really believe what they're saying. They're just looking to get an edge and to take advantage of other people's ignorance. Their philosophy is never let a good mass delusion or crisis go to waste.

The ones talking out of both sides of their mouth have two main objectives:

Look good in the eyes of regulators after a decade of regulators souring on Big Tech.

Create a regulatory moat that locks in their lead and destroys all competition for decades.

Ironically, these folks are some of the folks building AI. How is that even possible, you might wonder? To understand that you just have to understand a little of the history of modern technology and how big companies have used political advantage in the past.

Big Tech, AT&T and the Battle for Tomorrow

In the 1990s big tech was the darling of the world. Politicians were tripping over themselves to shower love on tech companies as the future of jobs and the future of American innovation. Think of the Internet craze in the 1990s and Bill Clinton and every politician on both sides of the aisle courting big tech, mugging with tech execs with grand politician smiles.

But along the way, tech became Big Tech. These mega-companies got real powerful in the old school, British East India Company kind of way. They could make their own rules across borders and dominate entire markets with ease, while moving their headquarters to whoever gave them the best tax breaks.

(Source: Mysorean Army in action against the British East India Company troops at Cuddalore, south-eastern India. By Richard Simkin - Anne S. K. Brown Military Collection, Brown University)

And if there's one thing state power doesn't like, it's rival powers.

Kings and queens don't like other kings and queens in their backyard.

Politicians on both sides of the aisle now hate Big Tech, but not for the reasons you think. It has nothing to do with protecting us little people, or creating innovation, or creating competition. That's just the cover story. The truth is that Big Tech is a rival to their own power. If they want to spy on everyone and Big Tech rolls out unbreakable encryption to the masses, then that's a problem. If they want to censor a story, but Big Tech lets that story run wild on social media, that's a problem to them. They want to shut it down and crush it. History doesn't repeat but it sure does rhyme and this story rhymes really well with the history of government versus company power struggles.

The British East India Company could mint money, write laws and contracts, and field its own private army that was bigger than the British army. The Company conquered and ran India for 100 years, not the British government. It was a cyberpunk megacorporation about 200 years early. Big Tech isn't quite that powerful in the old school hard power kind of way (armies and guns) but they have massive international reach, immense soft power and they can make their own rules wherever they go.

And just as the British East India Company eventually scared the shit out of the British empire as a rival to its power, so governments today have begun to fear Big Tech as a rival to their power. Eventually the British empire nationalized the company and made that army the national army in order to break it up.

In the modern world it's a similar story. In the US we get massive lawsuits against Big Tech and attempts by regulators to break them up. In China they smashed their tech industry so hard out of fear that they shaved over a trillion dollars off their market cap. In the EU they passed the Digital Services Act and the Digital Markets Act to try to crush Big Tech with big fines based on vague and nebulous disinformation or liability rules, which are always by nature unclear and amount to "we are the party in power right now and we don't like what you said so it's disinformation." The law is a big spiked club, targeted at 5 or 6 companies, to make sure they can force those companies to only share the messages they want you to hear.

But with AI Big Tech smelled an opportunity.

They heard the loudest people out there screaming about the dangers of AI and how it can cause harms, and they got right in there with a contrite expression, saying: we can help stop those evil harms and stop the jobs apocalypse. We are the only ones who can be trusted to save everyone from the danger. We are the only ones with the resources to stop those wild and unruly open source models at the cowboy frontier that could cause bad people to do bad things.

That's why they're telling politicians to create a licensing regime. They know the compliance burden takes an army of lawyers and little paper pushers to navigate that bureaucratic death maze, and that it will make it impossible for smaller companies or open source projects to get a license. They want to create a government granted AT&T style monopoly where nobody else can enter the market. Politicians love a three letter agency too so they can't wait to create one to help manage this "threat".

What will happen is obvious. Little companies will sit on their hands for months, stagnating, waiting for a license that will never come because the three letter agency will inevitably be understaffed with people who have no idea what the hell AI is anyway. Meanwhile, Big Tech will stroll in through the accelerated application door by paying an accelerated application fee that will prove a pittance to them and a big burden to small firms. Their big army of Armani suit lawyers with slicked back hair will breeze through the application process or donate a little money to a campaign to grease the wheels when any friction pops up and to get exceptions built into all the rules.

Meanwhile, we'll all suffer.

We'll suffer while they create crappy, watered down products to "protect" us from ourselves instead of those companies having to fight it out and scrap with smaller companies looking to eat their lunch with smaller, more efficient models built on new architectures.

It's a story as old as time. Kings and queens have been using it forever. We're special. We have the mandate of heaven. We are designated by God to have the divine right to rule and we're the only ones who can protect you little people from the horrors that await you out there. Trust us.

Don't believe it.

There are no trusted, special people who should have control of what may prove to be one of the most powerful technologies in history. They are not more insightful, more moral or more capable. They're people, which means flawed, corrupt, stupid and imperfect, irrational, and biased just like everyone else on the planet, including you and me.

Believe in open. Open is what makes democracies work and what made the American economy the most powerful economy in history.

Linux is Linux because it was open. It would not run everything from hobbyist ham radios to supercomputers if it was not open and easily accessible and easy to build on. Open source means universities and schools and community groups and individuals have access to the same robust technology as governments and big corporations. That's been a tremendous boost to the world economy and to personal freedom and to security.

And don't believe the people who focus all their attention on the flaws of a technology. Pessimists make great headlines and sound smart but it's optimists and future focused people who change the world for the better. It was the optimist John Snow who saw cholera as something defeatable instead of inevitable and who eventually traced it to water supplies and figured out how to stop it, not the people who said well this is just the way it is, people die and that's life.

And don't believe the people who tell you about all the harms it will cause and all the evil it will do. They are looking up at the sky and seeing the five clouds but missing the sun and the blue sky behind them.

Everything in the world can be used for good or for evil and everything in between. We do not stop Linux because it can do a few bad things. We don't force Photoshop off the market because someone can cut and paste a celebrity head on a naked body.

Too many people have completely lost perspective in society. They want magical technology that can never do anything bad ever. This is absurd. Nothing in the world works this way. Everything exists on a spectrum. A gun might be closer to the side of evil but it can also be used to hunt to feed your family or defend yourself from a robbery. A lamp might be closer to the side of good, but I can still hit you over the head with it.

Worse, this kind of thinking could cause us to miss out on all the benefits because we got taken for a ride by folks who are playing the oldest game in the world. We're the only ones who can protect you so give us all the power.

Don't believe them. Ever.

Kings and queens and the old church have been running this scam since the dark ages. It was bullshit then and it's bullshit now.

Honestly, if I could ban everyone looking to ban open source software from using open source software themselves I would do it. Take away Linux from their training cluster. No PyTorch for them. No Kubernetes. No cloud. No distributed training frameworks. No NoSQL databases. Good luck training their expensive proprietary models then. They'd have to start all over from scratch because open source powers the world, including their fucking models.

We should fight these terrible ideas with every breath we have in our bodies.

Open is the nature of successful societies.

So is competition.

So is free enterprise and innovation.

So is the free exchange of ideas.

That hasn't changed because of AI, no matter what the folks who watched too much sci-fi think.

It's probably even more true now and more essential than ever.

What won't benefit from getting more intelligent? Is anyone sitting around and saying I wish drug discovery was harder or I wish supply chains were dumber? More intelligence is always better.

So stand on the side of open. Stand on the side of innovation. Stand on the side of growth and a healthy society. Stand on the side of intelligence.

Believe in tomorrow and forget about the people who keep trying to turn back the clock to yesterday.

Long read - but as usual totally worth it.

Funnily enough, your writing complements very well a thought I had recently - and that you also may find interesting.

I get all your points (and love the passion you have for all things open and free ;-)). What I can add is a more scientific, less passionate look at the power mechanics you describe so well: after some reflection, I see a lot of analogy between the attempt to "weaponize" legislation and regulation against (but not limited to) open source and... the data science process.

I really racked my brain over how we can be so eager in Europe to shoot ourselves in the kneecaps (again), now in matters of AI... and the surprising answer is "overfitting". The effects you describe so well combine to produce a legislative framework whose output shows all the signs of this phenomenon:

- A public that doesn't think in spectrums anymore, but refuses any innovation if the slightest adverse effect MAY be attached to it

- A bureaucracy grown out of proportion, jumping too willingly on the occasion to make itself ever more indispensable by creating ever more Byzantine structures that "safeguard" the status quo.

- BigTech and BigIndustry looking happily on while the fresh air of competition is suffocated under the weight of the resulting "overlegislation", allowing them to permanently exploit and extend their sinecures.

But, as you say, all is not gloom: seeing this effect as a form of overfitting provides a way to approach things more scientifically, to describe and to measure them and also communicate about (a) the measurable negative consequences and (b) possible counter measures.

In this analogy, our socio-legal framework is "the model". And politicians and lawmakers are the data scientists that SHOULD aim to optimize the model performance.

But it looks (especially in Europe) like they have fallen into the overfitting trap, trying to secure EVERY point on the graph with their model. And this has the well-known adverse effects of overfitting:

(a) It's making the model ever more complex, less manageable, more opaque - the motivation of the "power caste" is clear: as said above, the increasing complexity is the perfect justification for a self-reinforcing increase of the very bureaucracy that (co-)founded the problem in the first place (but there are, of course, other interests at play as well).

(b) More importantly: the model stops generalizing! This is THE key point - and it explains why those "operating the model" have no interest in innovation: new data points risk exposing the fragility of their creation. A creation that supposedly explains all there is... but not what will be. Really a classical case of "training set" vs. "validation set" - with the validation set representing the "noise" of innovation and creative ideas etc., exposing the need for the model not to be too specific and complicated, so that it can generalize also to these new data points. And so those in power don't like new data points, as these question their model.
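For readers less familiar with the term, the training-versus-validation gap can be sketched in a few lines of plain Python. Everything here is an illustrative assumption (the synthetic data, the nearest-neighbour model, and all function names), not a measurement of any real legal system. A model that insists on hitting every training point exactly (k = 1) scores a perfect zero on the data it has seen, yet still makes errors on fresh points, and it typically does worse there than a smoother model that tolerates some error (k = 10).

```python
import random

def make_data(n, rng):
    """Noisy samples of a simple underlying trend: y = 2x + noise."""
    data = []
    for _ in range(n):
        x = rng.uniform(0.0, 10.0)
        y = 2.0 * x + rng.gauss(0.0, 3.0)
        data.append((x, y))
    return data

def knn_predict(train, x, k):
    """Predict y at x by averaging the k training points closest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def mse(train, points, k):
    """Mean squared error of the k-nearest-neighbour model on `points`."""
    errors = [(knn_predict(train, x, k) - y) ** 2 for x, y in points]
    return sum(errors) / len(errors)

rng = random.Random(0)          # fixed seed so runs are repeatable
train = make_data(40, rng)      # the points the model is fitted to
validation = make_data(40, rng) # "new data points" it has never seen

for k in (1, 10):
    print(f"k={k:2d}  train MSE={mse(train, train, k):7.2f}  "
          f"validation MSE={mse(train, validation, k):7.2f}")
```

With k = 1 the training error is exactly zero because each training point is its own nearest neighbour, which is the "securing EVERY point on the graph" behaviour; the validation column is where the cost of that shows up.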

All this being said: would you know of any social science study that looks at a measure of "legislation density" vs. "result", e.g. in terms of economic growth or well-being? Could really be interesting to (a) verify my hypothesis and (b) use as an argument in the debate to push for the higher degree of freedom that is needed to assure a better generalization of our socio-legal framework to new data points, be they new technologies, new societal trends or challenges from external shocks.

Great piece; completely agree! There is also, I would argue, a subcategory of Gamers, and I can think of more than one prominent person in this role. These folks literally sell fear and dread. They give press interviews, speak at conferences, and post on LinkedIn with the express purpose of scaring people into thinking AI is dangerous, out to get you, all hype and no help, etc. But all their income, and some of it is substantial, comes from selling their proprietary version of AI directly to the government after they have sufficiently scared procurement half to death, or from cashing in on high priced advisory or speaking fees after railing about the harms of generative AI and the like. How these people sleep at night is beyond me.