Effectively Altruistic Techno Panic, Why Witches Cause Storms and How Not to Make Shitty AI Legislation

OpenAI's bizarre Effectively Altruistic techno panic and how it nearly set 90B on fire, AI dangers real + imagined, why much of the legislation around AI is bad + how we can make much better laws

How do we make good AI legislation?

A lot of people are working real hard to make shitty AI legislation but it's not too late. There is a clear path to good legislation. All we have to do is walk it.

I started writing this article a week ago only to find that it had gotten much more timely overnight with the shocking news of OpenAI's board firing Sam Altman over the weekend, the ensuing drama and his return to the top.

That craziness thrust a growing divide in AI into the spotlight. It's a divide that's led to the worst early AI regulation drafts, like some versions of the EU AI Act. A lot of people think it's a battle between people who legitimately worry that AI will kill us all and the go-go techno optimists of Silicon Valley who believe in progress at all costs, safety be damned.

But really it's not.

What happened at OpenAI was not a battle between safety and progress.

It was a battle between sound, sane, measured reasoning and people who believe crazy nonsense about AI.

Here's the thing though. It's not a new battle. People have always believed crazy things about technology.

Dungeons and Dragons, Bikes, Telephones and Radios: the Scourge of Mankind

Imagine if we'd regulated the radio out of existence in 1923 because local police blamed the radio and its evil invisible electrical waves for driving a local Sunday school teacher to beat his sister and brother-in-law to death before shooting himself?

What if we killed off the radio because a prominent boat captain claimed in 1928 that the radio was causing "the world's freak weather"?

What might have happened if we tried to regulate bicycles in 1895 because they might "hurt the bookseller's trade" or because bicycles made "youths insane"?

What if we'd destroyed telephones because they'd driven the whole world to "mania" and because they were "the latest negative result of modern inventions, and those who are its victims are objects of dread to their fellow citizens."?

Here's a list of technologies that people thought were going to devastate society or cause mass destruction:

Television was going to rot our brains. Bicycles would wreck local economies and destroy women's morals. Teddy bears were going to smash young girls' maternal instincts. During the insane Satanic Panic of the 1980s, which blamed Dungeons and Dragons and other influences like Heavy Metal music for imaginary murderous Satanic cults rampaging across the USA, people actually went to jail for abuse of children that did not happen.

(Source: the Satanic Panic as dramatized in Stranger Things. The real world version was much, much more horrifying in terms of damage to real people’s lives)

Wikipedia notes that "In 1985, Patricia Pulling joined forces with psychiatrist Thomas Radecki, director of the National Coalition on Television Violence, to create B.A.D.D. (Bothered About Dungeons and Dragons). Pulling and B.A.D.D. saw role-playing games generally and Dungeons & Dragons specifically as Satanic cult recruitment tools, inducing youth to suicide, murder, and Satanic ritual abuse."

One couple went to jail for 21 years, with the actual evidence submitted in court that Satan was able to retroactively hide all abuse signs on the children. That absurd claim was taken seriously and supported by a "quack cadre of psychotherapists who were convinced that they could dig up buried memories [in little children] through hypnosis."

I wish I was making that one up. Here's what they were convicted for, in an actual court of law in the United States:

"The Kellers had been convicted of sexual assault in 1992. Children from their day-care center accused them — variously — of serving blood-laced Kool Aid; wearing white robes; cutting the heart out of a baby; flying children to Mexico to be raped by soldiers; using Satan’s arm as a paintbrush; burying children alive with animals; throwing them in a swimming pool with sharks; shooting them; and resurrecting them after they had been shot."

People actually believed these things about radios, bikes, telephones, video games and Dungeons and Dragons and there were real consequences for it.

If all of those stories sound crazy now, it's because they are crazy, but it all felt very real to the people back when those technologies first came out.

Now we're seeing the same wild beliefs and speculations about AI, and those fears feel very real to the people who hold them too.

Some folks think AI is going to grow sentient, rise up and kill us all, or make us all obsolete, or become God-like in its powers almost overnight and recursively self-improve until it turns the whole Universe into paperclips. People nod their heads and say, "yeah that's perfectly reasonable." They'd probably be the same folks nodding their heads when the local cops blamed the radio for the Sunday school teacher beating his family to death. Everyone knows radios make you nuts!

With older technologies, we get the benefit of hindsight and we can look back and realize that we can't blame radios. Before the rise of the scientific method, educated people believed wholeheartedly that witches caused storms and that knives would bleed when a murderer was nearby.

How could anyone believe that, we think?

But we don't think it's crazy when people believe crazy things about today's technologies.

It's not just LLMs that hallucinate. Human beings believe all kinds of crazy stuff and we just take it as normal.

To be fair, sometimes when a technology is new it can be super hard to understand. Electric light bulbs looked like magic to people when they first came out. What do you mean there's an invisible stream of energy you can't see that lights things up? Like Arthur C. Clarke said, any sufficiently advanced technology is indistinguishable from magic. In a way it is magic. The whole world is made of the most exquisitely complex magic, a dance of atoms and evolution that's so beautifully and incomprehensibly advanced that it just appears mundane!

Most people still don't understand electricity but they use it every day and that's enough. Exposure to something makes us comfortable with the everyday magic of our lives.

Even though we should have learned our lesson a long time ago, we seem doomed to repeat the same cycle of taking wild speculation seriously with every new technology. At the dawn of any new tech, absurd ideas get mixed up with reality because people don't have enough experience to tell the difference yet.

People today want you to believe that this time is different. It's not. Like every technology that came before it AI will do some good, some bad and everything in between, but it won't destroy the world and most of the far out speculations people have today about AI will look as crazy as people thinking that radios caused freak weather or made someone stab their sister.

But when it comes to AI something is different this time. What's new about this fight is that sometimes the people who are afraid of the technology are the very same ones in charge of that technology. It's as if Jeff Bezos was starting Amazon but secretly hated and feared the internet and books.

But who are these strange and powerful people who believe in the end of the world but are busy creating the very same technology they think will make it happen? That's usually the province of Kool-Aid drinking death cults, not tech magnates and scientists and math savants. How did we get here? Where did they come from?

Enter the AI Decelerators.

Hitting the Brakes on Innovation

A few years ago, almost nobody had even heard of AI outside of seeing some robots in Star Wars. When I talked about it, I was talking to a small group of insiders who worked in the space or who felt passionate about it and who had a deep understanding of it.

Then suddenly AI thrust itself onto the big stage with the rise of ChatGPT.

Now everybody wanted to know about it. Was it sci-fi brought to life? Could I have a powerful AI friend and lover like in the movie Her? Was it a force for good? A force for evil?

Who would explain it all to them? Who would tell them about this strange and powerful new technology?

That's where the AI decelerators enter stage left.

Almost overnight, a loose-knit collection of formerly fringe groups crawled out of the woodwork to explain AI to people in the worst possible way. They'd been waiting for this moment for years and they were ready. Fueled by decades of Hollywood disaster films like Terminator and 2001: A Space Odyssey and their own incoherent ramblings about AI, they told everyone who would listen that AI would kill us all and destroy all the jobs overnight. LLMs were dangerous and would soon grow sentient. AI would accelerate away from us, self-improving to God-like levels overnight!

Remember when that guy got fired from Google because he thought LaMDA was sentient? Anyone who's now played with Google's models has no illusions that they're sentient or even very smart at this point. But at the time, people took that guy seriously even if everyone has forgotten his name now.

And nobody ever bothers to stop long enough to say, maybe we shouldn't take these folks seriously at all.

Soon after, we got the letter to pause AI innovation, and then it got more unhinged from there: an ever-accelerating Status Game where you got points for saying crazier things than the last guy.

We went from "let's think about this for six months" to we've got to shut it all down and if it's not stopped we should be "willing to destroy rogue datacenters by airstrike."

Here's an actual quote from Eliezer Yudkowsky, the prophet of AI doom:

"Shut down all the large GPU clusters (the large computer farms where the most powerful AIs are refined). Shut down all the large training runs. Put a ceiling on how much computing power anyone is allowed to use in training an AI system, and move it downward over the coming years to compensate for more efficient training algorithms. No exceptions for governments and militaries. Make immediate multinational agreements to prevent the prohibited activities from moving elsewhere. Track all GPUs sold. If intelligence says that a country outside the agreement is building a GPU cluster, be less scared of a shooting conflict between nations than of the moratorium being violated; be willing to destroy a rogue datacenter by airstrike."

AI doomers always want people to engage them on the merits of their argument so let's do that. In this one paragraph are multiple unhinged statements that are truly scary and the scary parts have nothing to do with AI. He is actually suggesting that we enforce a global ban on strong AI and that to do that we stand ready to bomb the hell out of datacenters that don't comply whether that country is hostile or friendly. And we should be less worried about an actual shooting war, where people die today, perhaps many many people, because AI might someday, potentially, kinda sorta be dangerous.

This is not sound, sane thinking and no serious person should take it seriously. This is scary, deranged thinking.

The people who want to destroy AI research come from a loosely knit collection of gamers, breakers and legal moat makers. You've got the Baptists (aka the true believers like Yudkowsky who really believe AI will kill us all) and the Bootleggers, a group of cynics who take advantage of those Baptists as useful idiots to push for legislation that will give them a massive regulatory advantage that kills all competition for them. Bootleggers don't actually believe AI will kill us all, but just like the bootleggers of the past, they're hoping to make a legitimate business illegal (or parts of it) so they can take it over and make a whole lot of money by killing off the competition. Lock out the competition and you've got a monopoly on the future.

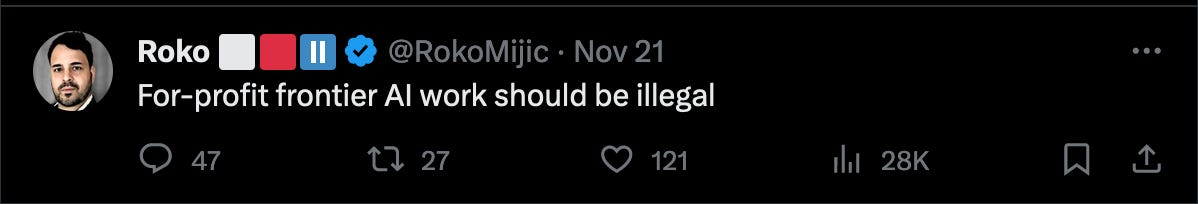

The founder of Inflection AI, owner of 22,000 H100 GPUs for training advanced AIs, wants to make high-end open source AI work illegal. Roko, the creator of the famous thought experiment Roko's Basilisk, which says that a super-powered AI from the future may punish people from the past who did not actively work towards its God-like ascendance (seriously), thinks all for-profit advanced AI work should be illegal.

A few other top AI voices want to make sure that you can never compete with their companies, using regulatory capture such as licensing for model makers. The Effective Altruism movement (EA) (powered by such luminaries as Sam Bankman-Fried and his massive crypto fraud) wants to stop open source AI by forcing companies to keep AI locked up behind closed doors instead of releasing the weights and the datasets and the papers that define how it works.

Politicians started to take notice.

AI doom policy language started to show up in drafts of bills making their way through legislatures around the world such as in the EU AI Act. The fringe groups wanted to force licensing on big model makers, regulate training and surveil data centers to make sure nobody was developing magical super AI outside of strict controls. They wanted to make big model makers liable for everything that could possibly go wrong with them, defined vaguely as "harms" which could mean anything from "spreading misinformation" or "bias" to someone using it for "bioterrorism" or whatever speech they don't like that day.

Thankfully cooler heads are starting to prevail and the member states are backing down from regulating the training and distribution of models. That's good. But there are still a lot of not so great ideas in these bills and in the Biden executive order that have bled into the language from fringe group lobbying efforts and mass media fear mongering.

Imagine if every kitchen knife manufacturer was responsible if you stabbed someone? Imagine if Adobe was responsible if someone made a poster with Photoshop advocating for a terrorist attack? Imagine if Reddit was responsible for every crank theory someone stupid posted on their platform? That's the kind of liability these groups were looking to push in their radical regulatory agenda.

If you're already thinking that would crush research and development, you're right. And that's exactly what they wanted.

They don't want to regulate AI intelligently.

They want to destroy it.

They want to kill it out of a bizarre fear that it will grow sentient and take over the world.

This isn't just speculation. In the letter signed by nearly every OpenAI employee asking the board to resign (738 employees out of 770) they note that the board told the leadership team that "allowing the company to be destroyed 'would be consistent with the mission.'"

Again, the folks gripped by this fear of AI don't want to regulate AI; they want to strangle it in its crib.

What AI model maker would take the risk to make any powerful model if they could be responsible for anything someone might possibly do with it? Why make a lamp if you're responsible when someone hits someone else over the head with it, even if 99.99999% of everyone else just lights their house with it?

The foundation of all sound, sane legislation is punishment for crimes after the crime is committed, placed squarely on the shoulders of the individual who committed that crime.

Car makers are not responsible if you crash the car, unless they were grossly negligent or deliberately cut corners and put in faulty brakes.

But X-risk groups, short for "existential risk", favor the Precautionary Principle, a legal philosophy that says we should go slowly when a technology has the "potential for causing harm when extensive scientific knowledge on the matter is lacking. It emphasizes caution, pausing and review before leaping into new innovations that may prove disastrous." The key here is that we're making up rules despite having no actual scientific knowledge or consensus, stopping innovation dead just because something might, somehow, someday prove dangerous.

It can be summarized as the "better safe than sorry" approach to life.

Critics of the Precautionary Principle, myself included, argue that it is "vague, self-cancelling, unscientific and an obstacle to progress."

Because it is.

It is basing legislation on the imaginary worries of people who have no evidence that their worries will come to pass, no meaningful way to prove or disprove their theories and no scientific data to back it up.

In other words, it's the equivalent of banning radios because the local cops blamed the radio for causing someone to go off his nut, murder his sister and brother-in-law and then blow his own brains out.

This is not the path to sound, sane legislation, it's the path to delusional legislation that stops good technologies dead for no other reason than a few people with suspect reasoning capabilities have a bad feeling about it.

When you have a group of people who, in the short term, could not even predict how their actions would play out at the most basic level in the real world (i.e. the OpenAI board), why would you think their long term predictive powers about AI and how it will play out are any better?

Look, the truth is that today's Bootleggers can and will use these folks to make shitty legislation. If everyone is afraid, they make bad decisions and that plays right into the hands of folks hungry for regulatory capture.

Today's early leaders in AI model making will profit massively if legislators force model licensing or regulate the training and distribution of frontier models. Licensing and distribution limits would cripple competition and concentrate power in the hands of a few companies that can afford to deal with the regulatory burden. It would also destroy open source AI.

Good Legislation and Why Licensing Will Concentrate Power in the Hands of the Few at the Expense of the Many

Okay, we talked a lot about bad legislation and bad ideas that are influencing legislation. So how do we make good legislation? It's pretty simple. Let's keep it short and sweet. You don't need much.

Focus on real world problems that exist now or in the near term.

Focus on AI that can kill people today, like AI in self-driving cars, law enforcement, surveillance and military systems. Such high-risk systems should be held to higher standards and testing criteria or restricted/limited.

Criminalize illegal uses of models, not the models themselves.

Stop focusing on vague or imaginary long term threats.

Forget trying to police training of models. It won't work and it will only get more and more infeasible with each passing month.

Protect open source research and open source AI. It's the best path to making these systems safer.

Have big tech firms report on their training methodology, architecture, parameter counts, and safety efforts.

That's it. A simple framework for making legislation that actually works in the real world.

But if we're not careful and we let the doomers drive legislation we're going to end up in a world nobody wants to live in. Lawmakers, in a misguided attempt to do good, would create the exact opposite of what they want:

Without competition, progress stagnates. When companies don't have to worry about new competitors entering the market, they stop innovating, jack up prices and stop having to serve their customers in any meaningful way. It's why many state sponsored companies in non-democratic countries are bloated dinosaurs that burn through capital and create little to no innovation.

They'll create worse security risks. When we have millions of apps powered by the same models, any security vulnerabilities ripple across all those applications. With only a few companies making models, the same models will power everything.

Closed source monocultures are a disaster. Closed source software companies can change the rules on you, inflate prices, lose everyone's data, or worse and we're stuck with them. The OpenAI drama shows us the dangerous, brittle nature of relying on a single company and their potentially erratic decisions. In the early days of computers, no matter how terrible the Windows operating system was, we were stuck with it because there was no alternative. Whether Microsoft put out a great OS or a terrible, buggy hunk of crap, they got our dollars. That's not something we want to repeat with some of the most powerful models on Earth.

Destruction of free speech. Today, we have an open web where people can meet peer to peer. That's stagnated in recent years as some platforms come to dominate and increasingly act as middlemen between our conversations, but in general the web is still very much peer to peer. You and I can talk directly about it and share our ideas and opinions. There are always new companies popping up that let people express their opinion freely, even if the older platforms lean toward stifling free speech. AI models can act as the ultimate middleman. If I no longer look up the information myself, but rely on a model to look it up for me or answer it for me without looking it up, then eventually that small group of model makers get to define the limits and range of acceptable free speech and what people can and can't say.

Regulatory capture. The ongoing lobbying for a licensing regime is nothing short of regulatory capture. Regulation favors the incumbents. As Andrew Ng wrote about the Biden executive order (or really any order that regulates the training and distribution of models): "It’s also a mistake to set reporting requirements based on a computation threshold for model training. This will stifle open source and innovation: (i) Today’s supercomputer is tomorrow’s pocket watch. So as AI progresses, more players — including small companies without the compliance capabilities of big tech — will run into this threshold. (ii) Over time, governments’ reporting requirements tend to become more burdensome. (Ask yourself: Has the tax code become more, or less, complicated over time?)"

And the worst part is that decelerator ideas won't work anyway.

They won't stop an AI apocalypse, even if it were real, but they sure as hell would concentrate power in the hands of a few small groups and safety committees.

They'll centralize power, create a monoculture of models that power every intelligent app in the world, and assign trust to people who we can't trust to act as our divine overlords.

Even worse, if regulators try to slow down AI how would they even enforce it?

Here's why regulation of models won't work:

As the prices drop, malicious actors will simply ignore the reporting and train their own models to do whatever they want, while only the good guys will get stuck with the increased regulatory burden.

How Does That Work Again?

OpenAI and AI doomsday prophets like Yudkowsky have suggested surveilling data centers. The idea is that AI eats up a lot of compute and bandwidth. Data centers would need to report on anyone building a massive training cluster and it would be hard to hide all those GPUs or specialized AI chips.

Setting aside the fact that most countries on Earth wouldn't agree to this, let's pretend for a second that we somehow magically got every single country in the world to agree to do this. China and North Korea gladly open up their doors for reporting on training to the whole world! So do Russia and various unstable Latin American dictatorships. All is well and we are all reporting accurately and faithfully to the global world AI Turing police.

(Source: The Animatrix)

But this starts to fall apart fast as decentralized training takes off and as the cost of training big models falls dramatically. From 2012 to 2019 the cost to train an image classifier dropped 44X. It will likely drop even faster now as more and more chips saturate the market and we get algorithmic and training tricks that make it faster and easier. GPT-4 was expensive to train but the cost keeps dropping as more and more players join the game. The cost to train GPT-4 was around $100M. The cost to train Llama 2 70B, a state of the art open source model that surpasses GPT-3.5 and approaches GPT-4, was estimated at around $8M only four months later.

It won't take long before the cost drops below $1M, and even lower than that in the coming year. Pretty soon you can train GPT-4 on your desktop and run it on your watch.
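To make the arithmetic behind a falling cost curve concrete, here is a minimal back-of-the-envelope sketch. It assumes the decline is a constant yearly factor, which is a simplification, and it treats the figures quoted above (a 44X drop over seven years, roughly $100M for GPT-4, a $1M target) as rough inputs rather than measurements. The function names are made up for illustration.

```python
import math


def annual_decline_factor(total_factor: float, years: float) -> float:
    """Constant yearly factor that compounds to `total_factor` over `years`."""
    return total_factor ** (1.0 / years)


def years_until_below(start_cost: float, target_cost: float, yearly_factor: float) -> float:
    """Years until start_cost falls to target_cost, dividing by yearly_factor each year."""
    return math.log(start_cost / target_cost) / math.log(yearly_factor)


# Rough inputs taken from the figures quoted in the text (assumptions, not data).
IMAGE_CLASSIFIER_DROP = 44.0   # 44X cheaper ...
IMAGE_CLASSIFIER_YEARS = 7.0   # ... between 2012 and 2019
GPT4_COST_USD = 100e6          # about $100M
TARGET_COST_USD = 1e6          # $1M

historical = annual_decline_factor(IMAGE_CLASSIFIER_DROP, IMAGE_CLASSIFIER_YEARS)
print(f"Historical pace: about {historical:.2f}x cheaper per year")

# Compare the historical pace with faster, purely hypothetical paces.
for label, factor in [("historical", historical), ("4x per year", 4.0), ("10x per year", 10.0)]:
    years = years_until_below(GPT4_COST_USD, TARGET_COST_USD, factor)
    print(f"{label}: {years:.1f} years to go from $100M to $1M")
```

At the image classifier pace this works out to roughly 1.7x cheaper per year and about eight and a half years to reach $1M, so the faster timelines depend on the pace picking up. Whichever pace you assume, the consequence for enforcement is the same: a fixed compute or cost threshold gets cheaper to reach every year.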

These surveillance proposals also don't bother to take into account new ways to train AI. If tomorrow someone comes up with a breakthrough to train AI the way we train humans, with little data, over short periods of time, the entire approach falls apart.

And if someone created that method, would they even bother telling anyone or would they just train the best models in the world on a fraction of the compute, falling well below the requirements, and not bothering to detail their breakthrough to anyone? You bet they would.

The technical know-how to train massive models is already widespread. The tools to build big models are all open source. Even proprietary models train on open source distributed training frameworks like DeepSpeed, Horovod and open source inference/training engines like Ray, running on top of open source container management systems like Kubernetes. Universities around the world and many companies have already shared their code, models, algorithms and their entire training repositories to make it easier. There are no secrets to building smarter, faster models. It just takes more compute and the price of compute is falling fast. Or at least that's what it takes now. A breakthrough in algorithms could drop off the massive compute part too.

OpenAI and proponents of the Effective Altruism movement have also pushed for a licensing requirement to train the most powerful models. The only way to really make it work would be to give governments extraordinary new surveillance powers and to get global coordination at a level that is unprecedented for a problem that does not really exist. Considering we've never gotten that kind of coordination on anything (see climate change), it's basically a non-starter. Maybe the only time that ever worked was for the regulation of chemical weapons and that was only because 1) every country on Earth had experienced the actual real world horror of chemical weapons and 2) chemical weapons are shitty, indiscriminate weapons anyway so it was no real loss to most countries to get rid of them in favor of weapons of mass destruction or precision weapons like laser guided missiles.

It also doesn't stop state actors from creating powerful AIs, or rogue states, or companies buying chips through intermediaries. There is now a black market for the top Nvidia chips in China and other places on the entity list. It's also accelerating China's push to build their own independent chip pipelines and you can bet they won't care at all about limits on super powerful AI when it comes to state security.

Licensing regimes buy, at best, a few years time (if that) before they fall flat on their face. Algorithmic improvements, new training techniques and the ever falling price of compute and training will wreck any licensing scheme fast. Once the costs drop far enough, the people who can train a powerful model will proliferate and policing them will become impossible.

And the worst part about all this?

All this nonsense about the end of the world is distracting us from the real dangerous uses of AI.

AI Dangers, Real and Imaginary

There are real dangers with AI. Tech can have real consequences but smart policies rely on proving actual harm rather than dealing with imaginary demons.

The Biden executive order on AI uses the "dual use" language, lumping AI in with weapons of war like missiles and tanks.

"Establish appropriate guidelines (except for AI used as a component of a national security system), including appropriate procedures and processes, to enable developers of AI, especially of dual-use foundation models"

The order exempts AI in military systems. That's no surprise as nation states live to defend themselves, but using AI as part of national security is exactly the kind of place we should be thinking about limiting AI. Should it be used in mass surveillance or in weapons systems that can kill without a human in the loop? China already uses it for mass surveillance and you can bet with 100% certainty that the western world does too. In fact, we know it's already used that way. In the book No Easy Day, written by one of the Navy SEALs who took out Bin Laden, the author writes that it was Palantir AI systems that found Bin Laden, not some plucky blonde CIA analyst with a gut feeling like in the movie Zero Dark Thirty.

In other words, right now, AI is already being used in surveillance and military systems and in tracking down people.

But we're not talking about that.

Instead we're talking about regulating civilian AI and completely ignoring actual, real dangerous uses of AI that exist today. We're talking about making sure the peasants never get strong AI, just like we once talked about why the peasants should never get strong cryptography in the 1990s during the crypto wars where the NSA wanted to put a backdoor chip in every phone and make something like WhatsApp impossible.

It’s all fine and good as long as we are tracking or killing the “bad guys” but remember that the definition of bad guys changes depending on who is in power. One day we are talking about a real bad guy like Bin Laden and at another time and place we are talking about the FBI monitoring Martin Luther King to try to pressure him to stop pushing for civil rights.

Somehow we've gotten away from actual AI dangers, like autonomous killing machines, aka LAWS or Lethal Autonomous Weapons. Those are for real dangerous. Lots of governments are building them anyway. Why aren't we talking about limiting these kinds of systems? We're just not seeing government legislation to protect us from nation states using AI for spying, mass surveillance and lethal weapons. Instead we're seeing legislation focused on the private sphere, aka you and me getting to use AI. Governments are all carving out exceptions for themselves to use AI in national defense, aka however they see fit, in whatever way they want to use it. This is not speculation, this is actual fact in both the Biden order and the EU AI Act.

Ronja Rönnback writes "While the AI Act prohibits or sets requirements for AI systems put on the EU market, it explicitly excludes from its scope AI systems used for military purposes only. Council’s General Approach on the AI Act confirmed this exclusion by referring to systems developed for defence and military purposes as subject to public international law (also according to the Treaty on the European Union, chapter 1, title V)."

(Source: The Economist's cartoon about the EU AI Summit)

Instead of worrying about war weapons and mass spying, we worry about whether ChatGPT makes up wrong answers to questions, as if it's the same thing. It's not. If kids use it to cheat in school, it's somehow a national disaster and the subject of countless news articles lamenting the death of education. Here's a shocker, kids have been cheating in school for all of time. Smart teachers are already adopting and embracing AI as something to learn about for tomorrow's workforce reality where everyone uses AI, rather than knee jerk reacting to it and banning it as if they could ban it anyway.

Of course, there are real concerns that don't involve injury and death. If we could just dial down the white noise of moral panic over AI, we could start focusing on them. We don't want chat programs advising kids about the best way to commit suicide or making up answers with great confidence about what pills don't have interactions. Some of those problems can be solved with research and smart programming.

We won't solve those problems in an ivory tower. We'll solve them in the real world, like we solve every other problem.

When we first put out artificial refrigerators, they tended to blow up. The gas coursing through them was volatile and if it leaked it could catch fire. People were rightly up in arms over that because nobody wants their house burning down over keeping tomorrow's dinner cold when natural ice worked just fine. In 1928, Thomas Midgley Jr. created the first non-flammable, non-toxic chlorofluorocarbon gas, Freon. It made artificial refrigerators safe, they gained widespread popularity, and we had a revolution in food storage and food security for the whole world.

Unfortunately, in the 1980s, we discovered chlorofluorocarbons were causing a different problem: ozone depletion. With actual harm proven, the world reacted with the Montreal Protocol and phased out the chemicals. NASA recently said the ozone layer is now recovering because of the ban.

You can't solve problems that haven't happened yet. You can only solve them as they happen or when they've already happened. Nobody wants problems but you can't predict every possible way a system can mess up. And usually when you try, you end up creating imaginary problems that never materialize and missing the real ones. People thought going over 10 miles an hour in cars might cause the body to break down and people to go insane. It's safe to say that wasn't a real thing, but later when cars were going 70 miles per hour and people were dying in car wrecks too often, seat belts offered a solution that saved a lot of lives. It was an actual fix to an actual problem. Once we see a real problem, then engineers can start to work on it in earnest. That's why we have crumple zones and airbags now too.

No matter how long OpenAI trained ChatGPT, it's just too big for any one company to get perfect. We're talking about a completely open-ended system here, one that you can ask any question you can possibly imagine and it stands a good chance of giving you an answer. How could any one company test every possible way it could screw up or how you could attack it? The only answer was to open it to the Internet and let people do what they do so tremendously well: find loopholes and exploits. Within a few days, people had figured out how to get around its topic filters by posing the questions as hypotheticals, and how to get it to enable its ability to search the web, execute code and run virtual machines. And OpenAI had figured out how to shut most of that down too.

That process of real world exposure is absolutely essential. It can't be done in advance where you try to guess every possible misuse and exploit and attack. Humans are incredibly creative hackers. The old military phrase "no plan survives contact with the enemy" is true here too. You can dream up all the safety restraints you want in isolation and then watch them fall in minutes or days when exposed to the infinite variety of human nature.

We already have most of the laws we need to regulate AI. It's illegal for someone to call you and tell you your mother is dying so you have to send money right away. That's fraud. If someone does that with AI cloning your voice it is still fraud. We don't need a new law. We just need to enforce the laws we already have on the books.

But we do need to fill in the laws where things are not clear, like with self-driving cars. Today the blame falls on drivers unless there was gross negligence on the part of the car manufacturer. But if no one is driving, who is to blame for a crash? We have to work those kinds of things out ASAP.

So let's stop pretending we can make AI perfect and that nobody will ever do anything wrong with it. People do wrong with lots of things. Everything in the world exists on a sliding scale of good to evil. A gun might be closer to the side of evil, but I can still use it to hunt for dinner or to protect my family from home invaders. A table lamp might be closer to the side of good but I can still hit you over the head with it.

AI will be both good and bad and everything in between. It will be a reflection of us.

So let's speed up AI innovation and create clear, minimal legislation that actually works.

Let's put it out there in the real world.

There will be problems. There always are. But we'll do what we always do.

We'll solve them.

I strongly agree that the precautionary principle is a terrible basis for approaching AI. Or anything else. I have proposed an alternative: The Proactionary Principle. It is close to some other approaches such as "permissionless innovation". Specifics in my blog piece (and others on the proactionary principle):

https://maxmore.substack.com/p/existential-risk-vs-existential-opportunity

"Some people in history believed wacky things. Therefore, arguments that suggest AI can someday replace human intellect are wacky."

You need to revisit your logic.