Anti-Open Source AI Agitators are Uniquely Dangerous and They Must Be Stopped

Misguided Pressure Groups are Pushing Dangerous Policy Ideas that Will Kill AI Safety and Crush Western Innovation. They Must Be Stopped Now.

Open source AI is under attack.

A flurry of articles in the popular press and even in well-respected tech journals is calling for the death of open source. They want to make it impossible to do open research and share model weights. They're whipping up fear, backed by a well-funded global movement of AI existential risk groups that want you terrified of artificial intelligence.

Artificial intelligence holds tremendous potential to transform medicine, discover new antibiotics, make cars much safer, make legal knowledge and coding skills more accessible, and translate text into lesser-used languages, spreading knowledge farther and wider. It's already doing this and more.

But if anti-AI and anti-open source pressure groups succeed in strangling AI in its crib, we'll end up with three or four companies that have a license to make powerful models, and everyone else will be locked out. We'll get stuck with a bland, watered-down, neutered AI in the western world, while authoritarian states rocket forward with AI built first for surveillance, control and weapons, blissfully ignoring the policies we created and laughing as we force-feed ourselves economic poison pills.

Their misguided policy suggestions must be wholeheartedly rejected at every level.

That's because the ideas of these anti-AI groups are built on a fundamental misunderstanding of how open societies work and how open source software has changed the world for the better over the last few decades. Open source is the backbone of the world's economy now, and open source and open weights AI will be the backbone of the world economy tomorrow. Already, today's open source powers 97% of the world's software in one form or another.

Linux, the most successful open source project of all time, runs every major cloud, all 500 of the top supercomputers on the planet, all the AI model training labs, most of the world's smartphones, the router in your house and so much more. That's just one open source project. Millions of other projects power every aspect of the web and security. According to GitHub's Octoverse report, "In 2022 alone, developers started 52 million new open source projects on GitHub—and developers across GitHub made more than 413 million contributions to open source projects."

A new study out of Stanford, called Considerations for Governing Open Foundation Models, found that far from causing massive social unrest and abuse and other imaginary problems, "Open foundation models, meaning models with widely available weights, provide significant benefits by combatting market concentration, catalyzing innovation, and improving transparency."

Open source is a powerful idea that's shaped the modern world, but it's largely invisible because it just works and most people don't have to think about it or even know that it exists. It's just there, running everything, with quiet calm and stability.

Open source is so successful because it levels the playing field in life. It's a common set of building blocks shared by all people. It means you, I and everyone else get to use the same tools as multibillion-dollar corporations and governments. A local community group, a university and a young entrepreneur fresh out of school all get to use the same core components as massive, world-striding corporations and nation states. We all begin from the same starting point.

Open source started as a small movement that slowly grew to power the entire world. If open source AI is allowed to flourish, you can expect it to follow a similar trajectory, rising to become the most important software of tomorrow and powering a massive array of future applications at every level of society. Killing it off is nothing short of economic suicide. Crippling it with stultifying bureaucracy and "compliance monster" legislation is sacrificing our future selves for delusional, imaginary risks that will never come to pass.

It almost happened once before. In the early days of Linux, open source was under attack. Back in the 1990s and early 2000s, Microsoft and SCO wanted to destroy Linux. Ballmer and Gates called Linux "communism" and "cancer". They told us it would destroy capitalism and intellectual property and put big companies out of business.

Imagine if Microsoft had been successful. They would have destroyed their own future business model, since Linux now runs more than 60% of customer workloads on their Azure cloud.

Today's backlash against open source AI is driven by an equally misguided array of academics, anti-tech policy pontificators, professional AI agitators and fear mongers, and cynical companies looking to create a regulatory moat.

The vast majority of these pressure groups are not doing anything concrete to make AI safer themselves. Almost across the board, they're not working on AI alignment issues in a real lab, developing novel algorithms, or finding safer ways to create AI. Instead, their entire job is to manufacture outrage and fear through a constant barrage of mass media articles and letters to regulators. Unfortunately, it's working. They're shaping the western world's view of AI. Ipsos, a market research company, showed in one of its latest reports on AI that people in Asian and developing countries feel overwhelmingly positive about AI, while Americans and Europeans are nervous about it after a constant bombardment of deranging messages from these groups.

(Source: Ipsos, Global Views on A.I. 2023)

While Taiwan and other progress-embracing Asian nations race forward, we're in danger of stagnating in the west because of this constant barrage of anti-tech messaging. The Taiwanese take a nuanced approach to technology. They work to mitigate its downsides intelligently and practically. While they have a vast array of sensors around the city, ensuring traffic flows smoothly and emergency vehicles get to people faster, they also had an "entire team at City Hall...devoted to anonymizing the data from these streams, even going so far as to do their best to ensure identity couldn’t be reverse-engineered," as Alethios writes in "Why We Need Taiwan".

He goes further, showing how an embrace of machine learning can dramatically improve medical technology: "These technologies are not only being used by local governments but are being increasingly applied in Taiwan by the medical sector as well. Traditional monitoring sensors are being supplemented with cameras and ML models trained to detect signs of deterioration, assist with diagnosis, and assess patient risks. Patient mortality has subsequently dropped by a staggering 25% in less than a year, while antibiotic costs have fallen by 30%. Emergency patient wait times have halved. Taiwan is also experimenting with ‘smart’ operating theatres and telemedicine, allowing remote and mobile centres, as well as emergency vehicles, live access to central AI models and specialist services."

To turn around this downward spiral in the west, we need to swiftly purge any current legislative bills found to include these anti-tech agitators' infectious language, because their ideas will make it impossible for small businesses to enter the AI market, cripple western innovation and create government-mandated monopolies for the very Big Tech companies that many of these folks oppose tooth and nail.

These ideas include:

Licensing regimes that would require companies to get a government license to train and deploy AI

Imaginary watermarking technology for AI text that simply does not exist and is impossible to build

Creating liability for AI makers around nebulous and ill-defined "harms" that essentially boil down to "anything we say is a harm."

The recent dangerous policy propaganda article in IEEE Spectrum called "Open Source AI Is Uniquely Dangerous" advocates for all of these ideas and more. These poisonous, naive and misguided policies usually come from people who believe slowing down AI will benefit us all, without seeming to understand that slowing down AI in the western world means real threat actors and hostile nation states will blaze forward while we fight among ourselves, battling phantoms and imaginary risks.

Even worse, these policy pushers don't seem to understand that they'll cripple the very research they're hoping to ignite: safety research. The IEEE article advocated "paus[ing] all new releases of unsecured AI systems until developers have met the requirements below, and in ways that ensure that safety features cannot be easily removed by bad actors." If we pause all development, nobody will actually be solving the engineering problems. It's a basic misunderstanding of cause and effect.

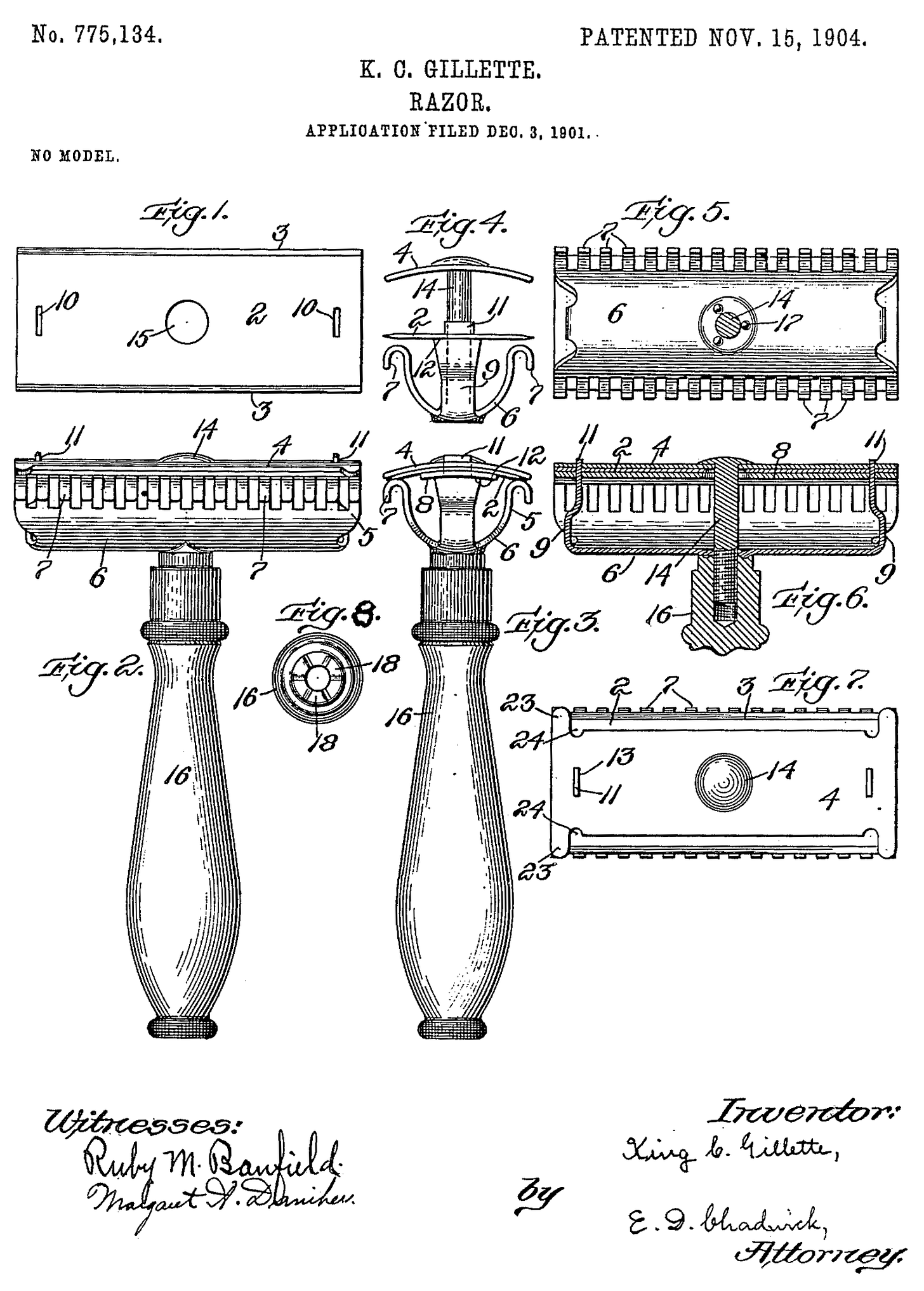

Without AI products in the real world, there is no revenue to fund safety research. We do not solve problems in a vacuum. We didn't design airplane safety standards before we created airplanes. The seatbelt was not invented before the car. The safety razor didn't come before the straight razor. This isn't because of a lack of will; it's because it's actually impossible. We have to have a problem in the real world to actually solve it.

(Source: Wikimedia Commons)

Early planes had terrible track records and often crashed and killed people. Over time, engineers and operations teams developed better mitigations for the worst problems in plane manufacturing and plane operations, at both a policy and a technical level. For instance, pilots are now trained to deal with time dilation in a crisis, the sense that time slows down and you have hours instead of one minute to land that out-of-control plane. The industry developed better autopilot systems, better materials and better ways to build and design planes. It also created reporting programs such as NASA's Aviation Safety Reporting System, where pilots who self-report an incident promptly (generally within 10 days) are shielded from FAA civil penalties, while those who hide it are not. That leads to truthful information sharing and lets ground operators fix problems fast.

Now flying is one of the safest activities on Earth. As the International Air Transport Association (IATA) put it:

"The industry 2022 fatality risk of 0.11 means that on average, a person would need to take a flight every day for 25,214 years to experience a 100% fatal accident."

Over time, we always develop better and better mitigations, but those have to happen in the real world, as problems develop.

Often these folks imagine that companies will somehow be incentivized to solve these problems before putting out a product and dealing with what happens, as if they could do it magically in isolation, without a growing stream of revenue to pay employees. But it doesn't work that way. Even with $1 billion in pledged funding as a non-profit, OpenAI realized it couldn't keep getting funding without an actual product, and nobody else can either:

"So we started as a non-profit, we learned earlier on that we were going to need far more capital than we were able to raise as a non-profit," said OpenAI's CEO, Sam Altman.

This lack of basic understanding of cause and effect, or of the complexity of how things actually develop, is a recurring theme among these anti-open source agitators. Other examples abound. Later on, the IEEE author, David Evan Harris, raises the specter of AI and bioterrorism when he writes, "Unsecured AI also has the potential to facilitate production of dangerous materials, such as biological and chemical weapons." Mustafa Suleyman, another AI model maker pushing for open source restrictions through regulatory capture, said "what if AI released a virus!"

Let's walk through the logic here. How does a machine learning model "release" a virus with no actual physical presence? How does it get the chemicals or genetic materials? Who tests it? How do they release it? The list goes on and on. The hard part is not coming up with a virus. Any first-year genetics PhD could do that work. The hard part is synthesizing it, storing it, testing it and releasing it. AI does not make any of that easier.

Take a quick look at the link provided in the IEEE article to support bioterror fears. It's nothing but a link farm blog post that cites articles like "The Genetic Engineering Genie is Out of the Bottle," which tell you it's now "easier than ever" to create a virus while offering exactly zero examples of this actually happening. That article is about CRISPR and was written in 2020. The groundbreaking paper on the gene editing technology was published in 2012, with roots going back to the early 1990s. Twelve years later, we should have a whole army of examples of the devastating consequences of misusing that technology. Instead, we have countless examples of CRISPR being used for breakthrough treatments for people with sickle cell disease, promising results in treating neurodegenerative disease, and advances in immunotherapy to treat cancer.

(Source: FDA)

A study with control groups released by OpenAI in January 2024 found that access to GPT-4 gave only a mild uplift, too small to be statistically significant, to both PhD-level biology experts with wet lab experience and biology students trying to work out how to create weaponized pathogens. The study also found that just having the information was not enough to create bioweapons and that the model was sometimes wrong, aka it hallucinated, which goes right back to what we already said above: you still have to know what you're doing to test it and make it.

These bioterror fears also don't take into account the fact that bioweapons are some of the most useless weapons on Earth because they are imprecise and indiscriminate. They don't just kill your enemies. They kill your friends, family and countrymen too. That's why most governments and even psychopathic terror groups have shunned them. The best-known incidents include the ricin-in-the-mail attacks of the 2000s, which used a poison (not a virus) made from the waste of castor beans, and the 1995 Tokyo subway attack (sarin gas, also not a virus), carried out long before AI existed by multiple members of a deranged, well-funded cult, including a former chemist. The cult's weapons program required massive coordination to acquire spores, take over a lab, bring on multiple additional chemists, and more.

Beyond bizarre bioterrorism fears, these anti-open source AI articles are always filled with more faulty reasoning.

"The threat posed by unsecured AI systems lies in the ease of misuse. They are particularly dangerous in the hands of sophisticated threat actors, who could easily download the original versions of these AI systems and disable their safety features, then make their own custom versions and abuse them for a wide variety of tasks," writes Harris in the IEEE article.

Nation states don't need any help building powerful models or attack tools. Threat actors aren't sitting around waiting for open source models to do their dirty work. APTs (Advanced Persistent Threats) are powerful government hacking groups that have raided major corporations to steal massive amounts of IP, hacked dissident groups, aided cyber military assaults in modern wars, and influenced elections. They've written some of the most powerful hacking tools on Earth. They are not waiting for script kiddies to write them a malware tool that they then modify for their own purposes. They write their own tools.

"You could ask them to...provide instructions for making a bomb, make naked pictures of your favorite actor, or write a series of inflammatory text messages designed to make voters in swing states more angry about immigration," writes Harris.

None of these are new threats. You could ask the internet how to make a bomb and it will happily tell you, with less chance of getting it wrong than an LLM prone to hallucinations. You could buy The Anarchist Cookbook as far back as 1971, and download it once the internet came along. You can make naked pictures of people in Photoshop, or write a series of inflammatory messages to swing state voters yourself and automate spamming people with traditional code. We don't ban or blame Photoshop for this, and spam is already illegal.

(Source: Reddit)

What do all of these dangerous policy suggestions and flimsy evidence have in common?

They all boil down to a complete lack of understanding of risk in real life.

These folks imagine we can create a risk-free society. They hope we can somehow magically eliminate all risk and build in safeguards so nothing bad ever happens to anyone, but that's impossible. Everything in life is a risk. You can pick up a kitchen knife and stab someone instead of cutting vegetables, but we keep making kitchen knives. We don't blame the kitchen knife manufacturer for the attack. We punish the person who stabbed someone and let the other 99.99% of us cut vegetables. Everything we do or build has downsides and risks. We have to be comfortable with risk if we want to make progress in the world.

If we build a highway, someone can drive too fast and kill themselves or others. We still build highways. Build a boat and people can drown. Many people have gone down to the watery depths over countless millennia, and we still build better and better boats. People die in construction accidents, and we still build skyscrapers. Linux runs every major cloud, your home router and supercomputers, but it is also used to build botnets and hacking tools. We don't ban Linux.

In the US, you have a 1 in 93 chance of dying in a car accident in your lifetime. You still get in a car. While anti-AI agitators point out everything that can go wrong with AI, they usually fail to point out what good it can do in the world. Driving is one of those risks that AI can cut dramatically. Around the world, road crashes kill about 1.35 million people every year and injure up to 50 million more. If AI cut road deaths to a quarter of today's level, that would be roughly 1 million more people every year walking around, playing with their children and living their lives.

Along the way, as technology develops, we try to mitigate risk, but we do it intelligently, balancing it against the ability to move forward freely. As a society, we're always trying to strike the right balance between freedom and security. We have to let people freely innovate without permission, compliance nightmares or top-down authoritarian control if we want to reap the benefits of technology.

What kinds of laws do we need to regulate AI? Mostly, we don't need special new rules to deal with it. If someone makes a synthetic voice of your mother and calls you to scam you out of $5,000, that's still fraud, even if the tactic is new. It’s just a variation on the 419 scam and other confidence games. That kind of fraud goes back to the Spanish Prisoner scam of the 1800s, and its modern version took off in the fax era of the 1980s. It's already illegal to scam people out of money, and we should punish people who do it to the full extent of the law.

We do need limited new laws that are tightly focused on areas where there is genuine grey area. A good example is self-driving cars. Current laws assign blame based on the driver. Did they lose control? Were they drunk? Did they hit someone from behind or in front? But if nobody is driving, we still need a way to assign responsibility for accidents, so we need new legal thinking here.

Free societies are built on risk. Openness is a risk. It inevitably means that some people will take advantage of that openness, but closed societies are not only less successful, they don't minimize that risk at all. Authoritarian regimes still have crime and abuse, plus abuses by the state itself. Free societies emphasize people's ability to make their own choices and to take on risk for themselves. Sometimes people make bad choices and they get punished. It's still worth having an open and free society.

More intelligence, more widely spread, is the best way to make this world safer and more abundant for as many people as possible. When you think about it, what doesn't benefit from more intelligence?

It's delusional to imagine that we can strip all the risk out of systems. It's absurd to limit legitimate uses drastically to try to stop a few bad actors. We should have the freedom to share research openly, to interact with AI the way we want and to not have AI locked up and controlled by a few people who want to keep all the benefits for themselves.

Life is a risk. It's a risk worth taking. So is AI.

AI will revolutionize health care, medicine, materials science, entertainment and more. It will help us create new life-saving drugs, make driving safer, stop more fraud and revolutionize learning as you chat with your AI to learn a new language or a new subject, but not if we let these anti-AI agitators kill it in its crib.

The biggest risk is not embracing AI and giving in to irrational, imaginary fears.

Resist any and all anti-open source AI policies and trust that openness is always the path to a better tomorrow.

The only thing to fear about AI is fear itself.

Well-written, Daniel, thank you! 💯

I'm also seeing a lot of attacks based on protecting systems that are already really broken and ineffective, such as in education, copyright, politics and so on. The best way out is through, not back.

Thanks, Daniel. Thought-provoking, as always.

Isn't COVID-19 at least one example of CRISPR being used for harm?