AI, Art, Blurred Lines and the Looming Nightmare of Copyright Expansion

The Push to Expand Copyright to Cripple AI Systems Will Have Blowback that Few People See Coming and Nobody Really Wants. Even Worse, It Won't Stop AI Anyway

AI is a Rorschach test.

People can see anything they want in it. AI doomsday. The Singularity. The end of all jobs. A utopia of total abundance. The end of creativity. An explosion of new and never-before-seen creativity.

It's a mirror for all our hopes and dreams.

Never mind that the AI revolution is probably not going to be any of these things. It's going to do some wonderful things, some iffy things, and some bad things too. It won't bring about utopia or the end of the world or destroy all the jobs. But that won't stop people from thinking it, because life is scary and strange and mysterious and we need a foil to push against. It makes us feel like we're in control in a world of chaos.

One of the biggest fears of all is that AI might be just like us: as nasty, violent and barbaric as we are.

It might mirror the genocidal and world conquering blood lust of the kings and queens of old and rise up against us, bringing divine retribution for our sins. I won't spend any time on that fear here as I've already written about the Rise of the AI Doomsday Cult. My TLDR is we all need to take a few deep breaths and realize we'll handle it. Really, we will.

This technology is too amazing to let fear cripple it before it gets off the ground. There's no industry on Earth that won't benefit from more intelligence.

But I will spend a little time on the other big fear driving anti-AI paranoia: The end of all jobs.

That's the one that looms largest on people's minds and the one that's behind most calls to regulate it into oblivion, or stop all progress, or deliver a UBI now before it's too late.

More than anything, it got me thinking about whether a machine could replace me. And that got me thinking about what makes me, me. That led me to my own writing: how I learned to write and who my influences were. How do I learn, how do machines learn, and is it really any different?

After twenty years of writing, I don't think about writing much anymore.

I just do it.

I do it as easily and naturally as breathing.

But when I was learning to write, I read every book on writing I could get my hands on, went to seminars, joined writing groups, and read the great books from the great masters. As I read I tried to pick apart what those writers were doing. When I found a brilliant description I read it again and again, trying to see what kinds of tricks they'd used to make the sentence sing.

Was it a brilliant and vivid description? Was it word choice? Or maybe it was sibilance, the repetition of S sounds that can make a sentence sound sinister or seductive. Or maybe it was assonance, the repetition of vowel sounds. Just listen to Roy Orbison sing "Only the Lonely" or Audrey Hepburn as she says "the rain in Spain stays mainly in the plain."

(Source: Audrey Hepburn in the rain by Midjourney 5)

I've been writing for twenty years and I've written almost every day for more than a decade. I still write 4-5 days a week, even now with everything else going on in my life. Through all those years I honed my skills and my signature style that a writing friend once described as "building and building and then crashing like a massive wave."

Large Language Models may write faster than me, but I still do it a lot better, at least for now. I can instantly look at something written by ChatGPT and know what's wrong with it. Maybe the paragraphs are all the same size, which tires the reader's eye and bores the reader. Maybe the word choice is too formal. Maybe there are too many "be verb" constructions, so it sounds like a high school dropout wrote it, or not enough, so it sounds pretentious. Maybe it makes great points but doesn't drive home the big point in the last few paragraphs.

I can tell the model how to fix it but it's still never perfect.

That's because at this point in my life, I know tons of tricks to make my words stick in your mind. I don't need to think about them. They're just there. I don't think of a certain sentence as sibilance, I just use it when it feels right without focusing on it consciously. I've got "unconscious competence" with writing, meaning I've done it so long that it's a reflex I can draw on. I've studied all the patterns and I know them by heart and I can use them in my own unique way.

We tend to think of our style as uniquely our own, and it is our own. But it's built on the shoulders of giants. I still know a few of my influences, but most of them I've forgotten. They got mashed up in the primordial ooze of my mind and now I can use what I learned without knowing where it came from anymore.

(Source: Hemingway by Midjourney 5)

There are a few influences that stand out still, like Hemingway. I learned to write short, sharp sentences from the old master. I learned how to deliberately write run-on sentences from him too. Sometimes you want to keep the action sweeping along, so you just extend the sentence beyond the bounds of mere grammar and that sweeps the reader right along with you and you don't worry about the grammar because it's the feeling that matters more.

But along the way, after all that training, my writing somehow morphed into my own style. I still have the hallmarks of Hemingway in my work, but his work is stripped down and sparing and mine is flowing and lavish and flashy.

When you're a young artist you copy more than you create. It's how you learn.

The old phrase says it best: "Good artists borrow, great artists steal." It's been attributed to everyone from Picasso to Stravinsky.

(Source: If It’s Hip, It’s Here - Article on Deconstructing Lichtenstein project by David Barsalou)

Its meaning is complex and simple at the same time. When you're learning, you borrow ideas and phrases and style from others, but you don't really know what you're doing and you don't acknowledge that you're unconsciously taking from those who came before you. You're aping their ideas but you haven't really made them your own.

But when you're a mature artist, confidently working in your own style, you acknowledge all the influences that came before you and that inspired you. You realize no idea is really new, it's just the twists you put on it when it's filtered through your own lens. You take boldly from the past knowing that every great artist who came before you did the same. Shakespeare didn't invent a single one of his plots but his masterful take on all of them makes his versions last.

All of that writing and reading and studying boils down to training. It's different from how we train machines but it's still training. I took in the data of the world around me and learned how to write better and better with time and age.

Machines learn from the world around us too. They learn in a way that's a lot like us and different from us too.

Machines learn from lots of data and compress it into a relatively small number of digital neurons, while people learn from a lot less data and spread it out over 86 billion neurons because we've got neurons to spare. But overall it's similar. We learn patterns. We learn concepts and ideas, and machines do the same.

And machines are getting very good at learning these days.

After decades of promises that never lived up to expectations, artificial intelligence is finally roaring out of the research labs into the real world. 100 million people are using ChatGPT for everything from planning their holidays to therapy to coding. AI is predicting the shape of nearly every known protein and has the potential to revolutionize drug discovery. It's winning at Go and Dota 2. It's spotting signs of heart trouble on Apple Watches so people can get to a doctor long before they collapse clutching their chest. It's driving cars on the busy highways and backroads of China and San Francisco, and soon everywhere else, and it holds the potential to dramatically cut the number of deaths on the road. Over 1.35 million people die on the road every year and up to 50 million more are injured. Cutting those deaths in half would save nearly 700,000 lives a year. Cutting them to a quarter would mean a million people still walking around and playing with their kids and living their lives.

But despite these machines offering a massive potential to change life for the better, they've faced a backlash recently.

Even the godfather of AI, Geoffrey Hinton, has gotten a little worried. He doesn't think we should stop developing AI, but he does think we should pour resources into controlling it. (Of course we should, and we are.)

As Snoop Dogg said of Hinton's worries, "is we in a fucking movie right now or what?"

(Source: Stable Diffusion custom model)

But the backlash really started with Stable Diffusion, an image generator, when three artists filed a class action lawsuit against Stability AI, Midjourney and DeviantArt. A class action lawsuit is the kind where the plaintiffs usually don't pay the lawyers up front. The lawyers get paid if they win the case, by taking a nice chunk of the settlement. That's why class action lawyers typically go after firms with deep pockets, or firms they perceive as having deep pockets, because they don't get paid unless the companies they're targeting have money they can siphon off like snakes sucking blood from a juicy thigh.

But where did the lawsuit even come from? It came after a vocal and often highly charged back and forth on Twitter and other social media sites between artists and AI advocates, which got really nasty.

As both an artist and a technologist, I was caught off guard by the debate and its vitriol.

As the fear and rage spiraled out of control and artists lashed out, even threatening to kill someone who made a children's book with AI, the folks behind the outrage searched for a narrative that would stick. Most of the arguments didn't work and didn't catch on with the general public. Arguments like "It's not real art" didn't mean much to non-artists. Others, like "It's not right to use a living artist's name in a prompt," worked as a rallying cry inside artist communities but not outside them. Still others claimed that AI "art" (always in quotes, to dismiss it as not real art) is ugly and generic.

None of these mattered much with the general public.

People everywhere liked the AI art generators. They wanted to use them. They were new and exciting.

It's fun to push a button and get something visually awesome in an instant. AI avatars took off like wildfire, as internet influencers uploaded selfies and got back pictures of themselves dressed in dozens of historical outfits.

(Source: Stable Diffusion early version)

But eventually the artist community hit on a keyword to rally around:

Consent.

They focused on the vast amounts of training data these models needed as a uniting thread to coalesce behind. They said, "I didn't give you the right to train on my data. I didn't say you could do this!"

Consent is a near magic word in the modern world.

It symbolizes free choice, freely given.

It's a word that's always been with us, but it's taken on near godlike power as younger generations came to terms with the historic horror of the world, where everything from forced labor to sexual assault was commonplace and swept under the carpet. It's a sane reaction to insane history.

Consent is too good a word and too powerful to be left to only one aspect of life. It's a word few can disagree with. You don't like free choice? You don't feel everyone should agree with what's happening? How fucking dare you?

And so it's a concept that's moved on to anchor many other debates in many different parts of life. It's sometimes enough to just say the word to shut down all other arguments because the other side can't disagree without looking like a bit of a monster.

But what does consent mean when it comes to training data for AI?

Should machines be allowed to learn on human data at all?

That's really the heart of it.

Well, actually, it's not. It's a great idea to rally around, but it's not what we're really arguing about here, even if people think it is. So let's talk about what we are arguing about, and then come back to consent.

Coffee, Revolution, Innovation and Its Enemies

In the book Innovation and Its Enemies, by Calestous Juma, the author breaks down the long history of opposition to new technologies.

You may think that AI is the first technology people have ever tried to stop, but everything from the printing press to cameras to 5G has faced fierce and sometimes violent opposition. In almost every case of opposition to a new technology, nobody is really arguing about the thing they're arguing about. They come up with a different, emotional frame to get people fired up, but they never address what's really churning below the surface.

What they're really arguing about is money and jobs.

(Source: DeepFloyd IF model)

Who benefits from a new technology? Who loses out or thinks they're losing out?

The same is happening here with AI. On the surface, the argument is about consent, but really it's about the dread and fear of losing a job, or mass unemployment, or big societal changes. The hope isn't that AI companies will have to ask artists for permission to learn from their work; the hope is that they can kill AI altogether and stop any chance that AI has any place in art by making it even more absurdly expensive to train these systems.

In the long run, change is inevitable though. AI is coming and nothing can stop it, even if it slows in the short term.

If lawsuits manage to expand copyright law to make it impossible to learn from internet data, then AI researchers will simply be forced to think in new directions and dream up ways to make AIs learn more like people. It's already happening anyway, like DeepMind using reinforcement learning to teach robots to play soccer.

People will figure out how to teach AIs the way we teach small children. If I take a kid out in the back yard and toss him the ball a few times over a few weeks, he'll figure it out. He may not go pro without a lot of study, but he'll understand the concept. Eventually AI researchers will mirror that approach even if they're shut down by frivolous lawsuits and other attempts to kill off progress in AI.

Powerful technologies always find a path forward, even if they face massive opposition.

Take something like coffee.

(Source: Vintage Nescafe advertisement)

You might not think of coffee as a technology, but the process of gathering the beans, roasting them, storing them and transforming them into a drink are all part of a chain of technologies that make it possible for you to get your morning caffeine fix. Today, when you're picking up your coffee from the local hipster barista shop or mega-chain you probably never imagined it was once controversial.

But for hundreds of years it faced slander, demonization, legal attacks and more.

Kings and queens saw coffee houses as breeding grounds for revolution and regularly shut them down, harassed owners, taxed them and arrested them. In parts of the Islamic world many powerful leaders saw coffee as an intoxicant no different than alcohol and drugs, and they attacked it on moral grounds with fatwas. Beer and wine makers formed powerful opposition groups, seeing coffee as a competing product that encroached on their market share. For much of history people avoided drinking water because it was often unsafe, and instead drank watered-down beer and wine throughout the day. Beer and wine makers saw coffee as destroying their markets and fought it tooth and nail.

What they were really arguing about was power. Coffee houses were places where people got together and exchanged ideas, and that was dangerous to the kings and queens of old who thrived on keeping people locked down and ignorant. Competing businesses saw coffee as a threat to their profits, and that was about jobs and who gets to make the money. Both forces fought back against the society-shifting power of coffee.

You already know the story of how the prohibition of coffee turned out.

Today most people would look at you like you had two heads if you told them coffee was once a battleground. They take selfies with their coffee and grab it to go so they can work or study harder.

After a technology triumphs, the people who come later don't even realize the battle happened. To them it's not even technology anymore. It's a part of their life, just another thing. They just use it. To someone born today, a car or refrigerator or a cup of coffee is no different than a tree or a rock. It was always there.

The same will happen with AI, one way or another. It's just too important and powerful a technology not to find a way forward. There is simply no industry on Earth that won't benefit from getting more intelligent.

But opponents of AI can manage to make it painful for a time.

And that can create a world where copyright is expanded in new and ugly ways.

If they really stopped to consider all the repercussions and unexpected consequences they might not like the world they're hoping to create, one where big content providers can restrict whatever you want to write about or paint or draw.

What's really happened here is that big content providers have capitalized on artist rage and fear and duped them into supporting copyright expansion. There's a sense in the artist community that every artist will get paid for their training data if they rally behind consent.

Let's be clear. That will never happen.

Nobody is ever going to call up Dan Jeffries and send me a check because they scraped my Future History Substack. Maybe Substack, the corporation behind my blog, might try to charge for their API, but I won't ever see a dime of it, guaranteed, or if I do it will be pennies on the dollar from Substack itself if they're feeling particularly generous.

It's a simple matter of basic logistics. There is simply no way for any company anywhere to get permission from every single person individually and to keep track of that consent. You'd need a massive software tracking system that simply does not exist. When I was working in sales at Red Hat, I once covered Time Warner, and they dedicated massive resources to tracking rights just for their own IP, and just in the US, with a huge database that could figure out if someone was owed a nickel of royalties because they were the former spouse of some band's roadie. It's vast, complex and error-prone. Extending it to the whole world is probably impossible. Nobody would build that kind of software unless they personally profited massively from it, and nobody will build it out of the goodness of their heart to support a massive collection of us artists.

So who will get paid?

The giant content companies that you already signed your rights away to years ago when you weren't reading their T&Cs.

It's a gold rush right now as content companies everywhere, who've been paid handsomely by ad revenue for decades, are now licking their lips at the possibility of soaking up some new revenue from AI companies.

(Source: Wikimedia Commons)

Reddit is demanding that AI trainers pay for access to its API, and StackOverflow says it will too. Others will follow. Elon Musk threatened to sue Microsoft for "illegally" training on Twitter data. Let's be clear. There was no illegal anything. Elon is just making up a new law in the court of public opinion because he smells a new way to monetize his multi-billion dollar purchase of Twitter.

Scraping that data was absolutely fair use. Case closed.

They can change their terms and conditions but there was nothing illegal about it. They can change the rules for how people can use their API too but someone can still scrape their publicly facing website. The law can change but until it does, it's an open and shut case. Web scraping is legal and continues to be legal as many court cases have affirmed.

Artists may hope to change that to protect themselves from AI learning their style but that's not what copyright was created to solve.

Remember that copyright doesn't protect inspiration. It protects exact rip-offs of a particular work. Just because an AI learns from a particular copyrighted work doesn't mean it's going to just copy and paste from that work. It learns features like color and shading and it incorporates that into the primordial ooze of its weights.

It's a good thing that we can't copyright a style.

Imagine if I could copyright "anime style" or "fantasy style" and sue everyone who painted anything in those broad categories. That's a nightmare that creates endless legal problems, and it's why the courts have ruled against copyrighting style again and again for decades. It's awesome that we can't do that.

Copyright law has never really been about paying artists for their work. Maybe it started that way, but along the way it's been co-opted and warped to do one thing: protect distributors of art, not artists. Think big music companies. Big content companies. Film companies. That is who gets paid. Period.

There is a reason that Mickey Mouse (R) is still copyrighted when his copyright should have expired decades ago.

Now if big content folks have their way, they will do the same thing to AI.

Will I get paid for my tweets? Nope. Twitter will.

Will you get paid for all your helpful answers on StackOverflow? Nah. StackOverflow will though.

Remember when you clicked that big long legal notice you didn't read when you signed up? Yeah that one that says they own all your data and you own shit. That is who is going to get paid.

The Economics of Art and AI

Let’s start by laying our economic cards on the table.

I love AI and machine learning. In particular, I love generative AI and I want to see it proliferate.

On the flip side, many artists worry they're going to get driven out of business by machines that can crank out picture-perfect portraits or stellar concept art at the push of a button. Many of them see it as a zero-sum game, where they lose their job and AI destroys art and everything they believe in.

People get short-sighted because they tend to see everything as a zero-sum game. Someone gains, so someone else must lose. But that's not usually the way it works out.

No web designer lost a job because I designed my website in the drag-and-drop WordPress editor Divi. I wouldn't have been able to hire one. It's as simple as that. Nobody was competing for my business because I just couldn't hire one in the first place. But with drag-and-drop editors I can still make a website.

Of course, later on down the line I did manage to steer some work to designers because of Divi. I used Divi for years on websites, and when I started the AI Infrastructure Alliance, a foundation with strong funding, I still built the site in Divi but hired designers to do the more complex pages that needed a deft human touch. Technology extends capabilities to people who wouldn't otherwise have them.

Or take something like Lensa AI, the AI avatar program that saw millions of people creating fun pictures of themselves in a range of art styles. Couldn't Lensa AI have just hired artists to paint those avatars?

No, unless they managed to hire most of the 2.4 million working artists in the United States to paint boring portraits. They were serving millions of customers a week.

So even if they hired half the artists in the US it probably wouldn't work and certainly not for $4 a month. Maybe if it cost $10,000 a month though. If you think that's impossible, that's because it is impossible. Nobody would cough up $10,000 and send their pictures and wait around for a week while the avatars went to a fleet of artists working in sweatshop conditions to crank out those portraits.

Nobody lost their job because of Lensa. An app like Lensa simply could not exist without AI. Lensa exists because AI exists. There's no way you could possibly scale it with people. It's a brand new set of jobs that don't compete with existing ones because you can't make the app any other way.

In the 1940s the biggest musicians' union went on strike to protest recorded music. They warned that recorded music was putting them all out of business.

And they were right. At least at the time.

Back then, radio stations had the audacity to stop hiring live musicians and just played records instead. The union did everything in its power to stop radio stations from using that dreaded new technology, and starting in 1942 it banned its members from making recordings, which brought much of the recording industry to a screeching halt.

In case you haven't figured it out yet, records eventually won, and radio stations play pre-recorded music except on very special occasions. All those musicians on the radio did lose their jobs after years of slow phase-outs. But the impact of recorded music can't be understood simply by looking at the number of working musicians who lost their jobs playing on radio stations.

Because of the technology of records, music spread far and wide. There are more jobs than ever in music.

It's taken music from something you only heard once in a while to something that's heard around the clock and in a deeply personal way. Recorded music went on to be the biggest benefit to musicians over time. And 70 years later, ironically, streaming has changed the way musicians make money again, and that's led to the resurgence of live performance as one of the best ways for musicians to make money.

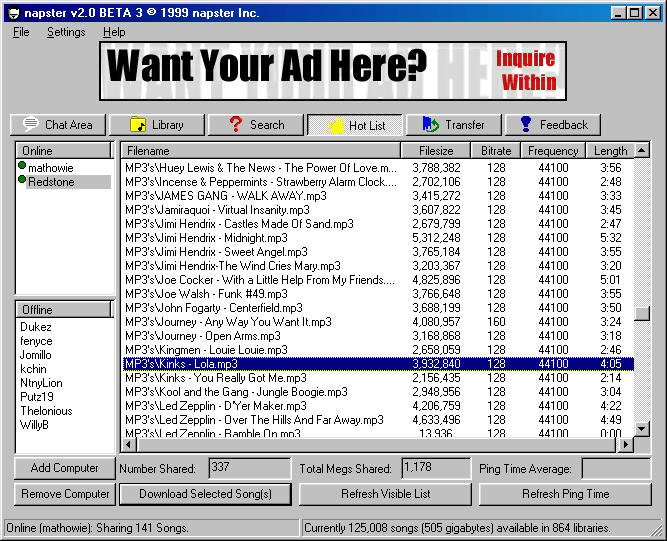

Not a decade has gone by without some new technology about to "destroy" the music industry "forever". Records. Synthesizers. Computers and sampling. CD rippers. Napster.

But music lives on.

And yet, out of fear, the music industry has created one of the most cumbersome and ugly copyright regimes on the planet. The music industry reacted to these "end of all music" threats by continually agitating for new laws and copyright expansion. They launched lawsuits and propaganda campaigns, and now the entire industry is a nightmare for artists and content creators alike. It should not be the template for other industries, let alone the whole world.

The music industry is an exact template for how not to do copyright.

To understand why, you just need to know a bit about the song Blurred Lines.

Blurred Lines

A decade ago Blurred Lines became a double lightning rod.

The first lightning strike ignited heated debates online about sexual abuse. Critics pointed to lyrics like "I hate these blurred lines, I know you want it (Hey)" and "The way you grab me, Must wanna get nasty (Ah, hey, hey)," and saw lines like "Do it like it hurt, like it hurt" as code for sexual assault. Student unions barred the song, and it was effectively de-platformed long before de-platforming became a standard tactic in online culture wars.

But it was the second lightning strike that really did the damage to copyright owners everywhere. The family of soul singer Marvin Gaye successfully argued in 2015 that the writers of Blurred Lines were influenced by the feel and style of Marvin Gaye's 1977 hit "Got to Give It Up."

Copyright is not supposed to protect influence.

The legal artist blog points out some key ideas behind current copyright law:

"Copyright law protects finished works of art. It does not protect things like facts, ideas, procedures, or an artist’s style, no matter how distinct."

"In Steinberg v. Columbia Pictures, the court stated that style is merely one ingredient of expression and for there to be infringement, there has to be substantial similarity between the original work and the new, purportedly infringing, work. In Dave Grossman Designs v. Bortin, the court said that:

'The law of copyright is clear that only specific expressions of an idea may be copyrighted, that other parties may copy that idea, but that other parties may not copy that specific expression of the idea or portions thereof. For example, Picasso may be entitled to a copyright on his portrait of three women painted in his Cubist motif. Any artist, however, may paint a picture of any subject in the Cubist motif, including a portrait of three women, and not violate Picasso's copyright so long as the second artist does not substantially copy Picasso's specific expression of his idea.'"

But that is exactly what the Blurred Lines case did. It reversed a century of sound, sane precedent and replaced it with a terrible ruling that opened up the floodgates. Now musical artists are suing each other like patent trolls in a surge of lawsuits.

A Christian rapper managed to get $2.78 million after jurors determined that Katy Perry stole bits of the rapper's 2009 song "Joyful Noise" in her hit song "Dark Horse." A federal judge reversed the verdict the next year, but it was still a nasty, drawn-out burden that nobody should wish on their worst enemy.

When Grammy-winning singer Ed Sheeran won a copyright case over his song "Shape of You," he wasn't celebrating.

Said Sheeran, "Whilst we're obviously happy with the result, I feel like claims like this are way too common now and have become a culture where a claim is made with the idea that a settlement will be cheaper than taking it to court, even if there is no basis for the claim. It's really damaging to the songwriting industry."

The suits go on and on. Donald Glover, aka Childish Gambino, is getting sued over "This Is America."

Dua Lipa is getting jacked by two drive-by lawsuits over her song "Levitating."

Oh yeah and Sheeran is getting sued, again. Also by Marvin Gaye's litigious heirs. Apparently Marvin Gaye inspired half the pop songs in the world at this point.

(Source: Midjourney 5)

That's what changing the definition of copyright from "you straight up ripped off and exactly copied my work" to "you were influenced by me" means.

A horrible nightmare.

Maybe the folks pushing for this think it's only going to hit AI companies so they don't care but that's not the way life works. You can bet on this and take it to the bank. If they manage to get a legal precedent set or get a law passed it absolutely will include all kinds of provisions to expand copyright for non-AI. Guaranteed. Count on it.

As soon as the floodgates open every scammer and fraud who never made it but can find a big artist fish to fry will come out of the woodwork with their hand out looking for the payout from the courts that they never got in life. We know this because we have an exact template for it. The music industry.

Now folks want to do the same for writing and art? That's a terrible idea.

It's easy to be angry at machines and big companies, but it's a terrible idea to try to expand copyright because it will have a horrible knock-on effect. People and machines learn in the same way, from the world around us. We learn from life. We learn from other artists.

As soon as we start hammering people for their influences it creates a horror show of copyright that nobody wants.

If we're going to discriminate against machines then let's go all out and extend the same standards to artists.

First, every artist should have to get permission from the rights holder to create fan art. If you want to paint Bowser or Link you should call up Nintendo and get their blessing. Want to draw Mickey Mouse for fun? Better call Disney. Let's take down all the Star Wars fan art thieves on Etsy who are stealing from Disney.

(Source: Midjourney 5)

We can extend that to public places too. It's a photographer's right to take pictures of the world around them. They can take pictures of famous buildings from public areas and the building owner can't stop them from publishing those pictures or selling them. Let's change all that. If any photographer wants a picture of the Empire State Building, they need to call up the owners and get the rights to take that photo.

Let's extend copyright to style too. If you draw anime or make an anime film, you should have to go back and pay royalties to all the people who inspired you. You should have to get the permission of Katsuhiro Otomo, or Isao Takahata's estate, or Masamune Shirow, who might just get back to you if he's not too busy painting hentai tentacle porn these days. Or maybe you have to go all the way back to 1917, to the film Namakura Gatana, created by Jun'ichi Kōuchi. It shouldn't be too hard to track down his estate and get him paid. Honestly, who really knows who created anime? So let's pay everyone and let them all sue everyone!

Why stop there? We can extend this to sports too. Tom Brady studied Joe Montana's throwing motion when he was growing up and dreaming of taking on the NFL world. He went on to be one of the greatest quarterbacks of all time and Joe got nothing from that. Joe got ripped off! It's his right to cash in on Tom Brady's success since he played such a massive part in it. Let's make sure that future kids learning the game have to pay to study the footage of famous quarterbacks so that quarterbacks of the past aren't cheated out of their rightful income.

If you used any reference photos in your art, you should have to declare it and pay royalties. If you borrowed any hints of someone else's style, pay up, sucker. I should have to declare the various detective shows I loved and the mystery novels I read when writing my first novel. Everyone should declare their entire lineage and kick payments on down the line endlessly.

If all that sounds absurd, that's because it is absurd, and that's exactly what people are asking AI companies to do now.

Nobody learns in a vacuum.

Not you. Not me. Not AI.

The people making these demands aren't doing it because they think it's a good idea. They're doing it because they found a watchword to rally around, they're afraid of losing their jobs, and/or they hope to kill all AI competition by choking off a key ingredient today's AIs need to learn.

There’s just one problem. It won’t work. And it will leave a hideous copyright regime that nobody wants in its place.

Cash Grabs, Content Platforms and the Little Guy Gets Screwed Again

AI is inevitable.

AI will touch every single aspect of our lives.

We'll weave AI into everything: how we create art, how we write code and communicate, how we send goods around the globe, and how we run cities. Every nation on Earth will race to become the center of AI because it will reorder the nature of power on the planet. It will unleash brand-new ways to discover drugs, help us find cures for cancer, make driving much, much safer, and more.

But not if we kill it with short-sightedness and bizarrely overblown fears driven by doomsday cultists and media stories about the end of all jobs. Already we're seeing the repercussions of those scary narratives. People are trying to attack the training data of AI companies to make it harder and harder to create AI platforms. But it won't have the effect they hope for in the long run, and it will create a nasty expansion of copyright that everyone in their right mind will hate.

The cash grab by companies like Reddit, Stack Overflow, and Twitter, looking to wallop AI companies for training data, is understandable, but it's basically going to jack up the cost of training models and concentrate power in the hands of a smaller group of bigger companies.

The "I didn't give you the right to train on my data" movement is going to have the exact opposite effect people are thinking it will have. It's going to make bigger companies the arbiters of intelligence and content, not democratize it.

Anyone thinking that artists, writers, coders, and content creators are going to get compensated individually is out of their mind.

Never going to happen.

Again, first of all, it's impossible to build such a distributed compensation system, and second of all, people already signed their rights away to content platforms decades ago without reading the T&Cs. So who will get all the money from these lawsuits and cash grabs? The same people who always do: the people pushing for an expansion of copyright, the content platforms and media companies. Not you and me.

And please don't talk to me about some crypto, blockchain token system for ownership.

I love crypto, but that's not happening. Nobody is going to build that for the whole world, and people are not going to adopt it just because they want to get a few bucks for their training data. The system doesn't exist, and it's not going to magically pop into existence and get adopted by everyone on the planet simultaneously. A system like that may exist in the future, but it doesn't exist now, and there is zero incentive to build it.

Charging for web data is another absurd trend we should resist. Humans learn from the world around them. We don't call up Tom Brady to get permission to learn to throw a football, and I don't have to call up the Hemingway estate because he was an early influence on my writing. We are walking into a copyright nightmare, and most folks are cheering it on because they don't see it coming.

When the whole world is the music industry and Blurred Lines, we're all screwed. When anyone can be sued over utter nonsense and the hint that something is kinda/sorta like my thing, it's a waste of time, money, and people's lives. When fan artists are calling up Nintendo to beg for the right to paint Bowser, then they'll understand.

At the heart of all this is really economics. Many artists are hoping to cripple or slow AI down in order to forestall an imaginary future where all artists are out of business and the machines do all the jobs. This is a fantasy story based on nothing but speculation, and poorly thought-out speculation at that.

The future is centaurs. People working with machines.

(Source: Midjourney 5)

We've already destroyed all the jobs multiple times in history. You don't churn your own butter or hunt the water buffalo to make your own clothes. You don't use whale oil lamps. You flip a switch to turn on the lights. Yes, the whale oil industry slowly faded, but that's all right.

Is anyone today clamoring for a return to whaling so we can kill whales and dig the white gunk out of their heads to light our houses?

We've always adapted to new technology. Always.

We'll adapt this time too.

But not if we try to kill the technology in its crib and, as a horrible side effect, make the whole world a copyright nightmare for a generation.

You're putting your training data to good use, Dan. Keep it coming.