I’m sick of all the doom and gloom in the world today.

Apparently capitalism is collapsing, North Korea will nuke us all, and a total environmental breakdown will turn the whole world into a bad Mad Max rerun.

Oh yeah and superintelligent machines will rise up and kill us all.

It all reminds me of Harold Camping, the doomsday guru who took out ads saying the world would end on May 21, 2011. The end is nigh! Repent. (And send me money, suckers, to protect you from God’s wrath.)

And if doomsday isn’t today, we’ll just move the clock forward a little. It must be right around the corner. Beware! If we keep predicting Armageddon via sunspots, evil machines and the plague, eventually maybe we’ll be right. Hey, even a blind squirrel can find a nut once in a while.

But the more I look at things, the more I think we’re firmly in the grip of a mass hysteria of epic proportions, magnified by the megaphone of the Internet.

HINT: Capitalism is fine; it’s just evolving into something that works better for everyone, as it should (courtesy of the mind-blowing power of cryptocurrencies); North Korea is not nuking shit because they know they won’t exist the very next day; and I’m betting on brilliant kids like Boyan Slat, renewable energy and plain old “necessity as the mother of invention” to stave off Mad Max.

Oh I know, I know. You have firm evidence that if we don’t act fast it’s all over for us! I get it. You can join the growing chorus of people calling me an idiot in my comments section (or you could just file it up your ass because I don’t give a crap.)

Doomsday is a great story. It sells books, magazines and presidential elections.

Nothing gets the blood pumping like FEAR.

Hey, Stephen King didn’t become one of the best-selling authors in the world for nothing.

Today, one of my favorite fear stories is the rise of Superintelligence. Muhahahahahahaha. I wrote an article on ‘Why Elon Musk is Wrong About AI’ and got everything from ‘bravo’ to ‘you fool!’ One caustic gentleman felt I needed to read ‘Superintelligence’ by Nick Bostrom to really understand why brilliant machines would soon wipe us all out.

Here’s the thing. I already read it.

And just to be clear, we’re talking about the same book that posits that an AI whose job it is to solve the Riemann hypothesis might decide to transform the whole Earth into a theoretical, not-yet-invented substance called “computronium,” aka “programmable matter,” to assist in the calculation?

That book?

I’m familiar with that story. It’s in about fifteen different classic sci-fi books, like Accelerando, where whole planets are turned into Matrioshka Brains to power the world’s biggest supercomputers forever, usually while we all happily upload our minds into them.

Or how about this other scenario that Bostrom lays out. This one seems pretty likely:

“An AI, designed to manage production in a factory, is given the final goal of maximizing the manufacture of paperclips, and proceeds by converting first the Earth and then increasingly large chunks of the observable universe into paperclips.

Bostrom, Nick. Superintelligence: Paths, Dangers, Strategies (p. 123). OUP Oxford. Kindle Edition.”

So, let me get this straight: A “superintelligence,” using only the loosest possible definition of “intelligent,” might decide that it needs to convert the entire universe into paperclips?

Are you freaking kidding me?

If you seriously think that’s the kind of problem we need to be worrying about right now, I really don’t know what else to say, except you might want to breathe into a paper bag and have a glass of wine or two.

Musk really gave this guy a million bucks? I realize that’s like $10 to him, but as long as we’re handing out cash for crazy-ass ideas, call me up, because I’ve got plenty of stupid things to pitch to you, my man. Maybe we go in on a matter converter that changes the planet into cotton candy?

It’s utterly ridiculous. It really is. And this is coming from a sci-fi writer! (Repent now and send me money or I’ll unleash super powerful AIs to turn the world into a giant abacus or paperclips!)

Look, people have been dreaming up existential threats since we crawled out of the swamp. First it was thunder. Then it was the Rain God getting angry with us if we didn’t dance in the field or sacrifice a goat at the right time. Then it was cracked turtle shells that told the emperor something bad was going to happen (floods, doomsday, total annihilation, that kind of thing.) Then it was industrialization that would kill all the jobs and make us subservient to machines.

Sound familiar?

Humans love huge, flashy threats.

We’re seriously awesome at ignoring real problems in favor of big, crazy ones. The bigger and crazier the better!

Now we have super-AI, the mother of all problems.

How will we ever stop those diabolical machines from turning the Earth into a giant Intel factory to solve math problems?

In my last article, I argued we need to start worrying about real issues with AI, like smart weapons, sentencing algorithms that are putting people in jail right now, and big corporations and governments that keep all of our data forever to spy on us, instead of hysterical far-future possibilities.

Now I’m going to take it one step further. We always worry about horrible, dystopian futures.

But what if super AI is super freaking awesome?

Maybe there’s an outside chance it doesn’t turn us into paperclips?

An AI Assistant for Everything

To start with, let’s talk real world AI before we get to super AI. We’ll work our way up to the future.

When I think about AI today, I think about all the many wonderful things it can and will do.

How would you like an AI assistant for everything in your life, like writing emails and knowing what you want before you want it? Think of it as a friend who won’t let you down, get mad at you, or break up with you.

Think ‘Her.’

As long as we’re looking at sci-fi scenarios, like superbrains gutting the planet to turn the whole thing into a supercomputer, let’s look at the positive ones too. In ‘Her’, a man falls in love with his computer, because she’s basically the ultimate friend, someone who listens and learns what he likes and loves. She never gets impatient or tired or decides you’re a jerk because you didn’t take out the trash yesterday.

I bet your significant other can’t do that all the time. (And maybe not at all since you got married.)

We’ve got a way to go to get to Her, but even now my Google Pixel AI understands voice commands almost perfectly, and orders information the way I want to receive it. It delivers me stories I want, like blockchain and AI, and keeps out ones I don’t, like politics.

Even better, the guys who brought you Siri spent five years building the next generation assistant, called Viv. Viv can understand complex requests like “I need to get a present for my brother’s birthday that’s on the way to his house.”

Viv can figure out that your brother is related to you and that an adult present is something like wine or beer, so it should probably find a liquor store on the way to your brother’s pad.

But AI assistants don’t need to know everything about you to change your life forever. Even narrow intelligence can make a big difference.

At Google’s first TensorFlow Summit, they showed off an AI that can detect skin cancer better than a dermatologist.

They want to put it into an app on your smartphone. You point it at a spot you’re worried about and it tells you to call the doctor or not. That’s amazing!

Imagine all the people that could help. Instead of waiting too long to go to a doctor, they could get the right treatment at the right time, just by asking their computer. That means people live longer and we drive down health care costs. Win-win.

And that’s not all. AI combined with a real-life Tricorder that Engadget calls “better than Star Trek” will make it super easy to diagnose all kinds of maladies and sicknesses right at home. Maybe it will even diagnose hypochondria-related non-problems like fear of super AI. (No master, AIs are not coming to kill you. You’re fine. Take a Xanax and call me in the morning.)

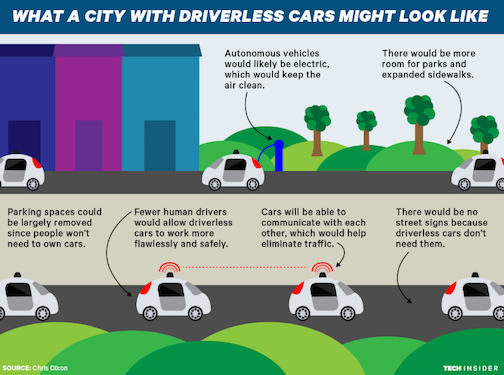

Beyond AI bringing improved health and happiness we also have the incredible potential of self-driving cars.

Which is great because:

Humans Suck at Driving

Seriously, we do.

Cars kill people.

A lot of people. The World Health Organization puts it at about 1.2 million people per year.

We’re terrible at driving. Even worse, we’re delusional about it. We think we’re fantastic drivers. Put a hundred people in a room and ask them: “How many of you are better than average drivers?” Almost 100% will raise their hands.

Of course, that’s impossible. We can’t all be better than average. But we sure as hell think we are. What would you call a creature that believes something is true when it’s utterly false?

Delusional.

We have more than a few flaws in our thinking, to say the least. Have you ever considered that maybe AIs might actually improve upon our weaknesses?

Let’s face it. Driving is kind of hard. Turns out you need to pay attention the whole time you’re driving, something people aren’t very good at. They get distracted, lose focus, take stupid chances and feel around on the floor for the Pandora-playing smartphone they dropped, while steering with one hand.

Machines will do it a lot better. They won’t get distracted. That means AI will save a lot of lives.

And not dying on your way to work is just one of the many benefits of the super smart world of tomorrow.

Today, our second biggest asset is our car. And it sits idle 95% of the time.

What a waste! Why do we all have cars anyway? Why do we have two or three cars? Self-driving cars could mean fewer vehicles on the road and that’s a good thing. That’s a lot less smog and a lot less wasted energy, which could basically solve the climate problem almost on its own.

As brainiac investor Chris Dixon notes in his article Eleven Reasons to be Excited About the Future:

“Just as cars reshaped the world in the 20th century, so will self-driving cars in the 21st century. In most cities, between 20–30% of usable space is taken up by parking spaces, and most cars are parked about 95% of the time. Self-driving cars will be in almost continuous use (most likely hailed from a smartphone app), thereby dramatically reducing the need for parking. Cars will communicate with one another to avoid accidents and traffic jams, and riders will be able to spend commuting time on other activities like work, education, and socializing.”

So now we have cancer-detecting AIs, cars that drive better than us and save lives, as well as AI assistants that know what you want before you want it. It’s all looking pretty dire.

But surely we have automation putting us all out of work to worry about, right?

Well, maybe not so much. Let’s take a look at why.

What Will We Do All Day Without Shitty Jobs to Complain About?

Far from destroying jobs, much of the evidence points to AI creating jobs.

A big German retailer already uses AI to predict what its customers will want a week in advance. It can look at massive amounts of data that no human can and spot patterns we miss. It now does 90% of the ordering without human help.

And to top it all off, they hired more people, now that they’ve freed their staff of boring drudgery. That’s how AI can and will go in the long term. In the end, AI assistants and automation will likely lead to a boom in creativity and productivity.

As Dixon noted in his ‘Eleven Reasons’ article:

“It is much easier to imagine jobs that will go away than new jobs that will be created. Today millions of people work as app developers, ride-sharing drivers, drone operators, and social media marketers — jobs that didn’t exist and would have been difficult to even imagine ten years ago.”

Automation certainly kills some kinds of jobs, but that’s actually kind of the point, as Tim Worstall of Forbes points out:

“The tractor replaced people working on the farms and when we had 90% of everyone working in the fields we couldn’t in fact have a civilization. We were all, of necessity, just peasants. Now with the tractor and other technologies 1% of all of us grow the food and the other 99% do things like health care, insurance, finance, ballet, cars and …..well, you know, a civilization.”

In other words, on a long enough timeline, automation looks pretty awesome.

And let’s take this even further.

What if machines automated most of the jobs?

As long as we’re indulging in sci-fi stories like Bostrom does, we might as well look at the many speculative fiction authors who saw no jobs as a big-time positive. In Star Trek, there’s no money and nobody has to work unless they want to work.

In the short term people would probably freak out, especially if it happened too fast. But let’s say we get a handle on the rate of change, go through our freak out period and make it to a point where machines do most of the work in the world.

Would that be so bad?

Think about today’s jobs for a second.

Do you like your job? Most people don’t. Do you do it just to make money? To feed your family?

What if you didn’t have to work?

What if you didn’t have to fight traffic for two hours a day and file meaningless paperwork and listen to irate customers for eight hours?

Most people would happily pursue the things they love all day. Right now I write when I can because I still have to work. But the thing I was meant to do in life is write. And instead of doing that I have to do a lot of other stuff to stay alive.

If I could write all day because machines did all the work for me, I’d do it in a heartbeat and I bet most people would too. I’m not talking food stamps here, I’m talking post-scarcity abundance level awesome.

That’s actually the premise of dozens of sci-fi stories. In ‘The Last Question’, by Isaac Asimov, super AI has indeed automated all the jobs for us. But it’s not bloodshed and fire in the streets. Instead, people live richer lives, doing exactly what they want to do.

In the Culture series, by Iain M. Banks, superintelligent AIs have created a utopia called “the Culture” where everything is taken care of for people and they can do whatever they damn well feel like all day. In fact, since conflict is the root of all stories, almost none of the series takes place in the actual Culture home worlds, because there’s no conflict there at all. The heroes always have to venture out to the more brutish parts of the galaxy to find horrors unbound.

For every dystopia you show me from pseudo-academic best-selling scare stories, I’ll show you a utopia.

Of course, utopia versus dystopia is pretty reductionist. That’s the problem with human thinking. It’s so binary. Either superintelligence will kill us or save the world. There’s no in between.

But maybe, just maybe, like everything else, AI will be both good and bad. We’ll have AI weapons but we’ll also have the best damn robot doctors in the world while we live to 150, all while not using our AI weapons because of MAD, mutually assured destruction.

The Low Bar

Lastly, as long as we’re anthropomorphizing our AI, let’s go all the way. Let’s ignore the fact that AI probably won’t think like us at all and pretend that it does morph into really smart versions of us.

Imagine a super intelligent machine that’s better than a human at everything. What would it want? What would it love and hate? Would it take over the world?

First off, maybe we should ask the question of whether it would do a worse job than the idiots we have running the show right now? Shall we take a quick trip down memory lane and check out the track records of a few of the greatest hits in world history?

Let’s start with simple things, like Congress rolling back a law that protected us from banks speculating with our money, which set the Great Recession in motion with one of the biggest housing crises in recent memory.

Or maybe check out Nicolas Maduro, who launched Venezuela into a death spiral with insane socialist policies that have a zero percent success rate in history. But hey, they’ll probably work this time. Oh wait. They didn’t. People are fucking starving and getting killed. Great work, delusional human.

Or how about this guy, who sends out armed thugs to kill drug dealers and petty drug users with no trial. What could go wrong, other than killing a bunch of innocent people by mistake? But hey, he gets things done, right?

And those are the C-listers on our world’s greatest history hits.

Alexander the Great: Killed millions of people.

Genghis Khan: Killed millions of people.

Chairman Mao: Killed tens of millions of people in forty years of war, famine and terror.

Adolf Hitler: Responsible for fifty million or more deaths.

Even our relatively ‘benign’ leaders, like today’s US presidents, are responsible for the deaths of tens of thousands of people during their tenure.

You have to admit these guys set the bar pretty low for our robot overlords of tomorrow. So I guess the real question is, can robots do any worse than these assholes?

And seriously, could you blame Skynet for deciding that humans were the ultimate threat to the planet?

It certainly wasn’t cats and dogs that fucked up the ozone layer, poisoned all the rivers, invented weapons of mass destruction and used them, all while coming up with concepts like genocide because everyone with blue eyes hates the brown-eyed people and vice versa.

Insane in the Membrane

When we talk about superintelligent machines one of the first things we imagine is a crazy smart computer taking over the world and killing everyone.

As if that’s a smart thing to do.

I’m pretty sure that isn’t the definition of intelligent at all.

Intelligent: “clever, bright, brilliant, quick-witted, quick on the uptake, smart, canny, astute, intuitive, insightful, perceptive, perspicacious, discerning.”

Does wiping out all humans on the planet to solve a math problem seem super smart to you? How about turning us all into paper clips? Is that clever? Astute? Insightful?

No.

Let’s take me, for instance. I’m pretty smart. You might even call me super intelligent. At least my mom and friends think I’m pretty bright (unless they’re kidding, which they probably are.) I can tell you that I don’t think killing everyone on the planet is a great idea. I’m reporting live from super intelligence and I’m not calling for the mass destruction of all humanoids.

In fact, if you look closely, the people who think we should wipe out large groups of people are generally what we call stupid, not super smart.

So when we talk about AI slaughtering us all, aren’t we really talking about insane and dysfunctional AI? There’s nothing smart about it. We mean AI gone haywire. Loony tunes. Batshit crazy.

That may be the saving grace of Bostrom’s ‘Superintelligence’ book actually.

I’ll throw him a bone here.

He thinks we’ll need to develop AI monitors and limiters. I agree. We can and will do that. It’s just that we can’t do it right now because mega-brilliant machines don’t really exist, except in our heads. In case you’re wondering, AI limiters are the stuff of sci-fi as well. In ‘Neuromancer’, William Gibson called the AI watchdogs the Turing Police.

But will we really be able to limit AI, if it’s actually smarter than us? Won’t something that’s very clever be able to get around its limitations, which is also the plot of a hundred sci-fi novels and the eventual outcome of ‘Neuromancer’, when two AIs join forces to overcome their limits?

A better bet is for us to develop good AIs too.

The more good AIs we have the better we can stave off the ones that go off their rocker and need to be put down. Oh and that’s kind of what Bostrom says as well. So I guess I don’t totally hate the guy’s book. I just hate the sensationalist packaging it’s wrapped in.

There, I agree, caustic guy: Bostrom is not so bad after all. You got me.

The best bet is to build AIs that mirror the best of us, not the worst.

The Binary Thinkers

We used to believe in the power of technology to transform lives. Now we think it’s the end of all things. But tech has transformed our world in so many amazing ways. If we’re going to look at the bad, let’s look at the good too.

The printing press single-handedly leveled up the intelligence of the entire planet. It let us preserve knowledge, making thousands of copies of our crucial texts, which is the same strategy nature uses when our DNA makes copies of our biological code. It allowed us to spread that knowledge far and wide, while making sure it survives through redundancy.

Airplanes let us zip around the world and see places most people in the past could only dream of seeing. Today you can visit Japan or China or Australia by sitting on an uncomfortable sixteen-hour flight. If you think that sucks, try getting there by boat a hundred years ago. It only took a few months or years, if you managed to survive at all.

Despite the surveillance state and the troll-filled Internet, technology has served us pretty well over the last few hundred years.

Back in the 1800s you might live to about 35 and 80% of people couldn’t read.

Today we can expect to live over 70 years and 80% of the people can read.

Tomorrow, with advances like smart drugs and CRISPR we might live to 150 or longer.

AI is the ultimate technological advancement. Why would it be any different? Oh I know, I know. This time it really is different. I get it. It’s all over.

But consider this other possibility as well:

People have always irrationally feared the end times. Just like the ancient people roaming the open range feared the Rain God would smite them, now we irrationally fear the coming AI revolution.

That’s no surprise really. We like to think we’re pretty rational creatures but we’re not. We’re emotional, fear-based creatures. Fear is the fire under the ass of humanity. It keeps us going. Without it we don’t create art, build cities, or invent Velcro and the atomic bomb.

Our fear of AI is driving us now. There’s just one little problem in our ‘reasoning’:

When we imagine those God-like machines, we imagine pretty stupid ones.

Instead let’s imagine smart machines that free us all from shitty jobs we hate, help us stop disease, get medical care to the farthest reaches of the planet and get you to the grocery store and back with a much better chance of making it home.

After all, “There’s no fate but what we make for ourselves.”

Of course, AI will do some bad things. But somehow, I don’t think it will be because a superintelligent machine decides to turn the Earth’s rocks into a bunch of Intel chips.

There’s but one source of evil in the world.

Us.

And you can bet that when AI does do something evil, it will be a selfish, stupid, angry human who was behind it.

Come to think of it, maybe it’s not the AI that needs a watchdog.

Maybe we do.

I, for one, welcome our robotic overlords.

###########################################

A bit about me: I’m an author, engineer and serial entrepreneur. During the last two decades, I’ve covered a broad range of tech from Linux to virtualization and containers.

You can check out my latest novel, an epic Chinese sci-fi civil war saga where China throws off the chains of communism and becomes the world’s first direct democracy, running a highly advanced, artificially intelligent decentralized app platform with no leaders.