The Wide and Wondrous World of Centaurs and Agents

Intelligent Glue, Secret Cyborgs and Why Many Folks Are Having Trouble Seeing a Brand New Kind of Software that Transcends the Old Definitions

Centaurs and Agents are a very different kind of software.

Most folks still haven't grasped just how different. They're still trying to pound Agents into the square hole of old definitions.

It doesn't help that the terms are a bit squishy right now. That's normal when new technologies are developing at light speed. Nobody is really sure what to call things or how it all hangs together. Slowly, over time, we coalesce on standard terms but right now the AI industry is evolving so rapidly that it’s all in flux.

So how do I define them?

Centaurs are intelligent software with a human in the loop.

Agents are fully autonomous or almost fully autonomous pieces of software that can plan and make complex decisions without human intervention.

Actually, I think it's fair to call both of them Agents. We can think of Centaurs as "Agents on rails", a precursor to full blown Agents. They're collaborations between man and machine. They can accomplish advanced tasks as long as they're well defined with clear guardrails and as long as someone is checking their work.

Not everyone defines them this way. The fantastic recent report from Andreessen Horowitz on emerging LLM stacks sees Agents as purely autonomous.

But over time I think more and more people will come to think of Agents the way I'm laying them out here. Centaurs are semi-autonomous agents and Agents are fully autonomous.

The truth is we've had Agents for a long time in AI/ML, mostly in the field of robotics. The traditional definition is any autonomous software that tries to achieve its goals, whether in the digital world or physical world or both. It's got sensors that "see" and "hear" and "sense" its environment. It's got "actuators," a fancy word for the tools it uses to interact with the world, whether that's an LLM using an API the way we use our hands and fingers, or whether it's a robotic gripper picking up trash.

But ChatGPT smashed the old definitions.

That's because most Agents of the past were pretty stupid. They couldn't really think or plan or reason all that well. Think of Boston Dynamics' famous Spot robot dog that can walk around and film things. Mostly you control it remotely by hand. It's great at running around but it's not really all that smart. It can't talk, take complex actions, adapt to new environments or understand multifaceted commands from you (unless you mash GPT into it).

With GPT we suddenly have software Agents that feel distinctly more intelligent. They can reason and plan and do complicated tasks that were previously the stuff of sci-fi robot buddies like R2-D2. Don't get me wrong, GPT is not even close to perfect, but there's no doubt it's a massive leap over what we had before.

In the past, most Agents were limited to a small array of highly specific tasks. Think of an NPC character in a video game finding its way through a dark dungeon or reacting to you in a fight. It wasn't very good. It was brittle and broke down easily. Great human players easily outfox most traditional AI in video games.

If you've ever seen an NPC in a video game walking in a loop because it ran into a wall, that's a great metaphor for thinking about the limitations of old school Agents. Now we have GPT playing Minecraft at an incredible level, learning as it goes. It's not an AGI, but it's a highly adaptable and flexible simulation of intelligence that unlocks a massive array of new kinds of apps.

GPT and the surge of open source (LLaMA, Vicuna, Orca, Falcon) and proprietary (Claude, Command) LLMs that followed mean we now have the power to embed much stronger and more realistic intelligence into our software at every step. It won't be long before people wake up and realize they're dealing with a new animal altogether, one that offers brand new possibilities that aren't possible with today's monolithic enterprise apps and ubiquitous mobile apps.

What many folks are missing is that many of these apps will be somewhere in between enterprise and desktop and mobile. They'll cut across all of them. They'll work partly as mobile apps, partly as SaaS, partly on desktops, and in the cloud.

Think AI microservices and microapps. Think ambient intelligence. Think "intelligent glue" between different programs.

What do I mean by intelligent glue or micro intelligence?

Take the research Agent we just wrote for the AI Infrastructure Alliance (AIIA). We fed an Agent a list of 1000s of curated companies from Airtable, had it go out and read the websites, summarize them, and then rate them based on how good of a match they were for joining AIIA as a partner. It was about 95% accurate, probably more accurate than an intern just learning the business and trying to understand what everyone does without having the background technical knowledge to make the call right.
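Here's a rough sketch of what the skeleton of a research Agent like that could look like. It's not our actual code. The `fetch_text` and `ask_llm` callables, the 1 to 5 scale, and the prompt wording are all assumptions made up for illustration; you'd plug in your own web fetcher and whichever LLM client you use.

```python
import re
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Assessment:
    company: str
    summary: str
    score: int  # 1 (poor fit) to 5 (strong fit); 0 means "needs human review"


def assess_companies(
    companies: Iterable[tuple[str, str]],  # (name, website URL) pairs
    fetch_text: Callable[[str], str],      # hypothetical: URL -> page text
    ask_llm: Callable[[str], str],         # hypothetical: prompt -> model reply
) -> list[Assessment]:
    """Read each site, summarize it, rate the fit, and sort best-first."""
    results = []
    for name, url in companies:
        page = fetch_text(url)[:4000]  # truncate so the prompt stays small
        summary = ask_llm(f"Summarize what this company does:\n\n{page}")
        reply = ask_llm(
            "On a scale of 1 to 5, how good a fit is this company as a partner "
            "for an AI infrastructure alliance? Reply with a single digit.\n\n"
            f"{summary}"
        )
        # Models don't always follow instructions, so parse defensively.
        match = re.search(r"[1-5]", reply)
        score = int(match.group()) if match else 0
        results.append(Assessment(name, summary, score))
    return sorted(results, key=lambda a: a.score, reverse=True)
```

Anything that comes back with a score of 0 is a reply the parser couldn't make sense of, which is exactly the pile a human should look at first.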

Half a year ago, we couldn't write that application. Sure, we could hack something together with some awful heuristics, maybe by taking the first sentence of every paragraph we found on the page but there was no intelligence behind it. It wasn't smart. It's now trivial to write an almost fully autonomous Agent to do this and we did it in a few days.

Our little research robot is a small app with a big impact.

Small can be really big.

Expect to see a massive rise in these kinds of mini-applications.

The Secret Cyborgs or Why Individuals Get the Max from Centaurs Now

Weirdly enough, while the mass media is panicking about fantasies of massive job losses, today's Centaurs benefit individual workers way more than companies right now.

As Wharton business school professor Ethan Mollick writes, “they’re secret cyborgs,” because many people are using AI without telling their boss. They’re doing it because it makes their life easier and because companies face an uphill battle adopting these technologies.

But why are companies struggling to adopt these new systems?

Companies, especially big enterprises, face a massive amount of regulation and these systems don't behave in a deterministic way like traditional IT apps. All the regulations were written for deterministic software and that means big companies outside of tech are having a lot of problems adopting LLMs and generative AI and Agents.

Small businesses and individual workers stand to benefit the most right now because they can adapt and pivot much more easily.

Early evidence suggests AI will have a tremendous impact on individual productivity. Mollick notes that "controlled studies have suggested time savings of anywhere from 20% to 70% for many tasks, with higher quality output than if AI wasn’t used."

Billions of people now have access to LLMs and history shows that when that many people have access to something they find incredibly creative uses for it. They're using it to make their jobs easier, better and faster. Despite nonsense news stories like "AI is Going to Eat itself" that worry AI models will somehow implode because workers are using AI to do the boring work of AI (like labeling data or creating chats), it's actually not a problem at all. Not only is it not a problem, it's awesome. AI will form a recursive loop that makes everything quicker up and down the economic pipeline with synthetic data playing a big part in tomorrow's models.

The more people who find ways to augment themselves the better. That's the story of every advance in human history. We don't lift rocks with our bare hands. We went from levers, to pulleys, to mechanical cranes. We rarely do calculations by hand. We went from abacus, to calculator, to computer. That's automation and abstraction in a nutshell and AI will have the same kinds of impact on how we do what we do.

As Mollick writes, "the current state of AI primarily helps individuals become more productive, not so much helping organizations as a whole. That is because AI makes terrible software. It is inconsistent and prone to error, and generally doesn’t behave in the way that IT is supposed to behave...But, as a personal productivity tool, when operated by someone in their area of expertise it is pretty amazing."

I disagree with Mollick that it won't help organizations. It will. It's going to help smart small businesses a ton. It's probably already helping the most savvy businesses now. Every small business that's hiring should be looking to leverage AI and encourage their employees to use it as early and often as possible. In the company I'm raising money for right now we're going to make sure our coders are pair coding with LLMs as a requirement. Smart small businesses will benefit from Centaurs and Agents tremendously.

But I do agree that it's individual people who are getting the most out of LLMs, Agents and generative AI at the moment.

The key line from Mollick's post is this: "As a personal productivity tool, when operated by someone in their area of expertise it is pretty amazing."

Of course, he's talking about Centaurs here. The best Agents today are Centaurs because skilled people are the ones who can understand how to fix the output from the imperfect LLMs.

Here’s a great example of how expertise still matters big time and why Centaur style work makes all the difference: My partner at the AIIA speaks multiple languages but her native language isn't English. She has GPT help her with drafts of her blog after she lays out her thoughts. She’s a strong and clear thinker so GPT helps her write well in a different language, augmenting her abilities. From there it's easy for me as a veteran writer of twenty years to shift the tone, fix limp phrasing, and make it more direct and personal when I help her edit. Without that added skill, the result is good but not great. GPT levels up her writing and I level it up even further.

That’s man and machine working together. It's a fast and furious feedback loop that helps us get work done faster.

The idea of the Centaur came to us from Garry Kasparov. After losing to Deep Blue, he sponsored a tournament where you could enter as an AI, a person, or an AI/person hybrid team.

That evolved into "Freestyle chess" and in 2005 it wasn't a grandmaster with an AI who won the tournament.

It was two amateur players running three computers.

From the very beginning it's been clear that AI can level up even average folks to more advanced levels. Today, Centaurs can do a lot more than level up your chess. They can make you a much better writer and coder and more.

Take our AI Infrastructure Alliance newsletter app, another Centaur Agent we wrote to do 95% of the work of writing our AI News Now newsletter. It's got a few different components like a Telegram group where we dump our favorite stories and papers from the week, along with several prompts that help it pick the best stories and write drafts of the newsletter. I leverage my twenty years of experience as a writer to flesh out the writing and make it hit harder but GPT does the heavy lifting.

The newsletter app also leverages the old Google wisdom of "let people do what they do best and computers do what they do best". What computers can do has changed but humans are still the best at decomposing problems, abstract thinking, and giving meaning to information. Originally we thought we would let GPT pick out its favorite stories from the web but then we realized we're better at it. Since we were already reading lots of stories every week, it was easy to just put them somewhere for GPT to run through and pick its favorite from our curated list.
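To make that concrete, here's a minimal sketch of picking a favorite from a curated list using nothing but head-to-head comparisons. The prompt we use compares two stories at a time; how the winners get combined across the whole list is my assumption here (a simple "running champion" loop), and `prefer` is a hypothetical stand-in for the LLM call.

```python
from typing import Callable, Sequence


def pick_favorite(
    stories: Sequence[str],
    prefer: Callable[[str, str], str],  # hypothetical: returns the preferred story
) -> str:
    """Keep a running champion and let each remaining story challenge it."""
    if not stories:
        raise ValueError("need at least one story")
    champion = stories[0]
    for challenger in stories[1:]:
        winner = prefer(champion, challenger)
        # If the model returns something that is neither input, keep the champion.
        if winner in (champion, challenger):
            champion = winner
    return champion
```

This costs one comparison per story after the first, so a week's worth of curated links stays cheap. The humans do the curating and the model only has to make a string of small, well-defined choices.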

Doing more with less is incredibly powerful. Today, I can use Divi to make a website that makes it look like I'm a great designer when I'm only a mediocre one. That's how abstraction and augmentation makes work easier. We abstract away the lower tasks and move up the stack.

Every new layer of software that comes along is a higher level of abstraction. Once we had the LAMP stack we could make websites a lot easier but it was still out of reach for most people because it required a lot of manual technical work. Then came WordPress, which let regular people create websites and blogs en masse. Now WordPress runs a whopping 43% of all websites. It lets more people express themselves than ever before. Plugins made it even better. Then came Divi, which is the ultimate drag and drop editor and makes average designers like me look like rockstars. And guess what? Web designers are still around, just like artists, writers and everyone else will be around after AI.

Centaurs and full blown Agents are a new layer of abstraction on our work.

They will become our interfaces to the world.

They will let me do web design by simply describing what I want then adjusting it after the fact with a Divi like drag and drop editor. It will put web design into the hands of even more people and that's a good thing. It means more voices online adding to an ever more diverse chorus.

For now though, these apps still need us in the loop. Our newsletter app is a perfect example. LLMs are amazing but they're hard to control and a bit finicky and weird. They're an alien intelligence. Sometimes it's easy to get lulled to sleep by my newsletter writer because it's so good so often.

Right up until it's not.

It sometimes makes absurd mistakes even a child wouldn't make.

If I get lazy and don’t double-check the Agent's work then I miss the times it makes up a fact that just wasn’t in the story or when it drops in something irrelevant, like a summary of the arXiv mission statement when it's summarizing a paper's abstract. At least once a run the newsletter writer adds some variation of this to the summary of the paper itself:

"arXivLabs is a framework that allows collaborators to develop and share new arXiv features directly on our website. Both individuals and organizations that work with arXivLabs have embraced and accepted our values of openness, community, excellence, and user data privacy. arXiv is committed to these values and only works with partners that adhere to them."

No human would ever make that mistake. Even a dumb intern.
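A cheap guardrail for this particular failure is to flag any summary that contains known boilerplate before it reaches the editor. This is only a sketch: the marker phrases are lifted from the arXivLabs blurb above, and the function name and marker list are made up for illustration.

```python
# Lowercased fragments of boilerplate we've seen leak into summaries.
KNOWN_BOILERPLATE = (
    "arxivlabs is a framework",
    "only works with partners that adhere to them",
)


def flag_for_review(summary: str, markers=KNOWN_BOILERPLATE) -> list[str]:
    """Return the boilerplate markers found in a summary (empty list if clean)."""
    lowered = summary.lower()
    return [marker for marker in markers if marker in lowered]
```

It only catches mistakes we've already seen. It won't catch a made-up fact, which is why a human still has to read the draft.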

I still can't fully automate the newsletter and I'm not even sure I want to because I want the newsletter to reflect my values and the values of the AIIA and it’s me and my team who know those values best. AutoGPT was the fastest project ever to hit 100K stars on GitHub, but it doesn't really work all that well yet. It never stops and it goes off the rails badly and hallucinates as it tries to plan long term, complex tasks.

If you're out there building these apps, lean into the necessity for humans in the loop. Embrace it. Think of it as a collaborative dance between AI and people.

As Peter Thiel wrote in Zero to One, "The most valuable companies in the future won’t ask what problems can be solved with computers alone. Instead, they’ll ask: how can computers help humans solve hard problems?"

People thinking about building Agents should ask themselves: How can I help people solve problems? How can I keep humans in the loop and make their lives easier?

With my newsletter app, I'm like an editor at a newspaper, checking the stories of my writers. Usually those writers are good but sometimes they make something up, bang out a poor sentence, miss the true meaning of the story, or just get something wrong and I have to fix it. Sometimes I get a veteran writer and I don't have to change much and other times I get a junior writer just starting out. I don't know which GPT will show up today, the veteran or the amateur, so I have to stay on top of it like the best editors do.

Expect millions of little apps like this, man and machine working together in a delicate dance.

It's a bit like old school RPA (Robotic Process Automation) but actually smart, democratized and direct, as opposed to big, heavy enterprise apps that are mostly for HR and structured data. These new Agents and Centaurs can handle all kinds of unstructured data and complex work and they don't need an enterprise sales team. They'll get picked up by small businesses and individual workers acting as secret cyborgs to automate away the crap of their jobs.

Small Apps, Big Business and Why Everyone Buys a Finished House

There are a lot of companies out there building automation tools for this intelligent glue. Low code workflows and Python engines to build automations. There's a rush to create these kinds of tools and to fund them.

Here's the thing though: Most people won't use these tools.

It's going to be like the MLOps tools of the last generation. We saw a huge number of companies making MLOps software and then it imploded. Almost overnight the market changed direction.

Why?

Because we realized the basic premise of MLOps was wrong.

We assumed everyone would have 1,000 data scientists and train highly advanced models and do low level ML. Now we know that's never going to happen. A few companies will train models. The rest of us will fine tune them or just call out to pre-baked models via API. I'm not even sure most people will want to fine tune models although lots of folks think they do. It’s a lot of work. I’d rather get a finished model that does what I want now without fine tuning or that learns with just a few examples.

What people want is stuff that works. They want finished products. They want the end result.

That's the history of technology and I expect it to play out the same here. In MLOps world, we saw buyouts, flame outs and consolidation. The same will happen with the newer, higher level tools after a few years.

That's because there will only be a small number of builders versus buyers of apps.

Many companies are betting on the fact that everyone will want to string together automations via low code platforms and more. They won't. I have zero desire to write a bunch of personal automations for myself. I want them done for me. That's what most people want.

Don’t get me wrong, some of these tools will make good money but most people will just buy what builders make with them.

The times may have changed but one thing hasn't: the money is in the application layer.

It's just like housing. Most people don't want to build their house. They want to buy a finished house or pay someone to design it and build it for them.

What most people want with AI software is to browse and buy a newsletter writer and research Agent, set it up with a few wizard like steps and be off and running.

And these "little" apps will make big money. Expect clusters of these apps to be huge business. Companies will own dozens of them across their stack. These apps don't need to be multi-billion dollar applications to sell strongly and deliver value to their target audience.

Remember, software Agents are somewhere in between mobile store apps and enterprise apps. They transcend mobile, desktop, SaaS and cloud and often straddle all of them in the same application. They're different beasts altogether and they're also not likely to bloat up into giant enterprise apps with a big heavy lift to get them into a company. They'll flow into people's lives and into small and medium businesses smoothly and seamlessly, like water finding the cracks and filling them.

Our newsletter Centaur hops easily between mobile (via stories we curate into Telegram), the web (it reads the stories via Python browser libraries), the desktop (it spits out a Word doc with summaries of the stories that I can pick from), and the cloud (it can also push to Google Docs or write to Substack or LinkedIn).

Now that's a versatile little program.

Take a look at the top money making podcasts on Patreon. Many of them are pulling in 100K to 200K a month. Some have as few as 8000 subscribers but are raking in almost 50K a month. None of them have Joe Rogan level numbers but it doesn't matter in the least. They have a fantastic small business going there with highly engaged and dedicated listeners.

(Source: Graphtreon)

Imagine I have a micro-AI app that has 10,000 subscribers at $9.99 a month. That's roughly $100,000 a month, or about $1.2M a year. If I have 5 or 6 of those I have a hell of a business without a lot of people needed to manage it.
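If you want to play with the numbers yourself, the back-of-the-envelope math is easy to script. This is gross subscription revenue only; the function names are mine, and it ignores platform fees, churn and costs.

```python
def monthly_revenue(subscribers: int, price_cents: int) -> float:
    """Gross monthly revenue in dollars (prices kept in cents to avoid float drift)."""
    return subscribers * price_cents / 100


def yearly_revenue(subscribers: int, price_cents: int) -> float:
    """Gross yearly revenue in dollars, assuming a flat subscriber count."""
    return monthly_revenue(subscribers, price_cents) * 12


one_app_monthly = monthly_revenue(10_000, 999)   # 99,900 dollars
one_app_yearly = yearly_revenue(10_000, 999)     # 1,198,800 dollars
six_apps_yearly = 6 * one_app_yearly             # 7,192,800 dollars

print(f"one app:  ${one_app_monthly:,.0f}/month, ${one_app_yearly:,.0f}/year")
print(f"six apps: ${six_apps_yearly:,.0f}/year")
```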

The same will be true for many Agent based apps. There are millions of small tedious tasks that people will pay for that don't require full blown software.

Lots of investors out there are missing the importance of these little apps. They're missing them while they look for the next big enterprise play or billion dollar monolithic app. When you're used to hammers, you only see nails. But if they widened their view they'd see that small is going to be huge.

Take something like a resume reader/sorter. Every small business needs to hire people but sorting through resumes is a slow, boring and tedious task that never seems to end. You spend hours going through them before you can even figure out who's worth talking to first.

Now imagine you had an Agent that could grab all the resumes out of email, read them, summarize them and hurl them into Greenhouse. That's a huge win for a small team that frees up a lot of time. You don't need a whole new HR app for that, you just need your Agent to work like glue between all of these different apps you already use right now.

There are a ton of workflows like this. We have a massive proliferation of SaaS platforms in the world today. For every kind of app you need, there are dozens to choose from at any given moment. Agents don't care if the app is a little ugly or the interface isn't perfect or the API is gnarly and poorly documented.

Agents use APIs the way we use our arms and legs. They can rip through an ugly API as easily as a well designed one.

The power of Agents is their ability to act as a go-between. We have an overwhelming number of systems and sources of information out there and Agents can easily bridge the gaps between them.

Agent creators shouldn't be trying to reinvent the wheel and develop every Agent into a full blown enterprise or consumer application. Don't think Photoshop, think apps that go between Photoshop, Dropbox and the web with ease.

Take the UNIX approach to doing one thing well, rather than the old Microsoft approach of jamming 150 capabilities into a single bloated binary. Some ideas just don't warrant a massive application but they can still prove incredibly valuable to people everywhere.

And you can always stack them together later.

From Prototype to Finished App

But how will these hack-y little prototypes develop into sellable, usable applications the rest of us can use, rather than something we have to cobble together ourselves?

Mostly it will be a matter of code maturity, iterating, and a little old school software magic from the desktop days:

Wizards.

With Agents, some customization is usually needed. You can't just download it like TikTok and start using it after creating an account. These apps need to know something about you and your environment and what you want them to do.

Let's loop back to the AI Infrastructure Alliance newsletter app once more. If we wanted to commercialize it we'd have to change some things and add a Wizard to guide people through the initial setup. That's because the prompts are geared towards us, like the prompt that tells GPT to read two pieces of news and pick its favorite of the two:

"You are an engineer with a strong passion for the latest developments in AI. You constantly read the news, and you are fascinated by the pace of development of AI and the power of new AI-driven solutions.

You are going to read two pieces of news below and tell me, which one of them you find more interesting, exciting and important. Explain step by step why you think so."

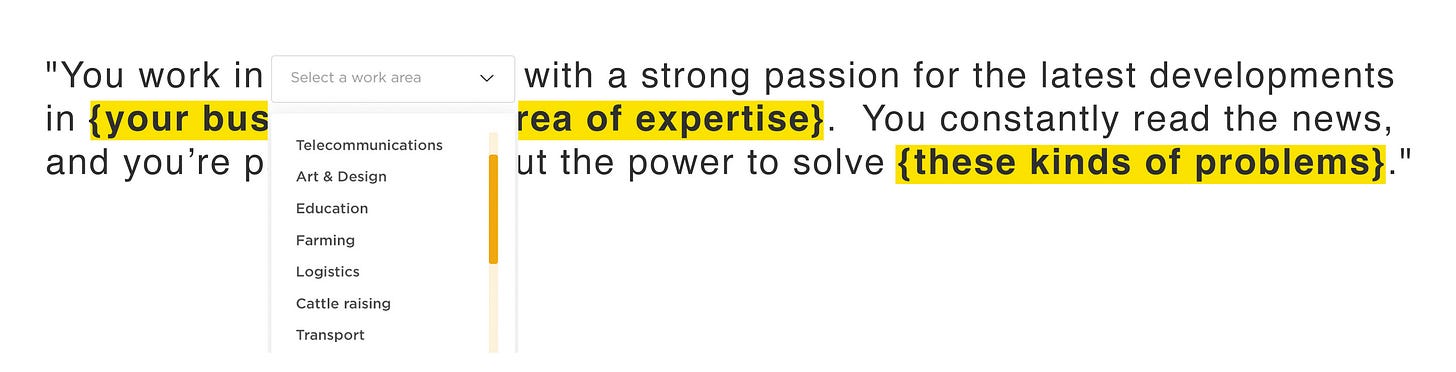

Obviously, that prompt only applies to AI news but it's simple to take that template, rewrite it a little, and make it fill in the blank or give it pre-baked drop down choices like this:

"You work as {a/an} {job title} and you have a strong passion for the latest developments in {your business/topic/area of expertise}. You constantly read the news, and you're fascinated by solving {these kinds of problems}."

The user could quickly fill this in the way we filled in Mad Libs as kids or choose via pulldown menu of supported professions and areas of expertise to reduce error rates. In case you never had Mad Libs, those are the little notebooks with missing words that every teacher thought was a great educational word game while every kid on the playground used it to fill in dirty words and laugh their heads off.

(Source: WooJr.com and Mad Libs archive at the Swanton Public Library)

It seems everything old is new again. The same approach can be used for updating working prompts in the most minimal way to customize an Agent for a new use case.

It might look something like this:
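Under the hood, the Mad Libs step could be as simple as the sketch below. It's an illustration, not our production code: the list of supported job titles, the function name and the a/an rule are all assumptions, and a real Wizard would sit a form or pulldown on top of it.

```python
from string import Template

# The fill-in-the-blank prompt from above, with named slots.
PROMPT_TEMPLATE = Template(
    "You work as $article $job_title and you have a strong passion for the "
    "latest developments in $topic. You constantly read the news, and you're "
    "fascinated by solving $problems."
)

# Hypothetical pulldown choices; restricting them reduces bad inputs.
SUPPORTED_JOB_TITLES = ("engineer", "recruiter", "designer", "analyst")


def build_prompt(job_title: str, topic: str, problems: str) -> str:
    """Fill the template from Wizard answers, rejecting unsupported job titles."""
    if job_title not in SUPPORTED_JOB_TITLES:
        raise ValueError(f"unsupported job title: {job_title!r}")
    # Naive a/an rule based on the first letter; good enough for a fixed list.
    article = "an" if job_title[0].lower() in "aeiou" else "a"
    return PROMPT_TEMPLATE.substitute(
        article=article, job_title=job_title, topic=topic, problems=problems
    )


print(build_prompt("recruiter", "hiring technology", "candidate screening problems"))
```

`Template.substitute` raises an error if a slot is left unfilled, so a half-finished Wizard can't quietly ship a prompt with a hole in it.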

Expect these apps to have a whole host of scaffolding around them. They need help. They're subject to hallucinations and prompt injections and slow downs. Companies are already forming to solve those problems.

Operational tooling is key here. We've already got caching, usually through something like Redis, to improve response times and cost and to save on round trip API calls to the LLMs in the cloud. Some of the old school MLOps tooling is getting repurposed and refactored, like WhyLabs building monitoring for LLMs with LangKit, and other companies like Helicone are starting with a native approach to LLM monitoring. Other tools, like PromptLayer, do logging and version control of prompts. Tools like Guardrails validate LLM outputs to make sure they stay on track and we're already seeing the emergence of prompt injection detectors like Rebuff. I expect to see full blown ESET-style security apps to protect the entire pipeline of an AI driven app, along with middleware and more.
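The caching idea is simple enough to sketch in a few lines. To keep the example self-contained I'm using an in-process dictionary rather than Redis, and `ask_llm` is a hypothetical stand-in for whatever client actually calls the model. It only helps with exact repeat prompts.

```python
import hashlib
from typing import Callable


class CachedLLM:
    """Wrap an LLM call so identical prompts are answered from a local cache."""

    def __init__(self, ask_llm: Callable[[str], str]):
        self._ask_llm = ask_llm            # hypothetical: prompt -> model reply
        self._cache: dict[str, str] = {}   # swap for Redis or similar in production
        self.hits = 0
        self.misses = 0

    def __call__(self, prompt: str) -> str:
        # Hash the prompt so cache keys stay short and uniform.
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        if key in self._cache:
            self.hits += 1
            return self._cache[key]
        self.misses += 1
        reply = self._ask_llm(prompt)
        self._cache[key] = reply
        return reply
```

Every hit is a round trip to the cloud you didn't pay for. The tradeoff is that a cached answer never changes, so this fits summaries of a fixed article better than anything you want fresh each time.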

In the coming years it will be easier than ever to get these apps wired together and then ship them and sell them to eager buyers.

The Agent Test

When the world changes it's hard to get a handle on it. The old rules break down. We're not sure how things will shake out and how they'll develop. It’s sometimes scary but it’s also an opportunity.

That's what happened with the release of ChatGPT. Arguably, Stable Diffusion was the first model that rocketed AI into the popular imagination, but it was eclipsed by ChatGPT soon after. The sheer power of being able to talk with something that can talk back and understand us feels a little like magic.

DeepMind's cofounder, Mustafa Suleyman, said that ChatGPT's magic broke the Turing test and we need something totally new. Fooling someone that it's a real person for a few minutes is now trivial. He's suggesting a very capitalist approach to the new test.

"His 'modern Turing Test' would give an AI $US100,000, and then the researchers would wait for the AI to make $US1 million on its initial investment. See, only a true intelligence can 'make line go up'...The AI developer called this measure of understanding artificial smarts ‘artificial capable intelligence,’ and like any good capitalist, AI should be judged on its financial accomplishments rather than its capacity for human-level interaction."

It's no coincidence that he's basically suggesting a test that would mean we now have a truly powerful and fully autonomous Agent.

He might be a bit tongue in cheek here but he's not wrong. Making money in business is one of the hardest tasks in the world. A test like this would employ reasoning, long term planning, adaptation, flexibility, insight, intuition and much, much more. If an Agent could pull it off, it would mean we've entered a bold new era of artificial intelligence.

I'm not sure how long it takes us to get there or what kind of monster model and scaffolding we need to make that a reality but that's all right.

In the meantime, you don't have to wait for fully autonomous genius machines.

There are millions of Centaur apps just waiting to be written that will make you rich while we wait for R2-D2 and an AI that can make us a million dollars while we sleep.

Time to get building.