If We Build It, Everyone Lives

Why AI doomers are catastrophically, spectacularly, and dangerously wrong

It takes a certain kind of personality to see a miracle and call it a monster.

We live in a time of miracles. If you're reading this, congratulations: you're part of the luckiest 1% of 1% of people ever to live.

If you're alive today, you're part of a long chain of developments in human history in which poverty has dramatically dropped, people live longer and healthier lives, and hunger has fallen to single digits.

You can talk to anyone, anywhere on Earth, for practically nothing via video or voice, like you’re Dick Tracy. We have antibiotics that kill many of the bugs that used to kill us, MRI machines that can see inside of us and spot cancer before it takes over, and crumple zones in cars that make getting on the road safer. We have knives that don't rust thanks to the magic of stainless steel. We have refrigerators that keep our food cold, so it stays fresh longer and keeps us better nourished. Because of air conditioning, we can live in places that were basically intolerable only fifty years ago.

But if you listen to the AI doomers, it's all coming to an end, and fast.

They believe that the entirety of human history and technological development, which has led to dramatically less starvation, less infant mortality, and eradicated many deadly diseases, will be reversed and go in exactly the opposite direction because of a dangerous new general purpose technology:

AI.

It doesn't matter that other general purpose technologies (apart from weapons), such as the wheel, paper, the printing press, the internet, and hammers and axes, have universally contributed to that dramatic uptick in lifespan, health, longevity, and survivability. Those trends will not hold. Not only will they all soon be inverted by this new general purpose technology; it’s even worse:

We're all gonna die! And soon! Wiped out like the dinosaurs in a flash!

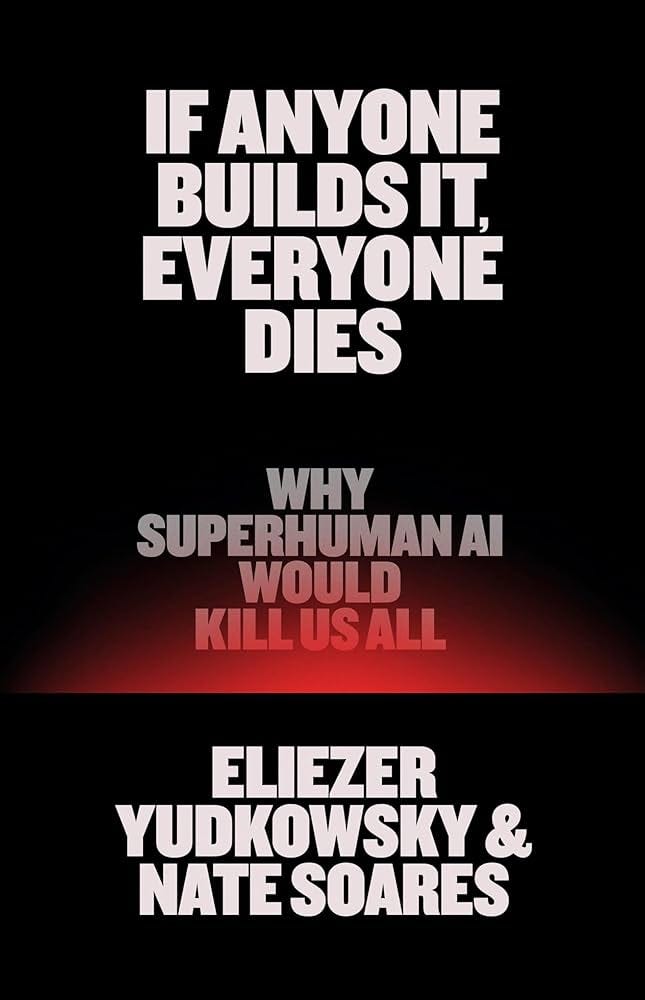

AI doomers are modern-day Chicken Littles, screaming that the sky is falling. Their prophet, Eliezer Yudkowsky, just co-wrote the modern equivalent of The Population Bomb, with the unbearably bleak title "If Anyone Builds It, Everyone Dies," his fears built on a sand castle of pseudo-rationality and a steady diet of pulpy B-movie sci-fi. He once called, in Time magazine, for destroying rogue datacenters by airstrike, insisting that we should be less scared of "a shooting [war] between nations than of [an AI] moratorium being violated." In other words, World War III is preferable to letting people build AI.

As reporter Nirit Weiss-Blatt writes in her new piece, "The Rationality Trap":

“In 2024, Yudkowsky told The Guardian that ‘our current remaining timeline looks more like five years than 50 years. Could be two years, could be 10,’ and ‘we have a shred of a chance that humanity survives.’ MIRI president Nate Soares, who co-authored the new book on how AI ‘would kill us all,’ told The Atlantic that he doesn’t set aside money for his 401(k): ‘I just don’t expect the world to be around.’”

The book will probably sell well because fear sells. The media knows blood drives clicks. If it bleeds, it leads. Actually rational and sober analysis struggles desperately today to compete in an attention economy tuned for shock and rage clicks.

The doomers have been painting lurid pictures of gigadeath for years, with paperclip-maximizers turning us all into paperclips and malevolent superintelligences launching nukes. They’ve whispered their paranoid fantasies into the ears of politicians and journalists, demanding we slam the brakes on progress, regulate GPUs like they're assault rifles, and treat potentially one of the most transformative technologies in human history as an existential threat.

(Clippy. Source: Microsoft)

They warned that models trained on 10^23 FLOPs were too dangerous. Then 10^24. Then 10^25 and now 10^26. Today dozens of models sail past the 10^26 mark, from OpenAI, Anthropic and xAI, and yet civilization stubbornly refuses to collapse. In fact, it’s getting better, in ways both big and small, because of the very technology they want to strangle in its cradle.

The doomers are not just wrong; they are catastrophically, spectacularly, and dangerously wrong.

Their predictions have a 0% success rate, and yet they keep getting press. They move the goalposts and promise us that this time they'll be right and we'll all be very sorry real soon, just you wait.

If their crazy policy proposals pass, like the MIRI report that calls for mass government surveillance of AI researchers and restricting GPUs like they're AK-47s, they will do real, lasting, nasty damage that's infinitely worse than the harm they're supposed to prevent.

That's because when activists push big, scary headlines about the bad things they predict a technology will bring (a silent spring, mass unemployment, a new ice age), they are usually dead wrong, and they almost always ignore the good things we stand to lose without the technology: the jobs that never get created, the clean air we don’t breathe, the cascade of new inventions that never come to be.

In other words, technophobia has a body count.

When you throw a wrench in the wheels of progress, an alternative future full of opportunities vaporizes.

What diseases will we cure with stem cell breakthroughs decades later than we could have, because we wasted eight years under George W. Bush restricting federal funding for the research? How many damaged lungs did we get because we killed off nuclear power and kept right on burning coal to keep up with electricity demand?

Opportunity cost is invisible, but it has one of the biggest body counts in history.

In science, every lost year compounds: a paper published in the journal Technology and Innovation in 2011 estimated that the moratorium delayed first‑in‑human stem cell therapies for macular degeneration and Type 1 diabetes by at least five years. That's countless patients who could have regained their eyesight or thrown away their insulin pumps earlier.

But don't worry. While some people are out there selling panic and fear, the rest of us are busy building a better world. A world where AI isn’t a monster, but a miracle.

A world where, if we build it, society gets smarter, more resilient to shocks, and better able to solve our biggest challenges, and where AI sparks a medical revolution and more.

In short, a more intelligent world is coming and you shouldn't be afraid.

You should be very, very excited.

The Doctor's New Superpower

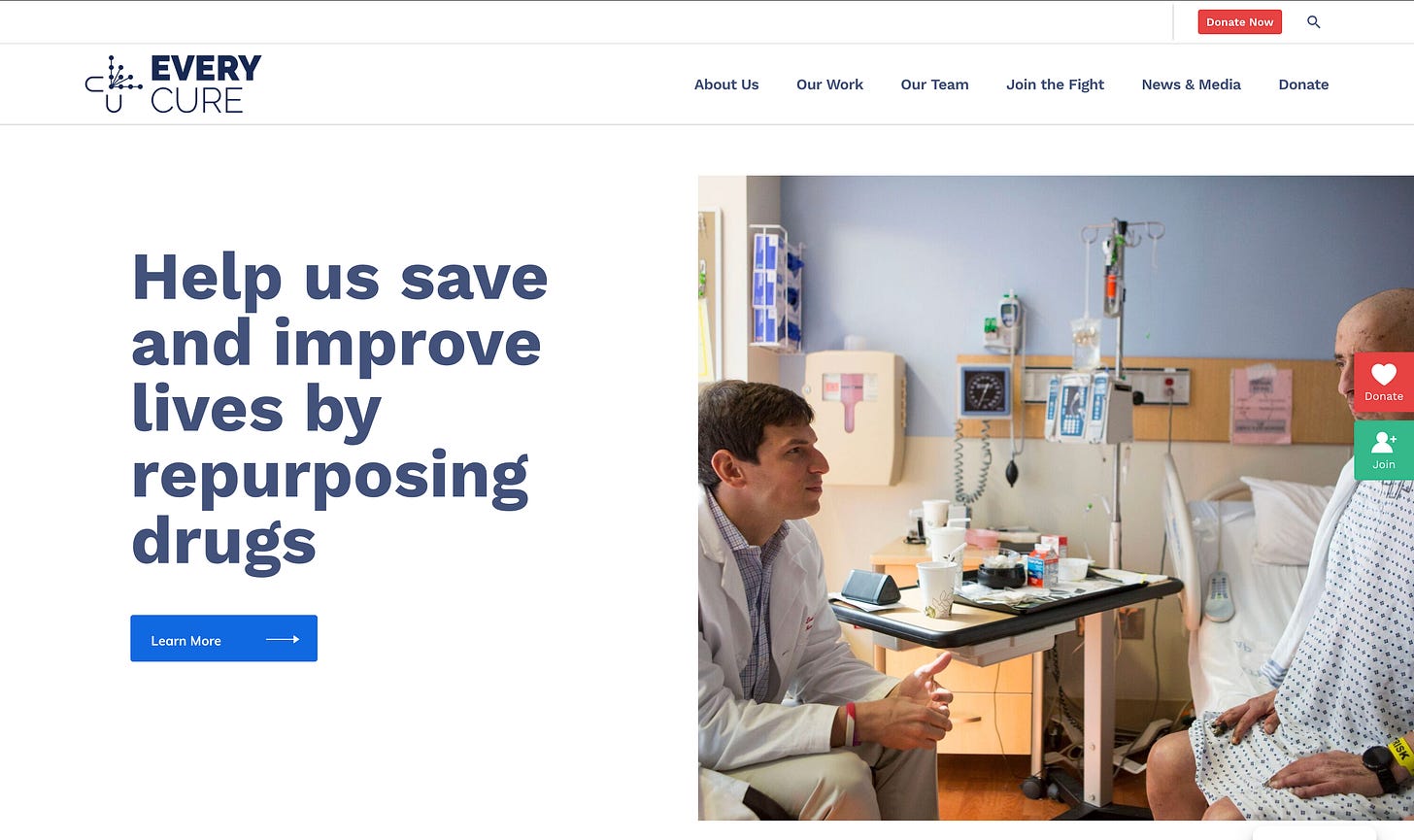

Take the story of David Fajgenbaum, a 25‑year‑old medical student. When he collapsed in 2010, he discovered he had Castleman disease, a near-certain death sentence because there was no effective treatment. In an ICU in North Carolina, a priest read him last rites. The former Georgetown University quarterback endured four near‑fatal relapses in as many years and cycled through every available therapy.

Desperate, he turned research scientist. Fajgenbaum pored over his medical records, biopsied his own lymph‑node tissue, and ran laboratory assays. He hypothesized that sirolimus (rapamycin), an organ‑transplant drug never tested on Castleman disease, could shut down the disease. His clinicians agreed. The experimental treatment worked, and Fajgenbaum has been in remission since early 2014.

That's not an AI story yet. It's a story of human triumph, but it soon became an AI story too.

Most rare‑disease patients lack an M.D., a Ph.D., and a research lab, so in 2023 Fajgenbaum co‑founded Every Cure, a non‑profit determined to industrialize drug repurposing. Backed by a US$48 million ARPA‑H contract and a US$60 million Audacious Project grant, Every Cure is "developing the world’s most comprehensive open-source AI engine...for linking biological changes to human disease and therapies at scale...a 36 million cell (3,000 drug X 12,000 disease) heatmap with linkage scores for every possible drug-disease combination based on the strength of evidence. This will be open to all physicians, researchers, and patients to provide hope and spur new hypotheses."

TL;DR: the engine, which Every Cure calls MATRIX, finds FDA‑approved drugs that may treat overlooked illnesses.

(Source: Every Cure)
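At its core, the heatmap Every Cure describes is a score for every drug-disease pair: 3,000 drugs times 12,000 diseases gives the quoted 36 million cells. The minimal Python sketch below is only an illustration of that idea, not Every Cure's code or data. The drug names, disease names, and scores are invented placeholders, and `rank_drugs` is a hypothetical helper showing how such a matrix might be read one disease at a time to surface the strongest candidates.

```python
from typing import Dict, List, Tuple

# Matrix dimensions quoted by Every Cure: 3,000 drugs x 12,000 diseases.
N_DRUGS, N_DISEASES = 3_000, 12_000
TOTAL_CELLS = N_DRUGS * N_DISEASES  # 36,000,000 possible drug-disease pairs

# Sparse toy matrix: (drug, disease) -> linkage score in [0, 1].
# Every name and score here is invented for illustration; this is not real data.
Scores = Dict[Tuple[str, str], float]
toy_scores: Scores = {
    ("drug_A", "disease_X"): 0.82,
    ("drug_B", "disease_X"): 0.41,
    ("drug_C", "disease_X"): 0.12,
    ("drug_B", "disease_Y"): 0.77,
    ("drug_A", "disease_Y"): 0.05,
}


def rank_drugs(scores: Scores, disease: str, k: int = 3) -> List[Tuple[str, float]]:
    """Hypothetical helper: return up to k (drug, score) pairs for one disease,
    strongest evidence first."""
    column = [(drug, s) for (drug, dis), s in scores.items() if dis == disease]
    column.sort(key=lambda pair: pair[1], reverse=True)
    return column[:k]


if __name__ == "__main__":
    print(f"{TOTAL_CELLS:,} cells")
    print(rank_drugs(toy_scores, "disease_X"))
    print(rank_drugs(toy_scores, "disease_Y", k=1))
```

Ranking a single disease's column is what turns a 36-million-cell table into a short list a clinician can act on. The hard part, which this toy skips entirely, is producing trustworthy scores in the first place.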

MATRIX has already:

Flagged propranolol for angiosarcoma, an aggressive soft‑tissue cancer; that treatment is now in phase 2 trials.

Suggested a three‑drug “Hail‑Mary” that placed a POEMS‑syndrome patient into durable remission.

Thousands of rare‑disease communities suddenly have plausible therapeutic starting points, and those leads are practical to pursue because the drugs are already on the shelf and safety‑profiled.

This is not speculative science fiction; it is happening today. And it exposes the fatal blind spot in the doomer narrative: when we wield AI to augment human insight, the default outcome is not catastrophe, it is lives saved.

And it’s not just about finding new cures in old data. AI is revolutionizing the very practice of medicine, giving doctors new superpowers to fight disease.

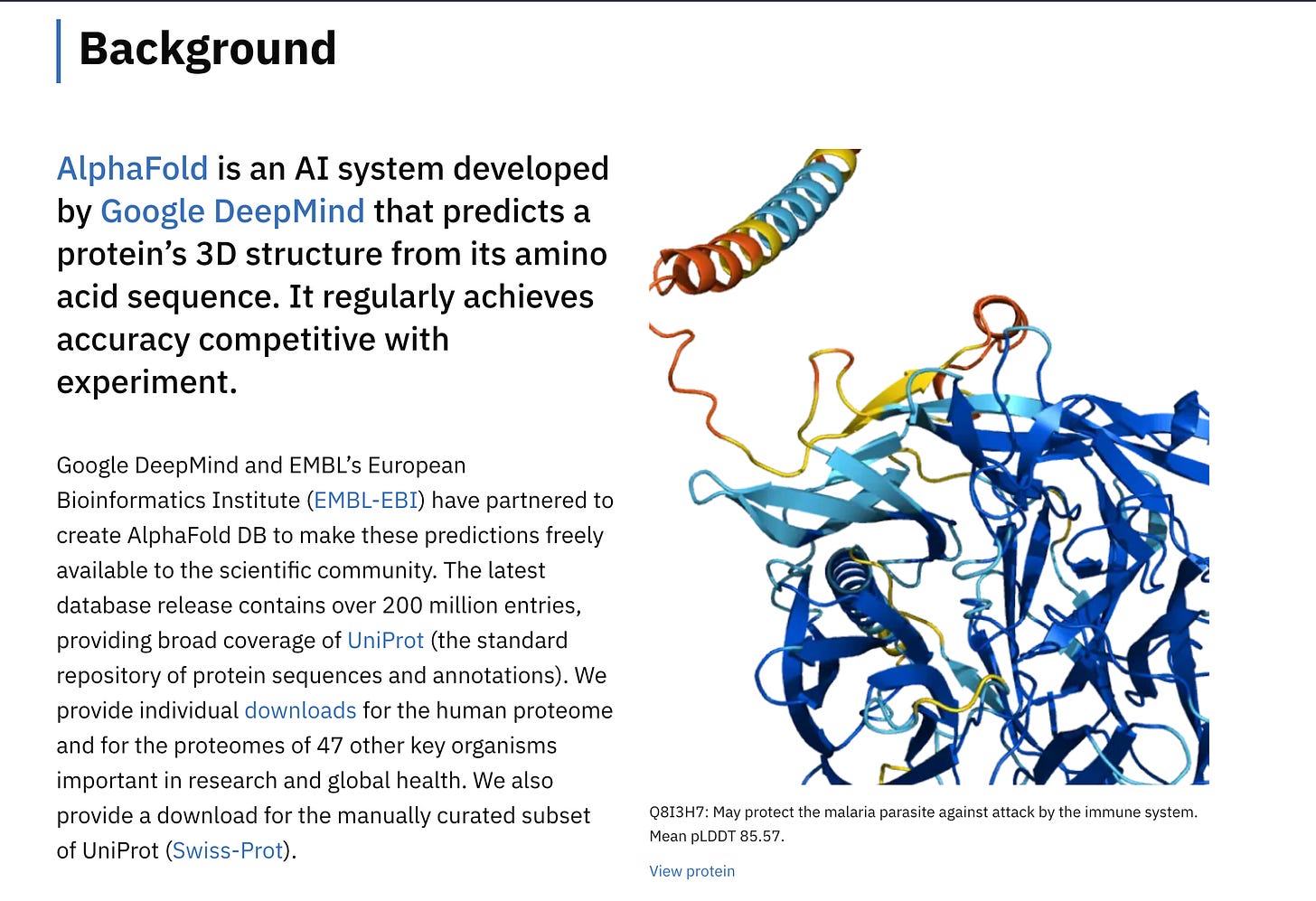

In a recent blockbuster moment for science and AI, the 2024 Nobel Prize in Chemistry went to DeepMind’s Demis Hassabis and John Jumper for AlphaFold, the neural‑network juggernaut that cracked biology’s Holy Grail by predicting the 3‑D shapes of virtually every known protein, and to protein‑design pioneer David Baker for computational protein design. AlphaFold has unleashed a torrent of potential breakthroughs, from fighting malaria, to battling Parkinson's, to enzymes that munch plastic. Its open‑access release vaulted us decades ahead in medicine.

In England, GPs are using an AI tool called C the Signs to scan patient records. This isn't about replacing doctors; it's about augmenting them, giving them a tool that can see the hidden patterns in a patient's history and test results. The result was a jump in cancer detection rates, from 58.7% to 66.0%, in a field where every percentage point means more lives saved. That's more people getting diagnosed earlier, faster, and with a better chance of survival.

This is the reality of AI in healthcare: not a robot doctor making life-or-death decisions, but a smart assistant that helps human doctors be better at their jobs.

AI played a crucial role in developing the COVID vaccines and scaling up mRNA research, too. Now the same mRNA technology is in clinical trials for HIV, Zika, and cancer.

Or take Google's Co-Scientist, a model designed to help scientists do their work better and faster. Professor José R. Penadés and his team at Imperial College London spent a decade working out why some superbugs are immune to antibiotics; Co-Scientist came to the same conclusion in 48 hours.

Penadés "told the BBC of his shock when he found what it had done, given his research was not published so could not have been found by the AI system in the public domain." Now the professor says it might have saved years of work.

Prof Penadés said: "I feel this will change science, definitely... I'm in front of something that is spectacular, and I'm very happy to be part of that... It's like you have the opportunity to be playing a big match - I feel like I'm finally playing a Champions League match with this thing."

From spotting the faint, almost invisible signs of future breast cancer in a mammogram years before a human radiologist could, to helping pathologists make faster, more accurate diagnoses, AI is weaving itself into the fabric of medicine. It's a scalpel, a microscope, and a diagnostic tool all in one, and it's powered by the same technology the doomers want us to fear.

But the revolution isn't confined to the hospital. It's happening everywhere. Let's look at where AI is making a difference in everything from engineering and software development, to the most basic of human needs: a place to call home.

Unlocking the Past, Building the Future

For decades, we’ve been told that building enough housing, especially in our most choked-off cities, is an unsolvable puzzle. A nightmare of zoning, costs, and endless delays. But what if we could design and build not just faster, but smarter?

In Oakland, California, a development called Project Phoenix is rising from the ashes of an old industrial site. It’s a partnership between modular construction pioneer Factory_OS and software giant Autodesk. They fed a generative AI design tool their constraints (studio, one-bedroom, and two-bedroom units; cost; carbon footprint; even quality-of-life metrics like sunlight and views). In hours, not weeks or months, the AI generated thousands of optimized designs for a 316-unit development, with a third of the homes designated for low-income or formerly homeless residents.

The real existential threats aren't rogue AIs; they're the slow, grinding challenges of energy, materials science, and engineering that limit our progress. For centuries, discovery has been a painful process of trial and error, a slow march of incremental gains. AI is speeding all of that up, getting us to solutions faster and faster.

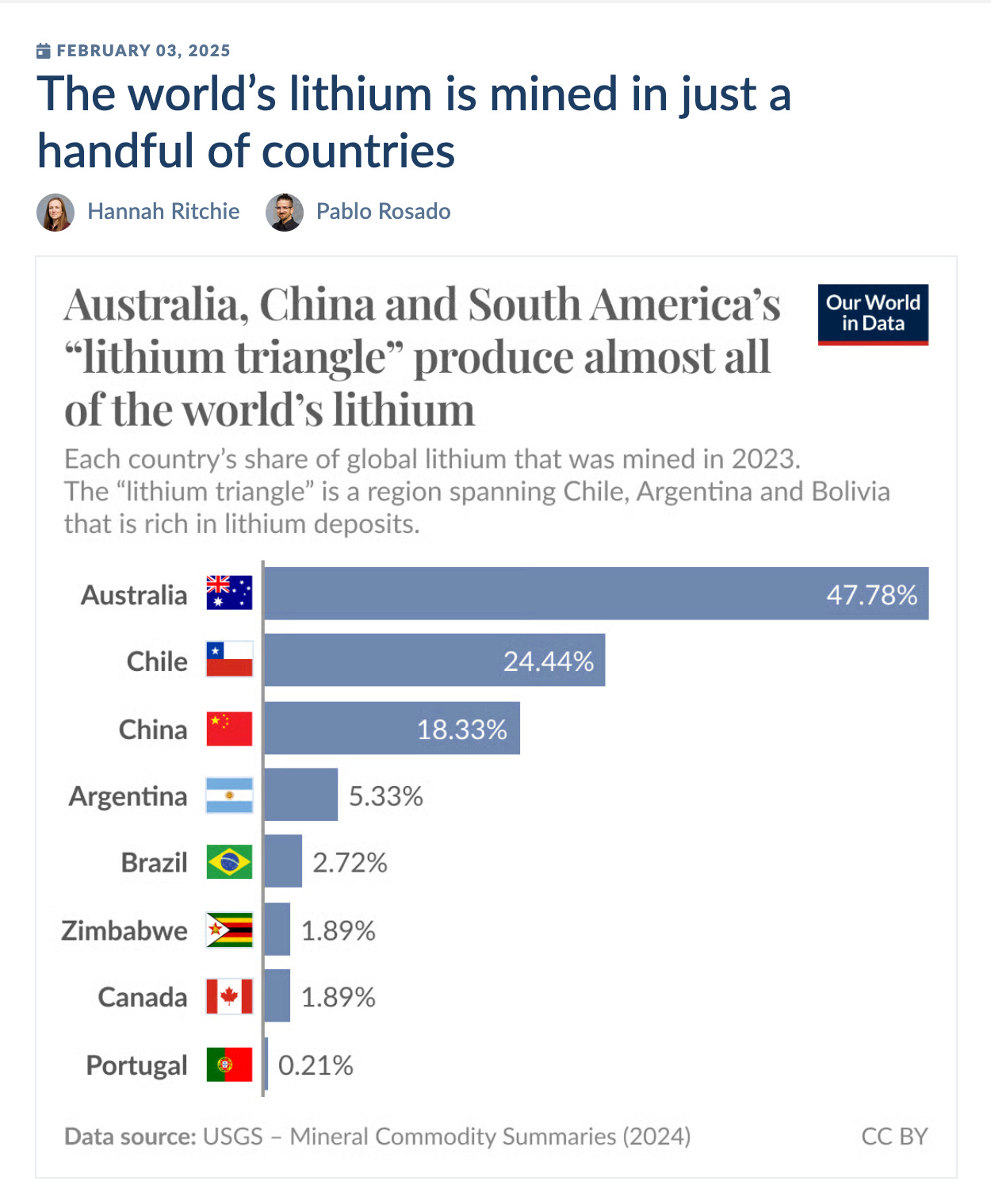

Take the race for better batteries. We need batteries that are safer, charge faster, and use less of precious resources like lithium. The traditional approach? Mix and match elements in a lab, a process that could take decades to stumble upon a breakthrough.

Recently, Microsoft and the Pacific Northwest National Laboratory (PNNL) took a different approach: they used AI to find a new material for solid-state batteries. Instead of relying on a handful of chemists, they unleashed a torrent of high-performance computing and AI models to analyze 32 million potential inorganic materials. The AI didn't just look for candidates; it filtered them for stability, reactivity, and their ability to conduct ions. In just 80 hours, it narrowed the field from 32 million to just 18 candidates. One of the most promising may use 70% less lithium.

That's a big deal because lithium-ion batteries increasingly power everything from our grids to our phones, cars, satellites, and medical devices. Lithium demand is exploding and is expected to rise 5x to 10x by 2030. Even worse, mining it is environmentally and geopolitically problematic, so finding a formula that uses a lot less of it, while still allowing our civilization to grow, is a perfect example of what augmented intelligence means for the world.

(Source: Our World in Data)
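The Microsoft/PNNL funnel, 32 million candidates down to 18, is a staged screen: each pass discards materials that fail one test before the next test runs, so the expensive checks only see a shrinking pool. The minimal Python sketch below shows that pattern under loudly stated assumptions. The `Candidate` fields, the conductivity threshold, and the five toy "materials" are all hypothetical, and in the real project those checks were made by AI models and high-performance computing, not by stored true/false flags.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Candidate:
    name: str
    stable: bool             # hypothetical: predicted stable by some model
    reacts: bool             # hypothetical: predicted to react with the electrodes
    conductivity: float      # hypothetical predicted ionic conductivity (arbitrary units)
    lithium_fraction: float  # hypothetical lithium content vs. a baseline (1.0 = same)


Stage = Callable[[Candidate], bool]


def screen(pool: List[Candidate], stages: List[Stage]) -> Tuple[List[Candidate], List[int]]:
    """Apply each filter stage in order; return the survivors and the pool size
    before the first stage and after every stage."""
    counts = [len(pool)]
    for keep in stages:
        pool = [c for c in pool if keep(c)]
        counts.append(len(pool))
    return pool, counts


stages: List[Stage] = [
    lambda c: c.stable,               # drop materials predicted to decompose
    lambda c: not c.reacts,           # drop materials predicted to react
    lambda c: c.conductivity >= 1.0,  # keep decent conductors (made-up threshold)
]

toy_pool = [
    Candidate("M1", True, False, 2.5, 0.3),
    Candidate("M2", True, True, 3.0, 0.9),
    Candidate("M3", False, False, 4.0, 0.5),
    Candidate("M4", True, False, 0.4, 0.2),
    Candidate("M5", True, False, 1.2, 0.8),
]

if __name__ == "__main__":
    survivors, counts = screen(toy_pool, stages)
    survivors.sort(key=lambda c: c.lithium_fraction)  # least lithium first
    print(counts)                       # [5, 4, 3, 2]
    print([c.name for c in survivors])  # ['M1', 'M5']
```

Ordering the cheap filters first is the design choice that makes this kind of search tractable: most of the 32 million candidates never reach the costly stages at all.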

Again, despite the ludicrous headlines, it’s not about replacing human ingenuity but augmenting it, compressing 250 years of chemistry into a matter of months. This isn't a fluke; companies like Aionics are forming to build entire platforms to design and commercialize new materials at a speed that was unimaginable a few years ago.

Now, are these systems perfect? Of course not.

AI is a new kind of workflow. It shifts the bottlenecks to three new areas:

Problem definition/concepting

Iterations

Verification

If you can't define the problem, AI won't help you much. Iterations with an AI can be nearly instant or take a massive amount of time. The time it takes to get to a viable solution is often unpredictable, and that can swing you from a 10X speedup to a 10X slowdown in an instant. And verification still takes time. You have to run experiments in the real world of friction and dust. And if you don’t know what you’re looking at, because you aren't already an expert, you won’t be able to verify the output. AI tends to make folks who already know something better at what they do, because they can verify its outputs.

But these workflows will continue to get more streamlined and refined as we learn how to work with AI. And they will speed up our ability to design and build.

The goal isn't sterile, flawless perfection. The goal is to be better than the current speeds and time to value. To demand perfection before we deploy these tools is to condemn us to the slow, painful, and often fruitless march of trial and error.

We don't throw away a new drug because it has side effects; we work to mitigate them. Our first prototype often looks like a Rube Goldberg machine and needs to be scrapped, but we learn from it and build a better mousetrap the second time around.

We embrace progress, flaws and all, and we iterate.

That is how every single human advancement has ever worked.

The Great Co-Creation

And what about the jobs? This is the doomers' favorite scare tactic, the one they trot out to frighten the masses. "The robots are coming for your jobs!" they shriek, painting a picture of a future where humans are obsolete, relegated to the scrap heap of history with chronic unemployment.

We've seen a flurry of stories like this. Derek Thompson just wrote a widely read piece in The Atlantic arguing that AI is killing jobs for college grads, followed by a wave of copycat stories in the New York Times, Axios, PBS, and the Guardian. Most of them, unlike Thompson, didn't bother to question the "AI is killing jobs" narrative at all; they just ran with it while screaming about the end times.

But as Noah Smith wrote on his Noahpinion blog:

"When people actually started taking a hard look at the data, they found that the story didn’t hold up. Martha Gimbel, executive director of The Budget Lab, pointed out what should have been obvious from Derek Thompson’s own graph — i.e., that most of the 'new-graduate gap' appeared before the invention of generative AI. In fact, since ChatGPT came out in 2022, the new-graduate gap has been lower than at its peak in 2021!"

These stories all come from fear and a failure of imagination, a fundamental misunderstanding of what this technology is. Humans are great at imagining all the jobs that might go away but they're terrible at imagining what kinds of jobs will get created.

We've already destroyed all the jobs multiple times in history. You didn't tan leather to make your clothes today or hunt the water buffalo for food.

But we've always replaced those jobs with new and more varied jobs. Nobody is calling for a return to whale hunting so we can dig the white gunk out of their heads to make candles. We flip a switch, and the electricity powers our energy-efficient LEDs that last for decades and don't stink up the house.

We seem to resent progress today, and to think that economics is a zero-sum game in which making things more efficient makes everyone poorer, when it works in exactly the opposite way.

It's a belief echoed by AI pioneer and socialist-turned-doomer Geoffrey Hinton, who recently told the Financial Times that "AI will make a few people much richer and most people poorer." He also said a decade ago that radiologists would be made obsolete by AI, yet instead we have widespread adoption of AI by radiologists and more radiologists than ever, so I wouldn’t give his predictive powers much credibility at all.

(Source: Financial Times)

These kinds of beliefs stem from a fundamental misunderstanding of economics and progress in world history.

As Andrew Leigh writes in How Economics Explains the World, "In prehistoric times, the only source of artificial light was a wood fire. To produce as much light as a regular household lightbulb now gives off in an hour would have taken our prehistoric ancestors fifty-eight hours of foraging for timber...Today, less than one second of work will earn you enough money to run a modern household lightbulb for an hour. Measured in terms of artificial light, the earnings from work are 300,000 times higher today than they were in prehistoric times, and 30,000 times higher than they were in 1800. Where our ancient ancestors once toiled to brighten their nights, we rarely even think about the cost as we flick on a light."

And no, this time is not different.

If we have to bet between a black swan event that has never happened in all of human history and the overwhelming evidence of people adapting to and integrating technology for millions of years, we should go with history. Of course, this time might be different, because the past is never a perfect predictor of the future, but let's wait for actual evidence in the real world before we decide to take radical, extreme actions.

More than likely, AI isn't a replacement for human intelligence; it's an amplifier, a co-intelligence.

It's the beginning of a great co-creation, a partnership between human and machine that will unlock unprecedented levels of creativity and productivity.

(Source: Amazon and Ethan Mollick)

Take chip design. For sixty years, it’s been a brutally complex puzzle. Engineers spend months painstakingly arranging billions of transistors, balancing power, performance, and area. It’s a high-stakes game of Tetris at an atomic scale. In 2020, Google unleashed a reinforcement learning model, now called AlphaChip, on the problem. Approaching it like a game, the AI learned to generate chip layouts that were superhuman, accomplishing in hours what took human teams months.

This isn’t science fiction. AlphaChip has been used in the last three generations of Google’s Tensor Processing Units (TPUs), the engines that power their AI services. NVIDIA is using similar tools, likely close to what its Advanced Applied AI team outlined in the CircuitVAE paper, to design circuits for its GPUs. This is how you scale a team and slash time to revenue. It’s not about firing engineers; it’s about giving them superpowers, letting them focus on architecture and new ideas while the AI handles the grueling optimization.

The repetitive, soul-crushing parts of our jobs? The endless data entry, the mind-numbing paperwork, the rote analysis? Let the machines have it. Let them free us to do what we do best: to think, to create, to connect, to dream.

We are not building a world without work. We are building a world where work is more human, more creative, and more meaningful than ever before.

People who embrace AI and work closely with it will displace people who refuse to work with it. Film editors who refused to switch to digital editing don't have a job anymore but there are more editing jobs than ever. That's because making films got easier with digital tools and that meant more films, which meant more jobs. Give people power tools and they are remarkably adaptable with them.

Who are the people best placed to get the most out of generative AI tools for film, like Google's Veo 3-based Flow and other tools that control everything from character motion to camera angle?

You guessed it. Filmmakers.

They already understand shot composition, lighting, and camera work. Artists are poised to get the best out of tools such as Runway's Act-Two, which ditches cumbersome motion-capture suits for real-time filters over actors' performances. That means that instead of actors and Hollywood being cooked, actors get a new lease on life and the cost of making blockbusters comes down.

We're already seeing a revolution in other areas too, especially software. When it comes to coding, we're about to have a lot more programs and software.

Agentic coding tools like Claude Code have taken software design to the next level. It's no longer just vibe coders using these tools. Real engineers and engineering managers are embracing them, moving beyond the vibe-coder and 10x-engineer hype to using a squad of digital workers to augment their development process. Again, though, it doesn't mean fewer jobs, just more software. As Naval wrote recently:

"If AI lets non-developers replace junior developers, imagine what it lets junior developers do."

AI levels up everyone and the more you know the more you get out of it.

Your engineering manager can get things built now, too. So can the coder who spent two decades coding but needs to learn a new language or a new set of design patterns. Now they can adapt to new technologies faster with the help of AI and leverage their twenty years of wisdom along the way.

At the top of the chain, apps will get more powerful and more complicated as AI enters the workforce alongside traditional coders. At the bottom of the chain, small development teams will be able to do a lot more. And soloists will be able to create personalized programs, which will lead to some great one- and five-person companies.

We're entering an era of personalized coding. Dream up an app and build it for yourself, even if nobody else needs it. It doesn't matter if nobody else wants it, because the barrier to entry will get so much lower.

People who can think critically, plan, strategize, reason, and imagine will have a massive advantage in the coming world, because if you know what you want and know how to ask for it well, you can get the program you want out of AI.

Already, kids are coding with AI, like this eight-year-old. She'll grow up in a world where coding with AI is the default.

And if you tell her twenty years from now that people used to do it by hand and AI was once controversial, she will look at you like you have two heads and wonder what the hell you are talking about as she happily creates another app.

The Disconnect and the Reality

Right now, pessimism, especially about AI, is running rampant in Western society, and it's a terrible sign. When societies turn pessimistic and stop believing in the future, it's often a self-fulfilling prophecy, as Johan Norberg shows in his fantastic book Peak Human. The book races through seven of history’s greatest golden ages, from democratic Athens to the tech‑turbocharged Anglosphere, to prove a simple law of civilization: open minds and open markets light the fuse for art, science and prosperity, while paranoia, protectionism and rigid hierarchies snuff the flame and send once‑mighty cultures tumbling into the footnotes.

(Source: Johan Norberg and Amazon)

Drawing on case studies, from the algebra that sprang from Abbasid Baghdad to the Renaissance Italy that married art to industry, Norberg argues that progress and technological churn aren’t problems but the very oxygen of thriving societies; cut it off and stagnation, irrelevance and decline rush in.

Golden age civilizations embrace the future, embrace technology, embrace new ideas from anywhere and adapt them rapidly.

The doomers have focused us on phantom risks and hypothetical harms while ignoring the concrete, world-changing benefits. For every piece of AI-generated spam, there’s a medical breakthrough, a new material discovered, or a feat of engineering that was impossible just a few years ago. People who are obsessed with the imaginary monsters under the bed can’t see the miracle happening right in front of their eyes.

That doesn't mean there are no problems with AI. They're just not the problems doomers scream about. AI-powered weapons and surveillance will supercharge authoritarian regimes and police states alike. But we won't stop nation states from building weapons. It's what they do, to protect their people and to fight other nations when things go wrong. AI can and will be used in weapons, but that doesn't mean AI is a weapon. Conflating the two is a dishonest and idiotic rhetorical flourish meant to scare people. It's a lie.

AI is a general purpose technology. It's not a sentient being with its own desires. It’s a tool. It's a mirror that reflects our own ingenuity, our own compassion, our own desire to build a better world.

To fear it is to fear ourselves.

To demand we stop building is to demand we stop dreaming, stop striving, and stop reaching for a better future.

The future is not a predetermined path to destruction. It's a landscape of infinite possibility, and we are the architects. We can choose to be guided by fear, to huddle in the dark and curse the light, or we can choose to build. We can choose to use these incredible new tools to solve the oldest of human problems: disease, poverty, and ignorance.

So let the doomers have their bleak fantasies. Let them wallow in their apocalyptic predictions. We have work to do. We have a world to build. And in the world we’re building, AI isn’t the end of humanity. It’s the beginning of a new chapter, one where we are healthier, smarter, and more capable than ever before.

If we build it, everyone lives.

And we are building it, right now.

This is a much better take than the one I wrote a couple weeks ago after hearing the "If we build it, we all die" people on the Sam Harris podcast. (Not entirely sure when Sam Harris got so doomer/boomer, not gonna lie, I am more than disappointed.)

Keep making great shit.

Yes Daniel, I agree with everything you are saying. The real issue is simply that building an AI the way they are, basically by growing it wild and training it, if it happens to become much smarter than us humans, is downright dangerous and stupid. It is dangerous to f*ck with anything if it's a lot smarter than you, especially to aggressively grow it wild and then try to train it. Because if you do that, doesn't that sound a lot like an apex predator?

Haven't you ever read Jurassic Park?

Now, I do assume that by keeping all these systems 1. not *too* much smarter than us, remember, making them way too smart could be deadly for us! (If we just train a wild grown system.) Wouldn't an army of tireless geniuses we can copy be more than enough? Yes, yes it would, without all these crazy risks people are talking about here. Like if I told you yeah there's a 20% chance you become a genius, and an 80% chance that uh, well, you end up dead or enslaved, would you do it? Sounds pretty silly to even talk like that, when we don't even know what "superintelligent" really means, and when *we can just take our 95% chance of global prosperity* right? So I guess that's food for thought.

Now point 2. here, so about these armies of AI that are just "ordinary genius" (They will be frickin smart! Like even "Einstein level"! Just not dangerously superintelligent or some nonsense.) And these AI will collaborate. And design collaboration systems. And scientific methods and principles frameworks theorems and protocols and so on and so forth. Honestly there is only so much "raw intelligence" that would even help! If you read stories about the smartest people in history half of them ended up depressed or died failures! You know what has led to 99% of all the prosperity improvements you mentioned? The scientific method. And yes, you know that Dan. (And I'm on your side remember but this is really important.) But the takeaway here is really that simple imo.

Ok now 3. we should *not* be "trusting" power hungry closed labs to do any of this. Super bad move. We need to address that issue regardless of whether you believe we are just building an army of geniuses or superintelligence (whatever that is). So in any case, in order to ensure that this technology can continue to serve us democratically (FOR the lay person), what's needed more than at any other time in history, for many of the same reasons you have highlighted and I fully 100% agree with you on, is that we advocate for, fight for, and maintain OPENNESS and TRANSPARENCY. So this is the real fight, and it will be a tough one. Look at how we have allowed a large surveillance state to exist right now, which of course is also feeding the closed AI labs. And there are a few other examples, but it looks like we have a great chance really if we take the middle ground here, so this is the single most important issue right now imo.