Embracing Cognitive Biases in Artificial Intelligence to Build One Shot Learners

Cognitive biases get a lot of flak. Smart people do their best to avoid them or mitigate them. Scientists try to control for them in their experiments. Confirmation bias can kill an experiment faster than bacteria in a Petri dish. Even the name suggests they’re something to avoid at all costs. All in all, “bias” is a horribly despicable word. Who wants to be unfairly prejudiced and short sighted? Wikipedia defines cognitive biases as “tendencies to think in certain ways that can lead to systematic deviations from a standard of rationality or good judgment.” Nobody wants to be a bad thinker.

But what if we’re wrong about cognitive biases?

What if naming them biases creates a prison that traps our understanding? Because they’re named something inherently negative, our brain automatically assumes they’re all bad, which is a bias in and of itself. You start with a negative belief before you even get out of the gate!

And what if that negative belief structure is blinding us to the fact they’re actually incredibly useful for AI researchers?

In other words: What if they’re a good thing?

You see, we might have a serious bias about cognitive biases. We have a blind spot. In fact, I think many cognitive biases (though not all) are so important that they should be renamed “cognitive shortcuts” or “cognitive heuristics.” And as the science of Artificial Intelligence develops in the coming years, particularly in the realm of smaller data sets and unsupervised learning, I think researchers will look more closely at these “biases” and understand them for exactly what they are: necessary shortcuts that help us deal with learning from little to no information.

As Jack Clark, communications director at OpenAI, notes, “We’re trying to build systems that are…‘one shot learning’ systems. The idea is developing systems that can learn useful stuff from a handful or even a single example.”

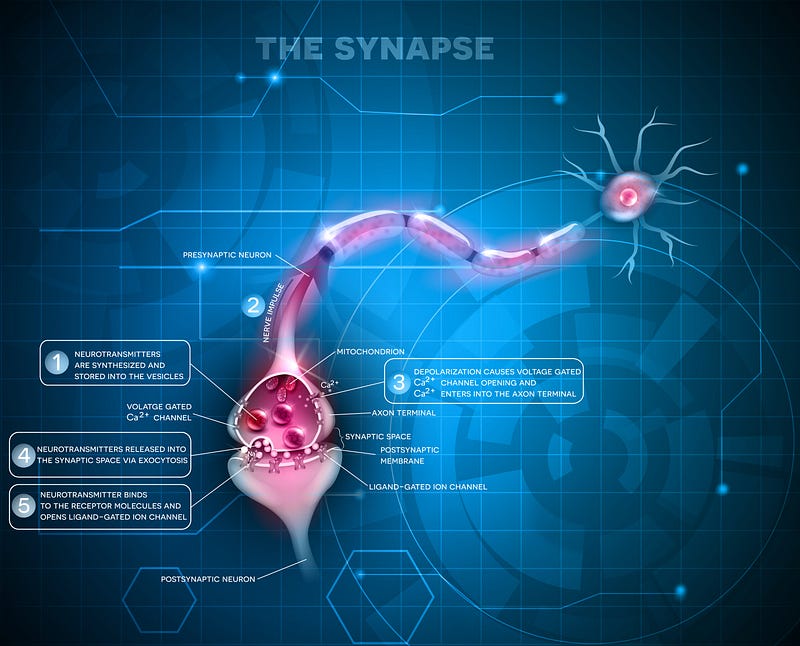

Current successful AI models are dependent on massive amounts of data. With Deep Learning we could feed a machine millions of images of tumors and the neural net might get good enough to pinpoint cancers with an accuracy that puts the best radiologists to shame. But humans can do that with a lot less info. When you take a kid out into the backyard and throw him a ball, he doesn’t need to see ten million hand-labeled images of people throwing and catching a ball to learn how to play catch. He just needs his father and a little experimentation. Give him a few weeks and he’ll develop a reasonable aptitude for it. If he goes on to become a pro he’ll certainly study lots more examples, but still nowhere near as many as a machine needs to study today. Now some kids will be better than others due to latent talent, aptitude and desire, but all humans seem to have some built-in universal learning algorithms. They can learn with surprisingly little input, which is something that computers just cannot do yet.

AI researchers have looked to develop universal learning algorithms since we first dreamed of intelligent machines.

What if evolution already gave us the answers to creating “one shot” learners but we missed them because we called them biases?

Cognitive shortcuts developed as a good way for us to deal with the world. They’re algorithms and filters. They allow us to make quick decisions, avoid danger, find food, build relationships and understand the things around us, while conserving our energy. There’s a reason your brain wants to conserve energy too. It may need it for something really dangerous. Your brain’s primary job is to keep you alive for as long as possible. If you find yourself in a burning building you’ll thank your brain for hanging onto that energy so you can find your way out.

Frankly, we couldn’t live without our biases. We need them. They’re evolution’s elegant gift to you. They solve a very serious problem. As Buster Broom notes in his excellent cognitive “biases” roundup article, there’s too much information in the world. Your brain couldn’t possibly deal with it all at once. You don’t have enough time or energy. Literally. If you had to pick out every leaf on every tree you ever saw, you’d crumple into a fetal position and have to eat 24 hours a day just to have enough energy to get out of bed for five minutes before crashing again. Your brain is trying to pick out the most important bits as quickly as possible so you can take action in your environment.

We developed cognitive shortcuts as an elegant way to limit the torrent of information coming at us every single second of every day. When you don’t know what to do next, it’s a useful strategy to check out what everyone else is doing and copy them. If you don’t have a lot of examples to work with, then it’s not necessarily a bad thing to give added weight to the few examples that you have at hand or remember, to see what kind of initial conclusions you can draw. In fact, if your brain gave added weight to that memory it’s probably for a damn good reason. Your brain isn’t just delivering the first memory it pulls from a random search. It’s giving you one that it decided mattered most to you. It may be because it was the most painful or pleasurable, which is how nature adds “weights” to our neural networks.
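To make that idea concrete, here is a toy sketch of a memory that gives extra weight to vivid examples. It is only an illustration under my own assumptions: the `recall` function, the two-number feature vectors and the salience values are all made up for this example, and nobody is claiming the brain literally works this way.

```python
import math

def recall(memories, query):
    """Return the label of the stored example that best matches the query.

    Each memory is (features, label, salience). The distance to the query
    is divided by salience, so a vivid memory can win a close call.
    All names and numbers here are hypothetical, for illustration only.
    """
    def score(memory):
        features, _label, salience = memory
        return math.dist(features, query) / salience

    return min(memories, key=score)[1]

# Two remembered examples: one frightening, one unremarkable.
memories = [
    ((0.9, 0.1), "snake", 5.0),  # a single scary encounter, heavily weighted
    ((0.6, 0.4), "stick", 1.0),  # an ordinary, low-salience memory
]

# Same memories with the emotional weighting removed.
flat_memories = [(features, label, 1.0) for features, label, _ in memories]

query = (0.7, 0.3)  # an ambiguous shape in the grass
weighted_guess = recall(memories, query)
unweighted_guess = recall(flat_memories, query)
print(weighted_guess, unweighted_guess)
```

With only two examples to go on, the weighted memory errs toward “snake” while the unweighted one says “stick.” That is the shortcut doing its job: a cheap first guess that leans toward whatever mattered most.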

A great example of a good bias is that you’re wired to spot fast movement. Why? Because something moving fast could kill you, like a snake. In fact, snakes were so deadly to our ancestors that we evolved specific heuristics to spot them even when they’re utterly still and camouflaged. Humans can spot snakes faster than anything else in any setting or image. We can do this even though we completely miss other things that remain motionless in complex backgrounds. That’s an example of the brain evolving to deal with what’s most important in our world. For us that is surviving and reproducing. For computers it will be different but we should train computers to have cognitive biases, not avoid them. How else will a self-driving car make it in the world if it can’t spot something coming at it too fast? That’s a threat to the car and everyone in it so it better pay attention and take action.
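A machine version of “spot the fast-moving thing first” could be as simple as ranking objects by how soon they would reach you. The sketch below is a hypothetical illustration, not how any real self-driving stack works: the function names, the two-second threshold and the example objects are all my own assumptions.

```python
def time_to_contact(distance_m, closing_speed_mps):
    """Seconds until contact; infinite if the object is not approaching."""
    if closing_speed_mps <= 0:
        return float("inf")
    return distance_m / closing_speed_mps

def most_urgent(objects, threshold_s=2.0):
    """Return names of objects that would reach us within threshold_s,
    soonest first. Each object is (name, distance_m, closing_speed_mps).
    The threshold is an arbitrary value chosen for this example.
    """
    timed = [(time_to_contact(d, v), name) for name, d, v in objects]
    return [name for t, name in sorted(timed) if t <= threshold_s]

# Hypothetical scene: only one thing is coming at us fast.
objects = [
    ("parked car", 5.0, 0.0),        # close, but not moving toward us
    ("cyclist", 30.0, 5.0),          # about 6 seconds away
    ("swerving truck", 20.0, 15.0),  # about 1.3 seconds away
]

urgent = most_urgent(objects)
print(urgent)
```

Everything that isn’t closing in quickly gets ignored, which is exactly the kind of filtering a “bias” toward fast movement buys you.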

But hold on, you’re thinking. Without a doubt some cognitive biases are bad. Take stereotyping for example. Certainly we can agree that stereotyping entire groups of people is inherently wrong and dangerous? It’s led to some of the worst abuses in history.

But can stereotypes be useful? Stereotyping at its most basic level is nothing more than looking for patterns in groups. Are there characteristics that many folks in a group share? Certainly. It’s safe to say that some stereotypes are pretty damn accurate. For example, there are more liberals drawn to the arts than conservatives. Or another example is that conservatives tend to favor established traditions over new ideas. A stereotype can certainly be helpful at a dinner party. If I correctly guess that the group of folks at dinner are conservative leaning, I might best avoid particular hot button subjects like climate change if I’m progressive and my goal is to have a nice dinner without a big debate and yelling and hard feelings.

On the other hand, there are countless examples of stereotypes leading to terrible results. If a judge assumes a black man is more likely to become a repeat criminal based on his bias that blacks are more likely to commit crimes, he might hand out a harsher sentence. On the flip side, if a police officer assumes a white man in a suit doesn’t have a gun because he doesn’t fit his stereotype of a criminal, it could get him killed. At its worst, stereotyping stands as the major justification for the worst of human horrors like genocide.

That leads to a serious question. How does a cognitive shortcut become a cognitive bias? What’s the difference? How is it useful in some cases and a total disaster in others? The answer is buried in the Wikipedia entry on stereotyping:

“[Stereotyping leads to an] unwillingness to rethink one’s attitudes and behavior towards stereotyped groups.”

Ah! It’s when we don’t bother to consider new information. We might make a judgement about people at first then when we get new information that should alter our understanding we ignore it instead of embracing it. We stick to the original conclusion at all costs. That is the REAL cognitive bias! It’s when we stop learning.

In other words laziness.

To my mind laziness is the only actual flaw in human thinking. It’s the refusal to go further, to push ourselves, to reexamine our models of the world. It goes back to our need to conserve mental energy. That’s useful up to a point but when taken to an extreme it makes us short sighted, rigid and inflexible. In short, it makes us biased. Even worse, we believe we’re absolutely clear, right and justified, which traps us in a loop of wrong and dangerous thinking that reinforces our weaknesses and not our strengths.

Cognitive shortcuts give us the first answers. But we should adapt and change with new information. Humans often don’t do that so well. The good news for AI researchers is that computers won’t have that problem. They’re not worried about conserving all their energy to dodge a snake bite. We can develop algorithms that mimic cognitive shortcuts to make machines better “one shot” learners but computers won’t suffer from laziness. Computers will become continual learners, adjusting their understanding over time, as new information becomes available.
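One simple way to picture the difference between a shortcut and a bias is a belief that starts from a prior and then either updates on evidence or refuses to. The sketch below uses a standard Beta-Bernoulli update (add what you observe to your starting pseudo-counts). The numbers and function names are assumptions of mine, picked only to illustrate the point.

```python
def update(prior_yes, prior_no, observations):
    """Beta-Bernoulli update: add observed counts to the prior pseudo-counts."""
    yes = prior_yes + sum(1 for seen in observations if seen)
    no = prior_no + sum(1 for seen in observations if not seen)
    return yes, no

def belief(yes, no):
    """Estimated probability that the next observation is a 'yes'."""
    return yes / (yes + no)

# The shortcut: a first impression worth 3 "yes" to 1 "no" (hypothetical).
prior = (3, 1)

# New information arrives that mostly contradicts the first impression.
evidence = [False] * 8 + [True] * 2

lazy_belief = belief(*prior)                        # ignores the evidence
learner_belief = belief(*update(*prior, evidence))  # folds the evidence in

print(round(lazy_belief, 2), round(learner_belief, 2))
```

Both thinkers start from the same shortcut. The lazy one stays at 0.75 no matter what it sees, while the continual learner drops to roughly 0.36 after ten observations. The prior was never the problem. Refusing to update it was.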

My challenge to AI researchers is to rethink your ideas about cognitive biases. Start by calling them shortcuts instead. Free your mind first and then go back and look at the old research papers. I’m positive I’m not the only one to consider it from this angle. With a quick search, I did find a few papers where people explored going with biases instead of trying to mitigate them. Of course most of the papers out there are called “overcoming cognitive biases” or something similar, but that’s to be expected. I encourage you to look deeper. Go past the first few Google pages. Many ideas in AI are coming back to us from the past because we have the horsepower and the datasets to actually make them work. I have no doubt that some hidden gems are still buried in the papers of the 1960s through the 1990s. To find them we just have to overcome that one human flaw and push ourselves a little further.

If we have the courage to keep learning and adjusting our understanding there’s no bias we can’t overcome.

A bit about me: I’m an author, engineer and serial entrepreneur. During the last two decades, I’ve covered a broad range of tech from Linux to virtualization and containers. You can check out my latest novel, an epic Chinese sci-fi civil war saga where China throws off the chains of communism and becomes the world’s first direct democracy, running a highly advanced, artificially intelligent decentralized app platform with no leaders. You can also check out the Cicada open source project based on ideas from the book that outlines how to make that tech a reality right now and you can get in on the beta.

Hacker Noon is how hackers start their afternoons. We’re a part of the @AMI family. We are now accepting submissions and happy to discuss advertising & sponsorship opportunities.