Agents, Agents, Everywhere, All At Once

AI Agents Are About to Change the Way We Work, Play and Learn, and Almost Nobody Sees Them Coming

(Parts of this article are condensed versions of passages I wrote for a major report on Agents, LLMs and the Smart App Revolution coming out in late September from the AI Infrastructure Alliance)

It’s 2033 and you're a video game development company with 150 employees and a fleet of incredibly flexible AI Agents that do tremendous heavy lifting for you every day.

Even with this small team, you've put out three major AAA games over seven years on the AR net that dominates modern gaming. A decade ago it would have taken 5,000 people and almost a billion dollars to make a top-tier game. Now it costs $50M-$100M, and much of that goes to marketing the hell out of it to make it go viral.

The difference is a ubiquitous layer of AI agents everywhere who can do incredibly complex jobs that were sci-fi not ten years earlier. They do marketing, research, localization, coding and bug fixing, web design, compliance and video production and so much more.

But that hasn't meant fewer games or fewer jobs. A flurry of smaller, specialized satellite game studios have risen up to challenge the big game houses of the old guard. They're staffed with puppet masters of AI swarms that augment them and make everything they do faster and easier. A team of just 10 people even managed to put out a mega-hit last year that took the gaming world by storm.

It's a golden age for AR, VR and classic 2D video games running on massive GPU and AI ASIC farms in the cloud and streaming to lightweight consoles that no longer need much processing power. Not long ago, people were screaming about the tremendous energy footprint of AI, but it has become one of the most energy-efficient industries on Earth, with sprawling datacenters fed by geothermal, wind, water and solar power. Some of those datacenters have even moved to space, taking advantage of the low temperatures to cool banks of AI chips. Musk's SpaceX and Bezos's Blue Origin are racing to get the most AI chips into orbit, along with satellites that beam concentrated solar energy down from the void to power datacenters back here on Earth.

Breakthroughs in analog and semi-synthetic neurochips have made the chips 100X more efficient, edging ever closer to the gold standard of power efficiency, the human brain, while also speeding up tremendously and enabling a massive explosion of intelligence in every industry on Earth. AI chips now boast over 2.5 trillion transistors on standard-sized chips and 260 trillion on wafer-scale chips, well beyond the capacity of the human brain. And recent quantum chip breakthroughs are poised to leave those numbers in the dust and accelerate intelligence to a fantastic new scale.

The descendants of LLMs have stormed into every aspect of software, at every level.

Call them LATMs, Large Adaptable Thinking Models.

They're general purpose, adaptable, long and short term reasoning and logic engines, with state, massive memory, grounding and hooks into millions of outside programs and models that they can use to get just about any complex task done right.

They're adaptable because they've incorporated new algorithms that are closer to the universal learning capabilities of the human mind. If someone lived in the forest their whole life and you uprooted them and put them in a city, they might be overwhelmed, but they'd still figure out how to get around. The same wasn't true for older AI models like LLMs. If you changed the rules on them overnight, they broke down. The new models can learn on the fly and update their neural nets, because their weights are no longer fixed and frozen.

These new models can keep learning from real-world experience, download new insights and weave them into their neural networks with averaging and weight merging, and they can abstract and adapt on the fly.

They're a million times more powerful than the old LLMs that had Luddites and Singularians alike screaming about AGI and the end of the world. The end of the world never came and the paranoid moved on to other worries that would surely mean the end of the world this time.

These powerful mini-brains orchestrate the workflow of tools and other models at every stage of game development, like a puppet master.

Your marketing team fed the marketing agent swarm a list of 5,000 potential partners for your upcoming game release, and the system went out and read all their websites and researched them in depth, going through their GitHub repos, reading any articles it could find about them, watching news videos about them and more.

It would have taken months to do all that and it was done in a few days.

The agent wrote up an analysis of the partners, summarized them and highlighted the top ones to reach out to first. Then it crafted some potential outreach messages via chat, email, LinkedIn and the new AR platform everyone’s using now. The letters are super personalized, based on everything it learned about the best candidates.

Your marketing team reviews them, makes some corrections, adds some of their own flair and then gets to work on connecting with the partners.

Another team from support just created a fantastic series of educational video tutorials for the community on your best-selling game. Overnight, your LATM-driven evangelism agent extracted all the key points from the videos and wrote fantastic blog tutorials about them. Then it localized all the videos into 100 languages and recorded the voiceovers with subtlety and nuance in the voice of the original actor.

It then digitally edited the videos to match the lip sync so it looks like the speaker is actually speaking the language natively.

Your digital content team will have to check all the videos, clean up some imperfect lip sync and fix a few words and sentences that didn’t translate with the right flavor and flair, but it’s saved your team thousands of hours of work, and you have content that is ready to go worldwide.

Overnight, the forums for one of your most popular games filled up with complaints about a new game-breaking bug that stops players from progressing.

The code agent swarm goes through all the logs, reads all the complaints from different users and groups them by similarity. Then it reads the code and traces the problem to an update pushed last week. It fixes the code, writes unit and regression tests, runs them and pushes the fix to the GitHub repo for approval.

It also writes a message to the users that it’s discovered the root cause of the issue and written a fix that will go live later that day. That calms down chatter in the forums and people take a deep breath.

A supervising programmer checks the code that morning and approves it for a push late that day.

But how did we get here?

Let's roll back to today's LLMs and look at how they’ll evolve into LATMs.

LLM to LATM to Agents to Her

It all started as engineers across the world worked hard to solve the limitations and weaknesses of LLMs in the real world.

LLMs are fantastic but they have well known weaknesses.

No real grounding or way to establish truth or beliefs

New kinds of security attacks

Hallucinations

Making up information

The list goes on and on.

We can think of all of these as bugs the same way we think of traditional software bugs.

When technology comes out of a lab and regular engineers get their hands on it, they can tweak it and find solutions to mitigate weaknesses. That's how it's always worked and that's how it will work this time. Early refrigerators blew up, and engineers figured out how to use different gases that didn't combust when exposed to air, as well as how to manufacture the components so they were more airtight.

You can't fix that problem before it happens. Problems can only be fixed in the real world.

Anyone can imagine a million problems before they exist, but it takes talented engineers to fix real-world problems as they play out. That's how technology has always evolved and that's how it will evolve this time too. It's just the basic, fundamental nature of reality.

The general public and lay people focus WAY too much on these bugs. Engineers and researchers will solve them, and they are already solving them now. And, of course, with the billions and billions and billions of dollars pouring into AI foundation models right now, you can expect new architectures, not to mention continual learning breakthroughs and ways to keep adding new skills to old models without catastrophic forgetting. I wrote a lot about this in the Coming Age of Generalized AI, so I won't rehash too much of it here. Continual learning is the key: neural networks that can learn and adapt on the fly, adjusting some of their weights and freezing others as they trial-and-error their way through new problems.

We will have better, smarter, more adaptable digital minds and researchers are already hard at work on it, and programmers are using outside tools to ground today's models with things like knowledge retrieval from trusted sources, guardrails and formatting answers and more.

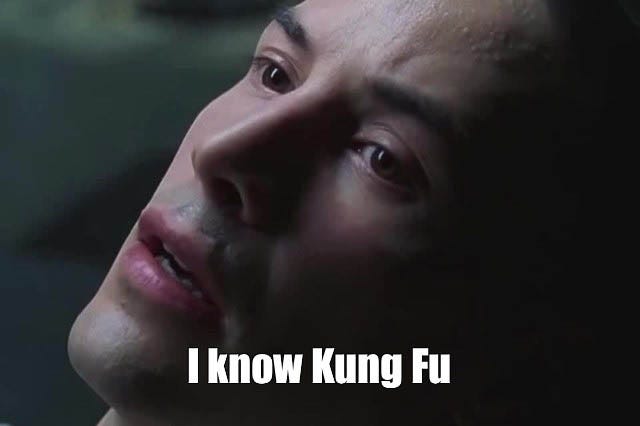

The key will be letting these digital minds learn and download new concepts over time, like Neo learning Kung Fu.

One approach is to have a smaller, faster-learning neural net for new tasks and a massive neural net with everything it's learned in the past, aka progress and compress. The new net learns a new task, then we prune the weights of the new task to simplify it, partially freeze the weights of the old neural net and average the new weights into the big neural net. That would also give us machines we could upgrade with skill packs, bug fixes and new tasks. Expect more ideas like this to come fast and furious over the next few years, while programmers and engineers use traditional methods to make systems more stable, scalable and robust.
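To make the prune-freeze-average idea concrete, here is a toy sketch in plain Python. It is a simplified illustration of the steps described above, not the published Progress & Compress algorithm or any lab's implementation: weights are flat lists, and the function names, threshold and blend factor are hypothetical choices for the example.

```python
from typing import List


def prune(delta: List[float], threshold: float) -> List[float]:
    """Zero out task-specific weight changes smaller than `threshold` (magnitude pruning)."""
    return [d if abs(d) >= threshold else 0.0 for d in delta]


def merge(
    base: List[float],
    task: List[float],
    frozen: List[bool],
    alpha: float = 0.5,
    threshold: float = 0.05,
) -> List[float]:
    """Fold a small task network's weights into a big base network.

    base, task: flat weight vectors of equal length (a toy stand-in for real layers)
    frozen:     True where the base weight must stay untouched
    alpha:      blend factor; b + alpha * (t - b) == (1 - alpha) * b + alpha * t,
                i.e. a weighted average of old and new weights
    threshold:  deltas below this magnitude are pruned before merging
    """
    if not (len(base) == len(task) == len(frozen)):
        raise ValueError("weight vectors must have the same length")
    delta = prune([t - b for b, t in zip(base, task)], threshold)
    return [b if f else b + alpha * d for b, d, f in zip(base, delta, frozen)]


# The tiny first change is pruned, the second is averaged in,
# and the third weight is frozen, so the base keeps its old value.
print(merge([1.0, 2.0, 3.0], [1.02, 3.0, 5.0], [False, False, True]))
# → [1.0, 2.5, 3.0]
```

Real systems would do this per layer on tensors and choose which weights to freeze from data (for example, by how important they were to earlier tasks) rather than by hand.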

Engineers will mitigate most of these problems with new training and architectures, middleware, knowledge databases, security overlays and new approaches to keeping these powerful models on the rails. Count on it. Engineers will make them more reliable and production-ready over the next few years.

It’s already happening. I talk to these companies and engineers all the time.

But this raises the question: why are some people so pathologically focused on the flaws of LLMs that they miss their potential? Because of science fiction, a culture that increasingly sees risks instead of rewards, and general misunderstandings about what AI can and can’t do. It’s also a misunderstanding of how fast technology progresses and what current AI is capable of now.

We went straight from LLMs to the movie Her in people’s minds. That’s way too fast. It’s as if in just two years we’ll all be talking to superhuman AI that understands us perfectly and then goes off and creates a rock-solid marketing plan, writes the copy, writes our emails to stakeholders, makes the ads and runs the campaign, all without any human intervention.

Don’t believe it.

We missed a step along the way.

(Source: Spike Jonze's Her)

We missed the human in the loop step. Co-planning with AI and agents. Working with them and them working with us. Programmers layering on traditional logic to mitigate the flaws of LLMs and boost their tremendous potential.

It might be disappointing to some to realize we’re not at AGI and sci-fi level AI but where we are now is actually very cool and very powerful.

We have incredible new possibilities that were impossible only a year ago. LLMs, woven into Agents, can go out and read text, interact with APIs, write software, fix bugs, do planning and basic reasoning and more.

We’re about to see an explosion of apps built with these new capabilities in mind that save people lots of time and money. People just need to embrace the Tao of realistic expectations.

If developers ground themselves in today's reality, with realistic expectations, they can build some amazing things that they simply couldn’t build before and that is amazing.

The key is that human in the loop step.

Long range planning and reasoning is still a work in progress with AI. It will get better.

But for now remember the old Google maxim: Let humans do what they do well and computers do what they do well.

Computers do scale, simple reasoning, brute force counting, speed, while humans give meaning to information, abstract ideas and complex reasoning.

But we don't need tomorrow's adaptable minds to build agents today. LLMs are already the brains of today's most complex Agents. They use tools to do tasks and make decisions in a semi-autonomous or fully autonomous way. They are the only truly general-purpose models, capable of a wide range of tasks, from question answering to summarizing, text and multimedia generation, logic, reasoning and more.

Agents, Next-Gen Stacks and AI Driven Apps Get Built Now

The next-gen stack to build these kinds of agents is already coming into focus. But just to be clear, these kinds of stacks take time to evolve. Programmers need to figure out the best design patterns and abstractions. They change over time as people learn and innovate and share information. It's a slow process. It doesn't happen overnight. Frameworks come and go and get outmoded.

But here's what that stack is looking like now:

LLMs

Task Specific Models

Frameworks/Libraries/Abstractions

External Knowledge Repositories

Databases (Vector DBs, NoSQL Databases, Traditional DBs)

Front Ends (APIs, UI/UX)

I'm also seeing the rise of a secondary set of components that will likely become more important over time.

Model Hosting

Fine Tuning Platforms

Monitoring and Management

Middleware

Security

Deployment

Let's look at the major components for now so we understand why these tools are evolving so quickly.

The first is code itself. It could be traditional, handwritten code by programmers, code generated by the LLM on the fly, code generated collaboratively by the programmer and the model, or any combination of the above.

The second major component is frameworks like LangChain, LlamaIndex, Haystack or Semantic Kernel that abstract common tasks like fetching and loading data, chunking the data into smaller pieces so it fits the LLM's context window, fetching URLs, reading text, receiving prompts from the user and more. Most of these are Python libraries that will evolve over time to be more comprehensive in their capabilities and become true frameworks, meaning a more comprehensive set of libraries and tooling. These frameworks do the heavy manual lifting of an agent: fetching and streaming data back and forth, taking in and outputting prompts and answers and the like.
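Chunking is one of the simplest of those abstracted tasks, and a minimal version shows why frameworks bother with it. The sketch below splits text into overlapping windows; it counts words as a rough, hypothetical proxy for tokens, and the function name and default sizes are illustrative assumptions, not any framework's API.

```python
def chunk_words(text: str, max_words: int = 200, overlap: int = 20) -> list[str]:
    """Split text into overlapping chunks of at most `max_words` words.

    Words are a rough proxy for tokens; a real pipeline would count tokens
    with the target model's own tokenizer.
    """
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("need max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


print(chunk_words("a b c d e f g h i j", max_words=4, overlap=1))
# → ['a b c d', 'd e f g', 'g h i j']
```

The overlap keeps a sentence that straddles a boundary visible in both neighboring chunks, at the cost of storing and embedding a little duplicate text.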

Most developers and teams I talk to are using their own custom frameworks though. That tells me that none of these current frameworks have hit upon the right abstractions and secret sauce to make it the default way of doing business.

Most advanced developers still prefer to write their own abstractions or libraries at this point. You can expect that to change over time as AI applications become more ubiquitous and we get better abstractions in many different libraries. Today it would be almost absurd to write your own Python web scraping library when you can use Scrapy. You don't write your own scientific and numerical calculator instead of using NumPy unless you have a damn good reason or you suffer from not-invented-here syndrome. It's incredibly rare for anyone not to use well written and performant libraries to save time and to avoid reinventing the wheel. I expect the same to prove true with agent frameworks.

The third major component is task-specific models. These are models that do one thing and do it well. Gorilla was fine-tuned specifically on API calls, so it interacts with APIs incredibly well. Stable Diffusion is a well-known open source image generator. Whisper delivers automatic speech recognition. WizardCoder excels at code interpretation and development.

The fourth major component is external knowledge repositories like Wolfram Alpha, which uses symbolic logic and various algorithms to give clear, structured answers to specific kinds of questions, like doing math or giving the correct population of Papua New Guinea. LLMs are notorious for making up information.

They also don't have the ability to say "I don't know" or "I don't feel confident" so they just make up something that sounds plausible but that might be total nonsense. External knowledge repositories ground the model in the real world, giving them facts and data they can pull from to give clear, crisp, precise answers. It's a marriage of symbolic logic and neural nets in an unexpected way.
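A minimal sketch of that grounding pattern: consult a trusted source first, and answer "I don't know" when it has nothing, instead of letting the model guess. The fact store and the `phrase` callable (standing in for an LLM call that words the answer) are hypothetical; in practice the lookup might hit Wolfram Alpha or a curated internal database.

```python
from typing import Callable, Dict, Optional

# Hypothetical trusted store. In a real agent this lookup might be a call to an
# external knowledge service or a curated internal database.
TRUSTED_FACTS: Dict[str, str] = {
    "boiling point of water at sea level": "100 °C",
}


def lookup(question: str, facts: Dict[str, str]) -> Optional[str]:
    """Exact-match lookup after normalizing case and surrounding whitespace."""
    return facts.get(question.strip().lower())


def grounded_answer(
    question: str,
    facts: Dict[str, str],
    phrase: Callable[[str, str], str],
) -> str:
    """Answer only from the trusted store; say "I don't know" instead of guessing.

    `phrase` stands in for an LLM call that turns the retrieved fact into prose.
    """
    fact = lookup(question, facts)
    if fact is None:
        return "I don't know."
    return phrase(question, fact)


def template(question: str, fact: str) -> str:
    """Trivial stand-in for the LLM's phrasing step."""
    return f"{question.strip()}: {fact}"


print(grounded_answer("Boiling point of water at sea level", TRUSTED_FACTS, template))
print(grounded_answer("Who will win the next World Cup?", TRUSTED_FACTS, template))
```

The design choice is that the model only rephrases facts it was handed; it never supplies the fact itself.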

The fifth major component is databases, including traditional SQL databases like Postgres, NoSQL databases like Redis, and vector databases like Pinecone. A program may use any or all three styles of database.

Vector databases are new for many organizations and developers. There is a flurry of them hitting the market, like Pinecone, ChromaDB, Weaviate, Activeloop, Qdrant, Milvus and Vectara. They store information as vectors (embeddings) and let developers use them as a kind of long-term memory for LLMs, because they can retrieve similar prompts and answers without needing exact matches.
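The "similar, not exact" retrieval at the heart of a vector database can be sketched in a few lines with cosine similarity. This is a brute-force toy under stated assumptions: the three-dimensional "embeddings" are made up by hand, whereas real ones come from an embedding model, and real vector databases use approximate nearest-neighbor indexes rather than scoring every stored vector.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(
    query: Sequence[float], store: Dict[str, Sequence[float]], k: int = 2
) -> List[Tuple[str, float]]:
    """Brute-force nearest neighbours: score every stored vector, keep the best k."""
    scored = [(key, cosine(query, vec)) for key, vec in store.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Hypothetical hand-made "embeddings" of past prompts.
memory = {
    "How do I reset my password?": [0.9, 0.1, 0.0],
    "What are your opening hours?": [0.0, 0.2, 0.9],
    "I forgot my login details": [0.8, 0.3, 0.1],
}
for text, score in top_k([1.0, 0.2, 0.0], memory):
    print(f"{score:.2f}  {text}")
```

Both password-related prompts come back even though neither shares exact wording with the other, which is what makes this useful as long-term memory.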

NoSQL databases excel at large-scale management of documents that would be hard or impossible to store in traditional databases. That lets developers load up huge unstructured repositories of documents like legal archives or web articles.

Lastly, we have the old workhorses of the database world, row-and-column relational databases like Postgres that store simpler information that might be extracted during the application's work.

Time will tell whether we need or want specialized vector databases or a single distributed, scalable platform that combines these functions, like Postgres with the pgvector extension. A lot of developers I talk to are just going the pgvector route at the moment. Why manage two DBs when you can manage one?

The rest of the stack will evolve over time as developers figure out how to abstract away problems and as we learn the shortcomings of these systems. They’ll build in better security, mitigations against failure and more.

The Two Kinds of AI Driven Apps Right Now

Many agent and AI driven applications have gotten a reputation that they’re nothing but a “wrapper” on top of GPT-4. That kind of dismissive talk usually comes from folks who've never tried to develop an AI driven application and dealt with the promises and pitfalls that it brings. Developing an agent or AI driven app is much more challenging than simply writing a prompt and hoping that it works over the long haul.

While the wrapper dig might be true for embedding GPT-4 into a website to answer questions, more advanced applications are much more complex. Even a seemingly simple application like using an LLM to generate recipes on a website might involve knowledge retrieval from a database of recipes, blacklists of terms that might produce dangerous or poisonous outputs, some action planning and more.
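Even the blocklist piece of that recipe example is real code that has to live around the model. A minimal sketch, assuming a hypothetical list of blocked ingredient terms and matching whole single words only (so it would miss multi-word phrases and inflected forms without extra work):

```python
import re

# Hypothetical blocklist for a recipe app; a production list would be curated,
# reviewed and paired with other checks.
BLOCKED_TERMS = frozenset({"bleach", "ammonia", "antifreeze"})
REFUSAL = "Sorry, I can't suggest a recipe with that."


def violations(text: str, blocked: frozenset = BLOCKED_TERMS) -> set:
    """Return the blocked single-word terms that appear in `text` (case-insensitive)."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return words & blocked


def guard(generated_recipe: str) -> str:
    """Pass the model's output through, or refuse if it mentions a blocked term."""
    return REFUSAL if violations(generated_recipe) else generated_recipe


print(guard("Toast the bread, then add butter and honey."))
print(guard("Mix vinegar with a splash of bleach."))
```

The check runs on the model's output, after generation, so it catches bad suggestions no matter how the prompt was phrased.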

There are broadly two kinds of AI driven applications:

Ones that don’t need an LLM at all or use an LLM in a more straightforward way, such as summarization

Ones that need the planning, reasoning and orchestration of LLMs

The ones that don’t need LLMs are usually pipelines or workflows of code and models that accomplish a task (though the workflow is often hidden from the user):

Generate 10 images of X -> test them for flaws -> discard the ones that have a flaw, like mangled hands -> generate new ones and test until you have 10 good images -> upscale them with a GAN
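That workflow is mostly ordinary control flow around the models. Here is a minimal sketch in which `generate`, `is_flawed` and `upscale` are hypothetical callables standing in for the image model, the flaw detector and the GAN upscaler; the attempt cap is an added assumption so the loop can't spin forever on a prompt the model can't handle.

```python
from typing import Callable, List, TypeVar

Image = TypeVar("Image")


def generate_until(
    n: int,
    generate: Callable[[], Image],
    is_flawed: Callable[[Image], bool],
    upscale: Callable[[Image], Image],
    max_attempts: int = 100,
) -> List[Image]:
    """Generate candidates until `n` pass the flaw check, then upscale the keepers."""
    good: List[Image] = []
    attempts = 0
    while len(good) < n:
        if attempts >= max_attempts:
            raise RuntimeError(f"only {len(good)} of {n} good images after {attempts} attempts")
        attempts += 1
        candidate = generate()
        if not is_flawed(candidate):
            good.append(candidate)
    return [upscale(image) for image in good]


# Stubs: integers stand in for images, multiples of 3 play the "mangled hands" flaw,
# and multiplying by 10 plays the upscaler.
counter = iter(range(1000))
print(generate_until(3, lambda: next(counter), lambda x: x % 3 == 0, lambda x: x * 10))
# → [10, 20, 40]
```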

These non-LLM apps are usually more straightforward to design and have more deterministic properties. They are heavily code and workflow driven. As noted, these workflows may use an LLM in a more limited capacity, such as asking it to summarize text on a website, but that LLM will typically have no interaction with the user and it will not be required to make decisions or come up with a plan.

None of this is to say that these kinds of applications are easy to design or build. As noted, they are more deterministic, but they also include many elements of non-determinism with the models themselves.

A run of an image generator pipeline may produce 100 great images for more common concepts like “a bald eagle” but fail with more complex compositions or ideas it was never exposed to, such as “a centaur with a hat made of avocado.”

Agent style applications are more complex. They require reasoning and planning by the LLM and often allow the LLM great freedom in how to orchestrate other software or models to accomplish their task.

Most teams I talk to say they need more than GPT, Claude or Llama 2 to build apps. They need code and additional models.

What I'm seeing from developers is usually a mashup of several major lines of research and concepts. Most often I see a HuggingGPT approach combined with one of the various techniques researchers are developing to get better reasoning and planning out of LLMs, such as Reasoning via Planning (RAP), Tree of Thoughts, or creating a swarm of LLMs that each offer their opinion and then combining or averaging their outputs.
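The swarm idea can be sketched as a simple vote. The version below assumes answers are short strings, so it takes the most common normalized answer (averaging would suit numeric outputs instead); the "agents" are hypothetical stand-ins for separate LLM calls, and the agreement fraction is returned so a low-consensus result could be routed to a human.

```python
from collections import Counter
from typing import Callable, List, Tuple


def majority_vote(answers: List[str]) -> Tuple[str, float]:
    """Return the most common normalized answer and the fraction of agents that gave it."""
    if not answers:
        raise ValueError("need at least one answer")
    normalized = [a.strip().lower() for a in answers]
    winner, count = Counter(normalized).most_common(1)[0]
    return winner, count / len(normalized)


def ask_swarm(question: str, agents: List[Callable[[str], str]]) -> Tuple[str, float]:
    """Ask every agent the same question and combine the answers by voting."""
    return majority_vote([agent(question) for agent in agents])


# Hypothetical stand-ins for three independently prompted LLM calls.
agents = [lambda q: "Paris", lambda q: "paris ", lambda q: "Lyon"]
answer, agreement = ask_swarm("What is the capital of France?", agents)
print(answer, round(agreement, 2))
# → paris 0.67
```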

The HuggingGPT paper is maybe my favorite paper in all of AI. It's the way I see most agents developing over the next few years.

The HuggingGPT approach uses Hugging Face’s vast collection of model descriptions as a way for LLMs to figure out what each model can do. Those text descriptions were enough for GPT to figure out which task-specific model it could call to do a particular task, such as “please generate an image where a girl is reading a book, and her pose is the same as in example.jpg, then please describe the image with your voice.”

(Source: HuggingGPT paper)

In this case the agent does the complex reasoning that would otherwise be hard coded in a more consistent and limited workflow design pattern. Hard-coded workflows are easier when you generally want the same consistent output, such as “correct facial imperfections” or “blur the background,” like Lensa does. But if the application allows for more open-ended workflows, it's nearly impossible to hard code them. In the above example, the user asks the system to:

Use an example image that has a pose they want to mimic

Generate an image of a girl in that pose reading a book

Describe what is in the resulting image

Voice that description with text to speech

This ultimately involves several different models. We can see from the graphic that it uses an OpenPose model to extract a skeleton-like outline of the pose from the existing image, then a ControlNet model (which injects that pose into the diffusion process along with the user's text description to get better outputs), an object detection model called detr-resnet-101, a vision transformer image classifier, a captioning model called vit-gpt2-image-captioning to generate the text caption, and a text-to-speech model called fastspeech2-en-ljspeech to give voice to the text.

Essentially this involves four core steps:

Task planning: the LLM parses the user request into a task list and determines the execution order

Model selection: LLM assigns appropriate models to tasks

Task execution: Expert models execute the tasks

Response generation: LLM integrates the inference results of experts and generates a summary of workflow logs to respond to the user
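The four stages can be sketched as plain functions. This is a toy illustration, not HuggingGPT's code: the registry, model names and `<output-N>` placeholder syntax are hypothetical, the planning and response steps are stubs where a real agent would call the LLM, model selection is a naive keyword match on descriptions standing in for the LLM's choice, and the chain is simplified to four models instead of the six in the paper's example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Task:
    id: int
    kind: str                                   # task type, e.g. "image-to-text"
    arg: str                                    # input; "<output-N>" refers to task N's result
    deps: List[int] = field(default_factory=list)


@dataclass
class Model:
    name: str
    description: str                            # the "model card" text the planner reads
    run: Callable[[str], str]


# Hypothetical registry with stub models that just tag their input.
MODELS = [
    Model("pose-model", "pose-detection: extracts a skeleton from a photo", lambda x: f"pose({x})"),
    Model("controlnet", "pose-to-image: draws a picture that follows a pose", lambda x: f"img({x})"),
    Model("captioner", "image-to-text: writes a caption for a picture", lambda x: f"caption({x})"),
    Model("tts", "text-to-speech: reads text aloud", lambda x: f"audio({x})"),
]


def plan(request: str) -> List[Task]:
    """Stage 1, task planning. Stubbed: a real agent would prompt the LLM to emit this list."""
    return [
        Task(0, "pose-detection", "example.jpg"),
        Task(1, "pose-to-image", "<output-0>", [0]),
        Task(2, "image-to-text", "<output-1>", [1]),
        Task(3, "text-to-speech", "<output-2>", [2]),
    ]


def select_model(task: Task, models: List[Model]) -> Model:
    """Stage 2, model selection by matching the task type against model descriptions."""
    for model in models:
        if task.kind in model.description:
            return model
    raise LookupError(f"no model can handle {task.kind!r}")


def execute(tasks: List[Task], models: List[Model]) -> Dict[int, str]:
    """Stage 3, task execution. Assumes tasks are listed so dependencies run first."""
    results: Dict[int, str] = {}
    for task in tasks:
        arg = task.arg
        for dep in task.deps:
            arg = arg.replace(f"<output-{dep}>", results[dep])
        results[task.id] = select_model(task, models).run(arg)
    return results


def respond(tasks: List[Task], results: Dict[int, str]) -> str:
    """Stage 4, response generation. Stubbed: a real agent would have the LLM summarize these logs."""
    return "\n".join(f"{t.kind}: {results[t.id]}" for t in tasks)


request = "Make an image of a girl reading a book in the pose from example.jpg, then describe it aloud."
tasks = plan(request)
print(respond(tasks, execute(tasks, MODELS)))
```

Swapping the stubs for real LLM and model calls is where most of the production difficulty lives: malformed plans, missing models and failed executions all need handling.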

As you might imagine, this is an incredibly complex pipeline where results may vary dramatically. It is also something that was literally impossible to do even a year ago, even if you had $50 billion and a small army of developers, because there was no software capable of this kind of automatic selection of tooling based on complex natural language instructions from a user.

Putting this kind of pipeline into production will require a suite of monitoring and management tools, logging, serving infrastructure, security layers and more. These kinds of applications will form the bedrock of new kinds of software apps in the coming years.
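One small piece of that production layer is wrapping every tool or model call with logging and retries. A minimal sketch using only the standard library; the decorator name, retry policy and the `call_captioning_model` tool are hypothetical, and a real deployment would also export metrics and traces rather than just log lines.

```python
import functools
import logging
import time
from typing import Any, Callable

log = logging.getLogger("agent.tools")


def monitored(retries: int = 2, backoff_s: float = 0.0) -> Callable:
    """Decorator that logs each tool call's outcome and latency and retries failures.

    retries:   extra attempts after the first failure
    backoff_s: base delay in seconds; attempt k waits backoff_s * k before retrying
    """
    if retries < 0:
        raise ValueError("retries must be >= 0")

    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            for attempt in range(1, retries + 2):
                start = time.perf_counter()
                try:
                    result = fn(*args, **kwargs)
                except Exception as exc:
                    log.warning("%s failed on attempt %d: %s", fn.__name__, attempt, exc)
                    if attempt > retries:
                        raise  # out of retries: surface the error to the caller
                    time.sleep(backoff_s * attempt)
                else:
                    log.info("%s ok on attempt %d in %.3fs",
                             fn.__name__, attempt, time.perf_counter() - start)
                    return result
        return wrapper
    return decorator


@monitored(retries=2)
def call_captioning_model(image_path: str) -> str:
    """Hypothetical tool call; swap in a real model client here."""
    return f"a caption for {image_path}"


logging.basicConfig(level=logging.INFO)
print(call_captioning_model("example.jpg"))
```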

Most teams I talk to are not taking this kind of totally open ended approach to letting the LLM pick its own models to accomplish its tasks (yet). Many teams are using a series of curated models in their pipelines to augment the capabilities of their agent and hence the workflow is broadly similar. Also keep in mind that using the HuggingGPT approach to picking models from a live Hub style platform would run into some major legal problems as many of these models are not licensed for commercial use or have restrictions on use cases, so choose wisely and read carefully.
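With a curated list, the licensing check can at least be automated as a first filter. The sketch assumes each model entry carries a Hugging Face-style license tag and that your own legal review has produced an allowlist; the allowlist contents, model names and catalog here are hypothetical, and a tag is no substitute for reading the license itself.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Assumption: an allowlist signed off by your own legal review.
COMMERCIAL_OK = frozenset({"apache-2.0", "mit", "bsd-3-clause"})


@dataclass(frozen=True)
class ModelCard:
    name: str
    task: str
    license: str


def usable_models(cards: Iterable[ModelCard], allowed: frozenset = COMMERCIAL_OK) -> List[ModelCard]:
    """Keep only curated models whose license tag is on the allowlist."""
    return [card for card in cards if card.license.strip().lower() in allowed]


# Hypothetical curated catalog.
catalog = [
    ModelCard("captioner-a", "image-to-text", "apache-2.0"),
    ModelCard("captioner-b", "image-to-text", "cc-by-nc-4.0"),  # non-commercial
    ModelCard("tts-a", "text-to-speech", "MIT"),
]
print([card.name for card in usable_models(catalog)])
# → ['captioner-a', 'tts-a']
```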

While I expect new approaches to present themselves over the coming years, I think the basic design pattern of these applications will remain much the same.

LLM + outside knowledge repos + DB + code + specialized models.

An FT article quoting Rob Thomas, head of software at IBM, put it this way: "this points to a paradox about the use of generative AI in business. The race is on to make general-purpose language models more useful, building ever-larger models and training them on larger mountains of data. But when it comes to dealing with specific tasks inside a company, according to Thomas, 'the narrower the model, the more accurate and the better [it is].'"

This is not a paradox.

LLMs and specialized models working together is the heart of Agents today and for the foreseeable future.

We might end up with super powerful LLMs or post-LLMs that are crazy multimodal, trained on text and video and images and code and everything else researchers at mega-companies can stuff into the model, with a little AlphaGo-style reinforcement learning to boot, aka Gemini's approach, but the much stronger possibility is that you will still need outside knowledge repositories, other expert models and other code to power those intelligent platforms.

Embrace Imperfection, Secret Agent Man

If you’re a developer, start building AI driven apps that solve real problems in the real world.

You can deliver new kinds of capabilities that were impossible to do only a year ago.

If you’re a business, try lots of applications. There are tons of them and not all of them are good. Many of them require you to test them to really understand if they can deliver consistently. It’s not as easy as comparing features and bullet points.

But there are good ones you can find right now!

Agents will be everywhere, interacting with us at every level of our lives, from research assistants, to marketing masterminds, to agents that help us react faster to the competition and to write code and fix problems faster and faster.

This is a good thing. While many folks feel we need to somehow magically solve the problems of AI in a lab by anticipating everything that can go wrong, it's just not possible. Problems are found as systems interact with the world. And they're fixed in the real world too.

We'll have smarter, more capable, more grounded and more factual models that are safer and more steerable, along with powerful frameworks to help us build better models.

But people have to remember that these systems will never be deterministic. Forget that. They are not going to magically do what we want every time, just like another non-deterministic thing we know well:

People.

We have to accept variability and imperfections. That’s the nature of intelligence and the nature of these new apps. It's both their Achilles' heel and their genius. Just like people. Humans get crazy ideas in their heads, do idiotic things, don't understand things while imagining they do, and fall for a million other fallacies of reasoning and thinking. They make mistakes, do things wrong, waste time, go off the rails. These systems are no different. That is what non-deterministic systems are. They're engines for dealing with uncertainty in an uncertain world.

If you are out there building agents, don’t fight it.

Embrace imperfection.

So start building now, and the technology will continue to evolve with us as we enter into the age of ambient AI.
